
Lyapunov Equation

The Lyapunov equation, named after the Russian mathematician Aleksandr Lyapunov, is a matrix equation used in the stability analysis of linear dynamical systems. In particular, the discrete-time Lyapunov equation (also known as the Stein equation) for X is

A X A^H - X + Q = 0

where Q is a Hermitian matrix and A^H is the conjugate transpose of A, while the continuous-time Lyapunov equation is

A X + X A^H + Q = 0.

Application to stability. In the following theorems, A, P, Q \in \mathbb{R}^{n \times n}, and P and Q are symmetric. The notation P > 0 means that the matrix P is positive definite.

Theorem (continuous-time version). Given any Q > 0, there exists a unique P > 0 satisfying A^T P + P A + Q = 0 if and only if the linear system \dot{x} = A x is globally asymptotically stable. The quadratic function V(x) = x^T P x is a Lyapunov function that can be used to verify stability.

Theorem (discrete-time version). Given any Q > 0, there exists a unique P > 0 satisfying A^T P A - P + Q = 0 if and only if the linear ...
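For small real matrices, the continuous-time theorem can be checked directly by vectorizing the equation. Using vec(A^T P) = (I ⊗ A^T) vec(P) and vec(P A) = (A^T ⊗ I) vec(P), the equation A^T P + P A + Q = 0 (the document's A X + X A^H + Q = 0 with A replaced by A^T, for real matrices) becomes an ordinary n^2 × n^2 linear system. The sketch below assumes real inputs and small n; the helper names `solve_lyapunov_vec`, `_solve_linear` and `is_positive_definite` are hypothetical, and production code would use a Schur-based solver rather than this O(n^6) elimination.

```python
def _solve_linear(M, b):
    """Gaussian elimination with partial pivoting for a square system M x = b."""
    n = len(b)
    aug = [list(row) + [b[i]] for i, row in enumerate(M)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[piv][col]) < 1e-12:
            raise ValueError("singular system: no unique solution")
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= f * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = aug[r][n] - sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = s / aug[r][r]
    return x


def solve_lyapunov_vec(A, Q):
    """Solve A^T P + P A + Q = 0 for P by vectorization (real A, small n only)."""
    n = len(A)

    def idx(r, c):
        return c * n + r  # position of P[r][c] in the column-major vec(P)

    # K equals (I kron A^T + A^T kron I), built entry by entry.
    K = [[0.0] * (n * n) for _ in range(n * n)]
    rhs = [0.0] * (n * n)
    for i in range(n):
        for j in range(n):
            row = idx(i, j)
            rhs[row] = -Q[i][j]
            for k in range(n):
                K[row][idx(k, j)] += A[k][i]  # (A^T P)[i][j] = sum_k A[k][i] P[k][j]
                K[row][idx(i, k)] += A[k][j]  # (P A)[i][j]   = sum_k P[i][k] A[k][j]
    p = _solve_linear(K, rhs)
    return [[p[idx(r, c)] for c in range(n)] for r in range(n)]


def is_positive_definite(P):
    """Attempt a Cholesky factorization; it succeeds exactly when symmetric P > 0."""
    n = len(P)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = P[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    return False
                L[i][i] = s ** 0.5
            else:
                L[i][j] = s / L[j][j]
    return True


A = [[0.0, 1.0], [-1.0, -1.0]]   # illustrative stable system (assumed)
I = [[1.0, 0.0], [0.0, 1.0]]     # Q = I > 0
P = solve_lyapunov_vec(A, I)
print(P)                         # approximately [[1.5, 0.5], [0.5, 1.0]]
print(is_positive_definite(P))   # True, consistent with asymptotic stability
```

For an unstable matrix such as [[1, 0], [0, -2]], the same call returns a P with a negative diagonal entry, so the positive-definiteness check fails, as the theorem predicts.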

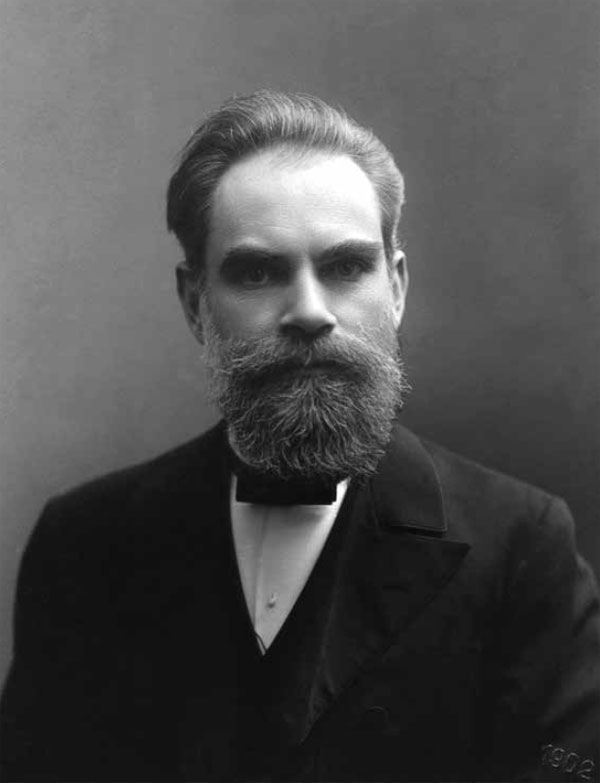

Aleksandr Lyapunov

Aleksandr Mikhailovich Lyapunov (Алекса́ндр Миха́йлович Ляпуно́в, – 3 November 1918) was a Russian mathematician, mechanician and physicist. He was the son of the astronomer Mikhail Lyapunov and the brother of the pianist and composer Sergei Lyapunov. Lyapunov is known for his development of the stability theory of a dynamical system, as well as for his many contributions to mathematical physics and probability theory.

Biography (early life). Lyapunov was born in Yaroslavl, Russian Empire. His father, Mikhail Vasilyevich Lyapunov (1820–1868), was an astronomer employed by the Demidov Lyceum. His brother, Sergei Lyapunov, was a gifted composer and pianist. In 1863, M. V. Lyapunov retired from his scientific career and relocated his family to his wife's estate at Bolobonov, in the Simbirsk province (now Ulyanovsk Oblast). After the death of his father in 1868, Aleksandr Lyapunov was educated by his uncle R. M. Sechenov, brother of the physio ...

Lyapunov Stability

Various types of stability may be discussed for the solutions of differential equations or difference equations describing dynamical systems. The most important type concerns the stability of solutions near a point of equilibrium, which may be discussed by the theory of Aleksandr Lyapunov. In simple terms, if the solutions that start out near an equilibrium point x_e stay near x_e forever, then x_e is Lyapunov stable. More strongly, if x_e is Lyapunov stable and all solutions that start out near x_e converge to x_e, then x_e is said to be asymptotically stable (see asymptotic analysis). The notion of exponential stability guarantees a minimal rate of decay, i.e., an estimate of how quickly the solutions converge. The idea of Lyapunov stability can be extended to infinite-dimensional manifolds, where it is known as structural stability, which concerns the behavior of different but "nearby" solutions to differential equations. Input-to-state stability (ISS ...
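For a linear system \dot{x} = A x with a real 2 × 2 matrix A, these notions can be read off from the eigenvalues, which are the roots of λ^2 - (tr A) λ + det A = 0. The sketch below (function name hypothetical, tolerance an assumption) classifies the equilibrium at the origin using only the trace and determinant; it covers the 2 × 2 case only and is not a general test.

```python
def classify_origin_2x2(A, tol=1e-12):
    """Classify the equilibrium x = 0 of x' = A x for a real 2x2 matrix A."""
    (a, b), (c, d) = A
    tr = a + d
    det = a * d - b * c
    if tr < -tol and det > tol:
        return "asymptotically stable"   # both eigenvalues have negative real part
    if abs(tr) <= tol and det > tol:
        return "Lyapunov stable"         # center: eigenvalues +/- i*sqrt(det)
    if tr < -tol and abs(det) <= tol:
        return "Lyapunov stable"         # distinct eigenvalues 0 and tr < 0
    if all(abs(v) <= tol for v in (a, b, c, d)):
        return "Lyapunov stable"         # A = 0: every point is an equilibrium
    return "unstable"                    # some eigenvalue has positive real part,
                                         # or a nonzero nilpotent A grows linearly


print(classify_origin_2x2([[-1.0, 0.0], [0.0, -2.0]]))  # asymptotically stable
print(classify_origin_2x2([[0.0, 1.0], [-1.0, 0.0]]))   # Lyapunov stable
print(classify_origin_2x2([[0.0, 1.0], [1.0, 0.0]]))    # unstable
```

The center case illustrates the gap between the two definitions: solutions circle the origin and stay near it, but do not converge to it.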

Linear Dynamical System

Linear dynamical systems are dynamical systems whose evolution functions are linear. While dynamical systems, in general, do not have closed-form solutions, linear dynamical systems can be solved exactly, and they have a rich set of mathematical properties. Linear systems can also be used to understand the qualitative behavior of general dynamical systems, by calculating the equilibrium points of the system and approximating it as a linear system around each such point.

Introduction. In a linear dynamical system, the variation of a state vector (an N-dimensional vector denoted \mathbf{x}) equals a constant matrix (denoted \mathbf{A}) multiplied by \mathbf{x}. This variation can take two forms: either as a flow, in which \mathbf{x} varies continuously with time,

\frac{d}{dt} \mathbf{x}(t) = \mathbf{A} \mathbf{x}(t),

or as a mapping, in which \mathbf{x} varies in discrete steps,

\mathbf{x}_{m+1} = \mathbf{A} \mathbf{x}_m.

These equations are linear in the following sense: if \mathbf{x}(t) and \mathbf{y}(t) are two ...
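The discrete mapping form can be iterated directly, and its linearity shows up as superposition: starting from a linear combination of two initial states gives the same combination of the two trajectories. In the sketch below the matrix [[1, 1], [1, 0]] (which generates Fibonacci numbers) is an illustrative assumption, and the helper names `step` and `trajectory` are hypothetical.

```python
def step(A, x):
    """One step of the discrete map x_{m+1} = A x_m."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def trajectory(A, x0, steps):
    """Return [x_0, x_1, ..., x_steps] for the map x_{m+1} = A x_m."""
    xs = [list(x0)]
    for _ in range(steps):
        xs.append(step(A, xs[-1]))
    return xs


A = [[1, 1], [1, 0]]                 # illustrative matrix (assumed)
x_traj = trajectory(A, [1, 0], 5)
y_traj = trajectory(A, [0, 1], 5)
z_traj = trajectory(A, [2, 3], 5)    # start at 2*x0 + 3*y0

# Superposition: the trajectory from 2*x0 + 3*y0 is 2*(x trajectory) + 3*(y trajectory).
assert all(z == [2 * a + 3 * b for a, b in zip(x, y)]
           for x, y, z in zip(x_traj, y_traj, z_traj))
print(x_traj[-1])  # [8, 5]
```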

Hermitian Matrix

In mathematics, a Hermitian matrix (or self-adjoint matrix) is a complex square matrix that is equal to its own conjugate transpose; that is, the element in the i-th row and j-th column is equal to the complex conjugate of the element in the j-th row and i-th column, for all indices i and j:

A \text{ Hermitian} \quad \iff \quad a_{ij} = \overline{a_{ji}}

or in matrix form:

A \text{ Hermitian} \quad \iff \quad A = \overline{A^\mathsf{T}}.

Hermitian matrices can be understood as the complex extension of real symmetric matrices. If the conjugate transpose of a matrix A is denoted by A^\mathsf{H}, then the Hermitian property can be written concisely as

A \text{ Hermitian} \quad \iff \quad A = A^\mathsf{H}.

Hermitian matrices are named after Charles Hermite, who demonstrated in 1855 that matrices of this form share a property with real symmetric matrices of always having real eigenvalues. Other, equivalent notations in common use are A^\mathsf{H} = A^\dagger = A^\ast, although in quantum mechanics, A^\ast typically means the complex conjugate onl ...
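The entrywise condition a_{ij} = \overline{a_{ji}} translates directly into a check, and for a 2 × 2 example the real-eigenvalue property can be observed by solving the characteristic quadratic. The helper names below are hypothetical, the tolerance is an assumption, and the 2 × 2 eigenvalue formula is for illustration only.

```python
import cmath


def is_hermitian(M, tol=1e-12):
    """True if the square matrix M equals its conjugate transpose (within tol)."""
    n = len(M)
    if any(len(row) != n for row in M):
        return False
    return all(abs(M[i][j] - M[j][i].conjugate()) <= tol
               for i in range(n) for j in range(n))


def eigenvalues_2x2(M):
    """Roots of lambda^2 - tr(M) lambda + det(M) = 0 for a 2x2 matrix M."""
    tr = M[0][0] + M[1][1]
    det = M[0][0] * M[1][1] - M[0][1] * M[1][0]
    disc = cmath.sqrt(tr * tr - 4 * det)
    return (tr + disc) / 2, (tr - disc) / 2


H = [[2, 1 - 1j], [1 + 1j, 3]]   # illustrative Hermitian matrix (assumed)
print(is_hermitian(H))           # True
print(eigenvalues_2x2(H))        # both eigenvalues have zero imaginary part (4 and 1)
```

Note that the check also forces the diagonal to be real, since a_{ii} = \overline{a_{ii}} only for real a_{ii}.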

Conjugate Transpose

In mathematics, the conjugate transpose, also known as the Hermitian transpose, of an m \times n complex matrix \mathbf{A} is an n \times m matrix obtained by transposing \mathbf{A} and applying complex conjugation to each entry (the complex conjugate of a + ib being a - ib, for real numbers a and b). There are several notations, such as \mathbf{A}^\mathrm{H} or \mathbf{A}^*, \mathbf{A}', or (often in physics) \mathbf{A}^{\dagger}. For real matrices, the conjugate transpose is just the transpose, \mathbf{A}^\mathrm{H} = \mathbf{A}^\operatorname{T}.

Definition. The conjugate transpose of an m \times n matrix \mathbf{A} is formally defined by

\left(\mathbf{A}^\mathrm{H}\right)_{ij} = \overline{\mathbf{A}_{ji}},

where the subscript ij denotes the (i, j)-th entry (matrix element), for 1 \le i \le n and 1 \le j \le m, and the overbar denotes a scalar complex conjugate. This definition can also be written as

\mathbf{A}^\mathrm{H} = \left(\overline{\mathbf{A}}\right)^\operatorname{T} = \overline{\mathbf{A}^\operatorname{T}},

where \mathbf{A}^\operatorname{T} denotes the transpose and \overline{\mathbf{A}} denotes the matrix with complex conjugated entries. Other na ...
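The formal definition (\mathbf{A}^\mathrm{H})_{ij} = \overline{\mathbf{A}_{ji}} maps onto a nested list comprehension. The sketch below uses zero-based indices (0 \le i < n, 0 \le j < m) instead of the one-based indices in the text; the function name is hypothetical.

```python
def conjugate_transpose(A):
    """Return A^H for an m x n matrix A given as a list of rows."""
    m, n = len(A), len(A[0])
    # (A^H)[i][j] = conj(A[j][i]); the result has n rows and m columns.
    return [[complex(A[j][i]).conjugate() for j in range(m)] for i in range(n)]


A = [[1 + 2j, 3, -1j],
     [4, 5 - 5j, 6j]]            # illustrative 2 x 3 matrix (assumed)
AH = conjugate_transpose(A)
print(len(AH), len(AH[0]))       # 3 2
print(AH)
```

Applying the function twice returns the original matrix, and for a real matrix it reduces to the ordinary transpose.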

Positive-definite Matrix

In mathematics, a symmetric matrix M with real entries is positive-definite if the real number \mathbf{x}^\mathsf{T} M \mathbf{x} is positive for every nonzero real column vector \mathbf{x}, where \mathbf{x}^\mathsf{T} is the row vector transpose of \mathbf{x}. More generally, a Hermitian matrix (that is, a complex matrix equal to its conjugate transpose) is positive-definite if the real number \mathbf{z}^* M \mathbf{z} is positive for every nonzero complex column vector \mathbf{z}, where \mathbf{z}^* denotes the conjugate transpose of \mathbf{z}. Positive semi-definite matrices are defined similarly, except that the scalars \mathbf{x}^\mathsf{T} M \mathbf{x} and \mathbf{z}^* M \mathbf{z} are required to be positive or zero (that is, nonnegative). Negative-definite and negative semi-definite matrices are defined analogously. A matrix that is not positive semi-definite and not negative semi-definite is sometimes called indefinite. Some authors use more general definitions of definiteness, permitting the matrices to be ...
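The definition quantifies over every nonzero vector, so it cannot be checked by sampling. A standard finite test for real symmetric matrices, swapped in here, is to attempt a Cholesky factorization M = L L^T, which succeeds with positive pivots exactly when M is positive definite. The sketch below (helper names hypothetical) pairs that test with the quadratic form from the definition, which can exhibit a witness vector when the test fails.

```python
def is_positive_definite(M, sym_tol=1e-12):
    """Cholesky-based test for a real symmetric matrix M."""
    n = len(M)
    if any(abs(M[i][j] - M[j][i]) > sym_tol for i in range(n) for j in range(n)):
        raise ValueError("expected a symmetric matrix")
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = M[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                if s <= 0:           # a non-positive pivot: M is not positive definite
                    return False
                L[i][i] = s ** 0.5
            else:
                L[i][j] = s / L[j][j]
    return True


def quadratic_form(M, x):
    """Compute x^T M x."""
    n = len(x)
    return sum(x[i] * M[i][j] * x[j] for i in range(n) for j in range(n))


print(is_positive_definite([[2, -1], [-1, 2]]))   # True
print(is_positive_definite([[1, 2], [2, 1]]))     # False (indefinite)
print(quadratic_form([[1, 2], [2, 1]], [1, -1]))  # -2, a witness that x^T M x <= 0
```

A positive semi-definite but singular matrix such as [[1, 1], [1, 1]] produces a zero pivot, so it is correctly reported as not positive definite.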

Lyapunov Function

In the theory of ordinary differential equations (ODEs), Lyapunov functions, named after Aleksandr Lyapunov, are scalar functions that may be used to prove the stability of an equilibrium of an ODE. Lyapunov functions (also called Lyapunov’s second method for stability) are important to stability theory of dynamical systems and control theory. A similar concept appears in the theory of general state-space Markov chains usually under the name Foster–Lyapunov functions. For certain classes of ODEs, the existence of Lyapunov functions is a necessary and sufficient condition for stability. Whereas there is no general technique for constructing Lyapunov functions for ODEs, in many specific cases the construction of Lyapunov functions is known. For instance, quadratic functions suffice for systems with one state, the solution of a particular linear matrix inequality provides Lyapunov functions for linear systems, and conservation laws can often be used to construct Lyapunov fun ...
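For a linear system \dot{x} = A x, a quadratic candidate V(x) = x^T P x has derivative along solutions \dot{V}(x) = 2 (P x)^T (A x) = x^T (A^T P + P A) x when P is symmetric. The sketch below assumes the illustrative pair A = [[0, 1], [-1, -1]] and P = [[1.5, 0.5], [0.5, 1]], which satisfies A^T P + P A = -I, so \dot{V}(x) = -x^T x. It evaluates V and \dot{V} at a few sample points; this illustrates the two Lyapunov conditions but does not by itself prove them (that follows from P > 0 and the matrix identity).

```python
# Illustrative data (assumed): a stable 2x2 system and a matching P.
A = [[0.0, 1.0], [-1.0, -1.0]]
P = [[1.5, 0.5], [0.5, 1.0]]   # satisfies A^T P + P A = -I


def mat_vec(M, x):
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def V(x):
    """Candidate Lyapunov function V(x) = x^T P x."""
    return dot(x, mat_vec(P, x))


def V_dot(x):
    """Derivative of V along x' = A x: 2 (P x) . (A x), valid for symmetric P."""
    return 2.0 * dot(mat_vec(P, x), mat_vec(A, x))


for x in ([1.0, 0.0], [0.0, 1.0], [1.0, 2.0], [-3.0, 0.5]):
    print(x, V(x), V_dot(x))   # V > 0, and V_dot equals -(x . x) < 0
```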

Bartels–Stewart Algorithm

In numerical linear algebra, the Bartels–Stewart algorithm is used to numerically solve the Sylvester matrix equation AX - XB = C. Developed by R.H. Bartels and G.W. Stewart in 1971, it was the first numerically stable method that could be systematically applied to solve such equations. The algorithm works by using the real Schur decompositions of A and B to transform AX - XB = C into a triangular system that can then be solved using forward or backward substitution. In 1979, G. Golub, C. Van Loan and S. Nash introduced an improved version of the algorithm, known as the Hessenberg–Schur algorithm. It remains a standard approach for solving Sylvester equations when X is of small to moderate size.

The algorithm. Let X, C \in \mathbb{R}^{m \times n}, so that A \in \mathbb{R}^{m \times m} and B \in \mathbb{R}^{n \times n}, and assume that the eigenvalues of A are distinct from the eigenvalues of B. Then the matrix equation AX - XB = C has a unique solution. The Bartels–Stewart algorithm computes X by applying the following steps:

1. Compute the real Sch ...
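The substitution stage can be illustrated in isolation. The sketch below assumes A and B are already upper triangular, as they would be after a complex Schur decomposition (the real Schur form used in the text can contain 2 × 2 diagonal blocks, which this sketch does not handle), and it omits the decomposition and back-transformation steps. Column k of AY - YB = C gives (A - b_{kk} I) y_k = c_k + \sum_{j<k} b_{jk} y_j, an upper-triangular system solved by back substitution; its diagonal A[i][i] - B[k][k] is nonzero because A and B share no eigenvalues. The function name is hypothetical.

```python
def solve_triangular_sylvester(A, B, C):
    """Solve A Y - Y B = C when A (m x m) and B (n x n) are upper triangular."""
    m, n = len(A), len(B)
    Y = [[0.0] * n for _ in range(m)]
    for k in range(n):
        # Right-hand side for column k: c_k + sum_{j<k} B[j][k] * y_j.
        rhs = [C[i][k] + sum(B[j][k] * Y[i][j] for j in range(k)) for i in range(m)]
        # Back substitution with the upper-triangular matrix A - B[k][k] * I.
        for i in range(m - 1, -1, -1):
            s = rhs[i] - sum(A[i][l] * Y[l][k] for l in range(i + 1, m))
            diag = A[i][i] - B[k][k]
            if diag == 0:
                raise ValueError("A and B share an eigenvalue; no unique solution")
            Y[i][k] = s / diag
    return Y


A = [[1.0, 2.0], [0.0, 3.0]]       # illustrative triangular data (assumed)
B = [[-1.0, 1.0], [0.0, -2.0]]
C = [[8.0, 13.0], [12.0, 17.0]]    # equals A X - X B for X = [[1, 2], [3, 4]]
print(solve_triangular_sylvester(A, B, C))  # [[1.0, 2.0], [3.0, 4.0]]
```

In a full implementation, Y would then be mapped back to X through the unitary (or orthogonal) Schur factors.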

Vectorization (mathematics)

In mathematics, especially in linear algebra and matrix theory, the vectorization of a matrix is a linear transformation which converts the matrix into a vector. Specifically, the vectorization of an m \times n matrix A, denoted vec(A), is the column vector obtained by stacking the columns of A on top of one another:

\operatorname{vec}(A) = [a_{1,1}, \ldots, a_{m,1}, a_{1,2}, \ldots, a_{m,2}, \ldots, a_{1,n}, \ldots, a_{m,n}]^\mathrm{T}

Here, a_{i,j} represents the element in the i-th row and j-th column of A, and the superscript ^\mathrm{T} denotes the transpose. Vectorization expresses, through coordinates, the isomorphism \mathbf{R}^{m \times n} := \mathbf{R}^m \otimes \mathbf{R}^n \cong \mathbf{R}^{mn} between these (i.e., of matrices and vectors) as vector spaces.

For example, for the 2×2 matrix A = \begin{bmatrix} a & b \\ c & d \end{bmatrix}, the vectorization is \operatorname{vec}(A) = \begin{bmatrix} a \\ c \\ b \\ d \end{bmatrix}. The connection between the vectorization of A and the vectorization of its transpose is given by the commutation matrix ...
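Column stacking is a column-major traversal, and the isomorphism with \mathbf{R}^{mn} means the operation can be undone once m and n are known. A minimal sketch with hypothetical helper names `vec` and `unvec`, using 0-based indices:

```python
def vec(A):
    """Stack the columns of an m x n matrix into a list of length m*n."""
    m, n = len(A), len(A[0])
    return [A[i][j] for j in range(n) for i in range(m)]


def unvec(v, m, n):
    """Inverse of vec: rebuild the m x n matrix from its stacked columns."""
    if len(v) != m * n:
        raise ValueError("length of v must be m*n")
    return [[v[j * m + i] for j in range(n)] for i in range(m)]


print(vec([[1, 2], [3, 4]]))          # [1, 3, 2, 4], matching a, c, b, d
print(vec([[1, 2, 3], [4, 5, 6]]))    # [1, 4, 2, 5, 3, 6]
```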

Kronecker Product

In mathematics, the Kronecker product, sometimes denoted by ⊗, is an operation on two matrices of arbitrary size resulting in a block matrix. It is a specialization of the tensor product (which is denoted by the same symbol) from vectors to matrices and gives the matrix of the tensor product linear map with respect to a standard choice of basis. The Kronecker product is to be distinguished from the usual matrix multiplication, which is an entirely different operation. The Kronecker product is also sometimes called the matrix direct product. The Kronecker product is named after the German mathematician Leopold Kronecker (1823–1891), even though there is little evidence that he was the first to define and use it. The Kronecker product has also been called the Zehfuss matrix and the Zehfuss product, after Johann Georg Zehfuss, who in 1858 described this matrix operation, but Kronecker product is currently the most widely used term. The misattribution to Kronecker rather than Zehfuss wa ...
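The block structure is easy to compute: block (i, j) of A ⊗ B is a_{ij} B. The same product turns matrix equations into vector equations through the standard identity vec(A X B) = (B^T ⊗ A) vec(X), which is how Lyapunov and Sylvester equations are rewritten as ordinary linear systems. The sketch below checks that identity on one integer example; all helper names are hypothetical and the matrices are assumed for illustration.

```python
def kron(A, B):
    """Kronecker product: block (i, j) of the result is A[i][j] * B."""
    p, q = len(B), len(B[0])
    return [[A[r // p][c // q] * B[r % p][c % q]
             for c in range(len(A[0]) * q)]
            for r in range(len(A) * p)]


def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


def transpose(M):
    return [list(col) for col in zip(*M)]


def vec(M):
    """Stack the columns of M (column-major order)."""
    return [M[i][j] for j in range(len(M[0])) for i in range(len(M))]


def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


print(kron([[1, 2], [3, 4]], [[0, 5], [6, 7]]))
# [[0, 5, 0, 10], [6, 7, 12, 14], [0, 15, 0, 20], [18, 21, 24, 28]]

# Check vec(A X B) == (B^T kron A) vec(X) on illustrative integer matrices.
A = [[1, 2], [3, 4]]
X = [[1, 0, 2], [-1, 3, 1]]
Bm = [[1, 2], [0, 1], [4, -1]]
print(vec(matmul(matmul(A, X), Bm)) == matvec(kron(transpose(Bm), A), vec(X)))  # True
```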

Conformable

In mathematics, a matrix is conformable if its dimensions are suitable for defining some operation (e.g. addition, multiplication, etc.).

Examples:

* If two matrices have the same dimensions (number of rows and number of columns), they are conformable for addition.
* Multiplication of two matrices is defined if and only if the number of columns of the left matrix is the same as the number of rows of the right matrix. That is, if A is an m × n matrix and B is a p × q matrix, then n needs to be equal to p for the matrix product AB to be defined. In this case, we say that A and B are conformable for multiplication (in that sequence).
* Since squaring a matrix involves multiplying it by itself (A^2 = AA), a matrix must be m × m (that is, it must be a square matrix) to be conformable for squaring. Thus, for example, only a square matrix can be idempotent.
* Only a square matrix is conformable for matrix inversion. However, the Moore–Penrose pseudoinverse and other generalized inverses do not hav ...
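The rules above depend only on the shapes (rows, columns), so they can be stated as small predicates on shape tuples. This is a sketch with hypothetical function names; it also returns the shape of a valid product, which for an m × n times n × q product is m × q.

```python
Shape = tuple[int, int]  # (rows, columns)


def conformable_for_addition(a: Shape, b: Shape) -> bool:
    return a == b


def conformable_for_multiplication(a: Shape, b: Shape) -> bool:
    """True if (matrix of shape a) times (matrix of shape b) is defined, in that order."""
    return a[1] == b[0]


def product_shape(a: Shape, b: Shape) -> Shape:
    if not conformable_for_multiplication(a, b):
        raise ValueError(f"cannot multiply {a} by {b}: {a[1]} != {b[0]}")
    return (a[0], b[1])


def conformable_for_squaring(a: Shape) -> bool:
    return conformable_for_multiplication(a, a)   # holds only for square shapes


print(product_shape((2, 3), (3, 4)))                    # (2, 4)
print(conformable_for_multiplication((3, 4), (2, 3)))   # False: order matters
print(conformable_for_squaring((3, 3)))                 # True
```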

Stable Polynomial

In the context of the characteristic polynomial of a differential equation or difference equation, a polynomial is said to be stable if either:

* all its roots lie in the open left half-plane, or
* all its roots lie in the open unit disk.

The first condition provides stability for continuous-time linear systems, and the second relates to stability of discrete-time linear systems. A polynomial with the first property is sometimes called a Hurwitz-stable polynomial, and one with the second property a Schur-stable polynomial. Stable polynomials arise in control theory and in the mathematical theory of differential and difference equations. A linear, time-invariant system (see LTI system theory) is said to be BIBO stable if every bounded input produces a bounded output. A linear system is BIBO stable if its characteristic polynomial is stable: the denominator of its transfer function is required to be Hurwitz stable if the system is in continuous time and Schur stable if it is in discrete time. In practic ...
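Hurwitz stability can be decided without computing roots by the Routh test: build the Routh array from the coefficients and check that every first-column entry is positive (after making the leading coefficient positive); a zero entry means the polynomial is not Hurwitz stable. The sketch below implements that test with exact rational arithmetic from Python's `fractions` module to avoid rounding at zero entries; the function name is hypothetical, and the discrete-time (Schur) case is not covered.

```python
from fractions import Fraction


def is_hurwitz_stable(coeffs):
    """Routh test: True if all roots lie in the open left half-plane.

    coeffs lists the coefficients from the highest degree down: [a_n, ..., a_1, a_0].
    """
    if not coeffs or coeffs[0] == 0:
        raise ValueError("leading coefficient must be nonzero")
    c = [Fraction(x) for x in coeffs]
    if c[0] < 0:                       # multiplying by -1 does not change the roots
        c = [-x for x in c]
    degree = len(c) - 1
    upper, lower = c[0::2], c[1::2]    # rows s^n and s^(n-1) of the Routh array
    first_column = [upper[0]]
    for _ in range(degree):
        if not lower or lower[0] == 0:
            return False               # zero pivot: roots on or right of the axis
        first_column.append(lower[0])
        nxt = []
        for j in range(len(upper) - 1):
            below = lower[j + 1] if j + 1 < len(lower) else 0
            nxt.append((lower[0] * upper[j + 1] - upper[0] * below) / lower[0])
        upper, lower = lower, nxt
    return all(x > 0 for x in first_column)


print(is_hurwitz_stable([1, 3, 2]))      # True:  s^2 + 3s + 2 = (s + 1)(s + 2)
print(is_hurwitz_stable([1, 1, 1, 1]))   # False: (s + 1)(s^2 + 1) has roots +/- i
print(is_hurwitz_stable([1, -1, 2]))     # False: roots have positive real part
```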