
Load–store Unit

In computer engineering, a load–store unit (LSU) is a specialized execution unit responsible for executing all load and store instructions, generating the virtual addresses of load and store operations, and loading data from memory into registers or storing it from registers back to memory (''Memory Systems: Cache, DRAM, Disk'' by Bruce Jacob, Spencer Ng, David Wang, 2007, page 298). The load–store unit usually includes a queue that acts as a waiting area for memory instructions, and the unit itself operates independently of other processor units. Load–store units may also be used in vector processing, and in such cases the term "load–store vector" may be used (''Computer Architecture: A Quantitative Approach'' by John L. Hennessy, David A. Patterson, 2011, pages 293-295). Some load–store units are also capable of executing simple fixed-point and/or integer operations. See also: Address-generation unit, Arithmetic–logic unit, Floating-point unit.
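The queue described above can be illustrated with a small model. This is a minimal sketch, not a description of any real LSU: the class `LoadStoreUnit`, its method names, the dictionary-backed memory, and the strictly in-order draining are all assumptions made for illustration (real units typically reorder accesses and forward store data to later loads).

```python
from collections import deque


class LoadStoreUnit:
    """Toy LSU: computes addresses, queues memory operations, drains them in order."""

    def __init__(self, memory):
        self.memory = memory   # hypothetical memory: address -> value
        self.queue = deque()   # waiting area for pending memory operations
        self.regs = {}         # hypothetical register file: name -> value

    def issue_load(self, dest_reg, base, offset):
        # Address generation (base + offset) happens at issue time.
        self.queue.append(("load", dest_reg, base + offset))

    def issue_store(self, value, base, offset):
        self.queue.append(("store", value, base + offset))

    def step(self):
        """Execute the oldest queued operation; return False when the queue is empty."""
        if not self.queue:
            return False
        kind, operand, addr = self.queue.popleft()
        if kind == "load":
            self.regs[operand] = self.memory.get(addr, 0)
        else:
            self.memory[addr] = operand
        return True


lsu = LoadStoreUnit(memory={})
lsu.issue_store(99, base=0x100, offset=8)
lsu.issue_load("r1", base=0x100, offset=8)
while lsu.step():
    pass
print(lsu.regs["r1"])  # 99
```

Because the store is queued before the load to the same address, in-order draining guarantees the load observes the stored value.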

Computer Engineering

Computer engineering (CE, CoE, or CpE) is a branch of engineering specialized in developing computer hardware and software. It integrates several fields of electrical engineering, electronics engineering and computer science. Computer engineering is referred to as ''electrical and computer engineering'' or ''computer science and engineering'' at some universities. Computer engineers require training in hardware-software integration, software design, and software engineering. The field can encompass areas such as electromagnetism, artificial intelligence (AI), robotics, computer networks, computer architecture and operating systems. Computer engineers are involved in many hardware and software aspects of computing, from the design of individual microcontrollers, microprocessors, personal computers, and supercomputers, to circuit design. This field of engineering not only focuses on how computer systems themselves work, but also on how to integrate them into the larger pictur ...

Execution Unit

In computer engineering, an execution unit (E-unit or EU) is a part of a processing unit that performs the operations and calculations forwarded from the instruction unit. It may have its own internal control sequence unit (not to be confused with a CPU's main control unit), some registers, and other internal units such as an arithmetic logic unit, address generation unit, floating-point unit, load–store unit, branch execution unit or other smaller and more specific components, and can be tailored to support a certain datatype, such as integers or floating-point numbers. It is common for modern processing units to have multiple parallel functional units within their execution units, which is referred to as superscalar design. Th ...

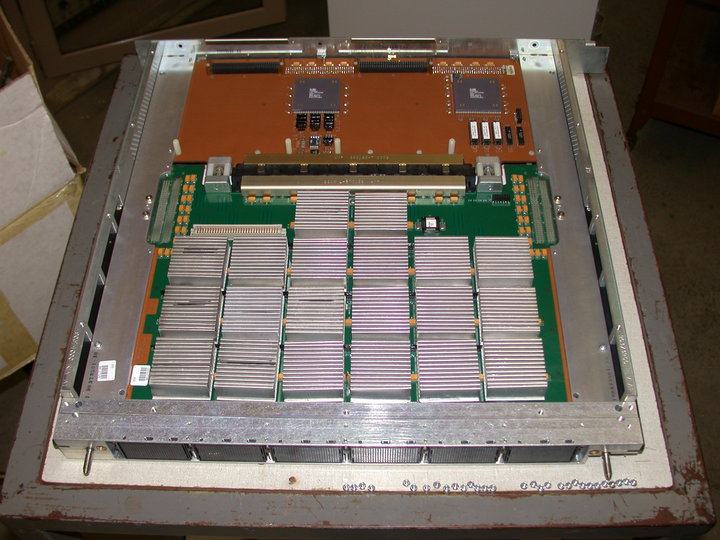

Computer Memory

Computer memory stores information, such as data and programs, for immediate use in the computer. The term ''memory'' is often synonymous with the terms ''RAM'', ''main memory'', or ''primary storage''. Archaic synonyms for main memory include ''core'' (for magnetic core memory) and ''store''. Main memory operates at a high speed compared to mass storage, which is slower but less expensive per bit and higher in capacity. Besides storing opened programs and data being actively processed, computer memory serves as a mass storage cache and write buffer to improve both reading and writing performance. Operating systems borrow RAM capacity for caching so long as it is not needed by running software. If needed, contents of the computer memory can be transferred to storage; a common way of doing this is through a memory management technique called ''virtual memory''. Modern computer memory is implemented as semiconductor memory, where data is stored within memory cell (com ...

Register (computing)

A processor register is a quickly accessible location available to a computer's processor. Registers usually consist of a small amount of fast storage, although some registers have specific hardware functions, and may be read-only or write-only. In computer architecture, registers are typically addressed by mechanisms other than main memory, but may in some cases be assigned a memory address, e.g. on the DEC PDP-10 and ICT 1900. Almost all computers, whether load/store architecture or not, load items of data from a larger memory into registers where they are used for arithmetic operations, bitwise operations, and other operations, and are manipulated or tested by machine instructions. Manipulated items are then often stored back to main memory, either by the same instruction or by a subsequent one. Modern processors use either static or dynamic random-access memory (RAM) as main memory, with the latter usually accessed via one or more cache levels. Processor registers are normally ...

Vector Processing

In computing, a vector processor or array processor is a central processing unit (CPU) that implements an instruction set whose instructions are designed to operate efficiently and effectively on large one-dimensional arrays of data called ''vectors''. This is in contrast to scalar processors, whose instructions operate on single data items only, and in contrast to some of those same scalar processors having additional single instruction, multiple data (SIMD) or SIMD within a register (SWAR) arithmetic units. Vector processors can greatly improve performance on certain workloads, notably numerical simulation, compression and similar tasks. Vector processing techniques also operate in video-game console hardware and in graphics accelerators. Vector machines appeared in the early 1970s and dominated supercomputer design through the 1970s into the 1990s, notably the various Cray platforms ...

Address Generation Unit

The address generation unit (AGU), sometimes also called address computation unit (ACU), is an execution unit inside central processing units (CPUs) that calculates addresses used by the CPU to access main memory. By having address calculations handled by separate circuitry that operates in parallel with the rest of the CPU, the number of CPU cycles required for executing various machine instructions can be reduced, bringing performance improvements. While performing various operations, CPUs need to calculate memory addresses required for fetching data from memory; for example, in-memory positions of array elements must be calculated before the CPU can fetch the data from actual memory locations. Those address-generation calculations involve different integer arithmetic operations, such as addition, subtraction, modulo operations, or bit shifts. Often, calculating a memory address involves more than one general-purpose machine instruction, which do not necessarily decod ...
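The array-element case mentioned above comes down to a small piece of integer arithmetic. The following is a minimal sketch under the assumption of a contiguous array; the function name `element_address` and its parameters are hypothetical and chosen only for illustration.

```python
def element_address(base: int, index: int, elem_size: int) -> int:
    """Address of array[index] for a contiguous array that starts at `base`."""
    # When the element size is a power of two, the multiplication can be
    # replaced by a bit shift, one of the operations listed above.
    if elem_size > 0 and elem_size & (elem_size - 1) == 0:
        return base + (index << (elem_size.bit_length() - 1))
    # Otherwise fall back to an ordinary multiply-and-add.
    return base + index * elem_size


print(hex(element_address(0x1000, 3, 4)))  # 0x100c
```

Both branches compute the same value, `base + index * elem_size`; the shift form only shows why power-of-two element sizes are cheap to address.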

Arithmetic Logic Unit

In computing, an arithmetic logic unit (ALU) is a combinational digital circuit that performs arithmetic and bitwise operations on integer binary numbers. This is in contrast to a floating-point unit (FPU), which operates on floating-point numbers. It is a fundamental building block of many types of computing circuits, including the central processing unit (CPU) of computers, FPUs, and graphics processing units (GPUs). The inputs to an ALU are the data to be operated on, called operands, and a code indicating the operation to be performed (opcode); the ALU's output is the result of the performed operation. In many designs, the ALU also has status inputs or outputs, or both, which convey information about a previous operation or the current operation, respectively, between the ALU and external status registers. Signals: An ALU has a variety of input and output nets, which are the electrical conductors used to convey Digital signal (electroni ...
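The operand, opcode, and status-output relationship described above can be modelled in a few lines. This is a sketch only: the 8-bit width, the opcode names, the `AluResult` type, and the convention that `carry` also signals a borrow on subtraction are assumptions for illustration, not taken from any particular design.

```python
from typing import NamedTuple

WIDTH = 8                    # assumed word width for this sketch
MASK = (1 << WIDTH) - 1


class AluResult(NamedTuple):
    value: int   # result truncated to WIDTH bits
    zero: bool   # status output: result is zero
    carry: bool  # status output: carry out (ADD) or borrow (SUB)


def alu(opcode: str, a: int, b: int) -> AluResult:
    """Apply the operation selected by `opcode` to operands `a` and `b`."""
    a &= MASK
    b &= MASK
    if opcode == "ADD":
        raw = a + b
    elif opcode == "SUB":
        raw = a - b
    elif opcode == "AND":
        raw = a & b
    elif opcode == "OR":
        raw = a | b
    elif opcode == "XOR":
        raw = a ^ b
    else:
        raise ValueError(f"unknown opcode: {opcode}")
    value = raw & MASK
    return AluResult(value, value == 0, raw > MASK or raw < 0)


print(alu("ADD", 200, 100))  # AluResult(value=44, zero=False, carry=True)
```

The status flags are returned alongside the result rather than written to a separate register, which keeps the function purely combinational in the same spirit as the circuit it models.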

Floating-point Unit

A floating-point unit (FPU), also called a numeric processing unit (NPU) or, colloquially, a math coprocessor, is a part of a computer system specially designed to carry out operations on floating-point numbers. Typical operations are addition, subtraction, multiplication, division, and square root. Modern designs generally include a fused multiply-add instruction, an operation that was found to be very common in real-world code. Some FPUs can also perform various transcendental functions such as exponential or trigonometric calculations, but the accuracy can be low, so some systems prefer to compute these functions in software. Floating-point operations were originally handled in software in early computers. Over time, manufacturers began to provide standardized floating-point libraries as part of their software collections. Some machines, those dedicated to scientific processing, would include specialized hardware to perform some of these tasks with much greater speed. The introduction of microcode in ...

Load–store Architecture

In computer engineering, a load–store architecture (or a register–register architecture) is an instruction set architecture that divides instructions into two categories: memory access (load and store between memory and registers) and ALU operations (which only occur between registers). Some RISC architectures such as PowerPC, SPARC, RISC-V, ARM, and MIPS are load–store architectures. For instance, in a load–store approach both operands and destination for an ADD operation must be in registers. This differs from a register–memory architecture (for example, a CISC instruction set architecture such as x86) in which one of the operands for the ADD operation may be in memory, while the other is in a register. The ear ...
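The split between memory-access instructions and register-only ALU instructions can be sketched with a toy machine. Everything here is hypothetical and for illustration only: the class `LoadStoreMachine`, its four registers, and its dictionary-backed memory do not correspond to any real instruction set.

```python
class LoadStoreMachine:
    """Toy load-store machine: only load/store touch memory; add is register-only."""

    def __init__(self, num_regs: int = 4):
        self.regs = [0] * num_regs
        self.mem = {}  # hypothetical memory: address -> value

    def load(self, rd: int, addr: int) -> None:
        # Memory access: memory -> register
        self.regs[rd] = self.mem.get(addr, 0)

    def store(self, rs: int, addr: int) -> None:
        # Memory access: register -> memory
        self.mem[addr] = self.regs[rs]

    def add(self, rd: int, rs1: int, rs2: int) -> None:
        # ALU operation: both operands and the destination are registers
        self.regs[rd] = self.regs[rs1] + self.regs[rs2]


m = LoadStoreMachine()
m.mem[0x10] = 7
m.mem[0x14] = 35
m.load(0, 0x10)    # r0 <- mem[0x10]
m.load(1, 0x14)    # r1 <- mem[0x14]
m.add(2, 0, 1)     # r2 <- r0 + r1
m.store(2, 0x18)   # mem[0x18] <- r2
print(m.mem[0x18])  # 42
```

Adding two values that live in memory takes four instructions here; a register–memory design could fold one of the loads into the ADD itself.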