
Likert Scale

A Likert scale is a psychometric scale named after its inventor, American social psychologist Rensis Likert, which is commonly used in research questionnaires. It is the most widely used approach to scaling responses in survey research, such that the term (or more fully the Likert-type scale) is often used interchangeably with "rating scale", although there are other types of rating scales. Likert distinguished between a scale proper, which emerges from collective responses to a set of items (usually eight or more), and the format in which responses are scored along a range. Technically speaking, a Likert scale refers only to the former. The difference between these two concepts has to do with the distinction Likert made between the underlying phenomenon being investigated and the means of capturing variation that points to the underlying phenomenon. When responding to a Likert item, respondents specify their level of agreement or disagreement on a symmetric agree-disa ...
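The distinction between a single item and the scale proper can be illustrated with a minimal sketch. It assumes a summated score over items coded 1 to 5; the function name `likert_scale_score` and the coding range are illustrative assumptions, not part of the source.

```python
def likert_scale_score(item_responses, low=1, high=5):
    """Sum one respondent's item responses into a single scale score.

    Assumes every item is coded on the same low..high range and that
    any reverse-worded items have already been recoded.
    """
    for response in item_responses:
        if not low <= response <= high:
            raise ValueError(f"response {response} outside {low}..{high}")
    return sum(item_responses)


# One respondent answering eight items (hypothetical data).
responses = [4, 5, 3, 4, 2, 5, 4, 3]
print(likert_scale_score(responses))
```

Each element of `responses` is one Likert item; only the combined score corresponds to the scale in Likert's stricter sense.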

Psychometrics

Psychometrics is a field of study within psychology concerned with the theory and technique of measurement. Psychometrics generally covers specialized fields within psychology and education devoted to testing, measurement, assessment, and related activities. Psychometrics is concerned with the objective measurement of latent constructs that cannot be directly observed. Examples of latent constructs include intelligence, introversion, mental disorders, and educational achievement. The levels of individuals on nonobservable latent variables are inferred through mathematical modeling based on what is observed from individuals' responses to items on tests and scales. Practitioners are described as psychometricians, although not all who engage in psychometric research go by this title. Psychometricians usually possess specific qualifications, such as degrees or certifications, and most are psychologists with advanced graduate training in psychometrics and measurement theory. ...

Central Limit Theorem

In probability theory, the central limit theorem (CLT) states that, under appropriate conditions, the distribution of a normalized version of the sample mean converges to a standard normal distribution. This holds even if the original variables themselves are not normally distributed. There are several versions of the CLT, each applying in the context of different conditions. The theorem is a key concept in probability theory because it implies that probabilistic and statistical methods that work for normal distributions can be applicable to many problems involving other types of distributions. This theorem has seen many changes during the formal development of probability theory. Previous versions of the theorem date back to 1811, but in its modern form it was only precisely stated as late as 1920. In statistics, the CLT can be stated as: let X_1, X_2, \dots, X_n denote a Sampling ...
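The claim that normalized sample means behave like a standard normal variable, even when the underlying data are not normal, can be checked with a small simulation. This is a sketch under stated assumptions: the choice of an Exponential(1) population (mean 1, standard deviation 1), the sample size of 100, and the helper names are all illustrative.

```python
import math
import random


def standardized_sample_mean(rng, n):
    """Draw n Exponential(1) values and return sqrt(n) * (mean - mu) / sigma."""
    xs = [rng.expovariate(1.0) for _ in range(n)]
    mean = sum(xs) / n
    mu, sigma = 1.0, 1.0  # true mean and standard deviation of Exponential(1)
    return math.sqrt(n) * (mean - mu) / sigma


def fraction_within(z_values, bound=1.96):
    """Share of values whose absolute value does not exceed bound."""
    return sum(abs(z) <= bound for z in z_values) / len(z_values)


rng = random.Random(0)  # fixed seed so the run is repeatable
z_values = [standardized_sample_mean(rng, n=100) for _ in range(5000)]
coverage = fraction_within(z_values)
print(f"share within +/-1.96: {coverage:.3f}")
```

For a standard normal variable about 95% of the mass lies within ±1.96, so a printed share close to 0.95 is consistent with the theorem even though the exponential distribution is strongly skewed.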

Item Response Theory

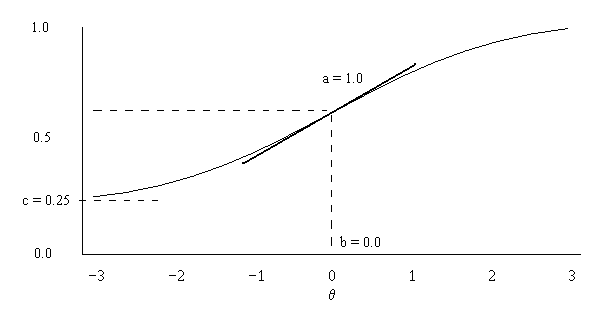

In psychometrics, item response theory (IRT, also known as latent trait theory, strong true score theory, or modern mental test theory) is a paradigm for the design, analysis, and scoring of tests, questionnaires, and similar instruments measuring abilities, attitudes, or other variables. It is a theory of testing based on the relationship between individuals' performances on a test item and the test takers' levels of performance on an overall measure of the ability that item was designed to measure. Several different statistical models are used to represent both item and test taker characteristics. Unlike simpler alternatives for creating scales and evaluating questionnaire responses, it does not assume that each item is equally difficult. This distinguishes IRT from, for instance, Likert scaling, in which "All items are assumed to be replications of each other or in other words items are considered to be parallel instruments". By contrast ...

Factor Analysis

Factor analysis is a statistical method used to describe variability among observed, correlated variables in terms of a potentially lower number of unobserved variables called factors. For example, it is possible that variations in six observed variables mainly reflect the variations in two unobserved (underlying) variables. Factor analysis searches for such joint variations in response to unobserved latent variables. The observed variables are modelled as linear combinations of the potential factors plus "error" terms, hence factor analysis can be thought of as a special case of errors-in-variables models. Simply put, the factor loading of a variable quantifies the extent to which the variable is related to a given factor. A common rationale behind factor analytic methods is that the information gained about the interdependencies between observed variables can be used later to reduce the set of variables in a dataset. Factor analysis is commonly used in psychometrics, pers ...
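The "linear combinations of factors plus error" description can be written out as a worked equation. The symbols below are assumptions chosen for illustration (the blurb itself names none): $x_i$ is the $i$-th observed variable, $\mu_i$ its mean, $f_j$ the $j$-th factor, $l_{ij}$ the loading of variable $i$ on factor $j$, and $\varepsilon_i$ the error term.

```latex
x_i = \mu_i + \sum_{j=1}^{k} l_{ij} f_j + \varepsilon_i,
\qquad i = 1, \dots, p
```

In the example above, $p = 6$ observed variables would be explained by $k = 2$ factors, so each observed variable has two loadings.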

Latent Variable Model

A latent variable model is a statistical model that relates a set of observable variables (also called manifest variables or indicators) to a set of latent variables. Latent variable models are applied across a wide range of fields such as biology, computer science, and social science. Common use cases for latent variable models include applications in psychometrics (e.g., summarizing responses to a set of survey questions with a factor analysis model positing a smaller number of psychological attributes, such as the trait extraversion, that are presumed to cause the survey question responses), and natural language processing (e.g., a topic model summarizing a corpus of texts with a number of "topics"). It is assumed that the responses on the indicators or manifest variables are the result of an individual's position on the latent variable(s), and that the manifest variables have nothing in common after controlling for the latent variable (local independence). Different ...

Consensus-based Assessment

Consensus-based assessment expands on the common practice of consensus decision-making and the theoretical observation that expertise can be closely approximated by large numbers of novices or journeymen. It creates a method for determining measurement standards for very ambiguous domains of knowledge, such as emotional intelligence, politics, religion, values and culture in general. From this perspective, the shared knowledge that forms cultural consensus can be assessed in much the same way as expertise or general intelligence. Measurement standards for general intelligence: Consensus-based assessment is based on a simple finding: that samples of individuals with differing competence (e.g., experts and apprentices) rate relevant scenarios, using Likert scales, with similar mean ratings. Thus, from the perspective of a CBA framework, cultural standards for scoring keys can be derived from the population that is being assessed. Peter Legree and Joseph Psotka, working together o ...

Ordered Logit

In statistics, the ordered logit model or proportional odds logistic regression is an ordinal regression model (that is, a regression model for ordinal dependent variables) first considered by Peter McCullagh. For example, if one question on a survey is to be answered by a choice among "poor", "fair", "good", "very good" and "excellent", and the purpose of the analysis is to see how well that response can be predicted by the responses to other questions, some of which may be quantitative, then ordered logistic regression may be used. It can be thought of as an extension of the logistic regression model that applies to dichotomous dependent variables, allowing for more than two (ordered) response categories. The model and the proportional odds assumption: The model only applies to data that meet the proportional odds assumption, the meaning of which can be exemplified as follows. Suppose there are five outcomes: "poor", "fair", "good", "very good", and "excellent". We a ...
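A minimal sketch of how an ordered logit model turns a linear predictor into probabilities for the five ordered outcomes, using the standard cumulative form P(Y ≤ j) = 1 / (1 + exp(−(θ_j − xβ))). The function name, the cutpoint values, and the predictor value are hypothetical illustrations, not fitted estimates.

```python
import math


def ordered_logit_probs(x_beta, cutpoints):
    """Return category probabilities for an ordered logit model.

    x_beta    -- the linear predictor for one observation
    cutpoints -- increasing thresholds; k cutpoints give k + 1 categories
    """
    # Cumulative probabilities P(Y <= j); the last category closes at 1.
    cumulative = [1.0 / (1.0 + math.exp(-(c - x_beta))) for c in cutpoints]
    cumulative.append(1.0)
    # Differences of adjacent cumulative probabilities give each category.
    probs = [cumulative[0]]
    for j in range(1, len(cumulative)):
        probs.append(cumulative[j] - cumulative[j - 1])
    return probs


labels = ["poor", "fair", "good", "very good", "excellent"]
probs = ordered_logit_probs(x_beta=0.5, cutpoints=[-1.0, 0.0, 1.0, 2.0])
for label, p in zip(labels, probs):
    print(f"{label}: {p:.3f}")
```

Because the same `x_beta` is subtracted from every cutpoint, a change in the predictors shifts all cumulative log-odds by the same amount, which is the proportional odds assumption in this parameterization.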

Kruskal–Wallis Test

The Kruskal–Wallis test by ranks, Kruskal–Wallis H test (named after William Kruskal and W. Allen Wallis), or one-way ANOVA on ranks is a non-parametric statistical test for testing whether samples originate from the same distribution. It is used for comparing two or more independent samples of equal or different sample sizes. It extends the Mann–Whitney U test, which is used for comparing only two groups. The parametric equivalent of the Kruskal–Wallis test is the one-way analysis of variance (ANOVA). A significant Kruskal–Wallis test indicates that at least one sample stochastically dominates one other sample. The test does not identify where this stochastic dominance occurs or for how many pairs of groups stochastic dominance obtains. For analyzing the specific sample pairs for stochastic dominance, Dunn's test, pairwise Mann–Whitney tests with Bonferroni correction, or the more powerful but less well known Conover–Iman test are sometimes used. It is suppo ...
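As a rough illustration of "ANOVA on ranks", the sketch below computes the H statistic from pooled ranks using H = 12 / (N(N + 1)) · Σ R_i² / n_i − 3(N + 1), where R_i is the rank sum of group i. It assigns average ranks to tied values but omits the tie correction factor, so it is exact only for untied data; the function names and sample data are illustrative.

```python
def average_ranks(values):
    """Return 1-based ranks, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        midrank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = midrank
        i = j + 1
    return ranks


def kruskal_wallis_h(groups):
    """Kruskal-Wallis H statistic without the tie correction factor."""
    pooled = [x for group in groups for x in group]
    n_total = len(pooled)
    ranks = average_ranks(pooled)
    weighted = 0.0
    start = 0
    for group in groups:
        rank_sum = sum(ranks[start:start + len(group)])
        weighted += rank_sum ** 2 / len(group)
        start += len(group)
    return 12.0 / (n_total * (n_total + 1)) * weighted - 3 * (n_total + 1)


h = kruskal_wallis_h([[1, 2, 3], [4, 5, 6], [7, 8, 9]])
print(round(h, 4))
```

Under the null hypothesis H is approximately chi-squared distributed with (number of groups − 1) degrees of freedom, so a large value suggests the groups do not share one distribution.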

McNemar Test

McNemar's test is a statistical test used on paired nominal data. It is applied to 2 × 2 contingency tables with a dichotomous trait, with matched pairs of subjects, to determine whether the row and column marginal frequencies are equal (that is, whether there is "marginal homogeneity"). It is named after Quinn McNemar, who introduced it in 1947. An application of the test in genetics is the transmission disequilibrium test for detecting linkage disequilibrium. The commonly used parameters to assess a diagnostic test in medical sciences are sensitivity and specificity. Sensitivity (or recall) is the ability of a test to correctly identify the people with disease. Specificity is the ability of the test to correctly identify those without the disease. Now suppose two tests are performed on the same group of patients, and suppose that these tests have identical sensitivity and specificity. In this situation one may be carried away by these findings and presume that b ...
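The test of marginal homogeneity depends only on the two discordant cells of the 2 × 2 table. A minimal sketch, assuming the uncorrected statistic (b − c)² / (b + c), where `b` and `c` are the counts of pairs on which the two measurements disagree in each direction; the function name and the counts are illustrative.

```python
def mcnemar_statistic(b, c):
    """McNemar's chi-squared statistic without continuity correction.

    b -- pairs positive on the first measurement, negative on the second
    c -- pairs negative on the first measurement, positive on the second
    """
    if b + c == 0:
        raise ValueError("no discordant pairs; the statistic is undefined")
    return (b - c) ** 2 / (b + c)


# Hypothetical counts: 10 pairs disagree one way, 5 the other way.
print(round(mcnemar_statistic(10, 5), 4))
```

The result is compared with a chi-squared distribution with one degree of freedom (critical value about 3.84 at the 5% level). For small discordant counts an exact binomial version is generally preferred.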

Chi-squared Test

A chi-squared test (also chi-square or χ² test) is a statistical hypothesis test used in the analysis of contingency tables when the sample sizes are large. In simpler terms, this test is primarily used to examine whether two categorical variables (two dimensions of the contingency table) are independent in influencing the test statistic (values within the table). The test is valid when the test statistic is chi-squared distributed under the null hypothesis, specifically Pearson's chi-squared test and variants thereof. Pearson's chi-squared test is used to determine whether there is a statistically significant difference between the expected frequencies and the observed frequencies in one or more categories of a contingency table. For contingency tables with smaller sample sizes, a Fisher's exact test is used instead. In the standard application ...
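The comparison of observed and expected frequencies can be sketched for a test of independence. Under independence the expected count in each cell is (row total × column total) / N, and the statistic is Σ (O − E)² / E. The function name and the example table are illustrative assumptions.

```python
def pearson_chi_squared(table):
    """Return (statistic, degrees_of_freedom) for a contingency table.

    table -- list of rows of observed counts; assumes every row and
             column total is positive so each expected count is non-zero.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            statistic += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, dof


stat, dof = pearson_chi_squared([[10, 20], [20, 10]])
print(round(stat, 4), dof)
```

The statistic is then compared with a chi-squared distribution with `dof` degrees of freedom. The approximation relies on reasonably large expected counts, which is why an exact test is used for small samples.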