|

Lex (software)

Lex is a computer program that generates lexical analyzers ("scanners" or "lexers"). It is commonly used with the yacc parser generator and is the standard lexical analyzer generator on many Unix and Unix-like systems. An equivalent tool is specified as part of the POSIX standard. Lex reads an input stream specifying the lexical analyzer and writes source code which implements the lexical analyzer in the C programming language. In addition to C, some old versions of Lex could generate a lexer in Ratfor. History Lex was originally written by Mike Lesk and Eric Schmidt and described in 1975. In the following years, Lex became the standard lexical analyzer generator on many Unix and Unix-like systems. In 1983, Lex was one of several UNIX tools available for Charles River Data Systems' UNOS operating system under Bell Laboratories license. Although originally distributed as proprietary software, some versions of Lex are now open-source. Open-source versions of Lex, based on th ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

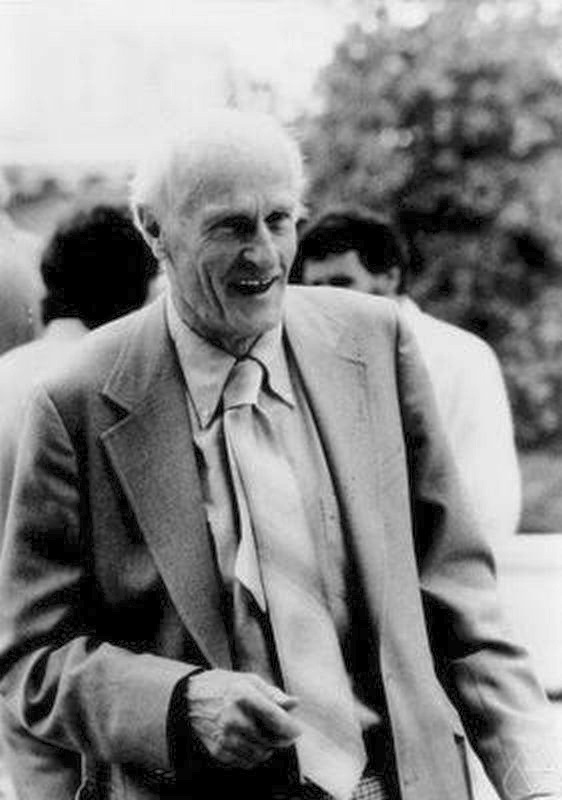

Mike Lesk

Michael E. Lesk (born 1945) is an American computer scientist. Biography In the 1960s, Michael Lesk worked for the SMART Information Retrieval System project, wrote much of its retrieval code and did many of the retrieval experiments, as well as obtaining a BA degree in Physics and Chemistry from Harvard College in 1964 and a PhD from Harvard University in Chemical Physics in 1969. From 1970 to 1984, Lesk worked at Bell Labs in the group that built Unix. Lesk wrote Unix tools for word processing ('' tbl'', '' refer'', and the standard ''ms'' macro package, all for ''troff''), for compiling ('' Lex''), and for networking (''uucp''). He also wrote the Portable I/O Library (the predecessor to stdio.h in C) and contributed significantly to the development of the C language preprocessor. In 1984, he left to work for Bellcore, where he managed the computer science research group. There, Lesk worked on specific information systems applications, mostly with geography (a system for dr ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Ratfor

Ratfor (short for ''Rational Fortran'') is a programming language implemented as a preprocessor for Fortran#FORTRAN 66, Fortran 66. It provides Structured programming, modern control structures, unavailable in Fortran 66, to replace GOTOs and statement numbers. Features Ratfor provides the following kinds of flow-control statements, described by Kernighan and Plauger as "shamelessly stolen from the language C (programming language), C, developed for the Unix, UNIX operating system by Dennis Ritchie, D.M. Ritchie" ("Software Tools", p. 318): * statement grouping with braces * if-else, while, for, do, repeat-until, break, next * "free-form" statements, i.e., not constrained by Fortran format rules * , >=, ... in place of .LT., .GT., .GE., ... * include * # comments For example, the following code if (a > b) else might be translated as IF(.NOT.(A.GT.B))GOTO 1 MAX = A GOTO 2 1 CONTINUE MAX = B 2 CONTINUE The version of Ratfor in ''Softwar ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Regular Expressions

A regular expression (shortened as regex or regexp), sometimes referred to as rational expression, is a sequence of character (computing), characters that specifies a pattern matching, match pattern in string (computer science), text. Usually such patterns are used by string-searching algorithms for "find" or "find and replace" operations on string (computer science), strings, or for data validation, input validation. Regular expression techniques are developed in theoretical computer science and formal language theory. The concept of regular expressions began in the 1950s, when the American mathematician Stephen Cole Kleene formalized the concept of a regular language. They came into common use with Unix text-processing utilities. Different syntax (programming languages), syntaxes for writing regular expressions have existed since the 1980s, one being the POSIX standard and another, widely used, being the Perl syntax. Regular expressions are used in search engines, in search ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Flex Lexical Analyser

Flex (fast lexical analyzer generator) is a free and open-source software alternative to lex. It is a computer program that generates lexical analyzers (also known as "scanners" or "lexers"). It is frequently used as the lex implementation together with Berkeley Yacc parser generator on BSD-derived operating systems (as both lex and yacc are part of POSIX), or together with GNU bison (a version of yacc) in *BSD ports and in Linux distributions. Unlike Bison, flex is not part of the GNU Project and is not released under the GNU General Public License, although a manual for Flex was produced and published by the Free Software Foundation. History Flex was written in C around 1987 by Vern Paxson, with the help of many ideas and much inspiration from Van Jacobson. Original version by Jef Poskanzer. The fast table representation is a partial implementation of a design done by Van Jacobson. The implementation was done by Kevin Gong and Vern Paxson. Example lexical analyzer ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Compiler

In computing, a compiler is a computer program that Translator (computing), translates computer code written in one programming language (the ''source'' language) into another language (the ''target'' language). The name "compiler" is primarily used for programs that translate source code from a high-level programming language to a lower level language, low-level programming language (e.g. assembly language, object code, or machine code) to create an executable program.Compilers: Principles, Techniques, and Tools by Alfred V. Aho, Ravi Sethi, Jeffrey D. Ullman - Second Edition, 2007 There are many different types of compilers which produce output in different useful forms. A ''cross-compiler'' produces code for a different Central processing unit, CPU or operating system than the one on which the cross-compiler itself runs. A ''bootstrap compiler'' is often a temporary compiler, used for compiling a more permanent or better optimised compiler for a language. Related software ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Function (programming)

In computer programming, a function (also procedure, method, subroutine, routine, or subprogram) is a callable unit of software logic that has a well-defined interface and behavior and can be invoked multiple times. Callable units provide a powerful programming tool. The primary purpose is to allow for the decomposition of a large and/or complicated problem into chunks that have relatively low cognitive load and to assign the chunks meaningful names (unless they are anonymous). Judicious application can reduce the cost of developing and maintaining software, while increasing its quality and reliability. Callable units are present at multiple levels of abstraction in the programming environment. For example, a programmer may write a function in source code that is compiled to machine code that implements similar semantics. There is a callable unit in the source code and an associated one in the machine code, but they are different kinds of callable units with different implica ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Statement (programming)

In computer programming, a statement is a syntactic unit of an imperative programming language that expresses some action to be carried out. A program written in such a language is formed by a sequence of one or more statements. A statement may have internal components (e.g. expressions). Many programming languages (e.g. Ada, Algol 60, C, Java, Pascal) make a distinction between statements and definitions/declarations. A definition or declaration specifies the data on which a program is to operate, while a statement specifies the actions to be taken with that data. Statements which cannot contain other statements are ''simple''; those which can contain other statements are ''compound''. The appearance of a statement (and indeed a program) is determined by its syntax or grammar. The meaning of a statement is determined by its semantics. Simple statements Simple statements are complete in themselves; these include assignments, subroutine calls, and a few statements which may ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Regular Expression

A regular expression (shortened as regex or regexp), sometimes referred to as rational expression, is a sequence of characters that specifies a match pattern in text. Usually such patterns are used by string-searching algorithms for "find" or "find and replace" operations on strings, or for input validation. Regular expression techniques are developed in theoretical computer science and formal language theory. The concept of regular expressions began in the 1950s, when the American mathematician Stephen Cole Kleene formalized the concept of a regular language. They came into common use with Unix text-processing utilities. Different syntaxes for writing regular expressions have existed since the 1980s, one being the POSIX standard and another, widely used, being the Perl syntax. Regular expressions are used in search engines, in search and replace dialogs of word processors and text editors, in text processing utilities such as sed and AWK, and in lexical analysis ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Header File

An include directive instructs a text file processor to replace the directive text with the content of a specified file. The act of including may be logical in nature. The processor may simply process the include file content at the location of the directive without creating a combined file. Different processors may use different syntax. The C preprocessor (used with C, C++ and in other contexts) defines an include directive as a line that starts #include and is followed by a file specification. COBOL defines an include directive indicated by copy in order to include a copybook. Generally, for C/C++ the include directive is used to include a header file, but can include any file. Although relatively uncommon, it is sometimes used to include a body file such as a .c file. The include directive can support encapsulation and reuse. Different parts of a system can be segregated into logical groupings yet rely on one another via file inclusion. C and C++ are designed to leverag ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Macro (computer Science)

In computer programming, a macro (short for "macro instruction"; ) is a rule or pattern that specifies how a certain input should be mapped to a replacement output. Applying a macro to an input is known as macro expansion. The input and output may be a sequence of lexical tokens or characters, or a syntax tree. Character macros are supported in software applications to make it easy to invoke common command sequences. Token and tree macros are supported in some programming languages to enable code reuse or to extend the language, sometimes for domain-specific languages. Macros are used to make a sequence of computing instructions available to the programmer as a single program statement, making the programming task less tedious and less error-prone. Thus, they are called "macros" because a "big" block of code can be expanded from a "small" sequence of characters. Macros often allow positional or keyword parameters that dictate what the conditional assembler program gen ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Flex Lexical Analyser

Flex (fast lexical analyzer generator) is a free and open-source software alternative to lex. It is a computer program that generates lexical analyzers (also known as "scanners" or "lexers"). It is frequently used as the lex implementation together with Berkeley Yacc parser generator on BSD-derived operating systems (as both lex and yacc are part of POSIX), or together with GNU bison (a version of yacc) in *BSD ports and in Linux distributions. Unlike Bison, flex is not part of the GNU Project and is not released under the GNU General Public License, although a manual for Flex was produced and published by the Free Software Foundation. History Flex was written in C around 1987 by Vern Paxson, with the help of many ideas and much inspiration from Van Jacobson. Original version by Jef Poskanzer. The fast table representation is a partial implementation of a design done by Van Jacobson. The implementation was done by Kevin Gong and Vern Paxson. Example lexical analyzer ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

OpenSolaris

OpenSolaris () is a discontinued open-source computer operating system for SPARC and x86 based systems, created by Sun Microsystems and based on Solaris. Its development began in the mid 2000s and ended in 2010. OpenSolaris was developed as a combination of several software ''consolidations'' that were open sourced starting with Solaris 10. It includes a variety of free software, including popular desktop and server software. It is a descendant of the UNIX System V Release 4 (SVR4) code base developed by Sun and AT&T in the late 1980s and is the only version of the System V variant of UNIX available as open source. After Oracle's acquisition of Sun Microsystems in 2010, Oracle discontinued development of OpenSolaris in house, pivoting to focus exclusively on the development of the proprietary Solaris Express (now Oracle Solaris). Prior to Oracle's close-sourcing Solaris, a group of former OpenSolaris developers began efforts to fork the core software under the name ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |