
Inversion Encoding

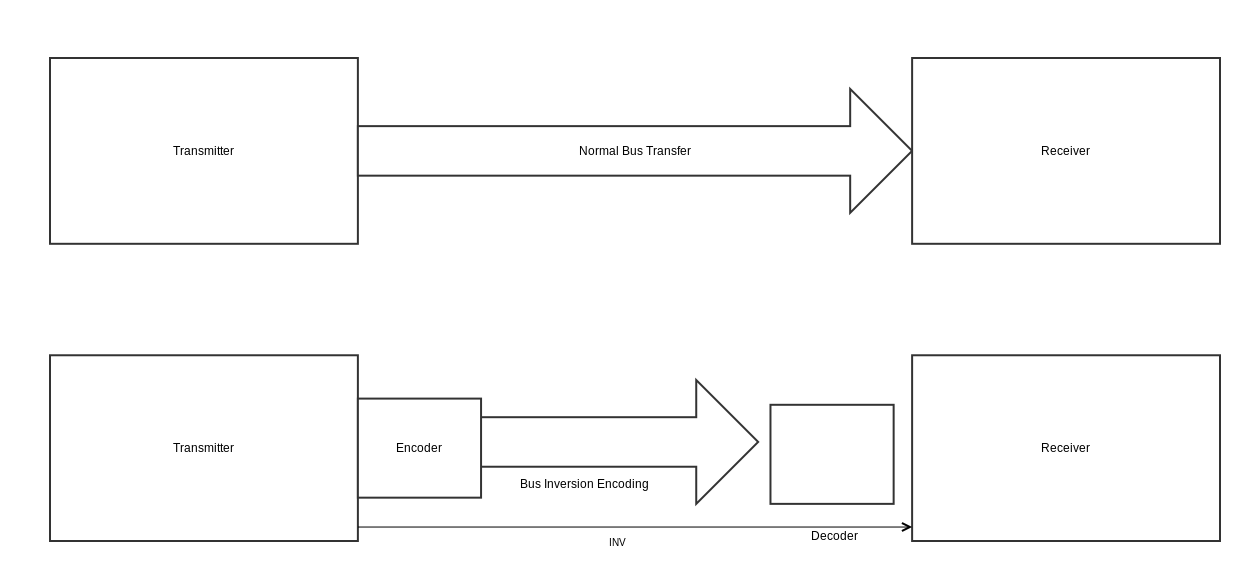

Inversion encoding is a technique for encoding bus transmissions in low-power systems. It is based on the fact that a large amount of power is wasted on signal transitions, especially on external buses, so reducing the number of transitions reduces power dissipation. This is done by introducing an additional signal line, named INV, alongside the bus lines. This signal indicates whether the other lines are to be inverted or not.

Overview: The bus-invert encoding technique uses an extra signal (INV) to indicate the "polarity" of the data. Given a bus-invert code word INV@x, where @ is the concatenation operator and x denotes either the source word or its ones' complement, the bus-invert decoder takes the code word and produces the corresponding source word. If the INV signal is 1, the result is the ones' complement of x; otherwise it is x.

Usage scenarios: high-capacitance lines; high switching activity.

Bus-invert method: The Hamming distance (the number of bi ...
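The summary above is cut off before the bus-invert method is spelled out, so the following is only a minimal sketch under a stated assumption: the encoder inverts the word whenever more than half of the data lines would otherwise toggle, which is how the rule is commonly described. The function names and the `width` parameter are hypothetical, and the INV line's own transitions are not counted here.

```python
def hamming_distance(a: int, b: int) -> int:
    """Number of bit positions in which a and b differ."""
    return bin(a ^ b).count("1")


def bus_invert_encode(word: int, prev_bus: int, width: int) -> tuple[int, int]:
    """Return (inv, x) for the next source word, given the value now on the bus."""
    mask = (1 << width) - 1
    word &= mask
    # Assumed rule: invert when more than half of the data lines would toggle.
    if 2 * hamming_distance(word, prev_bus & mask) > width:
        return 1, ~word & mask  # send the ones' complement, INV = 1
    return 0, word              # send the word unchanged, INV = 0


def bus_invert_decode(inv: int, x: int, width: int) -> int:
    """Recover the source word: ones' complement of x if INV is 1, else x."""
    mask = (1 << width) - 1
    return (~x & mask) if inv else (x & mask)


if __name__ == "__main__":
    bus = 0x00
    for word in (0xFF, 0xFE, 0x01):
        inv, x = bus_invert_encode(word, bus, width=8)
        assert bus_invert_decode(inv, x, width=8) == word
        print(f"word={word:08b} inv={inv} x={x:08b}")
        bus = x  # the transmitted value becomes the new bus state
```

Under this rule, at most half of the data lines change between consecutive transfers, at the cost of one extra line.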

Bus Encoding

Bus encoding refers to converting (encoding) a piece of data into another form before launching it on the bus. While bus encoding can serve various purposes, such as reducing the number of pins, compressing the data to be transmitted, or reducing cross-talk between bit lines, it is one of the popular techniques used in system design to reduce the dynamic power consumed by the system bus. Bus encoding aims to reduce the Hamming distance between two consecutive values on the bus. Since the switching activity is directly proportional to the Hamming distance, bus encoding is effective in reducing the overall activity factor, thereby reducing the dynamic power consumption of the system. In the context of this article, a system can refer to anything in which data is transferred from one element to another over a bus (e.g. a System on a Chip (SoC), a computer system, or an embedded system on a board).

Motivation: Power consumption in electronic systems is a matter of concern today ...
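To make the link between Hamming distance and bus activity concrete, the sketch below counts how many lines toggle over a sequence of bus values. The function name `bit_transitions` and the `width` parameter are hypothetical; this is an illustration of the idea, not a power model.

```python
def bit_transitions(values: list[int], width: int) -> int:
    """Total number of line toggles when `values` are sent back to back."""
    mask = (1 << width) - 1
    total = 0
    for prev, cur in zip(values, values[1:]):
        # The Hamming distance between consecutive values is the number
        # of bus lines that change state for this transfer.
        total += bin((prev ^ cur) & mask).count("1")
    return total


if __name__ == "__main__":
    worst_case = [0x00, 0xFF, 0x00, 0xFF]  # every line toggles on each transfer
    gentle = [0x00, 0x01, 0x03, 0x07]      # one line toggles on each transfer
    print(bit_transitions(worst_case, 8), bit_transitions(gentle, 8))
```

An encoding that lowers this count for typical traffic lowers the activity factor by the same proportion.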

Power Dissipation

In thermodynamics, dissipation is the result of an irreversible process that takes place in homogeneous thermodynamic systems. In a dissipative process, energy (internal, bulk flow kinetic, or system potential) transforms from an initial form to a final form, where the capacity of the final form to do thermodynamic work is less than that of the initial form. For example, heat transfer is dissipative because it is a transfer of internal energy from a hotter body to a colder one. Following the second law of thermodynamics, the entropy varies with temperature (reduces the capacity of the combination of the two bodies to do work), but never decreases in an isolated system. These processes produce entropy at a certain rate. The entropy production rate times ambient temperature gives the dissipated power. Important examples of irreversible processes are: heat flow through a thermal resistance, fluid flow through a flow resistance, diffusion (mixing), chemical reactions, and electric cu ...

Ones' Complement

The ones' complement of a binary number is the value obtained by inverting all the bits in the binary representation of the number (swapping 0s and 1s). The name "ones' complement" (note that this is the possessive of the plural "ones", not of a singular "one") refers to the fact that such an inverted value, if added to the original, always produces an "all ones" number (the term "complement" refers to such pairs of mutually additive inverse numbers, here with respect to a non-0 base number). This mathematical operation is primarily of interest in computer science, where it has varying effects depending on how a specific computer represents numbers. A ones' complement system, or ones' complement arithmetic, is a system in which negative numbers are represented by the inverse of the binary representations of their corresponding positive numbers. In such a system, a number is negated (converted from positive to negative or vice versa) by computing its ones' complement. An N-bit ones ...
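The "all ones" property described above can be checked directly. This is a small sketch for a fixed word size; the function name `ones_complement` and the `bits` parameter are hypothetical.

```python
def ones_complement(value: int, bits: int) -> int:
    """Invert every bit of `value` within a word of `bits` bits."""
    mask = (1 << bits) - 1  # e.g. 0b1111 for bits=4
    return ~value & mask


if __name__ == "__main__":
    bits = 4
    for value in range(1 << bits):
        inverted = ones_complement(value, bits)
        # A value plus its ones' complement is always the all-ones word.
        assert value + inverted == (1 << bits) - 1
    print(f"{ones_complement(0b0110, bits):04b}")
```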

Hamming Distance

In information theory, the Hamming distance between two strings of equal length is the number of positions at which the corresponding symbols are different. In other words, it measures the minimum number of substitutions required to change one string into the other, or the minimum number of errors that could have transformed one string into the other. In a more general context, the Hamming distance is one of several string metrics for measuring the edit distance between two sequences. It is named after the American mathematician Richard Hamming. A major application is in coding theory, more specifically to block codes, in which the equal-length strings are vectors over a finite field.

Definition: The Hamming distance between two equal-length strings of symbols is the number of positions at which the corresponding symbols are different.

Examples: The symbols may be letters, bits, or decimal digits, among other possibilities. For example, the Hamming distance betwe ...
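The definition translates almost word for word into code. The sketch below is illustrative only; the function name `string_hamming_distance` and the sample strings are chosen for this example and are not taken from the truncated text above.

```python
def string_hamming_distance(s: str, t: str) -> int:
    """Count the positions at which two equal-length strings differ."""
    if len(s) != len(t):
        raise ValueError("Hamming distance is only defined for equal-length strings")
    return sum(a != b for a, b in zip(s, t))


if __name__ == "__main__":
    print(string_hamming_distance("karolin", "kathrin"))  # letters
    print(string_hamming_distance("1011101", "1001001"))  # bits
```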

Two's Complement

Two's complement is a mathematical operation to reversibly convert a positive binary number into a negative binary number with equivalent (but negative) value, using the binary digit with the greatest place value (the leftmost bit in big-endian numbers, rightmost bit in little-endian numbers) to indicate whether the binary number is positive or negative (the sign). It is used in computer science as the most common method of representing signed (positive, negative, and zero) integers on computers, and more generally, fixed-point binary values. When the most significant bit is a one, the number is signed as negative. Two's complement is executed by 1) inverting (i.e. flipping) all bits, then 2) adding a place value of 1 to the inverted number. For example, say the number −6 is of interest. +6 in binary is 0110 (the leftmost, most significant bit is needed for the sign; positive 6 is not 110 because it would be interpreted as −2). Step one is to flip all bits, yielding 1001. ...
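The two steps described above (flip all bits, then add 1) can be sketched for a fixed word size as follows. The function names `twos_complement` and `to_signed` and the `bits` parameter are hypothetical.

```python
def twos_complement(value: int, bits: int) -> int:
    """Negate `value` within `bits` bits: invert all bits, then add 1."""
    mask = (1 << bits) - 1
    inverted = ~value & mask      # step 1: flip every bit
    return (inverted + 1) & mask  # step 2: add 1, discarding any carry out


def to_signed(pattern: int, bits: int) -> int:
    """Interpret a `bits`-wide bit pattern as a two's complement integer."""
    sign_bit = 1 << (bits - 1)
    return pattern - (1 << bits) if pattern & sign_bit else pattern


if __name__ == "__main__":
    pattern = twos_complement(0b0110, 4)       # start from +6 in four bits
    print(f"{pattern:04b}", to_signed(pattern, 4))
    print(to_signed(0b110, 3))                 # why +6 needs a fourth bit
```

Applying `twos_complement` twice returns the original pattern, which is what makes the operation reversible.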

Signed Number Representation

In computing, signed number representations are required to encode negative numbers in binary number systems. In mathematics, negative numbers in any base are represented by prefixing them with a minus sign ("−"). However, in RAM or CPU registers, numbers are represented only as sequences of bits, without extra symbols. The four best-known methods of extending the binary numeral system to represent signed numbers are: sign–magnitude, ones' complement, two's complement, and offset binary. Some of the alternative methods use implicit instead of explicit signs, such as negative binary, using the base −2. Corresponding methods can be devised for other bases, whether positive, negative, fractional, or other elaborations on such themes. There is no definitive criterion by which any of the representations is universally superior. For integers, the representation used in most current computing devices is two's complement, although the Unisys ClearPath Dorado series mainfram ...
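As a rough side-by-side comparison of the four methods named above, the sketch below encodes one integer in each of them. The function name `encode_signed` is hypothetical, the magnitude is assumed to fit in `bits - 1` bits, and the offset-binary bias of 2^(bits−1) is an assumed (though common) convention.

```python
def encode_signed(n: int, bits: int) -> dict[str, int]:
    """Bit patterns for n under four signed representations (illustrative)."""
    mask = (1 << bits) - 1
    magnitude = abs(n)                  # assumed to fit in bits - 1 bits
    sign = (1 << (bits - 1)) if n < 0 else 0
    return {
        "sign-magnitude": sign | magnitude,
        "ones' complement": (~magnitude & mask) if n < 0 else magnitude,
        "two's complement": n & mask,
        # Assumed bias of 2**(bits - 1).
        "offset binary": (n + (1 << (bits - 1))) & mask,
    }


if __name__ == "__main__":
    for name, pattern in encode_signed(-6, 8).items():
        print(f"{name:>17}: {pattern:08b}")
```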

Input/output

In computing, input/output (I/O, or informally io or IO) is the communication between an information processing system, such as a computer, and the outside world, possibly a human or another information processing system. Inputs are the signals or data received by the system and outputs are the signals or data sent from it. The term can also be used as part of an action; to "perform I/O" is to perform an input or output operation. I/O devices are the pieces of hardware used by a human (or other system) to communicate with a computer. For instance, a keyboard or computer mouse is an input device for a computer, while monitors and printers are output devices. Devices for communication between computers, such as modems and network cards, typically perform both input and output operations. Any interaction with the system by an interactor is an input, and the system's reaction to it is called the output. The designation of a device as either input or output depends on perspective. ...

Coding Theory

Coding theory is the study of the properties of codes and their respective fitness for specific applications. Codes are used for data compression, cryptography, error detection and correction, data transmission, and data storage. Codes are studied by various scientific disciplines (such as information theory, electrical engineering, mathematics, linguistics, and computer science) for the purpose of designing efficient and reliable data transmission methods. This typically involves the removal of redundancy and the correction or detection of errors in the transmitted data. There are four types of coding: (1) data compression (or source coding), (2) error control (or channel coding), (3) cryptographic coding, and (4) line coding. Data compression attempts to remove unwanted redundancy from the data from a source in order to transmit it more efficiently. For example, ZIP data compression makes data files smaller, for purposes such as reducing Internet traffic. Data compressio ...

Uniform Distribution (continuous)

In probability theory and statistics, the continuous uniform distribution or rectangular distribution is a family of symmetric probability distributions. The distribution describes an experiment where there is an arbitrary outcome that lies between certain bounds. The bounds are defined by the parameters a and b, which are the minimum and maximum values. The interval can be either closed (e.g. [a, b]) or open (e.g. (a, b)). Therefore, the distribution is often abbreviated U(a, b), where U stands for uniform distribution. The difference between the bounds defines the interval length; all intervals of the same length on the distribution's support are equally probable. It is the maximum entropy probability distribution for a random variable X under no constraint other than that it is contained in the distribution's support.

Definitions: Probability density function. The probability density function of the continuous uniform distribution is: f(x)=\be ...

Independence (probability Theory)

Independence is a fundamental notion in probability theory, as in statistics and the theory of stochastic processes. Two events are independent, statistically independent, or stochastically independent if, informally speaking, the occurrence of one does not affect the probability of occurrence of the other or, equivalently, does not affect the odds. Similarly, two random variables are independent if the realization of one does not affect the probability distribution of the other. When dealing with collections of more than two events, two notions of independence need to be distinguished. The events are called pairwise independent if any two events in the collection are independent of each other, while mutual independence (or collective independence) of events means, informally speaking, that each event is independent of any combination of other events in the collection. A similar notion exists for collections of random variables. Mutual independence implies pairwise independe ...
