
High Performance Computing

High-performance computing (HPC) is the use of supercomputers and computer clusters to solve advanced computational problems. Overview: HPC integrates systems administration (including network and security knowledge) and parallel programming into a multidisciplinary field that combines digital electronics, computer architecture, system software, programming languages, algorithms, and computational techniques. HPC technologies are the tools and systems used to build and operate high-performance computing systems. Recently, HPC systems have shifted from supercomputing to computing clusters and grids. Because clusters and grids depend on networking, HPC technologies are often built around a collapsed network backbone: the architecture is simple to troubleshoot, and upgrades can be applied to a single router rather than to several. HPC integrates with data analytics in AI engineering workflows to generate new ...

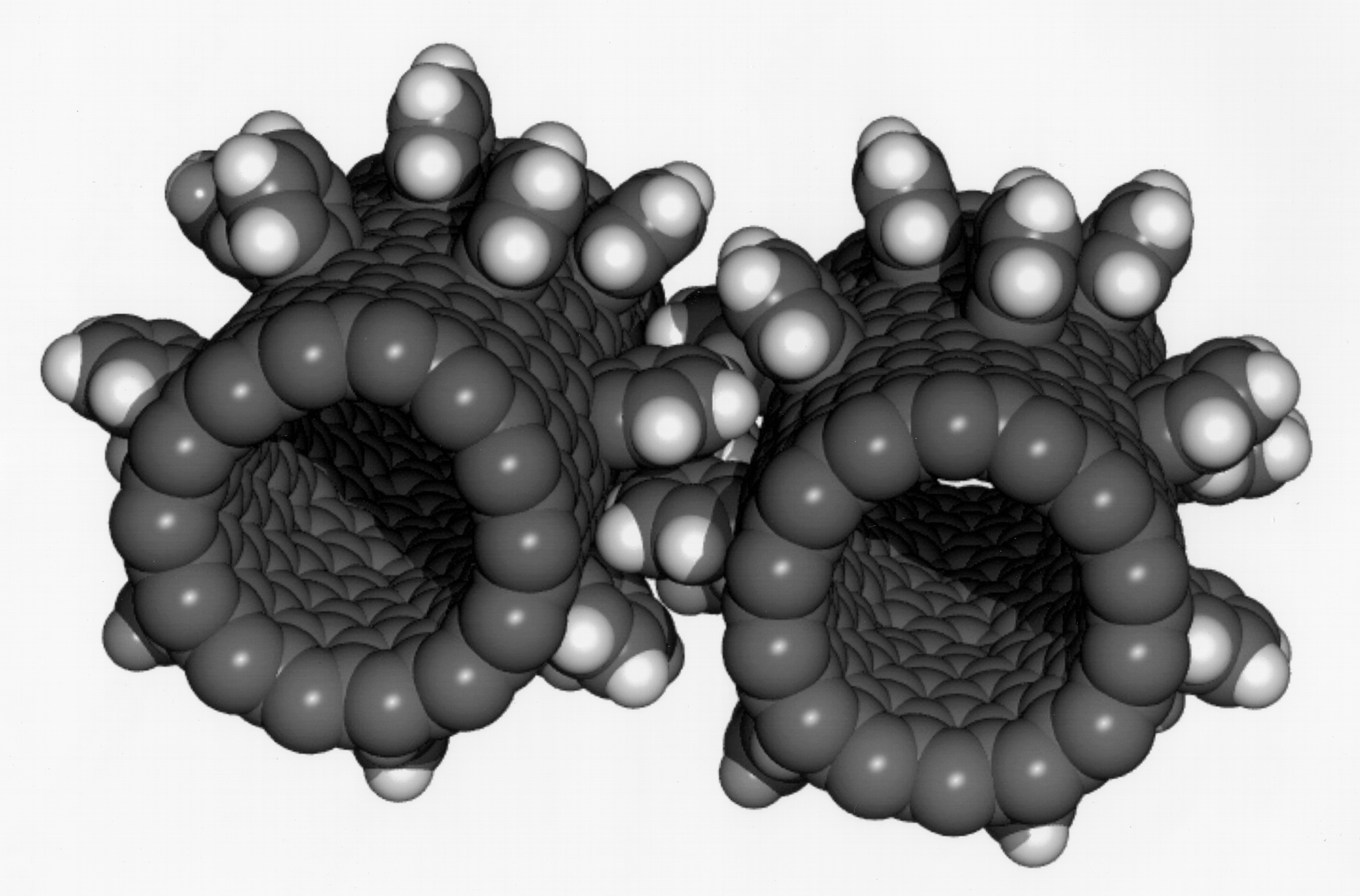

Nanoscience High-Performance Computing Facility

Nanotechnology is the manipulation of matter with at least one dimension sized from 1 to 100 nanometers (nm). At this scale, commonly known as the nanoscale, surface area and quantum mechanical effects become important in describing properties of matter. This definition of nanotechnology includes all types of research and technologies that deal with these special properties. It is common to see the plural form "nanotechnologies" as well as "nanoscale technologies" to refer to research and applications whose common trait is scale. An earlier understanding of nanotechnology referred to the particular technological goal of precisely manipulating atoms and molecules for fabricating macroscale products, now referred to as molecular nanotechnology. Nanotechnology defined by scale includes fields of science such as surface science, organic chemistry, molecular biology, semiconductor physics, energy storage, engineering, microfabrication, and molecular engineering. The associated rese ...

Business

Business is the practice of making one's living or making money by producing or buying and selling products (such as goods and services). It is also "any activity or enterprise entered into for profit." A business entity is not necessarily separate from its owner, and creditors can hold the owner liable for debts the business has acquired, except in the case of a limited liability company. The taxation system for businesses is different from that of corporations. A business structure does not allow for corporate tax rates. The proprietor is personally taxed on all income from the business. A distinction is made in law and public offices between the term business and a company (such as a corporation or cooperative). Colloquially, the terms are used interchangeably. Corporations are distinct from sole proprietors and partnerships. Corporations are separate and unique legal entities from their shareholde ...

OS-level Virtualization

OS-level virtualization is an operating system (OS) virtualization paradigm in which the kernel allows the existence of multiple isolated user space instances, including containers (LXC, Solaris Containers, AIX WPARs, HP-UX SRP Containers, Docker, Podman), zones (Solaris Containers), virtual private servers (OpenVZ), partitions, virtual environments (VEs), virtual kernels (DragonFly BSD), and jails (FreeBSD jail and chroot). Such instances may look like real computers from the point of view of programs running in them. A computer program running on an ordinary operating system can see all resources (connected devices, files and folders, network shares, CPU power, quantifiable hardware capabilities) of that computer. Programs running inside a container can only see the container's contents and devices assigned to the container. On Unix-like operating systems, this feature can be seen as an advanced implementation of the standard chroot mechanism, which changes the apparen ...

Cloud Computing

Cloud computing is "a paradigm for enabling network access to a scalable and elastic pool of shareable physical or virtual resources with self-service provisioning and administration on-demand," according to the International Organization for Standardization (ISO).

Essential characteristics: In 2011, the National Institute of Standards and Technology (NIST) identified five "essential characteristics" for cloud systems. Below are the exact definitions according to NIST:

* On-demand self-service: "A consumer can unilaterally provision computing capabilities, such as server time and network storage, as needed automatically without requiring human interaction with each service provider."
* Broad network access: "Capabilities are available over the network and accessed through standard mechanisms that promote use by heterogeneous thin or thick client platforms (e.g., mobile phones, tablets, laptops, and workstations)."
* Resource pooling: "The provider' ...

On-premises Software

On-premises software (abbreviated to on-prem, and often written as "on-premise") is installed and runs on computers on the premises of the person or organization using the software, rather than at a remote facility such as a server farm or cloud. On-premises software is sometimes referred to as "shrinkwrap" software, and off-premises software is commonly called "software as a service" ("SaaS") or "cloud computing". The software consists of a database and modules that are combined to serve the particular needs of large organizations in automating corporate-wide business systems and their functions. Comparison between on-premises and cloud (SaaS). Location: On-premises software is installed within the organisation's internal systems, along with the hardware and other infrastructure necessary for the software to function. Cloud-based software is usually served via the internet and can be accessed by users online regardless of time and location. Un ...

Jack Dongarra

Jack Joseph Dongarra (born July 18, 1950) is an American computer scientist and mathematician. He is a University Distinguished Professor Emeritus of Computer Science in the Electrical Engineering and Computer Science Department at the University of Tennessee. He is a Distinguished Research Staff member in the Computer Science and Mathematics Division at Oak Ridge National Laboratory, holds a Turing Fellowship in the School of Mathematics at the University of Manchester, and is an adjunct professor in the Computer Science Department at Rice University. He served as a faculty fellow at the Texas A&M University Institute for Advanced Study (2014–2018). Dongarra is the founding director of the Innovative Computing Laboratory at the University of Tennessee. He was the recipient of the Turing Award in 2021. Education: Dongarra received a BSc degree in mathematics from Chicago State University in 1972 and an MSc degree in Computer Science from the Illinois In ...

HPL (benchmark)

The LINPACK benchmarks are a measure of a system's floating-point computing power. Introduced by Jack Dongarra, they measure how fast a computer solves a dense n × n system of linear equations Ax = b, which is a common task in engineering. The latest version of these benchmarks is used to build the TOP500 list, ranking the world's most powerful supercomputers. The aim is to approximate how fast a computer will perform when solving real problems. It is a simplification, since no single computational task can reflect the overall performance of a computer system. Nevertheless, the LINPACK benchmark performance can provide a good correction over the peak performance provided by the manufacturer. The peak performance is the maximal theoretical performance a computer can achieve, calculated as the machine's frequency, in cycles per second, times the number of operations per cycle it can perform. The actual performance will always be lower than the ...
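
The two quantities described above can be sketched in a few lines of Python. This is only an illustration, not the HPL code: `peak_flops` applies the stated frequency-times-operations-per-cycle formula, `solve_dense` is a plain Gaussian elimination with partial pivoting standing in for the benchmark's solver, and `linpack_flop_count` uses the operation count conventionally quoted for LINPACK (2/3 n³ + 2 n²), which is an assumption not stated in the text. All function names and the sample numbers are hypothetical.

```python
import random
import time


def peak_flops(frequency_hz, ops_per_cycle):
    """Theoretical peak: cycles per second times operations per cycle."""
    return frequency_hz * ops_per_cycle


def linpack_flop_count(n):
    """Conventional operation count for solving a dense n x n system
    (assumption: 2/3 n^3 + 2 n^2, not taken from the text above)."""
    return (2.0 / 3.0) * n**3 + 2.0 * n**2


def solve_dense(a, b):
    """Solve Ax = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    # Work on an augmented copy so the caller's data is not modified.
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        # Pick the row with the largest entry in this column as the pivot.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if m[pivot][col] == 0.0:
            raise ValueError("matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


if __name__ == "__main__":
    n = 60  # tiny problem size, chosen only to keep the demo fast
    rng = random.Random(0)
    a = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    b = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    start = time.perf_counter()
    solve_dense(a, b)
    elapsed = time.perf_counter() - start
    measured = linpack_flop_count(n) / elapsed
    # Hypothetical machine: 2 GHz, 4 floating-point operations per cycle.
    peak = peak_flops(2.0e9, 4)
    print(f"measured: {measured:.3e} flop/s, fraction of peak: {measured / peak:.2%}")
```

A pure-Python solver will reach only a tiny fraction of any machine's peak; the point of the sketch is the relationship between the measured rate and the theoretical ceiling, not the absolute numbers.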

Los Alamos National Laboratory

Los Alamos National Laboratory (often shortened as Los Alamos and LANL) is one of the sixteen research and development laboratories of the United States Department of Energy (DOE), located a short distance northwest of Santa Fe, New Mexico, in the American Southwest. Best known for its central role in helping develop the first atomic bomb, LANL is one of the world's largest and most advanced scientific institutions. Los Alamos was established in 1943 as Project Y, a top-secret site for designing nuclear weapons under the Manhattan Project during World War II. The site was variously called Los Alamos Laboratory and Los Alamos Scientific Laboratory. Chosen for its remote yet relatively accessible location, it served as the main hub for conducting and coordinating nuclear research, bringing together some of the world's most famous scientists, among them numerous Nobel ...

United States Department Of Energy

The United States Department of Energy (DOE) is an executive department of the U.S. federal government that oversees U.S. national energy policy and energy production, the research and development of nuclear power, the military's nuclear weapons program, nuclear reactor production for the United States Navy, energy-related research, and energy conservation. The DOE was created in 1977 in the aftermath of the 1973 oil crisis. It sponsors more physical science research than any other U.S. federal agency, the majority of which is conducted through its system of National Laboratories. The DOE also directs research in genomics, with the Human Genome Project originating from a DOE initiative. The department is headed by the secretary of energy, who reports directly to the president of the United States and is a member of the Cabinet. The current secretary of energy is Chris Wright, who has served in the position since February 2025. The department's headquarters are in sou ...

IBM Roadrunner

Roadrunner was a supercomputer built by IBM for the Los Alamos National Laboratory in New Mexico, USA. The US$100-million Roadrunner was designed for a peak performance of 1.7 petaflops. It achieved 1.026 petaflops on May 25, 2008, to become the world's first TOP500 LINPACK sustained 1.0 petaflops system. In November 2008, it reached a top performance of 1.456 petaflops, retaining its top spot in the TOP500 list. It was also the fourth-most energy-efficient supercomputer in the world on the Supermicro Green500 list, with an operational rate of 444.94 megaflops per watt of power used. The hybrid Roadrunner design was then reused for several other energy-efficient supercomputers. Roadrunner was decommissioned by Los Alamos on March 31, 2013. In its place, Los Alamos commissioned a supercomputer called Cielo, which was installed in 2010. Overview: IBM built the computer for the U.S. Department of Energy's (DOE) National Nuclear Security Administration (NNSA). It was a hybrid ...
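
The figures quoted above lend themselves to a short arithmetic sketch. The function names are hypothetical, and one pairing is an assumption: the text does not say which run the 444.94 megaflops-per-watt figure was measured on, so combining it with the May 2008 result gives only a rough, illustrative power estimate.

```python
PETA = 1.0e15
MEGA = 1.0e6


def fraction_of_peak(sustained_flops, peak_flops):
    """Ratio of sustained (measured) performance to theoretical peak."""
    if peak_flops <= 0:
        raise ValueError("peak_flops must be positive")
    return sustained_flops / peak_flops


def implied_power_watts(sustained_flops, flops_per_watt):
    """Power draw implied by a performance figure and an efficiency figure."""
    if flops_per_watt <= 0:
        raise ValueError("flops_per_watt must be positive")
    return sustained_flops / flops_per_watt


if __name__ == "__main__":
    # May 2008 LINPACK result against the 1.7 petaflops design peak.
    ratio = fraction_of_peak(1.026 * PETA, 1.7 * PETA)
    print(f"sustained / peak: {ratio:.1%}")

    # Assumption: the efficiency figure is applied to the same run.
    watts = implied_power_watts(1.026 * PETA, 444.94 * MEGA)
    print(f"implied power: {watts / MEGA:.2f} MW")
```

The first ratio comes out at roughly 60 percent, which is consistent with the general point that sustained benchmark performance sits below the theoretical peak.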

Galaxy Formation And Evolution

In cosmology, the study of galaxy formation and evolution is concerned with the processes that formed a heterogeneous universe from a homogeneous beginning, the formation of the first galaxies, the way galaxies change over time, and the processes that have generated the variety of structures observed in nearby galaxies. Galaxy formation is hypothesized to occur from structure formation theories, as a result of tiny quantum fluctuations in the aftermath of the Big Bang. The simplest model in general agreement with observed phenomena is the Lambda-CDM model: that is, clustering and merging allow galaxies to accumulate mass, determining both their shape and structure. Hydrodynamics simulation, which simulates both baryons and dark matter, is widely used to study galaxy formation and evolution. Commonly observed properties of galaxies: Because of the inability to conduct experiments in outer space, the only way to “test” theories and models of galaxy evolution is to compare th ...

Multiprocessing

Multiprocessing (MP) is the use of two or more central processing units (CPUs) within a single computer system. The term also refers to the ability of a system to support more than one processor or the ability to allocate tasks between them. There are many variations on this basic theme, and the definition of multiprocessing can vary with context, mostly as a function of how CPUs are defined (multiple cores on one die, multiple dies in one package, multiple packages in one system unit, etc.). A multiprocessor is a computer system having two or more processing units (multiple processors) each sharing main memory and peripherals, in order to simultaneously process programs. A 2009 textbook defined multiprocessor system similarly, but noted that the processors may share "some or all of the system’s memory and I/O facilities"; it also gave tightly coupled system as a synonymous term. At the operating system level, multiprocessing is sometimes used to refer to the executi ...
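
As a rough illustration of allocating tasks between processors, the sketch below splits a sum into contiguous chunks and hands each chunk to a worker process using Python's standard `multiprocessing.Pool`. The helper names (`split_range`, `sum_of_squares`, `parallel_sum_of_squares`) and the problem itself are hypothetical; whether the worker processes actually run on separate CPUs is decided by the operating system scheduler, not by this code.

```python
from multiprocessing import Pool


def split_range(n, parts):
    """Split range(n) into `parts` contiguous (start, stop) chunks."""
    step, extra = divmod(n, parts)
    chunks = []
    start = 0
    for i in range(parts):
        # The first `extra` chunks take one additional element each.
        stop = start + step + (1 if i < extra else 0)
        chunks.append((start, stop))
        start = stop
    return chunks


def sum_of_squares(bounds):
    """Work done by one worker: sum i*i over its own chunk."""
    start, stop = bounds
    return sum(i * i for i in range(start, stop))


def parallel_sum_of_squares(n, workers):
    """Distribute the chunks over a pool of worker processes and combine."""
    with Pool(processes=workers) as pool:
        partial_sums = pool.map(sum_of_squares, split_range(n, workers))
    return sum(partial_sums)


if __name__ == "__main__":
    # The guard keeps worker processes from re-running this block
    # on platforms that start workers by re-importing the main module.
    print(parallel_sum_of_squares(1_000_000, 4))
```

Each worker receives only its own `(start, stop)` bounds and returns a single number, so very little data moves between processes; that keeps the example close to the idea of dividing independent tasks among processing units.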