
Empirical Orthogonal Functions

In statistics and signal processing, empirical orthogonal function (EOF) analysis is a decomposition of a signal or data set in terms of orthogonal basis functions that are determined from the data. In geophysics, the term is used interchangeably with geographically weighted principal component analysis. The ''i''th basis function is chosen to be orthogonal to the first through (''i'' − 1)th basis functions and to minimize the residual variance. That is, the basis functions are chosen to be different from each other and to account for as much variance as possible. EOF analysis is similar in spirit to harmonic analysis, but harmonic analysis typically uses predetermined orthogonal functions, for example sine and cosine functions at fixed frequencies. In some cases the two methods may yield essentially the same results. The basis functions are typically found by computing the eigenvectors of the covariance matrix of the data set. A m ...
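
As a rough illustration of finding the basis functions from the eigenvectors of the covariance matrix, here is a minimal standard-library sketch. It uses power iteration with deflation in place of a full eigendecomposition, which is an assumption made to keep the example dependency-free; the function names (`covariance_matrix`, `leading_eigenpair`, `eofs`) are hypothetical and do not come from any particular library.

```python
import math
import random


def covariance_matrix(rows):
    """Sample covariance of the columns of `rows` (one observation per row)."""
    n, p = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(p)]
    centered = [[r[j] - means[j] for j in range(p)] for r in rows]
    return [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(p)]
            for i in range(p)]


def leading_eigenpair(mat, iterations=500, seed=0):
    """Power iteration: (eigenvalue, unit eigenvector) for the largest eigenvalue."""
    p = len(mat)
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    vec = [rng.uniform(-1.0, 1.0) for _ in range(p)]
    for _ in range(iterations):
        nxt = [sum(mat[i][j] * vec[j] for j in range(p)) for i in range(p)]
        norm = math.sqrt(sum(x * x for x in nxt))
        if norm == 0.0:
            break
        vec = [x / norm for x in nxt]
    # Rayleigh quotient v^T M v (vec has unit length after normalisation)
    value = sum(vec[i] * sum(mat[i][j] * vec[j] for j in range(p))
                for i in range(p))
    return value, vec


def eofs(rows, count):
    """First `count` EOFs as (variance, basis vector) pairs, largest variance first."""
    cov = covariance_matrix(rows)
    p = len(cov)
    result = []
    for _ in range(count):
        value, vec = leading_eigenpair(cov)
        result.append((value, vec))
        # Deflation: remove the variance already explained by this EOF.
        cov = [[cov[i][j] - value * vec[i] * vec[j] for j in range(p)]
               for i in range(p)]
    return result
```

Each returned vector is orthogonal to the ones before it (up to rounding), and the paired eigenvalue is the variance that the vector accounts for. In practice a library eigensolver or a singular value decomposition would normally be preferred over power iteration.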

Statistics

Statistics (from German ''Statistik'', "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments (Dodge, Y. (2006), ''The Oxford Dictionary of Statistical Terms'', Oxford University Press). When census data cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling as ...

Kernel Trick

In machine learning, kernel machines are a class of algorithms for pattern analysis, whose best-known member is the support-vector machine (SVM). The general task of pattern analysis is to find and study general types of relations (for example clusters, rankings, principal components, correlations, classifications) in datasets. For many algorithms that solve these tasks, the data in raw representation have to be explicitly transformed into feature vector representations via a user-specified ''feature map''; in contrast, kernel methods require only a user-specified ''kernel'', i.e., a similarity function over all pairs of data points computed using inner products. The feature map in kernel machines may be infinite dimensional, but by the representer theorem only a finite-dimensional matrix computed from user input is required. Kernel machines are slow to compute for datasets larger than a couple of thousand examples without parallel processing. Kernel methods owe their name to the ...
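
To make the contrast between an explicit feature map and a kernel concrete, the following sketch compares the two for a homogeneous degree-2 polynomial kernel on 2-D points. The choice of kernel and the helper names (`poly2_kernel`, `poly2_features`, `gram_matrix`) are illustrative assumptions, not taken from the text above.

```python
import math


def dot(x, y):
    """Ordinary inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(x, y))


def poly2_kernel(x, y):
    """Homogeneous degree-2 polynomial kernel: (x . y) ** 2."""
    return dot(x, y) ** 2


def poly2_features(x):
    """Explicit feature map for 2-D input whose inner product equals poly2_kernel."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2.0) * x1 * x2, x2 * x2)


def gram_matrix(points, kernel):
    """Matrix of kernel values over all pairs of data points."""
    return [[kernel(p, q) for q in points] for p in points]


points = [(1.0, 2.0), (3.0, -1.0), (0.5, 0.5)]
via_kernel = gram_matrix(points, poly2_kernel)
via_features = gram_matrix(
    points, lambda p, q: dot(poly2_features(p), poly2_features(q)))
```

Both matrices agree up to rounding, but `poly2_kernel` never constructs the feature vectors. For kernels whose feature space is very large or infinite dimensional, only the kernel route is practical.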

Biometrika

''Biometrika'' is a peer-reviewed scientific journal published by Oxford University Press for the Biometrika Trust. The editor-in-chief is Paul Fearnhead (Lancaster University). The principal focus of this journal is theoretical statistics. It was established in 1901 and originally appeared quarterly. It changed to three issues per year in 1977 but returned to quarterly publication in 1992. History: ''Biometrika'' was established in 1901 by Francis Galton, Karl Pearson, and Raphael Weldon to promote the study of biometrics. The history of ''Biometrika'' is covered by Cox (2001). The name of the journal was chosen by Pearson, but Francis Edgeworth insisted that it be spelt with a "k" and not a "c". Since the 1930s, it has been a journal for statistical theory and methodology. Galton's role in the journal was essentially that of a patron; the journal was run by Pearson and Weldon and, after Weldon's death in 1906, by Pearson alone until he died in 1936. In the early days, the American ...

Varimax Rotation

In statistics, a varimax rotation is used to simplify the expression of a particular sub-space in terms of just a few major items each. The actual coordinate system is unchanged; it is the orthogonal basis that is being rotated to align with those coordinates. The sub-space found with principal component analysis or factor analysis is expressed as a dense basis with many non-zero weights, which makes it hard to interpret. Varimax is so called because it maximizes the sum of the variances of the squared loadings (squared correlations between variables and factors). Preserving orthogonality requires that it is a rotation that leaves the sub-space invariant. Intuitively, this is achieved if (a) any given variable has a high loading on a single factor but near-zero loadings on the remaining factors and (b) any given factor is constituted by only a few variables with very high loadings on this factor while the remaining variables have near-zero loadings on this factor. If these cond ...
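
The criterion described above, the sum over factors of the variance of the squared loadings, can be sketched for the two-factor case as follows. This is a brute-force grid search over the rotation angle, chosen here only for clarity; it is not the iterative algorithm that statistical packages typically use, and the names `varimax_criterion`, `rotate`, and `varimax_2d` are hypothetical.

```python
import math


def varimax_criterion(loadings):
    """Sum over factors of the variance of the squared loadings."""
    p = len(loadings)
    total = 0.0
    for j in range(len(loadings[0])):
        squares = [row[j] ** 2 for row in loadings]
        mean = sum(squares) / p
        total += sum((s - mean) ** 2 for s in squares) / p
    return total


def rotate(loadings, theta):
    """Right-multiply a p x 2 loading matrix by a 2 x 2 rotation matrix."""
    c, s = math.cos(theta), math.sin(theta)
    return [[a * c + b * s, -a * s + b * c] for a, b in loadings]


def varimax_2d(loadings, steps=3600):
    """Grid-search the angle in [0, pi/2) that maximises the criterion."""
    angles = (k * (math.pi / 2) / steps for k in range(steps))
    best = max(angles, key=lambda t: varimax_criterion(rotate(loadings, t)))
    return best, rotate(loadings, best)


# Hypothetical loadings: a simple structure deliberately rotated by 30 degrees.
simple = [[1.0, 0.0], [0.9, 0.0], [0.0, 1.0], [0.0, 0.8]]
mixed = rotate(simple, math.radians(30))
theta, rotated = varimax_2d(mixed)
```

Because the transformation is a rotation, each variable's communality (the sum of its squared loadings) is unchanged; only the way the loadings are distributed across the two factors changes. Searching a quarter turn is sufficient because rotating by 90 degrees only swaps the factors and flips a sign.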

Transform Coding

Transform coding is a type of data compression for "natural" data like audio signals or photographic images. The transformation is typically lossless (perfectly reversible) on its own but is used to enable better (more targeted) quantization, which then results in a lower-quality copy of the original input (lossy compression). In transform coding, knowledge of the application is used to choose information to discard, thereby lowering its bandwidth. The remaining information can then be compressed via a variety of methods. When the output is decoded, the result may not be identical to the original input, but is expected to be close enough for the purpose of the application. Colour television (NTSC): One of the most successful transform encoding systems is typically not referred to as such: NTSC color television. After an extensive series of studies in the 1950s, Alda Bedford showed that the human eye has high resolution only for black and white, somewhat less f ...
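
The split between a reversible transform and a lossy quantization step can be sketched with an orthonormal DCT-II. The discrete cosine transform is used here as an assumed example of a transform, since the text above does not name one; the step size and sample values are likewise made up for illustration.

```python
import math


def dct(signal):
    """Orthonormal DCT-II of a list of samples."""
    n = len(signal)
    out = []
    for k in range(n):
        scale = math.sqrt((1.0 if k == 0 else 2.0) / n)
        out.append(scale * sum(x * math.cos(math.pi * (i + 0.5) * k / n)
                               for i, x in enumerate(signal)))
    return out


def idct(coeffs):
    """Inverse of dct (applies the transpose of the orthonormal DCT-II matrix)."""
    n = len(coeffs)
    out = []
    for i in range(n):
        out.append(sum(math.sqrt((1.0 if k == 0 else 2.0) / n) * c
                       * math.cos(math.pi * (i + 0.5) * k / n)
                       for k, c in enumerate(coeffs)))
    return out


def quantize(coeffs, step):
    """Round each coefficient to the nearest multiple of `step` (the lossy part)."""
    return [step * round(c / step) for c in coeffs]


signal = [8.0, 9.0, 10.0, 12.0, 11.0, 9.0, 8.0, 7.0]   # hypothetical samples
exact = idct(dct(signal))                  # transform alone: reversible
lossy = idct(quantize(dct(signal), 4.0))   # transform + quantization: lossy
```

Here `exact` matches `signal` up to floating-point rounding, while `lossy` is only an approximation. Because the transform is orthonormal, the reconstruction error has the same Euclidean norm as the quantization error in the coefficients, so a coarser `step` trades accuracy for fewer distinct values to encode.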

Signal Separation

Source separation, blind signal separation (BSS) or blind source separation, is the separation of a set of source signals from a set of mixed signals, without the aid of information (or with very little information) about the source signals or the mixing process. It is most commonly applied in digital signal processing and involves the analysis of mixtures of signals; the objective is to recover the original component signals from a mixture signal. The classical example of a source separation problem is the cocktail party problem, where a number of people are talking simultaneously in a room (for example, at a cocktail party), and a listener is trying to follow one of the discussions. The human brain can handle this sort of auditory source separation problem, but it is a difficult problem in digital signal processing. This problem is in general highly underdetermined, but useful solutions can be derived under a surprising variety of conditions. Much of the early literature in thi ...

Orthogonal Matrix

In linear algebra, an orthogonal matrix, or orthonormal matrix, is a real square matrix whose columns and rows are orthonormal vectors. One way to express this is $Q^\mathrm{T} Q = Q Q^\mathrm{T} = I$, where $Q^\mathrm{T}$ is the transpose of $Q$ and $I$ is the identity matrix. This leads to the equivalent characterization: a matrix $Q$ is orthogonal if its transpose is equal to its inverse: $Q^\mathrm{T} = Q^{-1}$, where $Q^{-1}$ is the inverse of $Q$. An orthogonal matrix $Q$ is necessarily invertible (with inverse $Q^{-1} = Q^\mathrm{T}$), unitary ($Q^{-1} = Q^*$), where $Q^*$ is the Hermitian adjoint (conjugate transpose) of $Q$, and therefore normal ($Q^* Q = Q Q^*$) over the real numbers. The determinant of any orthogonal matrix is either +1 or −1. As a linear transformation, an orthogonal matrix preserves the inner product of vectors, and therefore acts as an isometry of Euclidean space, such as a rotation, reflection or rotoreflection. In other words, it is a unitary transformation. The set of $n \times n$ orthogonal matrices, under multiplication, forms the group $O(n)$, known as the orthogonal gr ...
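
The defining property, the determinant, and the preservation of inner products can be checked numerically on small examples. The sketch below uses plain nested lists; the helper names (`transpose`, `matmul`, `is_orthogonal`, `det2`, `apply`) and the sample matrices are chosen for illustration only.

```python
import math


def transpose(m):
    """Transpose of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*m)]


def matmul(a, b):
    """Matrix product of two lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def is_orthogonal(q, tol=1e-12):
    """True if Q^T Q equals the identity within `tol` (Q assumed square)."""
    product = matmul(transpose(q), q)
    n = len(q)
    return all(abs(product[i][j] - (1.0 if i == j else 0.0)) <= tol
               for i in range(n) for j in range(n))


def det2(m):
    """Determinant of a 2 x 2 matrix."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


def apply(m, v):
    """Matrix-vector product."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


theta = math.pi / 6
rotation = [[math.cos(theta), -math.sin(theta)],
            [math.sin(theta), math.cos(theta)]]
reflection = [[1.0, 0.0], [0.0, -1.0]]
shear = [[1.0, 1.0], [0.0, 1.0]]  # invertible, but not orthogonal
```

`is_orthogonal` accepts the rotation (determinant +1) and the reflection (determinant −1) and rejects the shear, which has determinant 1 but does not preserve lengths. This illustrates that a determinant of ±1 is necessary but not sufficient for orthogonality.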

Nonlinear Dimensionality Reduction

Nonlinear dimensionality reduction, also known as manifold learning, refers to various related techniques that aim to project high-dimensional data onto lower-dimensional latent manifolds, with the goal of either visualizing the data in the low-dimensional space, or learning the mapping (either from the high-dimensional space to the low-dimensional embedding or vice versa) itself. The techniques described below can be understood as generalizations of linear decomposition methods used for dimensionality reduction, such as singular value decomposition and principal component analysis. Applications of NLDR: Consider a dataset represented as a matrix (or a database table), such that each row represents a set of attributes (or features or dimensions) that describe a particular instance of something. If the number of attributes is large, then the space of unique possible rows is exponentially large. Thus, the larger the dimensionality, the more difficult it becomes to sample the space ...

Multilinear Subspace Learning

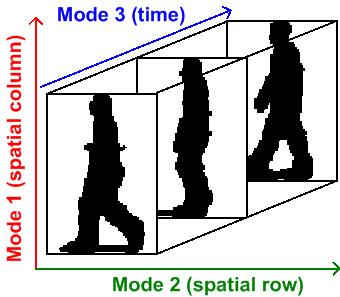

Multilinear subspace learning is an approach to dimensionality reduction (M. A. O. Vasilescu, D. Terzopoulos (2003), "Multilinear Subspace Analysis of Image Ensembles", Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR'03), Madison, WI, June 2003; M. A. O. Vasilescu, D. Terzopoulos (2002), "Multilinear Analysis of Image Ensembles: TensorFaces", Proc. 7th European Conference on Computer Vision (ECCV'02), Copenhagen, Denmark, May 2002; M. A. O. Vasilescu (2002), "Human Motion Signatures: Analysis, Synthesis, Recognition", Proceedings of International Conference on Pattern Recognition (ICPR 2002), Vol. 3, Quebec City, Canada, Aug 2002, 456–460). Dimensionality reduction can be performed on a data tensor that contains a collection of observations that have been vectorized, or observations that are treated as matrices and concatenated into a data tensor (X. He, D. Cai, P. Niyogi, "Tensor subspace analysis", in: Advances in Neural Information Proce ...

Multilinear PCA

Within statistics, multilinear principal component analysis (MPCA) is a multilinear extension of principal component analysis (PCA). MPCA is employed in the analysis of M-way arrays, i.e. a cube or hyper-cube of numbers, also informally referred to as a "data tensor". M-way arrays may be modeled by linear tensor models, such as CANDECOMP/Parafac, or by multilinear tensor models, such as multilinear principal component analysis (MPCA) or multilinear independent component analysis (MICA). The origin of MPCA can be traced back to the Tucker decomposition and Peter Kroonenberg's "3-mode PCA" work (P. M. Kroonenberg and J. de Leeuw, "Principal component analysis of three-mode data by means of alternating least squares algorithms", Psychometrika, 45 (1980), pp. 69–97). In 2000, De Lathauwer et al. restated Tucker and Kroonenberg's work in clear and concise numerical computational terms in their SIAM paper entitled "Multilinear Singular Value Decomposition ...
