
Elastic Weight Consolidation

Catastrophic interference, also known as catastrophic forgetting, is the tendency of an artificial neural network to abruptly and drastically forget previously learned information upon learning new information. Neural networks are an important part of the connectionist approach to cognitive science. The issue of catastrophic interference when modeling human memory with connectionist models was originally brought to the attention of the scientific community by research from McCloskey and Cohen (1989) and Ratcliff (1990). It is a radical manifestation of the 'sensitivity-stability' dilemma, also known as the 'stability-plasticity' dilemma. Specifically, this dilemma refers to the challenge of making an artificial neural network that is sensitive to, but not disrupted by, new information. Lookup tables and connectionist networks lie on opposite sides of the stability-plasticity spectrum. The former remains completely stable in the presence of new information but lacks the ability to gener...
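The forgetting described above can be reproduced in a few lines. The sketch below rests on toy assumptions that are not taken from the cited studies: a one-parameter linear model trained by gradient descent on a hypothetical task A and then only on a conflicting hypothetical task B, with a lookup table alongside for contrast. It illustrates the problem only; it does not implement elastic weight consolidation or any other remedy.

```python
# Toy illustration of catastrophic interference (hypothetical tasks, not EWC).
# Model: y = w * x, trained by full-batch gradient descent on mean squared error.


def mse(w, data):
    """Mean squared error of the model y = w * x on (x, y) pairs."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def train(w, data, lr=0.05, steps=200):
    """Gradient descent on the MSE; returns the updated weight."""
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


xs = [-1.0, -0.5, 0.5, 1.0]
task_a = [(x, 2.0 * x) for x in xs]   # hypothetical task A: y = 2x
task_b = [(x, -1.0 * x) for x in xs]  # hypothetical task B: y = -x

w = train(0.0, task_a)
loss_a_before = mse(w, task_a)  # near zero: task A has been learned
w = train(w, task_b)            # continue training on task B only
loss_a_after = mse(w, task_a)   # large: the shared weight no longer fits task A

# A lookup table sits at the other end of the stability-plasticity spectrum:
# adding task B entries never disturbs task A entries, but it has no answer
# for an input it has never stored.
table = {("A", x): y for x, y in task_a}
table.update({("B", x): y for x, y in task_b})

print(f"task A loss before B: {loss_a_before:.6f}, after B: {loss_a_after:.3f}")
print("table still answers A exactly:", table[("A", 1.0)] == 2.0)
print("table has no entry for unseen x=0.75:", ("A", 0.75) not in table)
```

Because both tasks share the single weight, the conflict is deliberately extreme; in real networks the overlap between tasks is partial, but the mechanism (new gradients overwriting shared parameters) is the same kind of effect.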

Artificial Neural Network

In machine learning, a neural network (also artificial neural network or neural net, abbreviated ANN or NN) is a computational model inspired by the structure and functions of biological neural networks. A neural network consists of connected units or nodes called artificial neurons, which loosely model the neurons in the brain. Artificial neuron models that mimic biological neurons more closely have also been investigated recently and shown to significantly improve performance. The neurons are connected by edges, which model the synapses in the brain. Each artificial neuron receives signals from connected neurons, processes them, and sends a signal to other connected neurons. The "signal" is a real number, and the output of each neuron is computed by some non-linear function of the sum of its inputs, called the activation function. The strength of the signal at each connection is determined by a weight, which adjusts during the learning process. Typically, ne...
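A minimal sketch of the neuron computation just described, assuming a logistic sigmoid as the activation function and an optional bias term (the text specifies neither). The names `neuron_output` and `layer_output` are illustrative, not from any library.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic activation function: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(inputs, weights, bias=0.0, activation=sigmoid):
    """Output of one artificial neuron.

    Each incoming signal is scaled by the weight on its edge, the scaled
    signals are summed (plus an optional bias, an assumption), and the
    non-linear activation function is applied to the sum.
    """
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per incoming edge")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)


def layer_output(inputs, weight_rows, biases):
    """Several neurons reading the same inputs, one weight row per neuron."""
    return [neuron_output(inputs, row, b) for row, b in zip(weight_rows, biases)]


signals = [1.0, 0.5, -2.0]
print(neuron_output(signals, [0.4, -0.6, 0.1]))  # weighted sum is -0.1
print(layer_output(signals, [[0.4, -0.6, 0.1], [1.0, 1.0, 1.0]], [0.0, 0.5]))
```

Learning, in this picture, means adjusting the numbers in `weights` (and `bias`) so that outputs move toward desired values.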

Hippocampus

The hippocampus (pl.: hippocampi; via Latin from Ancient Greek ἱππόκαμπος, 'seahorse'), also hippocampus proper, is a major component of the brain of humans and many other vertebrates. In the human brain, the hippocampus, the dentate gyrus, and the subiculum are components of the hippocampal formation, located in the limbic system. The hippocampus plays important roles in the consolidation of information from short-term memory to long-term memory, and in spatial memory that enables navigation. In humans and other primates, the hippocampus is located in the archicortex, one of the three regions of allocortex, in each hemisphere, with direct neural projections to, and reciprocal indirect projections from, the neocortex. The hippocampus, as the medial pallium, is a structure found in all vertebrates. In Alzheimer's disease (and other forms of dementia), the hippocampus is one of the first regions of th...

Latent Learning

Latent learning is the subconscious retention of information without reinforcement or motivation. In latent learning, a change in behavior appears only later, once there is sufficient motivation, rather than at the time the information was subconsciously retained. Latent learning occurs when observing something, rather than experiencing it directly, affects later behavior. Observational learning can take many forms. For example, a person observes a behavior and later repeats it at another time (not direct imitation) even though no one is rewarding them for it. In social learning theory, humans observe others receiving rewards or punishments, which evokes feelings in the observer and motivates them to change their behavior. In latent learning specifically, there is no observation of a reward or punishment: animals simply observe their surroundings with no particular motivation to learn their geography; however, at a later date, they are able to expl...

One-hot

In digital circuits and machine learning, a one-hot is a group of bits among which the legal combinations of values are only those with a single high (1) bit and all the others low (0). A similar implementation in which all bits are '1' except one '0' is sometimes called one-cold. In statistics, dummy variables represent a similar technique for representing categorical data. In digital circuitry, one-hot encoding is often used for indicating the state of a state machine. When using binary, a decoder is needed to determine the state. A one-hot state machine, however, does not need a decoder, as the state machine is in the nth state if, and only if, the nth bit is high. A ring counter with 15 sequentially ordered states is an example of a state machine. A 'one-hot' implementation would have 15 flip-flops chained in series, with the Q output of each flip-flop connected to the D input of the next and the D input of the first flip-flop connected to the Q output of ...
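The encodings and the one-hot state machine described above can be sketched in software as follows. All function names are hypothetical, and the ring counter is modeled as a list of bits rather than real flip-flops, with one list rotation standing in for one clock tick.

```python
def one_hot(index: int, n: int) -> list[int]:
    """n-bit one-hot word: only bit `index` is high (1), all others low (0)."""
    if not 0 <= index < n:
        raise ValueError("index out of range")
    return [1 if i == index else 0 for i in range(n)]


def one_cold(index: int, n: int) -> list[int]:
    """The complement: every bit is 1 except bit `index`, which is 0."""
    return [1 - bit for bit in one_hot(index, n)]


def encode_categories(values):
    """Dummy-variable style encoding: one column per distinct category."""
    categories = sorted(set(values))
    position = {c: i for i, c in enumerate(categories)}
    return categories, [one_hot(position[v], len(categories)) for v in values]


def ring_counter_tick(state: list[int]) -> list[int]:
    """One clock tick of a one-hot ring counter.

    Each flip-flop's D input takes the previous flip-flop's Q output, and the
    first flip-flop takes the last one's Q output, so the single high bit rotates.
    """
    return [state[-1]] + state[:-1]


def decode_state(state: list[int]) -> int:
    """No binary decoder needed: the machine is in state n iff bit n is high."""
    return state.index(1)


# Usage: a 15-state one-hot ring counter returns to its start after 15 ticks.
state = one_hot(0, 15)
for _ in range(15):
    state = ring_counter_tick(state)
print(decode_state(state))  # 0
print(encode_categories(["red", "green", "red", "blue"]))
```

Reading the state is a single lookup of which bit is high, which is the software analogue of the hardware saving a decoder at the cost of one flip-flop per state.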

Transfer Learning

Transfer learning (TL) is a technique in machine learning (ML) in which knowledge learned from a task is reused to boost performance on a related task. For example, in image classification, knowledge gained while learning to recognize cars could be applied when trying to recognize trucks. This topic is related to the psychological literature on transfer of learning, although practical ties between the two fields are limited. Reusing or transferring information from previously learned tasks to new tasks has the potential to significantly improve learning efficiency. Since transfer learning makes use of training with multiple objective functions, it is related to cost-sensitive machine learning and multi-objective optimization. In 1976, Bozinovski and Fulgosi published a paper addressing transfer learning in neural network training. The paper gives a mathematical and geometrical model of the topic. In 1981, a report considered the application of transfer learni...
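One common way to reuse knowledge is to warm-start training on the new task from parameters learned on the old one (fine-tuning). The sketch below assumes a two-weight linear model, plain gradient descent, and two hypothetical related tasks; it compares a small training budget on the target task with and without the transferred weights. It is an illustration under these assumptions, not a description of the 1976 or 1981 work.

```python
def predict(w, x):
    """Linear model: dot product of weights and features."""
    return sum(wi * xi for wi, xi in zip(w, x))


def mse(w, data):
    """Mean squared error over (features, target) pairs."""
    return sum((predict(w, x) - y) ** 2 for x, y in data) / len(data)


def gradient_descent(w, data, lr=0.1, steps=100):
    """Full-batch gradient descent on the MSE, starting from weights `w`."""
    w = list(w)
    n = len(data)
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x, y in data:
            err = predict(w, x) - y
            for i, xi in enumerate(x):
                grad[i] += 2.0 * err * xi / n
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w


inputs = [(1.0, 0.0), (0.0, 1.0), (-1.0, 0.0), (0.0, -1.0)]
# Hypothetical related tasks: the target differs only in the second weight.
source_task = [(x, 3.0 * x[0] + 2.0 * x[1]) for x in inputs]
target_task = [(x, 3.0 * x[0] + 2.5 * x[1]) for x in inputs]

# Learn the source task thoroughly.
w_source = gradient_descent([0.0, 0.0], source_task, steps=200)

budget = 5  # deliberately small training budget on the target task
w_transfer = gradient_descent(w_source, target_task, steps=budget)  # reuse source weights
w_scratch = gradient_descent([0.0, 0.0], target_task, steps=budget)  # start from zero

print("target loss with transfer:", mse(w_transfer, target_task))
print("target loss from scratch: ", mse(w_scratch, target_task))
```

The benefit here comes entirely from the tasks being related: the transferred weights already sit close to the target solution. If the tasks were unrelated, the same warm start could help little or even hurt.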

Generative Model

In statistical classification, two main approaches are called the generative approach and the discriminative approach. These compute classifiers by different approaches, differing in the degree of statistical modelling. Terminology is inconsistent, but three major types can be distinguished:

1. A generative model is a statistical model of the joint probability distribution $P(X, Y)$ on a given observable variable X and target variable Y; that is, "generative classifiers learn a model of the joint probability, $p(x, y)$, of the inputs x and the label y, and make their predictions by using Bayes rules to calculate $p(y \mid x)$, and then picking the most likely label y." A generative model can be used to "generate" random instances (outcomes) of an observation x.
2. A discriminative model is a model of the conditional probability $P(Y \mid X = x)$ of the target Y, given an observation x. It can be used to "discriminate" the value of the target variable Y, given an ...
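A minimal generative classifier in the sense described above, assuming discrete inputs and labels: it estimates the joint $p(x, y)$ by counting, applies Bayes' rule to obtain $p(y \mid x)$, picks the most likely label, and can also sample new $(x, y)$ pairs. The class name and the weather data are hypothetical, and no smoothing is applied, so an input never seen in training raises an error.

```python
import random
from collections import Counter


class CountingGenerativeClassifier:
    """Generative classifier for discrete data that models the joint p(x, y).

    Probabilities are raw frequency counts with no smoothing (an assumption),
    so an input never seen during fit() cannot be classified.
    """

    def fit(self, xs, ys):
        self.joint = Counter(zip(xs, ys))  # counts of each (x, y) pair
        self.total = len(xs)
        self.labels = sorted(set(ys))
        return self

    def p_joint(self, x, y):
        """Estimated joint probability p(x, y)."""
        return self.joint[(x, y)] / self.total

    def p_label_given(self, x):
        """Bayes' rule: p(y | x) = p(x, y) / sum over y' of p(x, y')."""
        evidence = sum(self.p_joint(x, y) for y in self.labels)
        if evidence == 0:
            raise KeyError(f"input {x!r} was never observed")
        return {y: self.p_joint(x, y) / evidence for y in self.labels}

    def predict(self, x):
        """Pick the most likely label under p(y | x)."""
        posterior = self.p_label_given(x)
        return max(self.labels, key=posterior.__getitem__)

    def sample(self, rng):
        """Generate a new (x, y) instance by drawing from the fitted joint."""
        pairs = list(self.joint)
        return rng.choices(pairs, weights=[self.joint[p] for p in pairs], k=1)[0]


weather = ["sunny", "sunny", "sunny", "rainy", "rainy", "cloudy"]
activity = ["play", "play", "stay", "stay", "stay", "play"]
clf = CountingGenerativeClassifier().fit(weather, activity)
print(clf.p_label_given("sunny"))
print(clf.predict("rainy"))
print(clf.sample(random.Random(0)))
```

A discriminative counterpart would instead fit $p(y \mid x)$ directly and would have no `sample` method, because it never models how the inputs x themselves are distributed.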

Long Term Memory

Long-term memory (LTM) is the stage of the Atkinson–Shiffrin memory model in which informative knowledge is held indefinitely. It is defined in contrast to sensory memory, the initial stage, and short-term or working memory, the second stage, which persists for about 18 to 30 seconds. LTM is grouped into two categories: explicit memory (declarative memory) and implicit memory (non-declarative memory). Explicit memory is broken down into episodic and semantic memory, while implicit memory includes procedural memory and emotional conditioning. The idea of separate memories for short- and long-term storage originated in the 19th century. One model of memory developed in the 1960s assumed that all memories are formed in one store and transfer to another store after a short period of time. This model is referred to as the "modal model", most famously detailed by Richard Shiffrin. The model states that memory is first stor...

Short Term Memory

Short-term memory (or "primary" or "active memory") is the capacity for holding a small amount of information in an active, readily available state for a short interval. For example, short-term memory holds a phone number that has just been recited. The duration of short-term memory (absent rehearsal or active maintenance) is estimated to be on the order of seconds. The commonly cited capacity of 7 items, found in Miller's Law, has been superseded by 4±1 items. In contrast, long-term memory holds information indefinitely. Short-term memory is not the same as working memory, which refers to structures and processes used for temporarily storing and manipulating information. The idea of separate memories for short-term and long-term storage originated in the 19th century. A model of memory developed in the 1960s assumed that all memories are formed in one store and transfer to other stores after a short period of time. This model is referred to as the "modal model", most ...

Neocortex

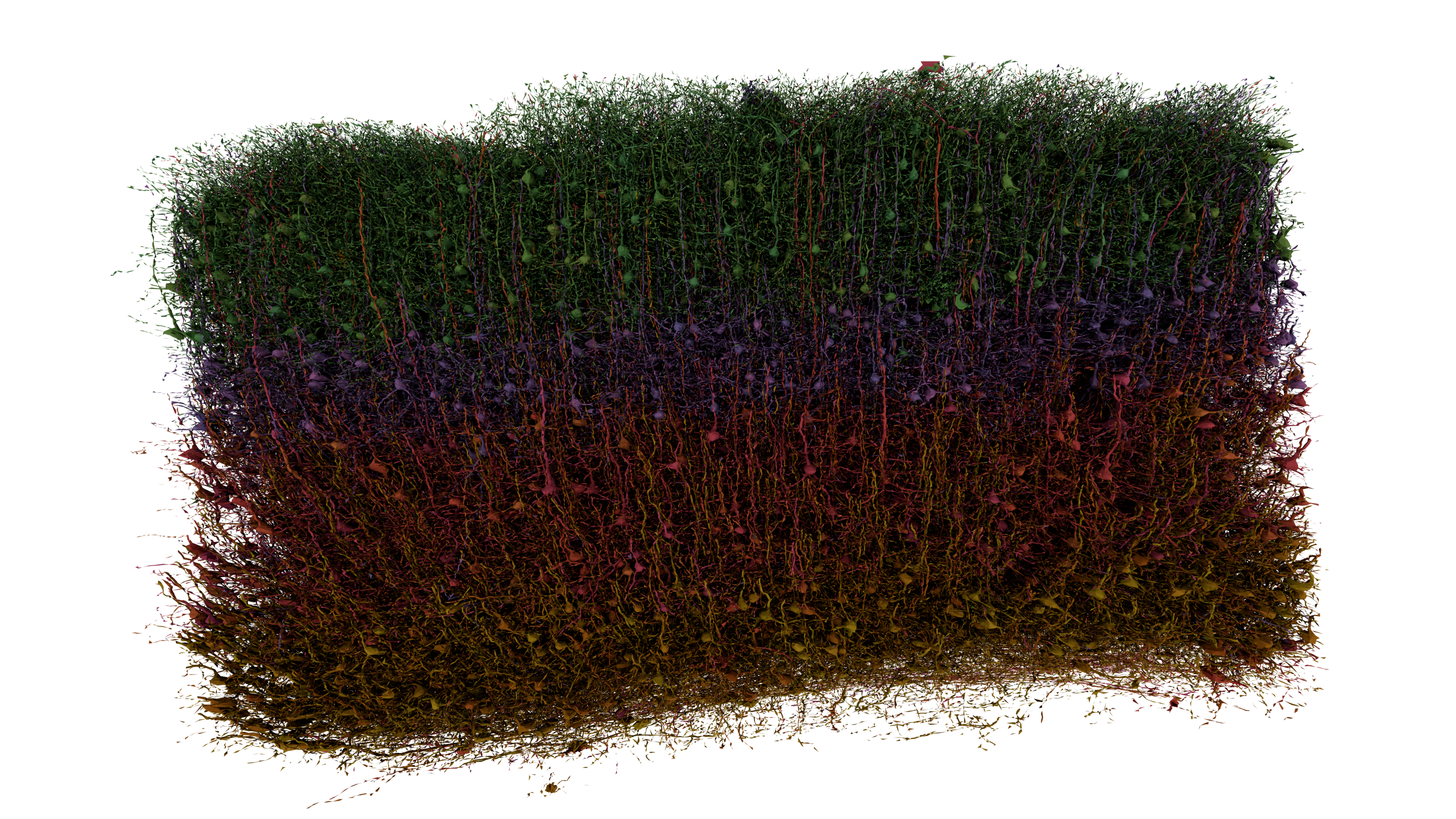

The neocortex, also called the neopallium, isocortex, or six-layered cortex, is a set of layers of the mammalian cerebral cortex involved in higher-order brain functions such as sensory perception, cognition, generation of motor commands, spatial reasoning, and language. The neocortex is further subdivided into the true isocortex and the proisocortex. In the human brain, the cerebral cortex consists of the larger neocortex and the smaller allocortex, which take up 90% and 10% of it, respectively. The neocortex is made up of six layers, labelled I to VI from the outermost inwards. The term comes from cortex, Latin for "bark" or "rind", combined with neo-, Greek for "new". Neopallium is a similar hybrid, from Latin pallium, "cloak". Isocortex and allocortex are hybrids with Greek isos, "same", and allos, "other". Of the cerebral tissues, the neocortex is the most developed in its organisation and number of layers. The neocortex cons...

Connectionism

Connectionism is an approach to the study of human mental processes and cognition that uses mathematical models known as connectionist networks or artificial neural networks. Connectionism has had many "waves" since its beginnings. The first wave appeared in 1943 with Warren Sturgis McCulloch and Walter Pitts, who both focused on understanding neural circuitry through a formal and mathematical approach, and with Frank Rosenblatt, who published the 1958 paper "The Perceptron: A Probabilistic Model For Information Storage and Organization in the Brain" in Psychological Review while working at the Cornell Aeronautical Laboratory. The first wave ended with the 1969 book about the limitations of the original perceptron idea, written by Marvin Minsky and Seymour Papert, which contributed to discouraging major funding agencies in the US from investing in connectionist research. With a few noteworthy deviations, most connectionist research entered a period of inactivity until the mid-1980...
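Rosenblatt's perceptron learning rule is short enough to sketch directly. The version below (function names, learning rate, and epoch limit are assumptions) trains a single threshold unit on two inputs: it converges on the linearly separable AND function but can never classify XOR correctly, because no single straight line separates XOR's two classes. This is a classic example of the kind of limitation of single-layer perceptrons that Minsky and Papert analyzed.

```python
AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def step(z):
    """Threshold activation: fire (1) when the weighted sum reaches 0."""
    return 1 if z >= 0 else 0


def perceptron_output(w, b, x):
    """Single threshold unit with two inputs."""
    return step(w[0] * x[0] + w[1] * x[1] + b)


def train_perceptron(samples, epochs=25, lr=1.0):
    """Perceptron learning rule; returns (weights, bias, converged)."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, target in samples:
            delta = target - perceptron_output(w, b, x)
            if delta != 0:
                mistakes += 1
                # Nudge the weights and bias toward the correct answer.
                w[0] += lr * delta * x[0]
                w[1] += lr * delta * x[1]
                b += lr * delta
        if mistakes == 0:  # a full pass with no errors: training has converged
            return w, b, True
    return w, b, False


for name, data in (("AND", AND), ("XOR", XOR)):
    w, b, converged = train_perceptron(data)
    correct = sum(perceptron_output(w, b, x) == t for x, t in data)
    print(f"{name}: converged={converged}, {correct}/4 correct")
```

Raising `epochs` does not rescue XOR: a mistake-free pass would require a linear separator, which does not exist for that data.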

Neural Network

A neural network is a group of interconnected units called neurons that send signals to one another. Neurons can be either biological cells or signal pathways. While individual neurons are simple, many of them together in a network can perform complex tasks. There are two main types of neural networks:

* In neuroscience, a biological neural network is a physical structure found in brains and complex nervous systems: a population of nerve cells connected by synapses.
* In machine learning, an artificial neural network is a mathematical model used to approximate nonlinear functions. Artificial neural networks are used to solve artificial intelligence problems.

In the context of biology, a neural network is a population of biological neurons chemically connected to each other by synapses. A given neuron can be connected to hundreds of thousands of synapses. Each neuron sends and receives electrochemical signals called action potentials to its conne...
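To make "approximate nonlinear functions" concrete, the sketch below uses one standard construction, chosen here as an assumption rather than taken from the text: a network with one hidden layer of ReLU units whose weights are set by hand so that its output linearly interpolates $\sin$ at evenly spaced points on $[0, 2\pi]$. Adding hidden units shrinks the maximum error. The weights are computed rather than learned, so this shows what such a network can represent, not how training finds those weights.

```python
import math


def relu(z):
    """Rectified linear unit: max(0, z)."""
    return max(0.0, z)


def build_relu_network(f, lo, hi, hidden_units):
    """One-hidden-layer ReLU network whose output linearly interpolates f
    at hidden_units + 1 evenly spaced knots on [lo, hi].

    Hidden unit k computes relu(x - knot_k) (input weight 1, bias -knot_k);
    its output weight is the change in slope of the interpolant at knot_k.
    """
    knots = [lo + (hi - lo) * k / hidden_units for k in range(hidden_units + 1)]
    values = [f(t) for t in knots]
    slopes = [(values[k + 1] - values[k]) / (knots[k + 1] - knots[k])
              for k in range(hidden_units)]
    hidden_biases = [-t for t in knots[:-1]]
    out_weights = [slopes[0]] + [slopes[k] - slopes[k - 1] for k in range(1, hidden_units)]
    out_bias = values[0]

    def network(x):
        return out_bias + sum(v * relu(x + c) for v, c in zip(out_weights, hidden_biases))

    return network


def max_error(f, g, lo, hi, samples=1000):
    """Largest |f(x) - g(x)| over evenly spaced sample points in [lo, hi]."""
    points = (lo + (hi - lo) * i / samples for i in range(samples + 1))
    return max(abs(f(x) - g(x)) for x in points)


for units in (4, 16, 64):
    net = build_relu_network(math.sin, 0.0, 2 * math.pi, units)
    print(units, "hidden units -> max error", max_error(math.sin, net, 0.0, 2 * math.pi))
```

For a smooth target, the error of this piecewise-linear interpolant falls roughly with the square of the knot spacing, so each fourfold increase in hidden units cuts the error by about a factor of sixteen.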