
Data Synchronization

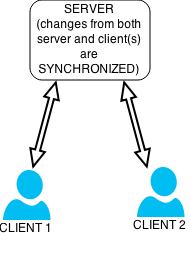

Data synchronization is the process of establishing consistency between source and target data stores, and of continuously harmonizing that data over time. It is fundamental to a wide variety of applications, including file synchronization and mobile device synchronization. Data synchronization can also be useful in encryption, for example for synchronizing public key servers. It is needed to update multiple copies of a set of data and keep them coherent with one another, or to maintain data integrity (Figure 3). For example, database replication keeps multiple copies of data synchronized across database servers that store the data in different locations.

Examples include:

* File synchronization, such as syncing a hand-held MP3 player to a desktop computer;
* Cluster file systems, which are file systems that maintain data or indexes in a coherent fashion across a whole computing cluster;
* Cache coherency, maintaining multiple copies of data in sync across ...
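To make the idea of harmonizing a source and a target store concrete, here is a minimal sketch of one-way synchronization between two in-memory key-value stores. It assumes a "source wins" policy (the target is made to match the source exactly) and detects changed entries by comparing SHA-256 content digests. The function names `plan_one_way_sync` and `apply_sync` are hypothetical and do not correspond to any particular tool.

```python
import hashlib


def digest(data: bytes) -> str:
    """Content fingerprint used to decide whether two copies differ."""
    return hashlib.sha256(data).hexdigest()


def plan_one_way_sync(source: dict[str, bytes], target: dict[str, bytes]) -> list[tuple[str, str]]:
    """Return (action, key) pairs that would make `target` match `source`.

    Hypothetical policy: the source always wins, and keys absent from the
    source are deleted from the target.
    """
    actions: list[tuple[str, str]] = []
    for key, data in source.items():
        if key not in target:
            actions.append(("create", key))
        elif digest(target[key]) != digest(data):
            actions.append(("update", key))
    for key in target:
        if key not in source:
            actions.append(("delete", key))
    return actions


def apply_sync(source: dict[str, bytes], target: dict[str, bytes]) -> None:
    """Apply the plan in place; the plan is computed fully before mutating."""
    for action, key in plan_one_way_sync(source, target):
        if action == "delete":
            del target[key]
        else:  # "create" or "update"
            target[key] = source[key]
```

Comparing digests rather than raw bytes mirrors what happens when the two copies live on different machines: only the short fingerprints need to be exchanged to discover which entries differ. Two-way synchronization would additionally need a rule for resolving conflicting edits, which this sketch deliberately leaves out.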

Data Transfer

Data communication, comprising data transmission and data reception, is the transfer of data over a point-to-point or point-to-multipoint communication channel. Examples of such channels are copper wires, optical fibers, wireless communication using the radio spectrum, storage media, and computer buses. The data are represented as an electromagnetic signal, such as an electrical voltage, radio wave, microwave, or infrared signal.

Analog transmission is a method of conveying voice, data, image, signal, or video information using a continuous signal that varies in amplitude, phase, or some other property in proportion to that of a variable. In digital transmission, the messages are represented either by a sequence of pulses by means of a line code (baseband transmission), or by a limited set of continuously varying waveforms (passband transmission), using a digital modulation method. The passband modulation and corresponding demodulation are carried out by modem e ...
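As an illustration of baseband transmission with a line code, the sketch below implements Manchester encoding, one well-known line code in which every bit period contains a mid-bit transition. It assumes the convention in which a 1 is sent as low-then-high and a 0 as high-then-low (standards differ on this choice), and represents signal levels as -1 and +1 per half bit period.

```python
LOW, HIGH = -1, +1  # abstract signal levels, one value per half bit period


def manchester_encode(bits: list[int]) -> list[int]:
    """Map each data bit to two half-bit levels with a transition in the middle."""
    signal: list[int] = []
    for bit in bits:
        if bit == 1:
            signal += [LOW, HIGH]   # assumed convention: 1 = low-to-high
        elif bit == 0:
            signal += [HIGH, LOW]   # assumed convention: 0 = high-to-low
        else:
            raise ValueError("bits must be 0 or 1")
    return signal


def manchester_decode(signal: list[int]) -> list[int]:
    """Recover bits from half-bit levels; a missing transition signals a line error."""
    if len(signal) % 2:
        raise ValueError("signal must contain whole bit periods")
    bits: list[int] = []
    for first, second in zip(signal[0::2], signal[1::2]):
        if (first, second) == (LOW, HIGH):
            bits.append(1)
        elif (first, second) == (HIGH, LOW):
            bits.append(0)
        else:
            raise ValueError("no mid-bit transition: invalid Manchester symbol")
    return bits
```

For example, `manchester_encode([1, 0, 1, 1])` yields `[-1, 1, 1, -1, -1, 1, -1, 1]`. The guaranteed transition in every bit period lets a receiver recover the sender's clock from the signal itself, at the cost of roughly doubling the signalling rate compared with sending one level per bit.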

Computational Overhead

Overhead in computer systems consists of shared functions that benefit all users or processes but are not directly attributable to any specific task. It is thus similar to overhead in organizations. Computer system overhead shows up as slower processing, less memory, less storage capacity, less network bandwidth, or higher latency than would be expected from the system specifications. It is a special case of engineering overhead. Overhead can be a deciding factor in software design with regard to structure, error correction, and feature inclusion. Examples of computing overhead may be found in object-oriented programming (OOP), functional programming, data transfer, data structures, and file systems on data storage devices.

When it comes to the choice of implementation in software design, a programmer or software engineer may have a choice of several algorithms, encodings, data types, or data structures, each of which has known characteristics. When choosing among them, their respective overhead ...
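Data transfer gives an easy-to-quantify example of overhead: every packet carries header bytes that are not application data. The sketch below computes payload efficiency under assumed header sizes of 14 bytes of Ethernet header plus a 4-byte frame check sequence, a 20-byte IPv4 header, and a 20-byte TCP header (no options, no VLAN tag; preamble and inter-frame gap are ignored). These figures are illustrative assumptions, not a measurement of any particular network.

```python
# Assumed per-packet overhead (illustrative only; no IP/TCP options, no VLAN tag).
ETHERNET_HEADER_AND_FCS = 14 + 4
IPV4_HEADER = 20
TCP_HEADER = 20
ASSUMED_OVERHEAD = ETHERNET_HEADER_AND_FCS + IPV4_HEADER + TCP_HEADER  # 58 bytes


def payload_efficiency(payload_bytes: int, overhead_bytes: int = ASSUMED_OVERHEAD) -> float:
    """Fraction of transmitted bytes that carry application data."""
    if payload_bytes < 0 or overhead_bytes < 0:
        raise ValueError("sizes must be non-negative")
    total = payload_bytes + overhead_bytes
    return payload_bytes / total if total else 0.0


if __name__ == "__main__":
    for payload in (1460, 100, 1):
        print(f"{payload:>5} B payload -> {payload_efficiency(payload):.3f} efficiency")
```

Under these assumptions a full 1460-byte payload is about 96% efficient (1460 / 1518), a 100-byte payload about 63%, and a 1-byte payload under 2%. Fixed per-unit costs like this are why batching small messages is a common way to reduce transfer overhead.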

Version Control

Version control (also known as revision control, source control, and source code management) is the software engineering practice of controlling, organizing, and tracking the versions of computer files over time: primarily source code text files, but generally any type of file. Version control is a component of software configuration management. A version control system is a software tool that automates version control. Alternatively, version control is embedded as a feature of some systems such as word processors, spreadsheets, collaborative groupware, web docs, and content management systems (e.g., Wikipedia's page history). Version control includes viewing old versions and enables reverting a file to a previous version.

As teams develop software, it is common to deploy multiple versions of the same software, and for different developers to work on one or more different versions ...
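The two basic operations named above, viewing old versions and reverting to a previous one, can be sketched with a toy in-memory history for a single file. The `FileHistory` class and its methods are hypothetical; real version control systems add branching, merging, authorship metadata, and efficient storage, none of which is modelled here.

```python
from dataclasses import dataclass, field


@dataclass
class FileHistory:
    """Toy, in-memory version history for one file (hypothetical API)."""

    versions: list[str] = field(default_factory=list)

    def commit(self, content: str) -> int:
        """Record a new version and return its 0-based version number."""
        self.versions.append(content)
        return len(self.versions) - 1

    def checkout(self, version: int) -> str:
        """View an old version without changing the history."""
        return self.versions[version]

    def revert(self, version: int) -> int:
        """Restore an old version by committing a copy of it, so no history is lost."""
        return self.commit(self.versions[version])

    def head(self) -> str:
        """Return the most recent version."""
        return self.versions[-1]
```

Recording a reversion as a new version, rather than discarding the later ones, keeps the full history inspectable; many systems take a similar approach.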

USB Flash Drives

A flash drive (also thumb drive, memory stick, and pen drive/pendrive) is a data storage device that includes flash memory with an integrated USB interface. A typical USB drive is removable, rewritable, and smaller than an optical disc, and usually weighs less than . Since they were first offered for sale in late 2000, USB drives have been available in storage capacities ranging from 8 megabytes (MB) to 256 gigabytes (GB), 512 GB, and 1 terabyte (TB). As of 2024, 4 TB flash drives were the largest in production. Some allow up to 100,000 write/erase cycles, depending on the exact type of memory chip used, and are thought to physically last between 10 and 100 years under normal circumstances (shelf storage time). Common uses of USB flash drives are storage, supplementary backups, and the transfer of computer files. Compared with floppy disks or CDs, they are smaller, faster, have significantly more capacity, and are more durable because they have no moving parts. Additionally, they ...

Hard Drive

A hard disk drive (HDD), hard disk, hard drive, or fixed disk is an electro-mechanical data storage device that stores and retrieves digital data using magnetic storage with one or more rigid, rapidly rotating platters coated with magnetic material. The platters are paired with magnetic heads, usually arranged on a moving actuator arm, which read and write data to the platter surfaces. Data is accessed in a random-access manner, meaning that individual blocks of data can be stored and retrieved in any order. HDDs are a type of non-volatile storage, retaining stored data when powered off. Modern HDDs typically take the form of a small rectangular box. Hard disk drives were introduced by IBM in 1956 and were the dominant secondary storage device for general-purpose computers beginning in the early 1960s. HDDs maintained this position into the modern er ...
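Random access by block means a block's address, not the order in which blocks were written, determines where its data lives. The sketch below illustrates that with an ordinary temporary file standing in for a drive and an assumed fixed block size of 512 bytes (4096 is also common). The helpers `write_block` and `read_block` are hypothetical; they simply seek to `index * BLOCK_SIZE` before each transfer.

```python
import tempfile

BLOCK_SIZE = 512  # assumed logical block size for this sketch


def write_block(f, index: int, data: bytes) -> None:
    """Write exactly one block at the position given by its index."""
    if len(data) != BLOCK_SIZE:
        raise ValueError("data must be exactly one block long")
    f.seek(index * BLOCK_SIZE)
    f.write(data)


def read_block(f, index: int) -> bytes:
    """Read one block directly by index, without touching the blocks before it."""
    f.seek(index * BLOCK_SIZE)
    return f.read(BLOCK_SIZE)


with tempfile.TemporaryFile() as disk:  # a regular file stands in for the drive
    # Blocks are written out of order; their indices alone decide their placement.
    write_block(disk, 7, b"G" * BLOCK_SIZE)
    write_block(disk, 2, b"B" * BLOCK_SIZE)
    assert read_block(disk, 2) == b"B" * BLOCK_SIZE
    assert read_block(disk, 7) == b"G" * BLOCK_SIZE
```

On a real HDD, accessing a block in this way still incurs seek and rotational delay as the head moves to the right track and sector, which is why access patterns affect HDD performance even though any order is permitted.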

Rsync

rsync (remote sync) is a utility for transferring and synchronizing files between a computer and a storage drive, and across networked computers, by comparing the modification times and sizes of files. It is commonly found on Unix-like operating systems and is licensed under GPL-3.0-or-later. rsync is written in C as a single-threaded application. The rsync algorithm is a type of delta encoding and is used to minimize network usage. Zstandard, LZ4, or Zlib may be used for additional data compression, and SSH or stunnel can be used for security. rsync is typically used for synchronizing files and directories between two different systems. For example, if the command `rsync local-file user@remote-host:remote-file` is run, rsync will use SSH to connect as user to remote-host. Once connected, it will invoke the remote host's rsync, and the two programs will then determine what parts of the local file need to be transferred so that the remote file matches the local one ...
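The delta-encoding idea behind rsync can be sketched in a single process. The receiver splits its old copy into fixed-size blocks and indexes them by a cheap weak checksum; the sender slides a window over the new file, updating that checksum in constant time per byte ("rolling" it), and confirms candidate matches with a strong hash. The weak checksum below follows the two-part rolling sum from the original rsync algorithm description; SHA-256 stands in for rsync's strong checksum, only full blocks are indexed, and all function names are hypothetical. There is no network, compression, or file metadata handling.

```python
import hashlib

MOD = 1 << 16  # the weak checksum's two components are kept modulo 2**16


def weak_checksum(block: bytes) -> tuple[int, int]:
    """Return the (a, b) pair of the rolling weak checksum for one window."""
    n = len(block)
    a = sum(block) % MOD
    b = sum((n - i) * byte for i, byte in enumerate(block)) % MOD
    return a, b


def strong_checksum(block: bytes) -> bytes:
    # SHA-256 is an assumption of this sketch, standing in for rsync's strong hash.
    return hashlib.sha256(block).digest()


def signatures(old: bytes, block_size: int) -> dict:
    """Receiver side: index every full block of the old file by weak checksum."""
    table: dict = {}
    for start in range(0, len(old) - block_size + 1, block_size):
        block = old[start:start + block_size]
        table.setdefault(weak_checksum(block), []).append(
            (start // block_size, strong_checksum(block)))
    return table


def delta(new: bytes, table: dict, block_size: int) -> list:
    """Sender side: emit ("copy", block_index) for matches, ("data", bytes) otherwise."""
    ops: list = []
    literal = bytearray()
    i, n = 0, len(new)
    if n >= block_size:
        a, b = weak_checksum(new[:block_size])
    while i + block_size <= n:
        window = new[i:i + block_size]
        match = None
        for index, strong in table.get((a, b), []):
            if strong_checksum(window) == strong:  # weak sums can collide; confirm
                match = index
                break
        if match is not None:
            if literal:
                ops.append(("data", bytes(literal)))
                literal.clear()
            ops.append(("copy", match))
            i += block_size
            if i + block_size <= n:
                a, b = weak_checksum(new[i:i + block_size])
        else:
            literal.append(new[i])
            if i + block_size < n:
                # Roll the window one byte: drop new[i], take in new[i + block_size].
                out_byte, in_byte = new[i], new[i + block_size]
                a = (a - out_byte + in_byte) % MOD
                b = (b - block_size * out_byte + a) % MOD
            i += 1
    literal += new[i:]  # any tail shorter than a block is sent as literal data
    if literal:
        ops.append(("data", bytes(literal)))
    return ops


def patch(old: bytes, ops: list, block_size: int) -> bytes:
    """Receiver side: rebuild the new file from old blocks plus literal data."""
    out = bytearray()
    for kind, value in ops:
        if kind == "copy":
            out += old[value * block_size:(value + 1) * block_size]
        else:
            out += value
    return bytes(out)
```

Only the `("data", ...)` entries carry file content, so when the two versions share most of their blocks, the delta is much smaller than the file. The rolling update is what makes it affordable to test a match at every byte offset instead of only at block boundaries.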

Mirror Website

Mirror sites, or mirrors, are replicas of other websites. The concept of mirroring applies to network services accessible through any protocol, such as HTTP or FTP. Such sites have different URLs than the original site but host identical or near-identical content. Mirror sites are often located in a different geographic region than the original, or upstream, site. The purpose of mirrors is to reduce network traffic, improve access speed, ensure availability of the original site for technical or political reasons, or provide a real-time backup of the original site. Mirror sites are particularly important in developing countries, where internet access may be slower or less reliable. Mirror sites were heavily used on the early internet, when most users accessed it through dial-up and the Internet backbone had much lower bandwidth than today, making a geographically localized mirror network a worthwhile benefit. Download archives such as Info-Mac, Tucows, and CPAN maintained worldwide netw ...

Coda (file system)

Coda is a distributed file system that has been developed as a research project at Carnegie Mellon University since 1987 under the direction of Mahadev Satyanarayanan. It descended directly from an older version of the Andrew File System (AFS-2) and offers many similar features. The InterMezzo file system was inspired by Coda.

Coda has many features that are desirable for network file systems, and several features not found elsewhere:

1. Disconnected operation for mobile computing
2. Free availability under the GPL
3. High performance through client-side persistent caching
4. Server replication
5. A security model for authentication, encryption, and access control
6. Continued operation during partial network failures in the server network
7. Network bandwidth adaptation
8. Good scalability
9. Well-defined semantics of sharing, even in the presence of network failure

Coda uses a local cache to provide access to server data when the network connection is lost. During normal operation, a user reads ...
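Disconnected operation, the first feature listed above, can be illustrated with a toy client cache. While connected, reads fetch from the server and populate a local cache, and writes go straight through to the server. While disconnected, reads are served from the cache and writes are logged locally; on reconnection the log is replayed to the server. This is a hypothetical sketch of the general idea, not Coda's implementation: a dictionary stands in for the server, and unlike a real system it performs no detection of conflicting updates made elsewhere during the disconnection.

```python
class DisconnectedCache:
    """Hypothetical client cache illustrating disconnected operation."""

    def __init__(self, server: dict[str, str]) -> None:
        self.server = server                       # a dict stands in for the file server
        self.cache: dict[str, str] = {}            # client-side cached file contents
        self.log: list[tuple[str, str]] = []       # writes made while disconnected
        self.connected = True

    def disconnect(self) -> None:
        self.connected = False

    def read(self, path: str) -> str:
        if self.connected:
            self.cache[path] = self.server[path]   # refresh the cache from the server
        elif path not in self.cache:
            raise FileNotFoundError(f"{path} is not cached and the server is unreachable")
        return self.cache[path]

    def write(self, path: str, data: str) -> None:
        self.cache[path] = data
        if self.connected:
            self.server[path] = data               # write through while connected
        else:
            self.log.append((path, data))          # remember the change for later

    def reconnect(self) -> None:
        """Replay logged writes to the server (naive: no conflict detection)."""
        self.connected = True
        for path, data in self.log:
            self.server[path] = data
        self.log.clear()
```

The cache is what keeps files usable offline, which is why the feature list pairs disconnected operation with persistent client-side caching: only files read (and therefore cached) before the disconnection remain available.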

Distributed Filesystem

A clustered file system (CFS) is a file system that is shared by being simultaneously mounted on multiple servers. There are several approaches to clustering, most of which do not employ a clustered file system (only direct-attached storage for each node). Clustered file systems can provide features like location-independent addressing and redundancy, which improve reliability or reduce the complexity of the other parts of the cluster. Parallel file systems are a type of clustered file system that spread data across multiple storage nodes, usually for redundancy or performance.

A shared-disk file system uses a storage area network (SAN) to allow multiple computers to gain direct disk access at the block level. Access control and translation from the file-level operations that applications use to the block-level operations used by the SAN must take place on the client node. The mos ...
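One common way for a parallel file system to spread a file across storage nodes is striping: the file is cut into fixed-size stripe units that are assigned to nodes in round-robin order, so large reads and writes can proceed on several nodes at once. The sketch below assumes simple round-robin placement with a single stripe size; the helpers `locate` and `stripe` are hypothetical, and the layout provides performance only, with no redundancy.

```python
def locate(offset: int, stripe_size: int, num_nodes: int) -> tuple[int, int]:
    """Map a byte offset in the file to (node index, offset within that node's data)."""
    if offset < 0 or stripe_size <= 0 or num_nodes <= 0:
        raise ValueError("offset must be >= 0; stripe_size and num_nodes must be > 0")
    stripe_index = offset // stripe_size       # which stripe unit the byte falls in
    node = stripe_index % num_nodes            # round-robin placement across nodes
    local_stripe = stripe_index // num_nodes   # units that node holds before this one
    return node, local_stripe * stripe_size + offset % stripe_size


def stripe(data: bytes, stripe_size: int, num_nodes: int) -> list[bytes]:
    """Split a file's bytes into the per-node pieces implied by `locate`."""
    parts = [bytearray() for _ in range(num_nodes)]
    for start in range(0, len(data), stripe_size):
        node = (start // stripe_size) % num_nodes
        parts[node] += data[start:start + stripe_size]
    return [bytes(p) for p in parts]
```

For example, `stripe(b"abcdefghijklmn", 4, 3)` places `abcd` and `mn` on node 0, `efgh` on node 1, and `ijkl` on node 2. Redundancy would require additional copies or parity on top of this layout.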

Subversion (software)

Apache Subversion (often abbreviated SVN, after its command name svn) is a version control system distributed as open source under the Apache License. Software developers use Subversion to maintain current and historical versions of files such as source code, web pages, and documentation. Its goal is to be a mostly compatible successor to the widely used Concurrent Versions System (CVS). The open source community has used Subversion widely, for example in projects such as the Apache Software Foundation, FreeBSD, SourceForge, and, from 2006 to 2019, GCC. CodePlex was previously a common host for Subversion repositories. Subversion was created by CollabNet Inc. in 2000 and is now a top-level Apache project built and used by a global community of contributors.

CollabNet founded the Subversion project in 2000 as an effort to write an open-source version control system that operated much like CVS but fixed its bugs and supplied some features missing in CVS. ...

Concurrent Versions System

Concurrent Versions System (CVS, or Concurrent Versioning System) is a version control system originally developed by Dick Grune in July 1986.

CVS operates as a front end to the Revision Control System (RCS), an older version control system that manages individual files but not whole projects. It expands upon RCS by adding support for repository-level change tracking and a client-server model. Files are tracked using the same history format as in RCS, with a hidden directory containing a corresponding history file for each file in the repository. CVS uses delta compression for efficient storage of different versions of the same file. This works well with large text files that change little from one version to the next, which is usually the case for source code files. On the other hand, when CVS is told to store a file as binary, it keeps each individual version on the server. This is typically used for non-text files such as executable images, where it is difficult ...
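Delta compression of text files can be sketched with line-based deltas. RCS, on which CVS builds, is generally described as keeping the newest trunk revision in full and older revisions as reverse deltas, so the most frequently requested version is the cheapest to retrieve. The sketch below follows that idea but uses Python's `difflib.SequenceMatcher` to compute deltas rather than the diff algorithm RCS itself uses; the delta format and the `ReverseDeltaHistory` class are hypothetical.

```python
import difflib


def make_delta(old_lines: list[str], new_lines: list[str]) -> list[tuple]:
    """Describe new_lines as copied line ranges of old_lines plus added lines."""
    ops: list[tuple] = []
    matcher = difflib.SequenceMatcher(a=old_lines, b=new_lines, autojunk=False)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            ops.append(("copy", i1, i2))
        elif tag in ("replace", "insert"):
            ops.append(("add", new_lines[j1:j2]))
        # "delete": the old lines i1:i2 are simply not copied.
    return ops


def apply_delta(old_lines: list[str], ops: list[tuple]) -> list[str]:
    """Rebuild the target lines from the source lines and a delta."""
    out: list[str] = []
    for op in ops:
        if op[0] == "copy":
            _, start, end = op
            out.extend(old_lines[start:end])
        else:
            out.extend(op[1])
    return out


class ReverseDeltaHistory:
    """Newest revision stored in full; each older one as a delta from its successor."""

    def __init__(self, first: list[str]) -> None:
        self.head = list(first)
        self.reverse_deltas: list[list[tuple]] = []  # [k] rebuilds revision k from k + 1

    def commit(self, new_lines: list[str]) -> None:
        new_lines = list(new_lines)
        self.reverse_deltas.append(make_delta(new_lines, self.head))
        self.head = new_lines

    def revision(self, k: int) -> list[str]:
        """Walk backwards from the newest revision, applying reverse deltas."""
        if not 0 <= k <= len(self.reverse_deltas):
            raise IndexError("no such revision")
        lines = self.head
        for d in reversed(self.reverse_deltas[k:]):
            lines = apply_delta(lines, d)
        return list(lines)
```

When successive revisions share most of their lines, each stored delta holds only a few added lines plus range references, which is why this approach suits source code and why binary files, whose changes are not line-oriented, are better stored whole.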