Data Redundancy

In computer main memory, auxiliary storage and computer buses, data redundancy is the existence of data that is additional to the actual data and permits correction of errors in stored or transmitted data. The additional data can simply be a complete copy of the actual data (a type of repetition code), or only select pieces of data that allow detection of errors and reconstruction of lost or damaged data up to a certain level. For example, by including computed check bits, ECC memory is capable of detecting and correcting single-bit errors within each memory word, while RAID 1 combines two hard disk drives (HDDs) into a logical storage unit that allows stored data to survive a complete failure of one drive. Data redundancy can also be used as a measure against silent data corruption; for example, file systems such as Btrfs and ZFS use data and metadata checksumming in combination with copies of stored data to detect silent data corruption and repair its effects. I ...
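The check-bit idea can be sketched with a Hamming(7,4) code, which adds three computed check bits to four data bits so that any single flipped bit can be located and corrected. This is only an illustration of the principle: the function names are hypothetical, and real ECC memory typically protects wider words with a different code.

```python
def hamming74_encode(data_bits):
    """Encode 4 data bits into a 7-bit codeword (bit positions 1..7)."""
    d1, d2, d3, d4 = data_bits
    p1 = d1 ^ d2 ^ d4  # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(codeword):
    """Correct up to one flipped bit; return (data_bits, error_position).

    error_position is 0 when no error was detected, otherwise the
    1-based position of the bit that was corrected.
    """
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    syndrome = s1 + 2 * s2 + 4 * s3
    if syndrome:
        c[syndrome - 1] ^= 1  # the syndrome is the position of the bad bit
    return [c[2], c[4], c[5], c[6]], syndrome


if __name__ == "__main__":
    word = hamming74_encode([1, 0, 1, 1])
    word[4] ^= 1  # simulate a single-bit error at position 5
    print(hamming74_decode(word))
```

The three extra bits are the redundancy: they carry no new information, but they let the decoder recover the original four bits after one error.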

Main Memory

Computer data storage or digital data storage is a technology consisting of computer components and recording media that are used to retain digital data. It is a core function and fundamental component of computers. The central processing unit (CPU) of a computer is what manipulates data by performing computations. In practice, almost all computers use a storage hierarchy, which puts fast but expensive and small storage options close to the CPU and slower but less expensive and larger options further away. Generally, the fast technologies are referred to as "memory", while slower persistent technologies are referred to as "storage". Even the first computer designs, Charles Babbage's Analytical Engine and Percy Ludgate's Analytical Machine, clearly distinguished between processing and memory (Babbage stored numbers as rotations of gears, while Ludgate stored numbers as displacements of rods in shuttles). This distinction was extended in the Von Neumann architecture, wh ...

Unnormalized Form

In database normalization, unnormalized form (UNF or 0NF), also known as an unnormalized relation or non-first normal form (N1NF or NF2), is a database data model (organization of data in a database) which does not meet any of the conditions of database normalization defined by the relational model. Database systems which support unnormalized data are sometimes called non-relational or NoSQL databases. In the relational model, unnormalized relations can be considered the starting point for a process of normalization. "Unnormalized form" should not be confused with denormalization, where normalization is deliberately compromised for selected tables in a relational database. History: In 1970, E. F. Codd proposed the relational data model, now widely accepted as the standard data model. At that time, office automation was the major use of data storage systems, which resulted in the proposal of many UNF/NF2 data models like the Schek model, Jaeschke models (non-recursive and recur ...

Data

Data are a collection of discrete or continuous values that convey information, describing the quantity, quality, fact, statistics, other basic units of meaning, or simply sequences of symbols that may be further interpreted formally. A datum is an individual value in a collection of data. Data are usually organized into structures such as tables that provide additional context and meaning, and may themselves be used as data in larger structures. Data may be used as variables in a computational process. Data may represent abstract ideas or concrete measurements. Data are commonly used in scientific research, economics, and virtually every other form of human organizational activity. Examples of data sets include price indices (such as the consumer price index), unemployment rates, literacy rates, and census data. In this context, data represent the raw facts and figures from which useful information can be extracted. Data are collected using technique ...

Computer Memory

Computer memory stores information, such as data and programs, for immediate use in the computer. The term "memory" is often synonymous with the terms "RAM", "main memory", or "primary storage". Archaic synonyms for main memory include "core" (for magnetic core memory) and "store". Main memory operates at a high speed compared to mass storage, which is slower but less expensive per bit and higher in capacity. Besides storing opened programs and data being actively processed, computer memory serves as a mass storage cache and write buffer to improve both reading and writing performance. Operating systems borrow RAM capacity for caching so long as it is not needed by running software. If needed, contents of the computer memory can be transferred to storage; a common way of doing this is through a memory management technique called "virtual memory". Modern computer memory is implemented as semiconductor memory, where data is stored within memory cell (com ...

Redundancy (information Theory)

Redundancy or redundant may refer to:

Language
* Redundancy (linguistics), information that is expressed more than once

Engineering and computer science
* Data redundancy, database systems which have a field that is repeated in two or more tables
* Logic redundancy, a digital gate network containing circuitry that does not affect the static logic function
* Redundancy (engineering), the duplication of critical components or functions of a system with the intention of increasing reliability
* Redundancy (information theory), the number of bits used to transmit a message minus the number of bits of actual information in the message
* Redundancy in total quality management, quality which exceeds the required quality level, creating unnecessarily high costs
* The same task executed by several different methods in a user interface

In the industrial design field of human–computer interaction, a user interface (UI) is the space where interactions between humans and machines o ...

Redundancy (engineering)

In engineering and systems theory, redundancy is the intentional duplication of critical components or functions of a system with the goal of increasing reliability of the system, usually in the form of a backup or fail-safe, or to improve actual system performance, such as in the case of GNSS receivers, or multi-threaded computer processing. In many safety-critical systems, such as fly-by-wire and hydraulic systems in aircraft, some parts of the control system may be triplicated, which is formally termed triple modular redundancy (TMR). An error in one component may then be out-voted by the other two. In a triply redundant system, the system has three subcomponents, all three of which must fail before the system fails. Since each one rarely fails, and the subcomponents are designed to preclude common failure modes (which can then be modelled as independent failure), the probability of all three failing is calculated to be extraordinarily small; it is often outweighed ...
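A minimal sketch of the out-voting step and of the independent-failure estimate follows. The names `tmr_vote` and `all_three_fail_probability` are hypothetical, and the probability function relies on the independence assumption stated above, which a real safety analysis would need to justify.

```python
from collections import Counter


def tmr_vote(a, b, c):
    """Return the value that at least two of the three replicas agree on."""
    value, count = Counter([a, b, c]).most_common(1)[0]
    if count < 2:
        raise ValueError("no majority: all three replicas disagree")
    return value


def all_three_fail_probability(p_single):
    """Probability that three independent components all fail,
    given that each one fails with probability p_single."""
    return p_single ** 3


if __name__ == "__main__":
    # One replica reports a wrong reading and is out-voted by the other two.
    print(tmr_vote(42, 42, 17))
    print(all_three_fail_probability(0.001))
```

The voter masks a single faulty replica; it cannot help when two replicas fail in the same way, which is why common failure modes have to be designed out.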

End-to-end Data Protection

Data corruption refers to errors in computer data that occur during writing, reading, storage, transmission, or processing, which introduce unintended changes to the original data. Computer, transmission, and storage systems use a number of measures to provide end-to-end data integrity, or lack of errors. In general, when data corruption occurs, a file containing that data will produce unexpected results when accessed by the system or the related application. Results could range from a minor loss of data to a system crash. For example, if a document file is corrupted, a person who tries to open that file with a document editor may get an error message; the file might not open at all, or might open with some of the data corrupted (or in some cases, completely corrupted, leaving the document unintelligible). The adjacent image is a corrupted image file in which most of the information has been lost. Some types of malware may intentionally corrupt files as part of their ...
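One common integrity measure is a checksum that is computed where the data originates and verified where it is consumed. The sketch below uses CRC-32 from Python's standard `zlib` module; the frame layout (payload followed by a 4-byte checksum) and the names `protect` and `verify` are assumptions made for illustration, not a description of any particular system.

```python
import zlib


def protect(payload: bytes) -> bytes:
    """Append a 4-byte big-endian CRC-32 of the payload."""
    return payload + zlib.crc32(payload).to_bytes(4, "big")


def verify(frame: bytes) -> bytes:
    """Return the payload if its checksum matches, else raise ValueError."""
    if len(frame) < 4:
        raise ValueError("frame too short to contain a checksum")
    payload, stored = frame[:-4], int.from_bytes(frame[-4:], "big")
    if zlib.crc32(payload) != stored:
        raise ValueError("checksum mismatch: data was corrupted")
    return payload


if __name__ == "__main__":
    frame = bytearray(protect(b"quarterly report"))
    frame[3] ^= 0x01  # flip one bit somewhere between source and destination
    try:
        verify(bytes(frame))
    except ValueError as error:
        print(error)
```

A checksum like this only detects corruption; repairing it requires redundant data such as a second copy or an error-correcting code.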

Data Scrubbing

Data scrubbing is an error correction technique that uses a background task to periodically inspect main memory or storage for errors, then corrects detected errors using redundant data in the form of different checksums or copies of data. Data scrubbing reduces the likelihood that single correctable errors will accumulate, leading to reduced risks of uncorrectable errors. Data integrity is a high-priority concern in writing, reading, storage, transmission, or processing of data in computer operating systems and in computer storage and data transmission systems. However, only a few of the currently existing and used file systems provide sufficient protection against data corruption. To address this issue, data scrubbing provides routine checks of all inconsistencies in data and, in general, prevention of hardware or software failure. This "scrubbing" feature occurs commonly in memory, disk arrays, file systems, or FPGAs as a mechanism of error detection and correction. RAID ...
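A single scrub pass can be sketched as follows, assuming each block is kept as several copies plus one stored SHA-256 checksum. The block layout and the `scrub` function are hypothetical; real scrubbers in memory controllers, RAID arrays, or file systems work on their own on-media formats.

```python
import hashlib


def checksum(data: bytes) -> str:
    """Hex SHA-256 digest used as the stored checksum."""
    return hashlib.sha256(data).hexdigest()


def scrub(blocks):
    """Check every copy of every block against its stored checksum.

    Each block is a dict {"copies": [bytes, ...], "sum": str}.
    Bad copies are overwritten from a good copy of the same block.
    Returns (number_of_copies_repaired, indexes_of_unrecoverable_blocks).
    """
    repaired = 0
    unrecoverable = []
    for index, block in enumerate(blocks):
        good = next(
            (c for c in block["copies"] if checksum(c) == block["sum"]), None
        )
        if good is None:
            unrecoverable.append(index)  # every copy is damaged
            continue
        for i, copy in enumerate(block["copies"]):
            if checksum(copy) != block["sum"]:
                block["copies"][i] = good
                repaired += 1
    return repaired, unrecoverable


if __name__ == "__main__":
    payload = b"sensor log"
    blocks = [{"copies": [payload, payload], "sum": checksum(payload)}]
    blocks[0]["copies"][1] = b"sensor l0g"  # silent corruption of one copy
    print(scrub(blocks))
```

Running such a pass periodically repairs single bad copies before a second error in the same block can make it unrecoverable.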

Data Deduplication

In computing, data deduplication is a technique for eliminating duplicate copies of repeating data. Successful implementation of the technique can improve storage utilization, which may in turn lower capital expenditure by reducing the overall amount of storage media required to meet storage capacity needs. It can also be applied to network data transfers to reduce the number of bytes that must be sent. The deduplication process requires comparison of data 'chunks' (also known as 'byte patterns') which are unique, contiguous blocks of data. These chunks are identified and stored during a process of analysis, and compared to other chunks within existing data. Whenever a match occurs, the redundant chunk is replaced with a small reference that points to the stored chunk. Given that the same byte pattern may occur dozens, hundreds, or even thousands of times (the match frequency is dependent on the chunk size), the amount of data that must be stored or transferred can be greatly reduce ...
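The chunk-and-reference process can be sketched with fixed-size chunks identified by their SHA-256 digest. Both choices are assumptions for the example (many systems use variable-size chunking), and `deduplicate` and `reconstruct` are hypothetical names.

```python
import hashlib


def deduplicate(data: bytes, chunk_size: int = 4):
    """Split data into fixed-size chunks and store each distinct chunk once.

    Returns (store, recipe): store maps digest -> chunk bytes, and recipe
    is the ordered list of digests needed to rebuild the original data.
    """
    store = {}
    recipe = []
    for offset in range(0, len(data), chunk_size):
        chunk = data[offset:offset + chunk_size]
        digest = hashlib.sha256(chunk).hexdigest()
        store.setdefault(digest, chunk)  # keep only the first occurrence
        recipe.append(digest)            # later matches become references
    return store, recipe


def reconstruct(store, recipe) -> bytes:
    """Rebuild the original data by following the references in order."""
    return b"".join(store[digest] for digest in recipe)


if __name__ == "__main__":
    data = b"ABCDABCDABCDWXYZ"
    store, recipe = deduplicate(data)
    print(len(recipe), "chunks referenced,", len(store), "chunks stored")
    print(reconstruct(store, recipe) == data)
```

Smaller chunks tend to produce more matches but also more references to keep track of, which is the chunk-size dependence mentioned above.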

Data Maintenance

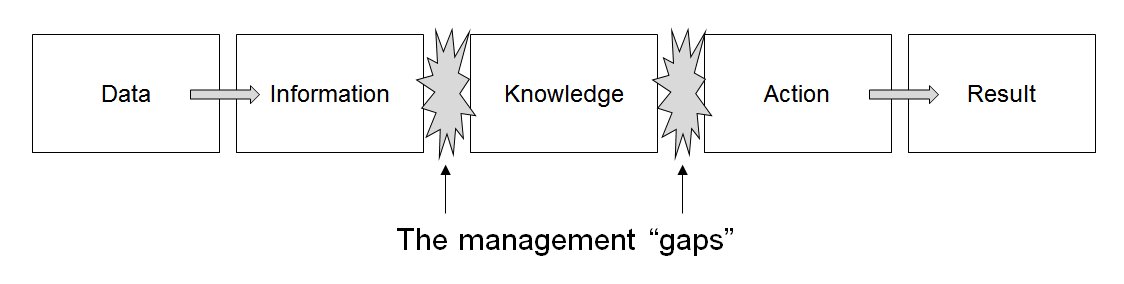

Data management comprises all disciplines related to handling data as a valuable resource; it is the practice of managing an organization's data so it can be analyzed for decision making. Concept: The concept of data management emerged alongside the evolution of computing technology. In the 1950s, as computers became more prevalent, organizations began to grapple with the challenge of organizing and storing data efficiently. Early methods relied on punch cards and manual sorting, which were labor-intensive and prone to errors. The introduction of database management systems in the 1970s marked a significant milestone, enabling structured storage and retrieval of data. By the 1980s, relational database models revolutionized data management, emphasizing the importance of data as an asset and fostering a data-centric mindset in business. This era also saw the rise of data governance practices, which prioritized the organization and regulation of data to ensure quality and complian ...

Database Normalization

Database normalization is the process of structuring a relational database in accordance with a series of so-called normal forms in order to reduce data redundancy and improve data integrity. It was first proposed by British computer scientist Edgar F. Codd as part of his relational model. Normalization entails organizing the columns (attributes) and tables (relations) of a database to ensure that their dependencies are properly enforced by database integrity constraints. It is accomplished by applying some formal rules either by a process of synthesis (creating a new database design) or decomposition (improving an existing database design). Objectives: A basic objective of the first normal form defined by Codd in 1970 was to permit data to be queried and manipulated using a "universal data sub-language" grounded in first-order logic. An example of such a language is SQL, though it is one that Codd regarded as seriously flawed. The objectives of normalization ...
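Decomposition can be illustrated in plain Python with a made-up flat table in which each customer's city is repeated on every order row. The table contents and the names `decompose` and `rejoin` are hypothetical; the sketch assumes that a customer determines exactly one city.

```python
# Flat table: (order_id, customer, customer_city). The city is stored
# redundantly on every order placed by the same customer.
flat_rows = [
    ("o1", "alice", "Berlin"),
    ("o2", "alice", "Berlin"),
    ("o3", "bob", "Paris"),
]


def decompose(rows):
    """Split the flat rows into customers {customer: city} and
    orders [(order_id, customer)], rejecting inconsistent input."""
    customers = {}
    orders = []
    for order_id, customer, city in rows:
        if customers.setdefault(customer, city) != city:
            raise ValueError(f"conflicting cities for {customer!r}")
        orders.append((order_id, customer))
    return customers, orders


def rejoin(customers, orders):
    """Join the two tables back into the original flat rows."""
    return [(order_id, c, customers[c]) for order_id, c in orders]


if __name__ == "__main__":
    customers, orders = decompose(flat_rows)
    print(customers)
    print(rejoin(customers, orders) == flat_rows)
```

After the split, each city is stored once per customer, so an update cannot leave two order rows disagreeing about where the same customer lives.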

Data Corruption

Data corruption refers to errors in computer data that occur during writing, reading, storage, transmission, or processing, which introduce unintended changes to the original data. Computer, transmission, and storage systems use a number of measures to provide end-to-end data integrity, or lack of errors. In general, when data corruption occurs, a file containing that data will produce unexpected results when accessed by the system or the related application. Results could range from a minor loss of data to a system crash. For example, if a document file is corrupted, a person who tries to open that file with a document editor may get an error message; the file might not open at all, or might open with some of the data corrupted (or in some cases, completely corrupted, leaving the document unintelligible). The adjacent image is a corrupted image file in which most of the information has been lost. Some types of malware may intentional ...