|

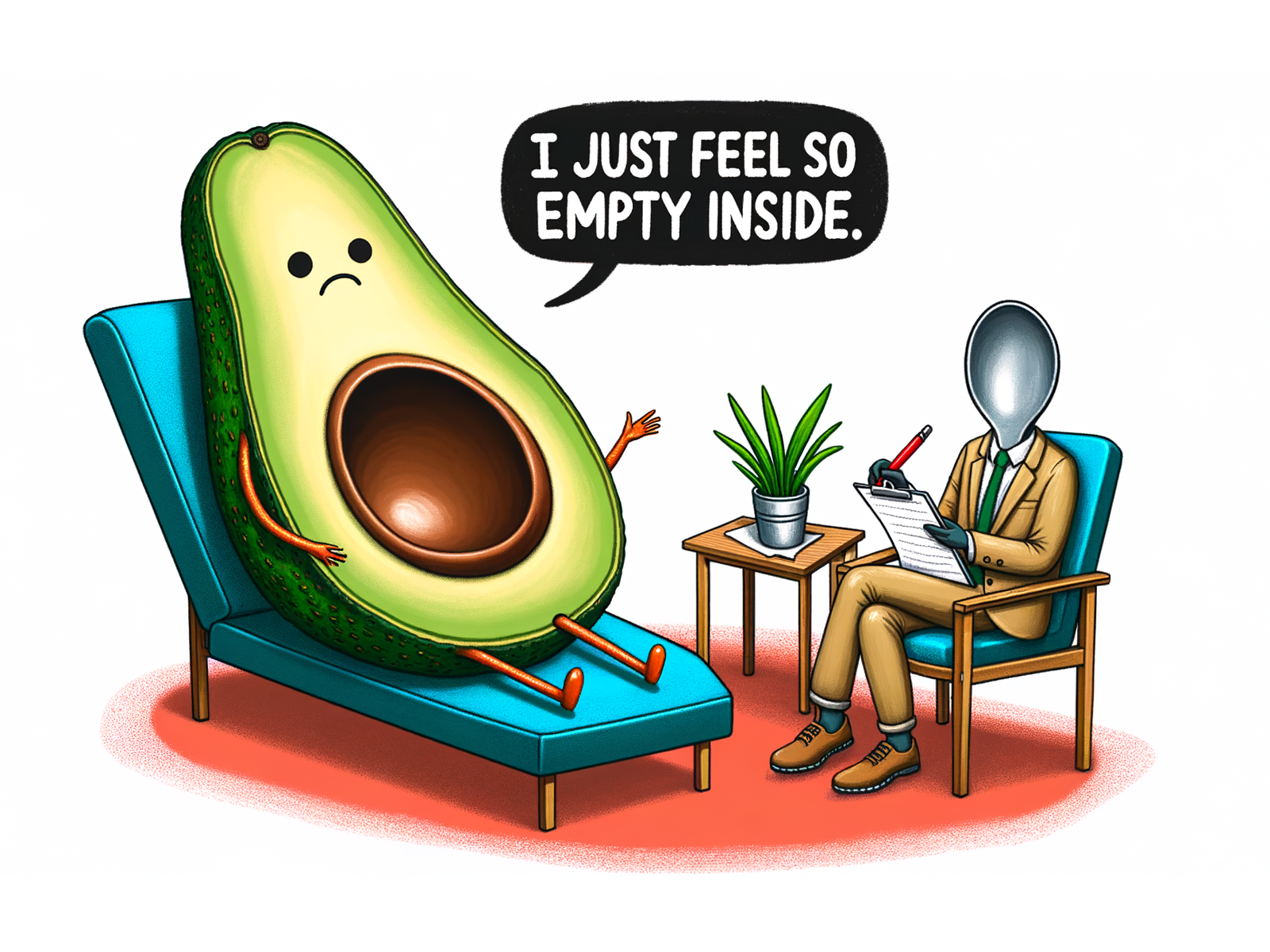

Dall-E

DALL-E, DALL-E 2, and DALL-E 3 (stylised DALL·E) are text-to-image models developed by OpenAI using deep learning methodologies to generate digital images from natural language descriptions known as Prompt engineering, ''prompts''. The first version of DALL-E was announced in January 2021. In the following year, its successor DALL-E 2 was released. DALL-E 3 was released natively into ChatGPT for ChatGPT Plus and ChatGPT Enterprise customers in October 2023, with availability via OpenAI's API and "Labs" platform provided in early November. Microsoft implemented the model in Bing's Image Creator tool and plans to implement it into their Designer app. With Bing's Image Creator tool, Microsoft Copilot runs on DALL-E 3. In March 2025, DALL-E-3 was replaced in ChatGPT by GPT-4o#GPT Image 1, GPT Image 1's native image-generation capabilities. History and background DALL-E was revealed by OpenAI in a blog post on 5 January 2021, and uses a version of GPT-3 modified to generate image ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Text-to-image Model

A text-to-image model is a machine learning model which takes an input natural language prompt and produces an image matching that description. Text-to-image models began to be developed in the mid-2010s during the beginnings of the AI boom, as a result of advances in deep neural networks. In 2022, the output of state-of-the-art text-to-image models—such as OpenAI's DALL-E 2, Google Brain's Imagen, Stability AI's Stable Diffusion, and Midjourney—began to be considered to approach the quality of real photographs and human-drawn art. Text-to-image models are generally latent diffusion models, which combine a language model, which transforms the input text into a latent representation, and a generative image model, which produces an image conditioned on that representation. The most effective models have generally been trained on massive amounts of image and text data scraped from the web. History Before the rise of deep learning, attempts to build text-to-image mo ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Diffusion Model

In machine learning, diffusion models, also known as diffusion-based generative models or score-based generative models, are a class of latent variable model, latent variable generative model, generative models. A diffusion model consists of two major components: the forward diffusion process, and the reverse sampling process. The goal of diffusion models is to learn a diffusion process for a given dataset, such that the process can generate new elements that are distributed similarly as the original dataset. A diffusion model models data as generated by a diffusion process, whereby a new datum performs a Wiener process, random walk with drift through the space of all possible data. A trained diffusion model can be sampled in many ways, with different efficiency and quality. There are various equivalent formalisms, including Markov chains, denoising diffusion probabilistic models, noise conditioned score networks, and stochastic differential equations. They are typically trained ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

OpenAI

OpenAI, Inc. is an American artificial intelligence (AI) organization founded in December 2015 and headquartered in San Francisco, California. It aims to develop "safe and beneficial" artificial general intelligence (AGI), which it defines as "highly autonomous systems that outperform humans at most economically valuable work". As a leading organization in the ongoing AI boom, OpenAI is known for the GPT family of large language models, the DALL-E series of text-to-image models, and a text-to-video model named Sora (text-to-video model), Sora. Its release of ChatGPT in November 2022 has been credited with catalyzing widespread interest in generative AI. The organization has a complex corporate structure. As of April 2025, it is led by the Nonprofit organization, non-profit OpenAI, Inc., Delaware General Corporation Law, registered in Delaware, and has multiple for-profit subsidiaries including OpenAI Holdings, LLC and OpenAI Global, LLC. Microsoft has invested US$13 billion ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

GPT-4o

GPT-4o ("o" for "omni") is a multilingual, multimodal generative pre-trained transformer developed by OpenAI and released in May 2024. It can process and generate text, images and audio. GPT-4o is free, but ChatGPT Plus subscribers have higher usage limits. GPT-4o's audio-generation capabilities were used in ChatGPT's Advanced Voice Mode. In OpenAI's application programming interface (API), GPT-4o is faster and cheaper than its predecessor, GPT-4 Turbo. On July 18, 2024, OpenAI released GPT-4o mini, a smaller version of GPT-4o which replaced GPT-3.5 Turbo on the ChatGPT interface. GPT-4o's ability to generate images was released later, in March 2025, when it replaced DALL-E 3 in ChatGPT. Background Multiple versions of GPT-4o were originally secretly launched under different names on Large Model Systems Organization's (LMSYS) Chatbot Arena as three different models. These three models were called gpt2-chatbot, im-a-good-gpt2-chatbot, and im-also-a-good-gpt2-chatbot. On 7 Ma ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

ChatGPT

ChatGPT is a generative artificial intelligence chatbot developed by OpenAI and released on November 30, 2022. It uses large language models (LLMs) such as GPT-4o as well as other Multimodal learning, multimodal models to create human-like responses in text, speech, and images. It has access to features such as searching the web, using apps, and running programs. It is credited with accelerating the AI boom, an ongoing period of rapid investment in and public attention to the field of artificial intelligence (AI). Some observers have raised concern about the potential of ChatGPT and similar programs to displace human intelligence, enable plagiarism, or fuel misinformation. ChatGPT is built on OpenAI's proprietary series of generative pre-trained transformer (GPT) models and is Fine-tuning (machine learning), fine-tuned for conversational applications using a combination of supervised learning and reinforcement learning from human feedback. Successive user AI prompt, prompts an ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Transformer (machine Learning Model)

The transformer is a deep learning architecture based on the multi-head attention mechanism, in which text is converted to numerical representations called tokens, and each token is converted into a vector via lookup from a word embedding table. At each layer, each token is then contextualized within the scope of the context window with other (unmasked) tokens via a parallel multi-head attention mechanism, allowing the signal for key tokens to be amplified and less important tokens to be diminished. Transformers have the advantage of having no recurrent units, therefore requiring less training time than earlier recurrent neural architectures (RNNs) such as long short-term memory (LSTM). Later variations have been widely adopted for training large language models (LLM) on large (language) datasets. The modern version of the transformer was proposed in the 2017 paper " Attention Is All You Need" by researchers at Google. Transformers were first developed as an improvement ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Microsoft Copilot

Microsoft Copilot (or simply Copilot) is a generative artificial intelligence chatbot developed by Microsoft. Based on the GPT-4 series of large language models, it was launched in 2023 as Microsoft's primary replacement for the discontinued Cortana (virtual assistant), Cortana. The service was introduced in February 2023 under the name ''Bing Chat'', as a built-in feature for Microsoft Bing and Microsoft Edge. Over the course of 2023, Microsoft began to unify the ''Copilot'' branding across its various chatbot products, cementing the "copilot" analogy. At its Microsoft Build#2023, Build 2023 conference, Microsoft announced its plans to integrate Copilot into Windows 11, allowing users to access it directly through the taskbar. In January 2024, a dedicated Copilot key was announced for Windows keyboards. Copilot utilizes the Microsoft Prometheus model, built upon OpenAI's GPT-4 foundational large language model, which in turn has been Fine-tuning (deep learning), fine-tuned us ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Stable Diffusion

Stable Diffusion is a deep learning, text-to-image model released in 2022 based on Diffusion model, diffusion techniques. The generative artificial intelligence technology is the premier product of Stability AI and is considered to be a part of the ongoing AI boom, artificial intelligence boom. It is primarily used to generate detailed images conditioned on text descriptions, though it can also be applied to other tasks such as inpainting, outpainting, and generating image-to-image translations guided by a prompt engineering, text prompt. Its development involved researchers from the CompVis Group at Ludwig Maximilian University of Munich and Runway (company), Runway with a computational donation from Stability and training data from non-profit organizations. Stable Diffusion is a latent diffusion model, a kind of deep generative artificial neural network. Its code and model weights have been released Source-available software, publicly, and an optimized version can run on most ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Prompt Engineering

Prompt engineering is the process of structuring or crafting an instruction in order to produce the best possible output from a generative artificial intelligence (AI) model. A ''prompt'' is natural language text describing the task that an AI should perform. A prompt for a text-to-text Large language model, language model can be a query, a command, or a longer statement including context, instructions, and conversation history. Prompt engineering may involve phrasing a query, specifying a style, choice of words and grammar, providing relevant context, or describing a character for the AI to mimic. When communicating with a text-to-image or a text-to-audio model, a typical prompt is a description of a desired output such as "a high-quality photo of an astronaut riding a horse" or "Lo-fi slow BPM electro chill with organic samples". Prompting a text-to-image model may involve adding, removing, or emphasizing words to achieve a desired subject, style, layout, lighting, and aestheti ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Microsoft Bing

Microsoft Bing (also known simply as Bing) is a search engine owned and operated by Microsoft. The service traces its roots back to Microsoft's earlier search engines, including MSN Search, Windows Live Search, and Live Search. Bing offers a broad spectrum of search services, encompassing web, Bing Videos, video, image, and Bing Maps, map search products, all developed using ASP.NET. The transition from Live Search to Bing was announced by Microsoft CEO Steve Ballmer on May 28, 2009, at the ''All Things Digital'' conference in San Diego, California. The official release followed on June 3, 2009. Bing introduced several notable features at its inception, such as search suggestions during query input and a list of related searches, known as the 'Explore pane'. These features leveraged semantic technology from Powerset (company), Powerset, a company Microsoft acquired in 2008. Microsoft also struck a deal with Yahoo! that led to Bing powering Yahoo! Search. Microsoft made signif ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Deep Learning

Deep learning is a subset of machine learning that focuses on utilizing multilayered neural networks to perform tasks such as classification, regression, and representation learning. The field takes inspiration from biological neuroscience and is centered around stacking artificial neurons into layers and "training" them to process data. The adjective "deep" refers to the use of multiple layers (ranging from three to several hundred or thousands) in the network. Methods used can be either supervised, semi-supervised or unsupervised. Some common deep learning network architectures include fully connected networks, deep belief networks, recurrent neural networks, convolutional neural networks, generative adversarial networks, transformers, and neural radiance fields. These architectures have been applied to fields including computer vision, speech recognition, natural language processing, machine translation, bioinformatics, drug design, medical image analysis, c ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |