
Compressibility (computer Science)

In algorithmic information theory (a subfield of computer science and mathematics), the Kolmogorov complexity of an object, such as a piece of text, is the length of a shortest computer program (in a predetermined programming language) that produces the object as output. It is a measure of the computational resources needed to specify the object, and is also known as algorithmic complexity, Solomonoff–Kolmogorov–Chaitin complexity, program-size complexity, descriptive complexity, or algorithmic entropy. It is named after Andrey Kolmogorov, who first published on the subject in 1963, and is a generalization of classical information theory. The notion of Kolmogorov complexity can be used to state and prove impossibility results akin to Cantor's diagonal argument, Gödel's incompleteness theorem, and Turing's halting problem. In particular, no program *P* computing a lower bound for each text's Kolmogorov complexity can return a value es ...
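Kolmogorov complexity itself is not computable, but the idea of "length of a short description" can be illustrated with an off-the-shelf compressor. The sketch below is only an illustration under that assumption: the helper name `compressed_length` and the two sample inputs are hypothetical, and the compressed size is at best a rough upper-bound proxy (it ignores the fixed size of the decompressor and depends on the chosen compressor).

```python
import random
import zlib


def compressed_length(data: bytes) -> int:
    """Size in bytes of the zlib-compressed form of `data`.

    This is a computable stand-in for "length of a short description";
    it is not the Kolmogorov complexity, which cannot be computed.
    """
    return len(zlib.compress(data, 9))


# A highly regular text: "ab" repeated 5000 times (10,000 bytes).
regular = b"ab" * 5000

# A pseudo-random text of the same length, generated from a fixed seed
# so that the example is reproducible.
rng = random.Random(0)
noisy = bytes(rng.getrandbits(8) for _ in range(10_000))

if __name__ == "__main__":
    print("regular:", len(regular), "->", compressed_length(regular))
    print("noisy:  ", len(noisy), "->", compressed_length(noisy))
```

The regular text shrinks to a small fraction of its size, while the pseudo-random text barely shrinks at all, which matches the intuition that regular objects have short descriptions.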

Pascal (programming Language)

Pascal is an imperative and procedural programming language, designed by Niklaus Wirth as a small, efficient language intended to encourage good programming practices using structured programming and data structuring. It is named in honour of the French mathematician, philosopher and physicist Blaise Pascal. Pascal was developed on the pattern of the ALGOL 60 language. Wirth was involved in the process to improve the language as part of the ALGOL X efforts and proposed a version named ALGOL W. This was not accepted, and the ALGOL X process bogged down. In 1968, Wirth decided to abandon the ALGOL X process and further improve ALGOL W, releasing this as Pascal in 1970. On top of ALGOL's scalars and arrays, Pascal enables defining complex datatypes and building dynamic and recursive data structures such as lists, trees and graphs. Pascal has strong typing on all objects, which means that one type of data cannot be converted to or interpreted as another without explicit conversi ...

Matthew Effect (sociology)

The Matthew effect of accumulated advantage, Matthew principle, or Matthew effect, is the tendency of individuals to accrue social or economic success in proportion to their initial level of popularity, friends, wealth, etc. It is sometimes summarized by the adage "the rich get richer and the poor get poorer". The term was coined by sociologists Robert K. Merton and Harriet Zuckerman in 1968 and takes its name from the Parable of the Talents in the biblical Gospel of Matthew. The Matthew effect may largely be explained by preferential attachment, whereby wealth or credit is distributed among individuals according to how much they already have. This has the net effect of making it increasingly difficult for low-ranked individuals to increase their totals because they have fewer resources to risk over time, and increasingly easy for high-ranked individuals to preserve a large total because they have a large amount to risk. The study of Matthew effects was initially focused primarily ...

Ming Li

Ming Li is a Canadian computer scientist, known for his fundamental contributions to Kolmogorov complexity, bioinformatics, machine learning theory, and analysis of algorithms. Li is currently a professor of Computer Science at the David R. Cheriton School of Computer Science at the University of Waterloo. He holds a Tier I Canada Research Chair in Bioinformatics. In addition to academic achievements, his research has led to the founding of two independent companies. Education: Li received a Master of Science degree (Computer Science) from Wayne State University in 1980 and earned a Doctor of Philosophy degree (Computer Science) under the supervision of Juris Hartmanis, from Cornell University in 1985. His post-doctoral research was conducted at Harvard University under the supervision of Leslie Valiant. Career: Paul Vitanyi and Li pioneered Kolmogorov complexity theory and applications, and co-authored the textbook ''An Introduction to Kolmogorov Complexity and Its Applications' ...

Gregory Chaitin

Gregory John Chaitin (born 25 June 1947) is an Argentine-American mathematician and computer scientist. Beginning in the late 1960s, Chaitin made contributions to algorithmic information theory and metamathematics, in particular a computer-theoretic result equivalent to Gödel's incompleteness theorem. He is considered to be one of the founders of what is today known as algorithmic (Solomonoff–Kolmogorov–Chaitin, Kolmogorov or program-size) complexity together with Andrei Kolmogorov and Ray Solomonoff. Along with the works of e.g. Solomonoff, Kolmogorov, Martin-Löf, and Leonid Levin, algorithmic information theory became a foundational part of theoretical computer science, information theory, and mathematical logic. It is a common subject in several computer science curricula. Besides computer scientists, Chaitin's work draws the attention of many philosophers and mathematicians to fundamental problems in mathematical creativity and digital philosophy. Mathematics and comp ...

Multiple Discovery

Multiple may refer to:

Economics:
* Multiple finance, a method used to analyze stock prices
* Multiples of the price-to-earnings (P/E) ratio
* Chain stores, also referred to as "multiples"
* Box office multiple, the ratio of a film's total gross to that of its opening weekend

Sociology:
* Multiples (sociology), a theory in sociology of science by Robert K. Merton

Science:
* Multiple (mathematics), multiples of numbers
* List of multiple discoveries, instances of scientists, working independently of each other, reaching similar findings
* Multiple birth, because having twins is sometimes called having "multiples"
* Multiple sclerosis, an inflammatory disease
* Parlance for people with multiple identities, sometimes called "multiples"; often theorized as having dissociative identity disorder

Printing:
* Printmaking, where *multiple* is often used as a term for a print, especially in the US
* Artist's multiple, series of identical prints, collages or objects by an artist, subverting the ...

Algorithmic Probability

In algorithmic information theory, algorithmic probability, also known as Solomonoff probability, is a mathematical method of assigning a prior probability to a given observation. It was invented by Ray Solomonoff in the 1960s. It is used in inductive inference theory and analyses of algorithms. In his general theory of inductive inference, Solomonoff uses the method together with Bayes' rule to obtain probabilities of prediction for an algorithm's future outputs. In the mathematical formalism used, the observations have the form of finite binary strings viewed as outputs of Turing machines, and the universal prior is a probability distribution over the set of finite binary strings calculated from a probability distribution over programs (that is, inputs to a universal Turing machine). The prior is universal in the Turing-computability sense, i.e. no string has zero probability. It is not computable, but it can be approximated. Overview: Algorithmic probability is the main ingre ...
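The phrase "calculated from a probability distribution over programs" can be made concrete with one common way of writing the universal prior. The notation here is an assumption for illustration and does not come from the text above: $U$ is a fixed universal prefix-free Turing machine, $p$ ranges over binary programs, and $|p|$ is the length of $p$ in bits.

$$
m(x) \;=\; \sum_{p \,:\, U(p) = x} 2^{-|p|}
$$

Under this reading, each program that outputs $x$ contributes a weight that halves with every extra bit of program length, so strings with short programs receive most of the probability mass. Every finite string is output by at least one program, which is why no string gets probability zero.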

Ray Solomonoff

Ray Solomonoff (July 25, 1926 – December 7, 2009) was the inventor of algorithmic probability and of the General Theory of Inductive Inference (also known as Universal Inductive Inference) (Samuel Rathmanner and Marcus Hutter, "A philosophical treatise of universal induction", Entropy, 13(6):1076–1136, 2011), and was a founder of algorithmic information theory. He was an originator of the branch of artificial intelligence based on machine learning, prediction and probability. He circulated the first report on non-semantic machine learning in 1956 ("An Inductive Inference Machine", Dartmouth College, N.H., version of Aug. 14, 1956). Solomonoff first described algorithmic probability in 1960, publishing the theorem that launched Kolmogorov complexity and algorithmic information theory. He first described these results at a conference at Caltech in 1960, and in a report, Feb. 1960, "A Preliminary Report on a General Theory of Inductive Inference." He clar ...

Data Structure

In computer science, a data structure is a data organization, management, and storage format that is usually chosen for efficient access to data. More precisely, a data structure is a collection of data values, the relationships among them, and the functions or operations that can be applied to the data, i.e., it is an algebraic structure about data. Usage: Data structures serve as the basis for abstract data types (ADT). The ADT defines the logical form of the data type. The data structure implements the physical form of the data type. Different types of data structures are suited to different kinds of applications, and some are highly specialized to specific tasks. For example, relational databases commonly use B-tree indexes for data retrieval, while compiler implementations usually use hash tables to look up identifiers. Data structures provide a means to manage large amounts of data efficiently for uses such as large databases and internet indexing services. Usually, ...
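The split between an ADT (logical form) and a data structure (physical form) can be sketched in a few lines. This is a minimal illustration, not a reference implementation: the names `Stack`, `ArrayStack`, `LinkedStack` and `drain` are hypothetical and chosen only for this example.

```python
from abc import ABC, abstractmethod
from typing import Any, Iterable, List, Optional, Tuple


class Stack(ABC):
    """Abstract data type: states what a stack does, not how it is stored."""

    @abstractmethod
    def push(self, item: Any) -> None: ...

    @abstractmethod
    def pop(self) -> Any: ...

    @abstractmethod
    def is_empty(self) -> bool: ...


class ArrayStack(Stack):
    """Physical form 1: a dynamic array (a Python list)."""

    def __init__(self) -> None:
        self._items: List[Any] = []

    def push(self, item: Any) -> None:
        self._items.append(item)

    def pop(self) -> Any:
        if not self._items:
            raise IndexError("pop from empty stack")
        return self._items.pop()

    def is_empty(self) -> bool:
        return not self._items


class LinkedStack(Stack):
    """Physical form 2: a singly linked chain of (value, rest) pairs."""

    def __init__(self) -> None:
        self._head: Optional[Tuple[Any, Any]] = None

    def push(self, item: Any) -> None:
        self._head = (item, self._head)

    def pop(self) -> Any:
        if self._head is None:
            raise IndexError("pop from empty stack")
        item, self._head = self._head
        return item

    def is_empty(self) -> bool:
        return self._head is None


def drain(stack: Stack, items: Iterable[Any]) -> List[Any]:
    """Push every item, then pop until empty; uses only the ADT operations."""
    for item in items:
        stack.push(item)
    popped = []
    while not stack.is_empty():
        popped.append(stack.pop())
    return popped
```

Code such as `drain` depends only on the ADT, so either implementation can be swapped in without changing the caller; the choice between them is a question of storage and performance.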

Algorithmic Information Theory

Algorithmic information theory (AIT) is a branch of theoretical computer science that concerns itself with the relationship between computation and information of computably generated objects (as opposed to stochastically generated), such as strings or any other data structure. In other words, it is shown within algorithmic information theory that computational incompressibility "mimics" (except for a constant that only depends on the chosen universal programming language) the relations or inequalities found in information theory. According to Gregory Chaitin, it is "the result of putting Shannon's information theory and Turing's computability theory into a cocktail shaker and shaking vigorously." Besides the formalization of a universal measure for irreducible information content of computably generated objects, some main achievements of AIT were to show that: in fact algorithmic complexity follows (in the self-delimited case) the same inequalities (except for a constant) tha ...

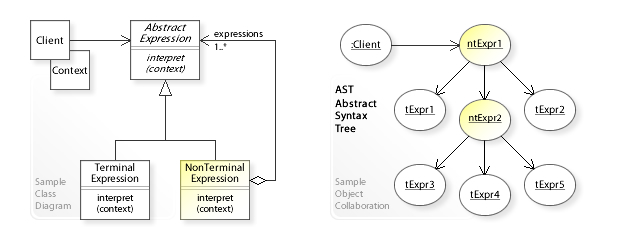

Interpreter (computing)

In computer science, an interpreter is a computer program that directly executes instructions written in a programming or scripting language, without requiring them to have been compiled into a machine language program beforehand. An interpreter generally uses one of the following strategies for program execution:

1. Parse the source code and perform its behavior directly;
2. Translate source code into some efficient intermediate representation or object code and immediately execute that;
3. Explicitly execute stored precompiled bytecode made by a compiler and matched with the interpreter's virtual machine.

Early versions of the Lisp programming language and minicomputer and microcomputer BASIC dialects would be examples of the first type. Perl, Raku, Python, MATLAB, and Ruby are examples of the second, while UCSD Pascal is an example of the third type. Source programs are compiled ahead of time and stored as machine independent code, which is then linked at run-time and executed by ...
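The first strategy, parsing the source and performing its behavior directly, can be sketched with a toy language. Everything here is an assumption made for illustration: the language (space-separated postfix arithmetic over integers) and the function name `run` are hypothetical and are not taken from any of the interpreters named above.

```python
import operator
from typing import Callable, Dict, List

# Binary operators understood by the toy language.
OPERATORS: Dict[str, Callable[[int, int], int]] = {
    "+": operator.add,
    "-": operator.sub,
    "*": operator.mul,
}


def run(source: str) -> List[int]:
    """Interpret a postfix program and return the final operand stack.

    Each token is read from the source text and acted on immediately;
    no intermediate representation or bytecode is produced.
    """
    stack: List[int] = []
    for token in source.split():
        if token in OPERATORS:
            if len(stack) < 2:
                raise ValueError(f"operator {token!r} needs two operands")
            right = stack.pop()
            left = stack.pop()
            stack.append(OPERATORS[token](left, right))
        else:
            # Anything that is not an operator must be an integer literal.
            stack.append(int(token))
    return stack
```

For example, `run("2 3 + 4 *")` pushes 2 and 3, replaces them with their sum, pushes 4, and multiplies, leaving a single value on the stack. The second and third strategies would add a translation step before any execution happens.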

Up To

Two mathematical objects *a* and *b* are called equal up to an equivalence relation *R*

* if *a* and *b* are related by *R*, that is,
* if *aRb* holds, that is,
* if the equivalence classes of *a* and *b* with respect to *R* are equal.

This figure of speech is mostly used in connection with expressions derived from equality, such as uniqueness or count. For example, *x* is unique up to *R* means that all objects *x* under consideration are in the same equivalence class with respect to the relation *R*. Moreover, the equivalence relation *R* is often designated rather implicitly by a generating condition or transformation. For example, the statement "an integer's prime factorization is unique up to ordering" is a concise way to say that any two lists of prime factors of a given integer are equivalent with respect to the relation *R* that relates two lists if one can be obtained by reordering (permutation) from the other. As anot ...
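The prime factorization example can be checked mechanically. In this minimal sketch the function names `prime_factors` and `same_up_to_ordering` are hypothetical; the relation *R* is implemented by comparing the lists as multisets, which is one straightforward way to test whether one list is a reordering of the other.

```python
from collections import Counter
from typing import List


def prime_factors(n: int) -> List[int]:
    """Prime factors of n (n >= 2) with multiplicity, by trial division."""
    factors: List[int] = []
    divisor = 2
    while divisor * divisor <= n:
        while n % divisor == 0:
            factors.append(divisor)
            n //= divisor
        divisor += 1
    if n > 1:
        # Whatever remains after trial division is itself prime.
        factors.append(n)
    return factors


def same_up_to_ordering(xs: List[int], ys: List[int]) -> bool:
    """True if ys is a permutation of xs, i.e. the lists are related by R."""
    return Counter(xs) == Counter(ys)
```

With these definitions, `[5, 3, 2, 2]` and `prime_factors(60)` are different lists but are related by *R*, so they count as the same factorization up to ordering, whereas `[2, 3, 5]` is not related to either because the multiplicity of 2 differs.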