|

Cryptographic Primitive

Cryptographic primitives are well-established, low-level cryptography, cryptographic algorithms that are frequently used to build cryptographic protocols for computer security systems. These routines include, but are not limited to, one-way hash functions and cipher, encryption functions. Rationale When creating cryptosystem, cryptographic systems, system designer, designers use cryptographic primitives as their most basic building blocks. Because of this, cryptographic primitives are designed to do one very specific task in a precisely defined and highly reliable fashion. Since cryptographic primitives are used as building blocks, they must be very reliable, i.e. perform according to their specification. For example, if an encryption routine claims to be only breakable with number of computer operations, and it is broken with significantly fewer than operations, then that cryptographic primitive has failed. If a cryptographic primitive is found to fail, almost every protocol t ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Cryptography

Cryptography, or cryptology (from "hidden, secret"; and ''graphein'', "to write", or ''-logy, -logia'', "study", respectively), is the practice and study of techniques for secure communication in the presence of Adversary (cryptography), adversarial behavior. More generally, cryptography is about constructing and analyzing Communication protocol, protocols that prevent third parties or the public from reading private messages. Modern cryptography exists at the intersection of the disciplines of mathematics, computer science, information security, electrical engineering, digital signal processing, physics, and others. Core concepts related to information security (confidentiality, data confidentiality, data integrity, authentication, and non-repudiation) are also central to cryptography. Practical applications of cryptography include electronic commerce, Smart card#EMV, chip-based payment cards, digital currencies, password, computer passwords, and military communications. ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Software Bug

A software bug is a design defect ( bug) in computer software. A computer program with many or serious bugs may be described as ''buggy''. The effects of a software bug range from minor (such as a misspelled word in the user interface) to severe (such as frequent crashing). In 2002, a study commissioned by the US Department of Commerce's National Institute of Standards and Technology concluded that "software bugs, or errors, are so prevalent and so detrimental that they cost the US economy an estimated $59 billion annually, or about 0.6 percent of the gross domestic product". Since the 1950s, some computer systems have been designed to detect or auto-correct various software errors during operations. History Terminology ''Mistake metamorphism'' (from Greek ''meta'' = "change", ''morph'' = "form") refers to the evolution of a defect in the final stage of software deployment. Transformation of a ''mistake'' committed by an analyst in the early stages of the softw ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

RSA (cryptosystem)

The RSA (Rivest–Shamir–Adleman) cryptosystem is a public-key cryptosystem, one of the oldest widely used for secure data transmission. The initialism "RSA" comes from the surnames of Ron Rivest, Adi Shamir and Leonard Adleman, who publicly described the algorithm in 1977. An equivalent system was developed secretly in 1973 at Government Communications Headquarters (GCHQ), the British signals intelligence agency, by the English mathematician Clifford Cocks. That system was declassified in 1997. In a public-key cryptosystem, the encryption key is public and distinct from the decryption key, which is kept secret (private). An RSA user creates and publishes a public key based on two large prime numbers, along with an auxiliary value. The prime numbers are kept secret. Messages can be encrypted by anyone via the public key, but can only be decrypted by someone who knows the private key. The security of RSA relies on the practical difficulty of factoring the product o ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Public-key Cryptography

Public-key cryptography, or asymmetric cryptography, is the field of cryptographic systems that use pairs of related keys. Each key pair consists of a public key and a corresponding private key. Key pairs are generated with cryptographic algorithms based on mathematical problems termed one-way functions. Security of public-key cryptography depends on keeping the private key secret; the public key can be openly distributed without compromising security. There are many kinds of public-key cryptosystems, with different security goals, including digital signature, Diffie–Hellman key exchange, Key encapsulation mechanism, public-key key encapsulation, and public-key encryption. Public key algorithms are fundamental security primitives in modern cryptosystems, including applications and protocols that offer assurance of the confidentiality and authenticity of electronic communications and data storage. They underpin numerous Internet standards, such as Transport Layer Security, T ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Advanced Encryption Standard

The Advanced Encryption Standard (AES), also known by its original name Rijndael (), is a specification for the encryption of electronic data established by the U.S. National Institute of Standards and Technology (NIST) in 2001. AES is a variant of the Rijndael block cipher developed by two Belgium, Belgian cryptographers, Joan Daemen and Vincent Rijmen, who submitted a proposal to NIST during the Advanced Encryption Standard process, AES selection process. Rijndael is a family of ciphers with different key size, key and Block size (cryptography), block sizes. For AES, NIST selected three members of the Rijndael family, each with a block size of 128 bits, but three different key lengths: 128, 192 and 256 bits. AES has been adopted by the Federal government of the United States, U.S. government. It supersedes the Data Encryption Standard (DES), which was published in 1977. The algorithm described by AES is a symmetric-key algorithm, meaning the same key is used for both encrypting ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

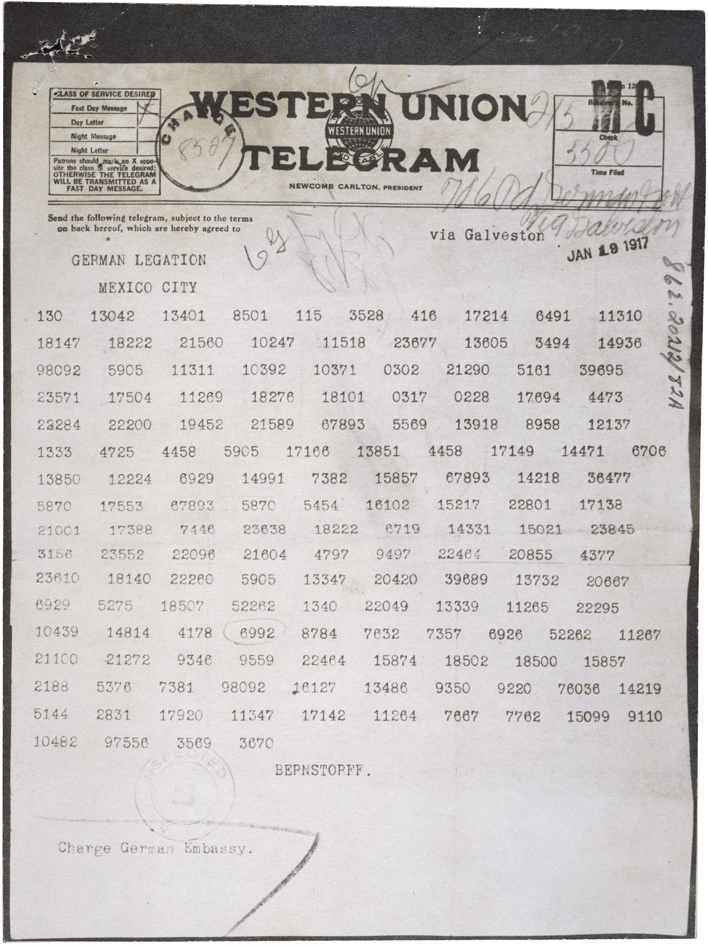

Ciphertext

In cryptography, ciphertext or cyphertext is the result of encryption performed on plaintext using an algorithm, called a cipher. Ciphertext is also known as encrypted or encoded information because it contains a form of the original plaintext that is unreadable by a human or computer without the proper cipher to decrypt it. This process prevents the loss of sensitive information via hacking. Decryption, the inverse of encryption, is the process of turning ciphertext into readable plaintext. Ciphertext is not to be confused with codetext because the latter is a result of a code, not a cipher. Conceptual underpinnings Let m\! be the plaintext message that Alice wants to secretly transmit to Bob and let E_k\! be the encryption cipher, where _k\! is a secret key, cryptographic key. Alice must first transform the plaintext into ciphertext, c\!, in order to securely send the message to Bob, as follows: : c = E_k(m). \! In a symmetric-key system, Bob knows Alice's encryption key. ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Private Key Cryptography

Symmetric-key algorithms are algorithms for cryptography that use the same cryptographic keys for both the encryption of plaintext and the decryption of ciphertext. The keys may be identical, or there may be a simple transformation to go between the two keys. The keys, in practice, represent a shared secret between two or more parties that can be used to maintain a private information link. The requirement that both parties have access to the secret key is one of the main drawbacks of symmetric-key encryption, in comparison to public-key encryption (also known as asymmetric-key encryption). However, symmetric-key encryption algorithms are usually better for bulk encryption. With exception of the one-time pad they have a smaller key size, which means less storage space and faster transmission. Due to this, asymmetric-key encryption is often used to exchange the secret key for symmetric-key encryption. Types Symmetric-key encryption can use either stream ciphers or block ciphers. ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

SHA-256

SHA-2 (Secure Hash Algorithm 2) is a set of cryptographic hash functions designed by the United States National Security Agency (NSA) and first published in 2001. They are built using the Merkle–Damgård construction, from a one-way compression function itself built using the Davies–Meyer structure from a specialized block cipher. SHA-2 includes significant changes from its predecessor, SHA-1. The SHA-2 family consists of six hash functions with digests (hash values) that are 224, 256, 384 or 512 bits: SHA-224, SHA-256, SHA-384, SHA-512, SHA-512/224, SHA-512/256. SHA-256 and SHA-512 are hash functions whose digests are eight 32-bit and 64-bit words, respectively. They use different shift amounts and additive constants, but their structures are otherwise virtually identical, differing only in the number of rounds. SHA-224 and SHA-384 are truncated versions of SHA-256 and SHA-512 respectively, computed with different initial values. SHA-512/224 and SHA-512/256 are also trunc ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

One-way Compression Function

In cryptography, a one-way compression function is a function that transforms two fixed-length inputs into a fixed-length output.Handbook of Applied Cryptography by Alfred J. Menezes, Paul C. van Oorschot, Scott A. Vanstone. Fifth Printing (August 2001) page 328. The transformation is one-way function, "one-way", meaning that it is difficult given a particular output to compute inputs which compress to that output. One-way compression functions are not related to conventional data compression algorithms, which instead can be inverted exactly (lossless compression) or approximately (lossy compression) to the original data. One-way compression functions are for instance used in the Merkle–Damgård construction inside cryptographic hash functions. One-way compression functions are often built from block ciphers. Some methods to turn any normal block cipher into a one-way compression function are Davies–Meyer, Matyas–Meyer–Oseas, Miyaguchi–Preneel (single-block-length compr ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

One-way Hash Function

A cryptographic hash function (CHF) is a hash algorithm (a map of an arbitrary binary string to a binary string with a fixed size of n bits) that has special properties desirable for a cryptographic application: * the probability of a particular n-bit output result (hash value) for a random input string ("message") is 2^ (as for any good hash), so the hash value can be used as a representative of the message; * finding an input string that matches a given hash value (a ''pre-image'') is infeasible, ''assuming all input strings are equally likely.'' The ''resistance'' to such search is quantified as security strength: a cryptographic hash with n bits of hash value is expected to have a ''preimage resistance'' strength of n bits, unless the space of possible input values is significantly smaller than 2^ (a practical example can be found in ); * a ''second preimage'' resistance strength, with the same expectations, refers to a similar problem of finding a second message that mat ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Vulnerability (computing)

Vulnerabilities are flaws or weaknesses in a system's design, implementation, or management that can be exploited by a malicious actor to compromise its security. Despite a system administrator's best efforts to achieve complete correctness, virtually all hardware and software contain bugs where the system does not behave as expected. If the bug could enable an attacker to compromise the confidentiality, integrity, or availability of system resources, it can be considered a vulnerability. Insecure software development practices as well as design factors such as complexity can increase the burden of vulnerabilities. Vulnerability management is a process that includes identifying systems and prioritizing which are most important, scanning for vulnerabilities, and taking action to secure the system. Vulnerability management typically is a combination of remediation, mitigation, and acceptance. Vulnerabilities can be scored for severity according to the Common Vulnerability S ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |