
Cray T3E

The Cray T3E was Cray Research's second-generation massively parallel supercomputer architecture, launched in late November 1995. The first T3E was installed at the Pittsburgh Supercomputing Center in 1996. Like the previous Cray T3D, it was a fully distributed memory machine using a 3D torus topology interconnection network. The T3E initially used the DEC Alpha 21164 (EV5) microprocessor and was designed to scale from 8 to 2,176 ''Processing Elements'' (PEs). Each PE had between 64 MB and 2 GB of DRAM and a 6-way interconnect router with a payload bandwidth of 480 MB/s in each direction. Unlike many other MPP systems, including the T3D, the T3E was fully self-hosted and ran the UNICOS/mk distributed operating system with a ''GigaRing'' I/O subsystem integrated into the torus for network, disk and tape I/O. The original T3E (retrospectively known as the T3E-600) had a 300 MHz processor clock. Later variants, using the faster 21164A (EV56) processor, comprised the T3E-900 (4 ...
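The 3D torus with a 6-way router per PE can be illustrated with a minimal sketch. It assumes each PE is identified by an (x, y, z) coordinate and that links wrap around in every dimension; the function name `torus_neighbors` and the 4×4×4 shape are hypothetical choices for illustration, not an actual T3E configuration or routing algorithm.

```python
def torus_neighbors(coord, dims):
    """Return the six neighbors of `coord` in a 3D torus of shape `dims`.

    Assumption: every dimension has at least 3 nodes, so the six
    neighbors are distinct. Coordinates wrap around via modulo.
    """
    neighbors = []
    for axis in range(3):
        for step in (-1, 1):
            n = list(coord)
            # Wrap-around link: stepping off one face re-enters on the opposite face.
            n[axis] = (n[axis] + step) % dims[axis]
            neighbors.append(tuple(n))
    return neighbors


if __name__ == "__main__":
    # Hypothetical 4 x 4 x 4 torus; the corner node still has six neighbors.
    for node in torus_neighbors((0, 0, 0), (4, 4, 4)):
        print(node)
```

Because of the wrap-around links, a corner node such as (0, 0, 0) has the same number of neighbors as an interior node, which is what allows a uniform 6-way router on every PE.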

Processor Board Cray-2 Hg

Processor may refer to:

Computing

Hardware

* Processor (computing)
** Central processing unit (CPU), the hardware within a computer that executes a program
*** Microprocessor, a central processing unit contained on a single integrated circuit (IC)
**** Application-specific instruction set processor (ASIP), a component used in system-on-a-chip design
**** Graphics processing unit (GPU), a processor designed for doing dedicated graphics-rendering computations
**** Physics processing unit (PPU), a dedicated microprocessor designed to handle the calculations of physics
**** Digital signal processor (DSP), a specialized microprocessor designed specifically for digital signal processing
***** Image processor, a specialized DSP used for image processing in digital cameras, mobile phones or other devices
**** Neural processing unit (NPU), a class of specialized hardware accelerator or computer system designed to accelerate artificial intelligence and machine learning applications, inc ...

History Of Supercomputing

The history of supercomputing goes back to the 1960s, when a series of computers at Control Data Corporation (CDC) were designed by Seymour Cray to use innovative designs and parallelism to achieve superior computational peak performance. The CDC 6600, released in 1964, is generally considered the first supercomputer. However, some earlier computers were considered supercomputers for their day, such as the IBM NORC (1954) and, in the early 1960s, the UNIVAC LARC (1960), the IBM 7030 Stretch (1962), and the Manchester Atlas (1962), all of which were of comparable power. While the supercomputers of the 1980s used only a few processors, in the 1990s machines with thousands of processors began to appear both in the United States and in Japan, setting new computational performance records. By the end of the 20th century, massively parallel supercomputers with thousands of "off-the-shelf" processors similar to those found in personal computers were constructed and broke thro ...

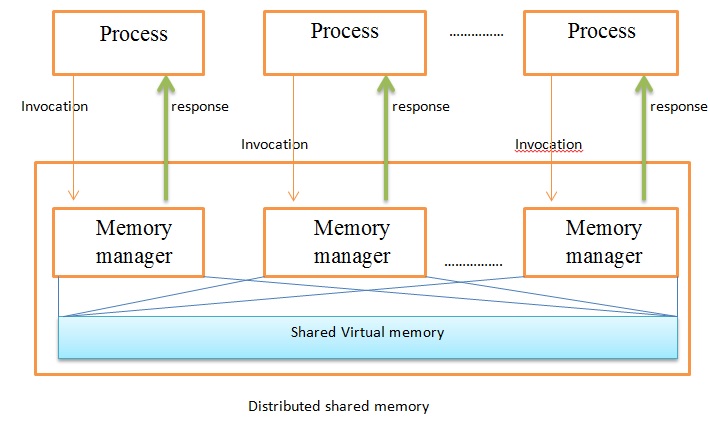

Distributed Shared Memory

In computer science, distributed shared memory (DSM) is a form of memory architecture where physically separated memories can be addressed as a single shared address space. The term "shared" does not mean that there is a single centralized memory, but that the address space is shared, i.e., the same physical address on two processors refers to the same location in memory. Distributed global address space (DGAS) is a similar term for a wide class of software and hardware implementations, in which each node of a cluster has access to shared memory in addition to each node's private (i.e., not shared) memory.

Overview

DSM can be achieved via software as well as hardware. Hardware examples include cache coherence circuits and network interface controllers. There are three ways of implementing DSM:

* Page-based approach using virtual memory
* Sha ...

MIPS Architecture

MIPS (Microprocessor without Interlocked Pipelined Stages) is a family of reduced instruction set computer (RISC) instruction set architectures (ISA) [Price, Charles (September 1995). ''MIPS IV Instruction Set'' (Revision 3.2), MIPS Technologies, Inc.] developed by MIPS Computer Systems, now MIPS Technologies, based in the United States. There are multiple versions of MIPS, including MIPS I, II, III, IV, and V, as well as five releases of MIPS32/64 (for 32- and 64-bit implementations, respectively). The early MIPS architectures were 32-bit; 64-bit versions were developed later. As of April 2017, the current version of MIPS is MIPS32/64 Release 6. MIPS32/64 primarily differs from MIPS I–V by defining the privileged kernel mode System Control Coprocessor in addition to the user mode architecture. The MIPS architecture has several optional extensions: MIPS-3D, a simple set of floating-point SIMD instructions dedicated to 3D computer graphics; MDMX (MaDMaX), a more extensive i ...

Origin 3000

The Origin 3000 and the Onyx 3000 are a family of mid-range and high-end computers developed and manufactured by SGI. The Origin 3000 is a server, and the Onyx 3000 is a visualization system. Both systems were introduced in July 2000 to succeed the Origin 2000 and the Onyx2 respectively. These systems ran the IRIX 6.5 Advanced Server Environment operating system. Entry-level variants of these systems, based on the same architecture but with a different hardware implementation, are known as the Origin 300 and Onyx 300. The Origin 3000 was succeeded by the Altix 3000 in 2004, and its last model was discontinued on 29 December 2006. The Onyx 3000 was succeeded by the Onyx4 and the Itanium-based Prism in 2004, and its last model was discontinued on 25 March 2005.

Origin 3000 Models Special

* Origin 3200C - This model was a cluster whose nodes were entire Origin 3200 systems. This model could scale to thousands of processors. The clustering technology used ...

Silicon Graphics

Silicon Graphics, Inc. (stylized as SiliconGraphics before 1999, later rebranded SGI, historically known as Silicon Graphics Computer Systems or SGCS) was an American high-performance computing manufacturer, producing computer hardware and software. It was founded in Mountain View, California, in November 1981 by James H. Clark, the computer scientist and entrepreneur perhaps best known for founding Netscape (with Marc Andreessen). Its initial market was 3D graphics computer workstations, but its products, strategies and market positions developed significantly over time. Early systems were based on the Geometry Engine that Clark and Marc Hannah had developed at Stanford University, and were derived from Clark's broader background in computer graphics. The Geometry Engine was the first very-large-scale integration (VLSI) implementation of a geometry pipeline, specialized hardware that accelerated the "inner-loop" geometric computations needed to display three-dimensional ...

Computational Science

Computational science, also known as scientific computing, technical computing or scientific computation (SC), is a division of science, and more specifically of the computer sciences, that uses advanced computing capabilities to understand and solve complex physical problems. While this typically extends into computational specializations, this field of study includes:

* Algorithms (numerical and non-numerical): mathematical models, computational models, and computer simulations developed to solve sciences (e.g., physical, biological, and social), engineering, and humanities problems
* Computer hardware that develops and optimizes the advanced system hardware, firmware, networking, and data management components needed to solve computationally demanding problems
* The computing infrastructure that supports both the science and engineering problem solving and the developmental computer and information science

In practical use, it is typically the application of compu ...

Teraflops

Floating-point operations per second (FLOPS, flops or flop/s) is a measure of computer performance, useful in fields of scientific computation that require floating-point calculations. For such cases, it is a more accurate measure than instructions per second.

Floating-point arithmetic

Floating-point arithmetic is needed for very large or very small real numbers, or computations that require a large dynamic range. Floating-point representation is similar to scientific notation, except computers use base two (with rare exceptions), rather than base ten. The encoding scheme stores the sign, the exponent (in base two for Cray and VAX, base two or ten for IEEE floating point formats, and base 16 for IBM Floating Point Architecture) and the significand (number after the radix point). While several similar formats are in use, the most common is ANSI/IEEE Std. 754-1985. This standard defines the format for 32-bit numbers called ''single precision'', a ...
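The sign, exponent and significand fields described above can be made concrete with a minimal sketch for the IEEE 754 32-bit single-precision format (1 sign bit, 8 exponent bits with a bias of 127, 23 fraction bits). The helper name `decompose_float32` is a hypothetical choice for illustration; the sketch uses only Python's standard `struct` module.

```python
import struct


def decompose_float32(x):
    """Split a number, encoded as IEEE 754 single precision, into its fields.

    Returns (sign, biased_exponent, fraction) as unsigned integers.
    """
    # Pack as a big-endian 32-bit float, then reinterpret the bytes as an integer.
    bits = struct.unpack(">I", struct.pack(">f", x))[0]
    sign = bits >> 31                # 1 bit
    exponent = (bits >> 23) & 0xFF   # 8 bits, biased by 127
    fraction = bits & 0x7FFFFF       # 23 bits after the radix point
    return sign, exponent, fraction


if __name__ == "__main__":
    # -2.5 = -1.25 * 2**1, so the biased exponent is 1 + 127 = 128
    # and the fraction encodes 0.25 as 0.25 * 2**23 = 2097152.
    print(decompose_float32(-2.5))  # (1, 128, 2097152)
```

For normalized values the leading 1 of the significand is implicit, which is why only the part after the radix point is stored in the fraction field.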

Distributed Operating System

A distributed operating system is system software over a collection of independent, networked, communicating, and physically separate computational nodes. It handles jobs that are serviced by multiple CPUs. Each individual node holds a specific software subset of the global aggregate operating system. Each subset is a composite of two distinct service provisioners. The first is a ubiquitous minimal kernel, or microkernel, that directly controls that node's hardware. The second is a higher-level collection of ''system management components'' that coordinate the node's individual and collaborative activities. These components abstract microkernel functions and support user applications. The microkernel and the management components collection work together. They support the system's goal of integrating multiple resources and processing functionality into an efficient and stable system. This seamless integration of individual nodes into a global system is referred to as ''tra ...

Cray Research

Cray Inc., a subsidiary of Hewlett Packard Enterprise, is an American supercomputer manufacturer headquartered in Seattle, Washington. It also manufactures systems for data storage and analytics. Several Cray supercomputer systems are listed in the TOP500, which ranks the most powerful supercomputers in the world. The company was founded in 1972 by computer designer Seymour Cray as Cray Research, Inc., and it continues to manufacture parts in Chippewa Falls, Wisconsin, where Cray was born and raised. Cray Research was acquired by Silicon Graphics in 1996; the modern company was formed in 2000 when the business was purchased by Tera Computer Company, which adopted the name Cray Inc. In 2019, the company was acquired by Hewlett Packard Enterprise for $1.3 billion.

History

Background: 1950–1972

In 1950, Seymour Cray began working in the computing field when he joined Engineering Research Associates (ERA) in Saint Paul, Minnesota. There, he helped to create the ERA 1103. ERA eventually became ...