Covariance

In probability theory and statistics, covariance is a measure of the joint variability of two random variables. The sign of the covariance shows the tendency in the linear relationship between the variables. If greater values of one variable mainly correspond with greater values of the other variable, and the same holds for lesser values (that is, the variables tend to show similar behavior), the covariance is positive. In the opposite case, when greater values of one variable mainly correspond to lesser values of the other (that is, the variables tend to show opposite behavior), the covariance is negative. The magnitude of the covariance is the geometric mean of the variances that are in common for the two random variables. The correlation coefficient normalizes the covariance by dividing by the geometric mean of the total variances for the two random variables. A distinction must be made between (1) the covariance of ...
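A minimal sketch of the sign behavior described above, assuming the usual unbiased sample estimator with an n − 1 denominator. The function name `sample_covariance` and the data are hypothetical, chosen only for illustration.

```python
def sample_covariance(xs, ys):
    """Unbiased sample covariance of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Average product of paired deviations from the means.
    return sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / (n - 1)

# Illustrative data (assumed): one series rises with xs, the other falls.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
rising = [2.0, 4.0, 6.0, 8.0, 10.0]
falling = [10.0, 8.0, 6.0, 4.0, 2.0]

cov_up = sample_covariance(xs, rising)     # similar behavior: positive
cov_down = sample_covariance(xs, falling)  # opposite behavior: negative
```

With these inputs the two results have equal magnitude and opposite sign, which matches the description of positive and negative covariance.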

Covariance Matrix

In probability theory and statistics, a covariance matrix (also known as auto-covariance matrix, dispersion matrix, variance matrix, or variance–covariance matrix) is a square matrix giving the covariance between each pair of elements of a given random vector. Intuitively, the covariance matrix generalizes the notion of variance to multiple dimensions. As an example, the variation in a collection of random points in two-dimensional space cannot be characterized fully by a single number, nor would the variances in the x and y directions contain all of the necessary information; a 2 × 2 matrix would be necessary to fully characterize the two-dimensional variation. Any covariance matrix is symmetric and positive semi-definite and its main diagonal contains variances (i.e., the covariance of each element with itself). The covariance matrix of a random vector $\mathbf{X}$ is typically denoted by $\operatorname{K}_{\mathbf{X}\mathbf{X}}$, $\Sigma$ or $S$. Definition: Throughout this article, boldfaced u ...
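The two-dimensional example can be sketched as follows, assuming a sample estimate with an n − 1 denominator. The helper `covariance_matrix` and the four points are hypothetical; the code only illustrates the symmetry and the variances on the main diagonal.

```python
def covariance_matrix(points):
    """Sample covariance matrix (n - 1 denominator) of equal-length vectors."""
    n = len(points)
    dim = len(points[0])
    means = [sum(p[i] for p in points) / n for i in range(dim)]
    return [
        [
            sum((p[i] - means[i]) * (p[j] - means[j]) for p in points) / (n - 1)
            for j in range(dim)
        ]
        for i in range(dim)
    ]

# Illustrative 2-D points (assumed data).
points = [(1.0, 2.0), (2.0, 1.0), (3.0, 4.0), (4.0, 3.0)]
sigma = covariance_matrix(points)

# sigma[0][0] and sigma[1][1] are the variances of x and y;
# sigma[0][1] == sigma[1][0] is their covariance.
# For a 2 x 2 symmetric matrix, non-negative diagonal entries and a
# non-negative determinant together imply positive semi-definiteness.
det = sigma[0][0] * sigma[1][1] - sigma[0][1] * sigma[1][0]
```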

Covariance Trends

In probability theory and statistics, covariance is a measure of the joint variability of two random variables. The sign of the covariance shows the tendency in the linear relationship between the variables. If greater values of one variable mainly correspond with greater values of the other variable, and the same holds for lesser values (that is, the variables tend to show similar behavior), the covariance is positive. In the opposite case, when greater values of one variable mainly correspond to lesser values of the other (that is, the variables tend to show opposite behavior), the covariance is negative. The magnitude of the covariance is the geometric mean of the variances that are in common for the two random variables. The correlation coefficient normalizes the covariance by dividing by the geometric mean of the total variances for the two random variables. A distinction must be made between (1) the covariance of ...

Covariance Geometric Visualisation

In probability theory and statistics, covariance is a measure of the joint variability of two random variables. The sign of the covariance shows the tendency in the linear relationship between the variables. If greater values of one variable mainly correspond with greater values of the other variable, and the same holds for lesser values (that is, the variables tend to show similar behavior), the covariance is positive. In the opposite case, when greater values of one variable mainly correspond to lesser values of the other (that is, the variables tend to show opposite behavior), the covariance is negative. The magnitude of the covariance is the geometric mean of the variances that are in common for the two random variables. The correlation coefficient normalizes the covariance by dividing by the geometric mean of the total variances for the two random variables. A distinction must be made between (1) the covariance of two random variables, which is a population par ...

Multivariate Normal Distribution

In probability theory and statistics, the multivariate normal distribution, multivariate Gaussian distribution, or joint normal distribution is a generalization of the one-dimensional (univariate) normal distribution to higher dimensions. One definition is that a random vector is said to be k-variate normally distributed if every linear combination of its k components has a univariate normal distribution. Its importance derives mainly from the multivariate central limit theorem. The multivariate normal distribution is often used to describe, at least approximately, any set of (possibly) correlated real-valued random variables, each of which clusters around a mean value. Definitions: Notation and parametrization: The multivariate normal distribution of a k-dimensional random vector $\mathbf{X} = (X_1,\ldots,X_k)^{\mathrm{T}}$ can be written in the following notation: $\mathbf{X} \sim \mathcal{N}(\boldsymbol\mu,\, \boldsymbol\Sigma)$, or to make it explicitly known that ...
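One common way to draw from a multivariate normal distribution, sketched here for the bivariate case, is to transform independent standard normal draws with a Cholesky factor of the covariance matrix. This technique is not taken from the text above; the function name, the seed, and the parameter values are assumptions for illustration.

```python
import math
import random

def sample_bivariate_normal(mu, sigma, rng):
    """Draw one sample from N(mu, sigma) for a 2 x 2 positive-definite sigma."""
    # Lower-triangular Cholesky factor L of sigma (L times its transpose is sigma).
    l11 = math.sqrt(sigma[0][0])
    l21 = sigma[1][0] / l11
    l22 = math.sqrt(sigma[1][1] - l21 * l21)
    # Independent standard normal draws.
    z1 = rng.gauss(0.0, 1.0)
    z2 = rng.gauss(0.0, 1.0)
    # x = mu + L z is a linear map of Gaussian inputs, hence Gaussian.
    return (mu[0] + l11 * z1, mu[1] + l21 * z1 + l22 * z2)

rng = random.Random(0)            # fixed seed so the run is repeatable
mu = (1.0, -1.0)                  # assumed mean vector
sigma = [[2.0, 1.0], [1.0, 2.0]]  # assumed covariance matrix
samples = [sample_bivariate_normal(mu, sigma, rng) for _ in range(20000)]

n = len(samples)
mean_x = sum(s[0] for s in samples) / n
mean_y = sum(s[1] for s in samples) / n
cov_xy = sum((s[0] - mean_x) * (s[1] - mean_y) for s in samples) / (n - 1)
```

The sample means and the sample covariance should land close to the chosen `mu` and to the off-diagonal entry of `sigma`, up to sampling noise.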

Variance

In probability theory and statistics, variance is the expected value of the squared deviation from the mean of a random variable. The standard deviation (SD) is obtained as the square root of the variance. Variance is a measure of dispersion, meaning it is a measure of how far a set of numbers is spread out from their average value. It is the second central moment of a distribution, and the covariance of the random variable with itself, and it is often represented by $\sigma^2$, $s^2$, $\operatorname{Var}(X)$, $V(X)$, or $\mathbb{V}(X)$. An advantage of variance as a measure of dispersion is that it is more amenable to algebraic manipulation than other measures of dispersion such as the expected absolute deviation; for example, the variance of a sum of uncorrelated random variables is equal to the sum of their variances. A disadvantage of the variance for practical applications is that, unlike the standard deviation, its units differ from the random variable, which is why the standard devi ...
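As a small illustration of the relationship between variance and standard deviation, the sketch below uses the population estimators from Python's standard `statistics` module. The data set is an assumption chosen so the numbers come out round.

```python
import math
import statistics

# Illustrative data (assumed); its mean is 5.0.
data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]

# Population variance: mean squared deviation from the mean.
variance = statistics.pvariance(data)

# Standard deviation: square root of the variance, in the units of the data.
std_dev = statistics.pstdev(data)

# The same variance computed directly from the definition.
mean = sum(data) / len(data)
by_definition = sum((x - mean) ** 2 for x in data) / len(data)
```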

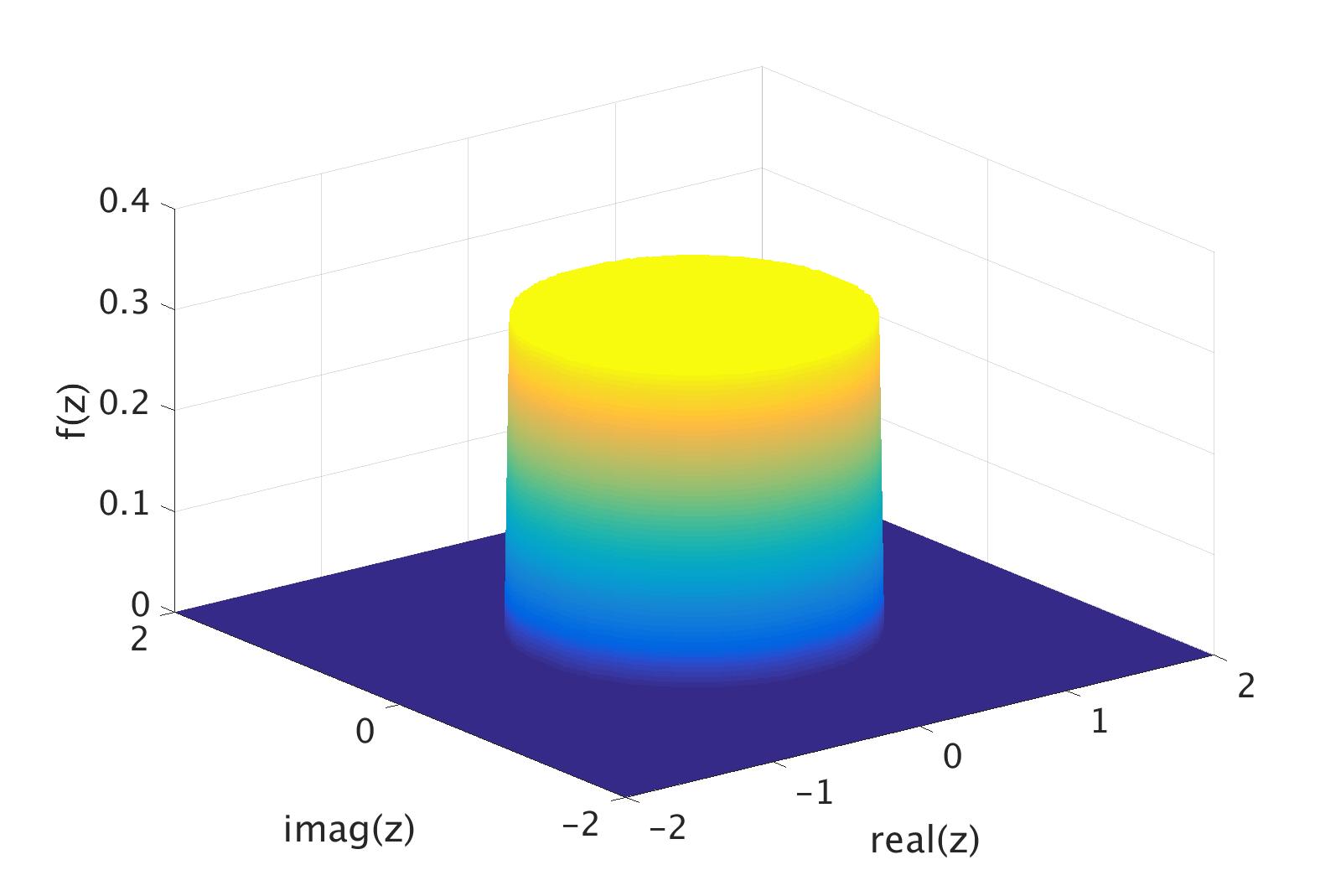

Complex Random Variable

In probability theory and statistics, complex random variables are a generalization of real-valued random variables to complex numbers, i.e. the possible values a complex random variable may take are complex numbers. Complex random variables can always be considered as pairs of real random variables: their real and imaginary parts. Therefore, the distribution of one complex random variable may be interpreted as the joint distribution of two real random variables. Some concepts of real random variables have a straightforward generalization to complex random variables, e.g. the definition of the mean of a complex random variable. Other concepts are unique to complex random variables. Applications of complex random variables are found in digital signal processing, quadrature amplitude modulation and information theory. Definition: A complex random variable Z on the probability space (\Omega,\mathcal ...
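The view of a complex random variable as a pair of real random variables can be sketched with Python's built-in `complex` type. The four observations are made-up values; the point is only that the mean of the complex values agrees with the means of the real and imaginary parts taken separately.

```python
# Illustrative observations of a complex random variable (assumed data).
observations = [1 + 2j, 3 - 1j, -2 + 4j, 2 + 3j]

# Mean computed directly on the complex values.
mean_z = sum(observations) / len(observations)

# Means of the real and imaginary parts, treated as two real variables.
mean_re = sum(z.real for z in observations) / len(observations)
mean_im = sum(z.imag for z in observations) / len(observations)

# Recombining the two real means gives the complex mean.
recombined = complex(mean_re, mean_im)
```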

Uncorrelated

In probability theory and statistics, two real-valued random variables, X, Y, are said to be uncorrelated if their covariance, $\operatorname{cov}[X,Y] = \operatorname{E}[XY] - \operatorname{E}[X]\operatorname{E}[Y]$, is zero. If two variables are uncorrelated, there is no linear relationship between them. Uncorrelated random variables have a Pearson correlation coefficient, when it exists, of zero, except in the trivial case when either variable has zero variance (is a constant). In this case the correlation is undefined. In general, uncorrelatedness is not the same as orthogonality, except in the special case where at least one of the two random variables has an expected value of 0. In this case, the covariance is the expectation of the product, and X and Y are uncorrelated if and only if $\operatorname{E}[XY] = 0$.
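A standard textbook illustration, not taken from the text above, is a variable X that is uniform on {−1, 0, 1} together with Y = X². The sketch below evaluates E[XY] − E[X]E[Y] exactly with `fractions.Fraction`; the choice of distribution is an assumption made for the example.

```python
from fractions import Fraction

# X takes the values -1, 0, 1 with equal probability (assumed example).
support = [-1, 0, 1]
p = Fraction(1, 3)

e_x = sum(p * x for x in support)            # E[X]
e_y = sum(p * x ** 2 for x in support)       # E[Y] with Y = X**2
e_xy = sum(p * x * x ** 2 for x in support)  # E[XY] = E[X**3]

# Covariance via E[XY] - E[X] E[Y].
cov_xy = e_xy - e_x * e_y
```

Here the covariance is exactly zero, so X and Y are uncorrelated, even though Y is completely determined by X. Zero covariance rules out a linear relationship only.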

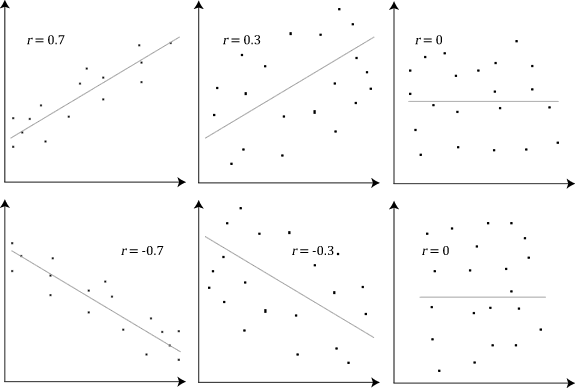

Pearson Product-moment Correlation Coefficient

In statistics, the Pearson correlation coefficient (PCC) is a correlation coefficient that measures linear correlation between two sets of data. It is the ratio between the covariance of two variables and the product of their standard deviations; thus, it is essentially a normalized measurement of the covariance, such that the result always has a value between −1 and 1. As with covariance itself, the measure can only reflect a linear correlation of variables, and ignores many other types of relationships or correlations. As a simple example, one would expect the age and height of a sample of children from a school to have a Pearson correlation coefficient significantly greater than 0, but less than 1 (as 1 would represent an unrealistically perfect correlation). Naming and history: It was developed by Karl Pearson from a related idea introduced by Francis Galton in the 1880s, and for which the mathematical formula was derived and published by Auguste Bravais in 1844. The naming ...
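The ratio described above can be sketched directly: covariance divided by the product of the standard deviations. The function name `pearson_r` and the small data set are hypothetical; the common 1/(n − 1) factors cancel, so the code works with raw sums of deviations.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation: covariance over the product of standard deviations."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)

# Illustrative data (assumed): a noisy but mostly increasing relationship.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.0, 4.0, 5.0, 4.0, 5.0]
r = pearson_r(xs, ys)

# A perfectly linear relationship gives a coefficient of 1.
r_perfect = pearson_r(xs, [2.0 * x + 1.0 for x in xs])
```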

Linear Relationship

In statistics, correlation or dependence is any statistical relationship, whether causal or not, between two random variables or bivariate data. Although in the broadest sense, "correlation" may indicate any type of association, in statistics it usually refers to the degree to which a pair of variables are linearly related. Familiar examples of dependent phenomena include the correlation between the height of parents and their offspring, and the correlation between the price of a good and the quantity the consumers are willing to purchase, as it is depicted in the demand curve. Correlations are useful because they can indicate a predictive relationship that can be exploited in practice. For example, an electrical utility may produce less power on a mild day based on the correlation between electricity demand and weather. In this example, there is a causal relationship, because extreme weather causes people to use more electricity for heating or cooling. However, in gene ...

Correlation

In statistics, correlation or dependence is any statistical relationship, whether causal or not, between two random variables or bivariate data. Although in the broadest sense, "correlation" may indicate any type of association, in statistics it usually refers to the degree to which a pair of variables are linearly related. Familiar examples of dependent phenomena include the correlation between the height of parents and their offspring, and the correlation between the price of a good and the quantity the consumers are willing to purchase, as it is depicted in the demand curve. Correlations are useful because they can indicate a predictive relationship that can be exploited in practice. For example, an electrical utility may produce less power on a mild day based on the correlation between electricity demand and weather. In this example, there is a causal relationship, because extreme weather causes people to use more electricity for heating or cooling. However, in g ...

Second Moment

In mathematics, the moments of a function are certain quantitative measures related to the shape of the function's graph. If the function represents mass density, then the zeroth moment is the total mass, the first moment (normalized by total mass) is the center of mass, and the second moment is the moment of inertia. If the function is a probability distribution, then the first moment is the expected value, the second central moment is the variance, the third standardized moment is the skewness, and the fourth standardized moment is the kurtosis. For a distribution of mass or probability on a bounded interval, the collection of all the moments (of all orders, from 0 to ∞) uniquely determines the distribution (Hausdorff moment problem). The same is not true on unbounded intervals (Hamburger moment problem). In the mid-nineteenth century, Pafnuty Chebyshev became the first person to think systematically in terms of the moments of random variables. Significance of the moments: The ...
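The four moments named above can be computed for a finite data set as a rough sketch, treating the data as an empirical distribution with equal weights. The helper `central_moment` and the data are assumptions for illustration; the kurtosis here is the plain fourth standardized moment, without subtracting 3.

```python
def central_moment(data, k):
    """k-th central moment of the empirical distribution of data."""
    mean = sum(data) / len(data)
    return sum((x - mean) ** k for x in data) / len(data)

# Illustrative, symmetric data set (assumed).
data = [1.0, 2.0, 3.0, 4.0, 5.0]

mean = sum(data) / len(data)                         # first moment
variance = central_moment(data, 2)                   # second central moment
skewness = central_moment(data, 3) / variance ** 1.5 # third standardized moment
kurtosis = central_moment(data, 4) / variance ** 2   # fourth standardized moment
```

Because the data are symmetric about their mean, the third central moment vanishes and the skewness is zero.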