
ChatGPT Plus

ChatGPT is a generative artificial intelligence chatbot developed by OpenAI and released on November 30, 2022. It uses large language models (LLMs) such as GPT-4o as well as other multimodal models to create human-like responses in text, speech, and images. It has access to features such as searching the web, using apps, and running programs. It is credited with accelerating the AI boom, an ongoing period of rapid investment in and public attention to the field of artificial intelligence (AI). Some observers have raised concern about the potential of ChatGPT and similar programs to displace human intelligence, enable plagiarism, or fuel misinformation. ChatGPT is built on OpenAI's proprietary series of generative pre-trained transformer (GPT) models and is fine-tuned for conversational applications using a combination of supervised learning and reinforcement learning from human feedback. Successive user prompts and replies are considered as context at each stage of the co ...

Multimodal Learning

Multimodal learning is a type of deep learning that integrates and processes multiple types of data, referred to as modalities, such as text, audio, images, or video. This integration allows for a more holistic understanding of complex data, improving model performance in tasks like visual question answering, cross-modal retrieval, text-to-image generation, aesthetic ranking, and image captioning. Large multimodal models, such as Google Gemini and GPT-4o, have become increasingly popular since 2023, enabling increased versatility and a broader understanding of real-world phenomena. Motivation: Data usually comes with different modalities which carry different information. For example, it is very common to caption an image to convey the information not presented in the image itself. Similarly, sometimes it is more straightforward to use an image to describe information which may not be obvious from text. As a result, if different words appear in similar images, then these words ...

AI Prompt

Prompt engineering is the process of structuring or crafting an instruction in order to produce the best possible output from a generative artificial intelligence (AI) model. A *prompt* is natural language text describing the task that an AI should perform. A prompt for a text-to-text language model can be a query, a command, or a longer statement including context, instructions, and conversation history. Prompt engineering may involve phrasing a query, specifying a style, choice of words and grammar, providing relevant context, or describing a character for the AI to mimic. When communicating with a text-to-image or a text-to-audio model, a typical prompt is a description of a desired output such as "a high-quality photo of an astronaut riding a horse" or "Lo-fi slow BPM electro chill with organic samples". Prompting a text-to-image model may involve adding, removing, or emphasizing words to achieve a desired subject, style, layout, lighting, and aesthetic. History: In 2018, ...
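
As a rough illustration of a text-to-text prompt that combines context, instructions, and conversation history, the sketch below joins those parts into one string. The function name `build_prompt`, the labels, and the ordering are assumptions made for this example; they are not a format required by any particular model.

```python
def build_prompt(instructions, context, history, query):
    """Join the parts of a text prompt into one string, in a fixed order.

    The "Instructions:" / "Context:" labels are illustrative assumptions,
    not a standard prompt format.
    """
    lines = [f"Instructions: {instructions}", f"Context: {context}"]
    # Earlier turns of the conversation, oldest first.
    for speaker, text in history:
        lines.append(f"{speaker}: {text}")
    # The new query goes last so the model answers it.
    lines.append(f"User: {query}")
    return "\n".join(lines)


prompt = build_prompt(
    instructions="Answer in one short paragraph.",
    context="The reader is new to machine learning.",
    history=[("User", "What is a prompt?"), ("Assistant", "Text that describes a task.")],
    query="Give an example of a prompt for a text-to-image model.",
)
print(prompt)
```

Changing the wording, order, or amount of context in such a string is the kind of adjustment the summary above describes.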

Reinforcement Learning From Human Feedback

In machine learning, reinforcement learning from human feedback (RLHF) is a technique to align an intelligent agent with human preferences. It involves training a reward model to represent preferences, which can then be used to train other models through reinforcement learning. In classical reinforcement learning, an intelligent agent's goal is to learn a function that guides its behavior, called a policy. This function is iteratively updated to maximize rewards based on the agent's task performance. However, explicitly defining a reward function that accurately approximates human preferences is challenging. Therefore, RLHF seeks to train a "reward model" directly from human feedback. The reward model is first trained in a supervised manner to predict if a response to a given prompt is good (high reward) or bad (low reward) based on ranking data collected from human annotators. This model then serves a ...
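
The reward-model step can be sketched in a few lines. The example below assumes a linear reward model and a pairwise logistic loss, a common choice for ranking data that the summary above does not itself specify; the two features and all numbers are made up for illustration.

```python
import math


def reward(weights, features):
    """Linear reward model: a weighted sum of response features."""
    return sum(w * x for w, x in zip(weights, features))


def train_reward_model(pairs, n_features, lr=0.1, epochs=200):
    """Fit weights so preferred responses score higher than rejected ones.

    Each pair is (preferred_features, rejected_features). The loss is
    -log(sigmoid(r_preferred - r_rejected)), minimised by gradient descent.
    """
    weights = [0.0] * n_features
    for _ in range(epochs):
        for preferred, rejected in pairs:
            margin = reward(weights, preferred) - reward(weights, rejected)
            # d(loss)/d(margin) = -(1 - sigmoid(margin))
            grad_scale = -(1.0 - 1.0 / (1.0 + math.exp(-margin)))
            for i in range(n_features):
                weights[i] -= lr * grad_scale * (preferred[i] - rejected[i])
    return weights


# Hypothetical features per response: [helpfulness score, rudeness score].
# In each pair the first response is the one annotators ranked higher.
pairs = [
    ([0.9, 0.1], [0.2, 0.8]),
    ([0.7, 0.0], [0.6, 0.9]),
    ([0.8, 0.2], [0.1, 0.3]),
]
weights = train_reward_model(pairs, n_features=2)
print(weights)
```

After training, the model assigns a higher reward to the preferred response in each pair; a reinforcement learning step would then use such scores as the reward signal.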

Supervised Learning

In machine learning, supervised learning (SL) is a paradigm where a model is trained using input objects (e.g. a vector of predictor variables) and desired output values (also known as a *supervisory signal*), which are often human-made labels. The training process builds a function that maps new data to expected output values. An optimal scenario will allow for the algorithm to accurately determine output values for unseen instances. This requires the learning algorithm to generalize from the training data to unseen situations in a reasonable way (see inductive bias). This statistical quality of an algorithm is measured via a *generalization error*. Steps to follow: To solve a given problem of supervised learning, the following steps must be performed: 1. Determine the type of training samples. Before doing anything else, the user should decide what kind of data is to be used as a trainin ...
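
A minimal sketch of this idea, assuming a one-variable linear model fitted by ordinary least squares: the model is built from labeled training pairs, and its error on held-out pairs serves as an estimate of the generalization error. The data values are invented for the example.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


def mean_squared_error(model, xs, ys):
    """Average squared gap between predictions and labels."""
    slope, intercept = model
    return sum((slope * x + intercept - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Made-up labeled data, roughly following y = 2x + 1.
train_x, train_y = [0.0, 1.0, 2.0, 3.0], [1.1, 2.9, 5.2, 6.8]
test_x, test_y = [4.0, 5.0], [9.1, 10.9]  # unseen during training

model = fit_line(train_x, train_y)
train_error = mean_squared_error(model, train_x, train_y)
# Error on unseen data: a simple estimate of the generalization error.
test_error = mean_squared_error(model, test_x, test_y)
print(model, train_error, test_error)
```

A small gap between the training error and the held-out error suggests the fitted function generalizes to the unseen inputs in this toy setting.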

Fine-tuning (Machine Learning)

In deep learning, fine-tuning is an approach to transfer learning in which the parameters of a pre-trained neural network model are trained on new data. Fine-tuning can be done on the entire neural network, or on only a subset of its layers, in which case the layers that are not being fine-tuned are "frozen" (i.e., not changed during backpropagation). A model may also be augmented with "adapters" that consist of far fewer parameters than the original model, and fine-tuned in a parameter-efficient way by tuning the weights of the adapters and leaving the rest of the model's weights frozen. For some architectures, such as convolutional neural networks, it is common to keep the earlier layers (those closest to the input layer) frozen, as they capture lower-level features, while later layers often discern high-level features that can be more related to the task that the model is trained on. Models that are pre-trained on large, general corpora are usually fine-tuned by reusing their p ...
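
Freezing can be illustrated with a toy two-layer network in plain Python. In this sketch the "pre-trained" weights, the parameter names, and the new-task data are all hypothetical; the first layer is frozen and only the second layer is updated by gradient descent.

```python
def forward(params, x):
    """Toy network: hidden = relu(w1 * x + b1), output = w2 * hidden + b2."""
    hidden = max(0.0, params["w1"] * x + params["b1"])
    return params["w2"] * hidden + params["b2"], hidden


def fine_tune(params, frozen, data, lr=0.05, epochs=500):
    """Gradient descent on squared error, skipping parameters named in `frozen`."""
    params = dict(params)  # leave the pre-trained weights untouched
    for _ in range(epochs):
        for x, target in data:
            prediction, hidden = forward(params, x)
            error = prediction - target
            active = 1.0 if hidden > 0 else 0.0  # derivative of relu
            grads = {
                "w2": 2 * error * hidden,
                "b2": 2 * error,
                "w1": 2 * error * params["w2"] * active * x,
                "b1": 2 * error * params["w2"] * active,
            }
            for name, grad in grads.items():
                if name not in frozen:  # frozen parameters are never changed
                    params[name] -= lr * grad
    return params


# Hypothetical pre-trained weights and a new task whose targets follow y = 2x + 1.
pretrained = {"w1": 1.0, "b1": 0.0, "w2": 1.0, "b2": 0.0}
new_task = [(1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
tuned = fine_tune(pretrained, frozen={"w1", "b1"}, data=new_task)
print(tuned)
```

The frozen first layer keeps its pre-trained values while the later layer adapts to the new data, mirroring the common practice of freezing layers closest to the input.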

Generative Pre-trained Transformer

A generative pre-trained transformer (GPT) is a type of large language model (LLM) and a prominent framework for generative artificial intelligence. It is an artificial neural network that is used in natural language processing by machines. It is based on the transformer deep learning architecture, pre-trained on large data sets of unlabeled text, and able to generate novel human-like content. As of 2023, most LLMs had these characteristics and are sometimes referred to broadly as GPTs. The first GPT was introduced in 2018 by OpenAI. OpenAI has released significant GPT foundation models that have been sequentially numbered, to comprise its "GPT-*n*" series. Each of these was significantly more capable than the previous, due to increased size (number of trainable parameters) and training. The most recent of these, GPT-4o, was released in May 2024. Such models have been the basis fo ...

Misinformation

Misinformation is incorrect or misleading information. Misinformation and disinformation are not interchangeable terms: misinformation can exist with or without specific malicious intent, whereas disinformation is distinct in that the information is *deliberately* deceptive and propagated. Misinformation can include inaccurate, incomplete, misleading, or false information as well as selective or half-truths. In January 2024, the World Economic Forum identified misinformation and disinformation, propagated by both internal and external interests, to "widen societal and political divides" as the most severe global risks in the short term. The reason is that misinformation can influence people's beliefs about communities, politics, medicine, and more. Research shows that susceptibility to misinformation can be influenced by several factors, including cognitive biases, emotional responses, social dynamics, and media literacy levels. Accusations of misinformation have been used to ...

Plagiarism

Plagiarism is the representation of another person's language, thoughts, ideas, or expressions as one's own original work. The 1995 *Random House Compact Unabridged Dictionary* defines it as the "use or close imitation of the language and thoughts of another author and the representation of them as one's own original work"; the Oxford English Dictionary defines it as "the action or practice of taking someone else's work, idea, etc., and passing it off as one's own; literary theft". Although precise definitions vary depending on the institution, in many countries and cultures plagiarism is considered a violation of academic integrity and journalistic ethics, as well as of social norms around learning, teaching, research, fairness, respect, and responsibility. As such, a person or entity that is determined to have committed plagiarism is often subject to various punishments or sanctions, such as suspension, Expul ...

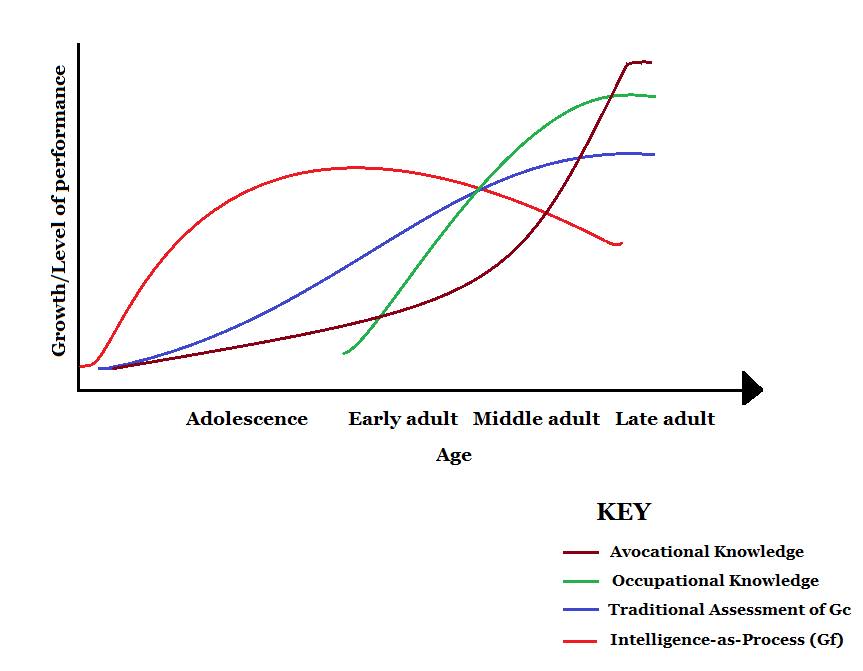

Human Intelligence

Human intelligence is the intellectual capability of humans, which is marked by complex cognitive feats and high levels of motivation and self-awareness. Using their intelligence, humans are able to learn, form concepts, understand, and apply logic and reason. Human intelligence is also thought to encompass their capacities to recognize patterns, plan, innovate, solve problems, make decisions, retain information, and use language to communicate. There are conflicting ideas about how intelligence should be conceptualized and measured. In psychometrics, human intelligence is commonly assessed by intelligence quotient (IQ) tests, although the validity of these tests is disputed. Several subcategories of intelligence, such as emotional intelligence and social intelligence, have be ...

The New York Times

*The New York Times* (*NYT*) is an American daily newspaper based in New York City. *The New York Times* covers domestic, national, and international news, and publishes opinion pieces, investigative reports, and reviews. As one of the longest-running newspapers in the United States, the *Times* serves as one of the country's newspapers of record. *The New York Times* had 9.13 million total and 8.83 million online subscribers, both by significant margins the highest numbers for any newspaper in the United States; the total also included 296,330 print subscribers, making the *Times* the second-largest newspaper by print circulation in the United States, following *The Wall Street Journal*, also based in New York City. *The New York Times* is published by the New York Times Company; since 1896, the company has been chaired by the Ochs-Sulzberger family, whose current chairman and the paper's publ ...