
Artificial Intelligence Engineering

Artificial intelligence engineering (AI engineering) is a technical discipline that focuses on the design, development, and deployment of AI systems. AI engineering involves applying engineering principles and methodologies to create scalable, efficient, and reliable AI-based solutions. It merges aspects of data engineering and software engineering to create real-world applications in diverse domains such as healthcare, finance, autonomous systems, and industrial automation.

Key components: AI engineering integrates a variety of technical domains and practices, all of which are essential to building scalable, reliable, and ethical AI systems.

Data engineering and infrastructure: Data serves as the cornerstone of AI systems, necessitating careful engineering to ensure quality, availability, and usability. AI engineers gather large, diverse datasets from multiple sources such as databases, APIs, and real-time streams. This data undergoes cleaning, normalization, and preprocessing ...

Artificial Intelligence

Artificial intelligence (AI) is intelligence (perceiving, synthesizing, and inferring information) demonstrated by machines, as opposed to intelligence displayed by animals and humans. Example tasks in which this is done include speech recognition, computer vision, translation between (natural) languages, as well as other mappings of inputs. The *Oxford English Dictionary* of Oxford University Press defines artificial intelligence as: the theory and development of computer systems able to perform tasks that normally require human intelligence, such as visual perception, speech recognition, decision-making, and translation between languages. AI applications include advanced web search engines (e.g., Google), recommendation systems (used by YouTube, Amazon and Netflix), understanding human speech (such as Siri and Alexa), self-driving cars (e.g., Tesla), automated decision-making and competing at the highest level in strategic game systems (such as chess and Go). ...

Hyperparameter Tuning

In machine learning, hyperparameter optimization or tuning is the problem of choosing a set of optimal hyperparameters for a learning algorithm. A hyperparameter is a parameter whose value is used to control the learning process. By contrast, the values of other parameters (typically node weights) are learned. The same kind of machine learning model can require different constraints, weights or learning rates to generalize different data patterns. These measures are called hyperparameters, and have to be tuned so that the model can optimally solve the machine learning problem. Hyperparameter optimization finds a tuple of hyperparameters that yields an optimal model which minimizes a predefined loss function on given independent data. The objective function takes a tuple of hyperparameters and returns the associated loss. Cross-validation is often used to estimate this generalization performance.

Approaches: grid search. The traditional way of performing hyperparameter optimiz ...
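The idea of an objective function that maps a tuple of hyperparameters to a loss can be illustrated with a minimal grid search sketch. Everything named here is an assumption made for illustration: `validation_loss` is a hypothetical stand-in for training a model and measuring its held-out loss, and the hyperparameter names and candidate values are invented.

```python
from itertools import product


def validation_loss(learning_rate, regularization):
    # Hypothetical objective: stands in for "train a model with these
    # hyperparameters, then measure its loss on independent data".
    # It is constructed so the minimum lies at (0.1, 0.01).
    return (learning_rate - 0.1) ** 2 + (regularization - 0.01) ** 2


def grid_search(objective, grid):
    """Evaluate every combination in the grid; return the best settings and loss."""
    names = list(grid)
    best_params, best_loss = None, float("inf")
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        loss = objective(**params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


# Illustrative candidate values for each hyperparameter.
grid = {
    "learning_rate": [0.001, 0.01, 0.1, 1.0],
    "regularization": [0.0, 0.01, 0.1],
}
best_params, best_loss = grid_search(validation_loss, grid)
print(best_params, best_loss)
```

In practice the objective would typically be a cross-validated loss, and the number of evaluations grows as the product of the candidate counts (12 in this sketch).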

Part of Speech

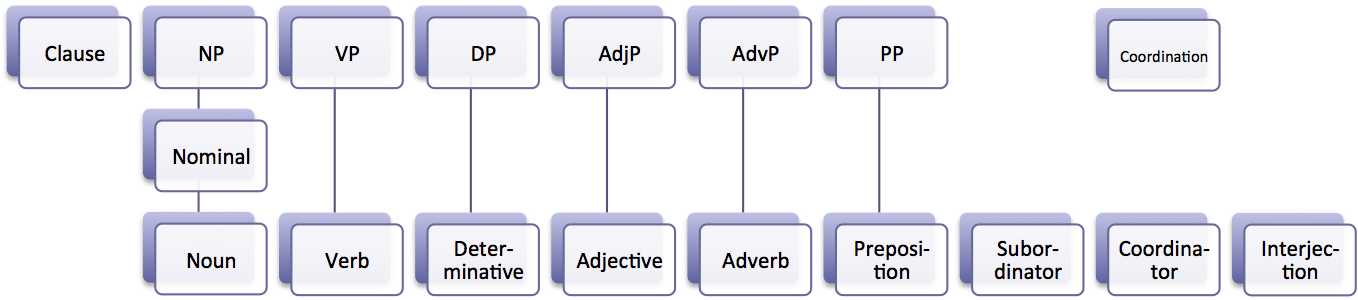

In grammar, a part of speech or part-of-speech (abbreviated as POS or PoS, also known as word class or grammatical category) is a category of words (or, more generally, of lexical items) that have similar grammatical properties. Words that are assigned to the same part of speech generally display similar syntactic behavior (they play similar roles within the grammatical structure of sentences), sometimes similar morphological behavior in that they undergo inflection for similar properties, and even similar semantic behavior. Commonly listed English parts of speech are noun, verb, adjective, adverb, pronoun, preposition, conjunction, interjection, numeral, article, and determiner. Terms other than *part of speech* include word class, lexical class, and lexical category; these appear particularly in modern linguistic classifications, which often make more precise distinctions than the traditional scheme does. Some authors restrict the term *lexical category* to refer only to a par ...

Named-entity Recognition

Named-entity recognition (NER) (also known as (named) entity identification, entity chunking, and entity extraction) is a subtask of information extraction that seeks to locate and classify named entities mentioned in unstructured text into pre-defined categories such as person names, organizations, locations, medical codes, time expressions, quantities, monetary values, percentages, etc. Most research on NER/NEE systems has been structured as taking an unannotated block of text and producing an annotated block of text that highlights the names of entities. In the source's example, a person name consisting of one token, a two-token company name, and a temporal expression are detected and classified. State-of-the-art NER systems for English produce near-human performance. For example, the best system entering MUC-7 scored an F-measure of 93.39%, while human annotators scored 97.60% and 96.95%.

Named-entity recognition platforms: Notable NER platforms include: ...

Transformer (Deep Learning Architecture)

A transformer is a deep learning architecture developed by researchers at Google and based on the multi-head attention mechanism, proposed in the 2017 paper "Attention Is All You Need". Text is converted to numerical representations called tokens, and each token is converted into a vector by lookup in a word embedding table. At each layer, each token is then contextualized within the scope of the context window with other (unmasked) tokens via a parallel multi-head attention mechanism, allowing the signal for key tokens to be amplified and that of less important tokens to be diminished. Transformers have the advantage of having no recurrent units, and therefore require less training time than earlier recurrent neural architectures (RNNs) such as long short-term memory (LSTM). Later variations have been widely adopted for training large language models (LLMs) on large (language) datasets, such as the Wikipedia corpus and Common Crawl. Transformers were first developed as an impr ...
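How attention contextualizes each token against the others can be sketched in a few lines. This is a simplified illustration, not the full architecture: it shows a single head of scaled dot-product attention with no learned projection matrices and no masking, and the toy token vectors are invented for the example.

```python
import math


def softmax(scores):
    # Subtract the maximum for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Single-head scaled dot-product attention over lists of vectors."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(dimension).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
    return outputs


# Toy "token embeddings" (assumed values); self-attention uses them as Q, K and V.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
contextualized = attention(tokens, tokens, tokens)
print(contextualized)
```

Because the weights for each query sum to one, every output vector is a blend of the value vectors, with larger weights on the tokens most similar to the query.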

Natural Language Processing

Natural language processing (NLP) is an interdisciplinary subfield of linguistics, computer science, and artificial intelligence concerned with the interactions between computers and human language, in particular how to program computers to process and analyze large amounts of natural language data. The goal is a computer capable of "understanding" the contents of documents, including the contextual nuances of the language within them. The technology can then accurately extract information and insights contained in the documents as well as categorize and organize the documents themselves. Challenges in natural language processing frequently involve speech recognition, natural-language understanding, and natural-language generation.

History: Natural language processing has its roots in the 1950s. Already in 1950, Alan Turing published an article titled "Computing Machinery and Intelligence" which proposed what is now called the Turing test as a criterion of intelligence, ...

Pruning (Artificial Neural Network)

Pruning is the practice of removing parameters (which may entail removing individual parameters, or parameters in groups such as by neurons) from an existing artificial neural network. The goal of this process is to maintain the accuracy of the network while increasing its efficiency. This can be done to reduce the computational resources required to run the neural network. A biological process of synaptic pruning takes place in the brain of mammals during development (see also Neural Darwinism).

Node (neuron) pruning: A basic algorithm for pruning is as follows:

1. Evaluate the importance of each neuron.
2. Rank the neurons according to their importance (assuming there is a clearly defined measure for "importance").
3. Remove the least i ...
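The evaluate, rank, and remove steps of node pruning can be sketched as follows. The importance measure used here (the sum of absolute outgoing weights of each neuron) is an assumption chosen for illustration, since the text does not specify one, and `prune_neurons` and the weight values are hypothetical.

```python
def prune_neurons(weights, keep):
    """Keep the `keep` most important neurons.

    weights[i] holds the outgoing weights of hidden neuron i.
    Returns the indices of the surviving neurons and their weight rows.
    """
    # Step 1: evaluate importance (assumed measure: L1 norm of outgoing weights).
    importance = [sum(abs(w) for w in row) for row in weights]
    # Step 2: rank neurons from most to least important.
    ranked = sorted(range(len(weights)), key=lambda i: importance[i], reverse=True)
    # Step 3: drop the lowest-ranked neurons, preserving the original order.
    kept = sorted(ranked[:keep])
    return kept, [weights[i] for i in kept]


# Invented weights for a layer of four hidden neurons with two outputs each.
hidden_weights = [
    [0.9, -0.8],
    [0.01, 0.02],
    [-0.5, 0.4],
    [0.0, 0.05],
]
kept, pruned = prune_neurons(hidden_weights, keep=2)
print(kept, pruned)
```

A real pruning pass would usually re-evaluate accuracy after removal and often fine-tune the remaining weights; this sketch covers only the ranking and removal.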

Recurrent Neural Network

A recurrent neural network (RNN) is a class of artificial neural networks where connections between nodes can create a cycle, allowing output from some nodes to affect subsequent input to the same nodes. This allows it to exhibit temporal dynamic behavior. Derived from feedforward neural networks, RNNs can use their internal state (memory) to process variable-length sequences of inputs. This makes them applicable to tasks such as unsegmented, connected handwriting recognition or speech recognition. Recurrent neural networks are theoretically Turing complete and can run arbitrary programs to process arbitrary sequences of inputs. The term "recurrent neural network" is used to refer to the class of networks with an infinite impulse response, whereas "convolutional neural network" refers to the class of finite impulse response. Both classes of networks exhibit temporal dynamic behavior. A finite impulse recurrent network is a directed acyclic graph that can be unrolled and replace ...
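The role of the internal state can be shown with a minimal single-unit recurrence. This is a sketch under assumed values: the scalar weights `w_in` and `w_rec`, the `tanh` nonlinearity, and the zero initial state are illustrative choices, not taken from the text.

```python
import math


def rnn_final_state(inputs, w_in=0.5, w_rec=0.8, bias=0.0):
    """Run a one-unit recurrent cell over a sequence of any length."""
    state = 0.0  # internal state (memory), assumed to start at zero
    for x in inputs:
        # The new state depends on the current input and the previous state.
        state = math.tanh(w_in * x + w_rec * state + bias)
    return state


# The same cell handles sequences of different lengths.
print(rnn_final_state([1.0]))
print(rnn_final_state([1.0, 0.0, 0.0, 1.0]))
# Order matters because earlier inputs reach the output only through the state.
print(rnn_final_state([1.0, 0.0]), rnn_final_state([0.0, 1.0]))
```

The feedback term `w_rec * state` is the cycle in the network: removing it turns the cell into a feedforward unit that sees only the latest input.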

Convolutional Neural Network

In deep learning, a convolutional neural network (CNN, or ConvNet) is a class of artificial neural network (ANN), most commonly applied to analyze visual imagery. CNNs are also known as Shift Invariant or Space Invariant Artificial Neural Networks (SIANN), based on the shared-weight architecture of the convolution kernels or filters that slide along input features and provide translation-equivariant responses known as feature maps. Counter-intuitively, most convolutional neural networks are not invariant to translation, due to the downsampling operation they apply to the input. They have applications in image and video recognition, recommender systems, image classification, image segmentation, medical image analysis, natural language processing, brain–computer interfaces, and financial time series. CNNs are regularized versions of multilayer perceptrons. Multilayer perceptrons usually mean fully connected networks, that is, each neuron in one layer is connected to ...
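Translation equivariance of a shared-weight kernel can be checked on a small one-dimensional example. This is an illustrative sketch: the signal, the kernel, and the helper `conv1d` are invented, and the operation is the sliding dot product (cross-correlation) without padding or downsampling.

```python
def conv1d(signal, kernel):
    """Slide the kernel along the signal and take a dot product at each position."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


kernel = [1, 0, -1]                  # the same weights are reused at every position
signal = [0, 0, 1, 2, 1, 0, 0, 0]
shifted = [0] + signal[:-1]          # the same pattern moved one step to the right

feature_map = conv1d(signal, kernel)
shifted_map = conv1d(shifted, kernel)

print(feature_map)   # [-1, -2, 0, 2, 1, 0]
print(shifted_map)   # [0, -1, -2, 0, 2, 1]
```

Shifting the input shifts the feature map by the same amount, which is equivariance rather than invariance: the response moves with the pattern instead of staying fixed.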

Neural Network (Machine Learning)

In machine learning, a neural network (also artificial neural network or neural net, abbreviated ANN or NN) is a model inspired by the structure and function of biological neural networks in animal brains. An ANN consists of connected units or nodes called *artificial neurons*, which loosely model the neurons in a brain. These are connected by *edges*, which model the synapses in a brain. Each artificial neuron receives signals from connected neurons, then processes them and sends a signal to other connected neurons. The "signal" is a real number, and the output of each neuron is computed by some non-linear function of the sum of its inputs, called the *activation function*. The strength of the signal at each connection is determined by a *weight*, which adjusts during the learning process. Typically, neurons are aggregated into layers. Different layers may perform different transformations on their inputs. Signals travel from the first layer (the input layer ...
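The computation performed by one artificial neuron, and by a layer of them, can be sketched as follows. The sigmoid activation, the bias term, and all numeric values are assumptions made for illustration; the text only requires some non-linear function of the weighted sum of inputs.

```python
import math


def sigmoid(z):
    # One common choice of non-linear activation function (an assumption here).
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(inputs, weights, bias=0.0):
    """Weighted sum of the incoming signals passed through the activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)


def layer_output(inputs, weight_rows, biases):
    """A layer applies several neurons to the same inputs."""
    return [neuron_output(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Invented example: two input signals feeding a hidden layer, then one output neuron.
inputs = [1.0, 2.0]
hidden = layer_output(inputs, [[0.5, -0.25], [0.1, 0.3]], [0.0, -0.2])
output = neuron_output(hidden, [1.0, -1.0])
print(hidden, output)
```

Learning would adjust the weights and biases; this sketch covers only the forward pass from the input layer to the output.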

Transfer Learning

Transfer learning (TL) is a research problem in machine learning (ML) that focuses on storing knowledge gained while solving one problem and applying it to a different but related problem. For example, knowledge gained while learning to recognize cars could apply when trying to recognize trucks. This area of research bears some relation to the long history of psychological literature on transfer of learning, although practical ties between the two fields are limited. From the practical standpoint, reusing or transferring information from previously learned tasks for the learning of new tasks has the potential to significantly improve the sample efficiency of a reinforcement learning agent.

History: In 1976, Stevo Bozinovski and Ante Fulgosi published a paper explicitly addressing transfer learning in neural network training. The paper gives a mathematical and geometrical model of transfer learning. In 1981, a report was given on the application of transfer learning in training ...