
Block Wiedemann Algorithm

The block Wiedemann algorithm for computing kernel vectors of a matrix over a finite field is a generalization by Don Coppersmith of an algorithm due to Doug Wiedemann.

Wiedemann's algorithm. Let M be an n \times n square matrix over some finite field F, let x_0 be a random vector of length n, and let x = M x_0. Consider the sequence of vectors S = \left[x, Mx, M^2x, \ldots\right] obtained by repeatedly multiplying the vector by the matrix M; let y be any other vector of length n, and consider the sequence of finite-field elements S_y = \left[y \cdot x, y \cdot Mx, y \cdot M^2x, \ldots\right]. The matrix M has a minimal polynomial, and by the Cayley–Hamilton theorem its degree (which we will call n_0) is no more than n. Say \sum_{r=0}^{n_0} p_r M^r = 0. Then \sum_{r=0}^{n_0} y \cdot (p_r (M^r x)) = 0, and, multiplying through by M^i, \sum_{r=0}^{n_0} y \cdot (p_r (M^{r+i} x)) = 0 for every i \ge 0; so the minimal polynomial of the matrix annihilates the sequence S and hence S_y. But the Berlekamp–Massey algorithm allows us to calculate relatively efficiently ...
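The annihilation argument above can be checked numerically. The following is a minimal sketch, assuming a hypothetical prime p = 101 and a hypothetical 2 × 2 matrix M; because the minimal polynomial is hard to write down by hand, it uses the characteristic polynomial instead, which is a multiple of the minimal polynomial and therefore also annihilates S_y. The helper names (`krylov_scalars`, `char_poly_2x2`, `annihilates`) are illustrative, not from the text.

```python
import random

P = 101  # hypothetical prime modulus; any prime works

def mat_vec(M, v, p=P):
    """M v over GF(p)."""
    return [sum(a * b for a, b in zip(row, v)) % p for row in M]

def dot(u, v, p=P):
    """u . v over GF(p)."""
    return sum(a * b for a, b in zip(u, v)) % p

def krylov_scalars(M, x, y, length, p=P):
    """S_y = [y.x, y.Mx, y.M^2x, ...] truncated to `length` terms."""
    seq, v = [], list(x)
    for _ in range(length):
        seq.append(dot(y, v, p))
        v = mat_vec(M, v, p)
    return seq

def char_poly_2x2(M, p=P):
    """Coefficients [p_0, p_1, p_2] of det(zI - M) = z^2 - (a+d) z + (ad - bc).
    Stand-in for the minimal polynomial (assumption): it is a multiple of it."""
    (a, b), (c, d) = M
    return [(a * d - b * c) % p, -(a + d) % p, 1]

def annihilates(coeffs, seq, p=P):
    """True if sum_r coeffs[r] * seq[r + i] == 0 (mod p) for every shift i that fits."""
    deg = len(coeffs) - 1
    return all(
        sum(c * seq[r + i] for r, c in enumerate(coeffs)) % p == 0
        for i in range(len(seq) - deg)
    )

M = [[3, 7], [5, 2]]          # hypothetical example matrix
rng = random.Random(0)
x0 = [rng.randrange(P) for _ in range(2)]
x = mat_vec(M, x0)            # x = M x_0, as in the text
y = [rng.randrange(P) for _ in range(2)]
S_y = krylov_scalars(M, x, y, 10)
print(annihilates(char_poly_2x2(M), S_y))  # True
```

Note that only matrix-vector products and dot products are needed to build S_y, which is what makes the approach attractive for large sparse matrices.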

Kernel (linear algebra)

In mathematics, the kernel of a linear map, also known as the null space or nullspace, is the linear subspace of the domain of the map which is mapped to the zero vector. That is, given a linear map L : V \to W between two vector spaces V and W, the kernel of L is the vector space of all elements \mathbf{v} of V such that L(\mathbf{v}) = \mathbf{0}, where \mathbf{0} denotes the zero vector in W, or more symbolically: \ker(L) = \left\{ \mathbf{v} \in V \mid L(\mathbf{v}) = \mathbf{0} \right\}.

Properties. The kernel of L is a linear subspace of the domain V. In the linear map L : V \to W, two elements of V have the same image in W if and only if their difference lies in the kernel of L, that is, L(\mathbf{v}_1) = L(\mathbf{v}_2) \quad \text{if and only if} \quad L(\mathbf{v}_1 - \mathbf{v}_2) = \mathbf{0}. From this, it follows that the image of L is isomorphic to the quotient of V by the ...
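For a matrix over a prime field GF(p), a kernel basis can be read off the reduced row echelon form. The sketch below assumes a hypothetical prime p = 7 and uses plain dense Gaussian elimination (the standard textbook method, not Wiedemann's); it also checks the property above that two vectors differing by a kernel element have the same image. The function names are illustrative.

```python
P = 7  # hypothetical prime modulus

def kernel_basis(A, p=P):
    """Basis of {v : A v = 0} over GF(p), via reduced row echelon form."""
    rows, cols = len(A), len(A[0])
    R = [[a % p for a in row] for row in A]
    pivots, r = [], 0
    for c in range(cols):
        # find a row at or below r with a nonzero entry in column c
        piv = next((i for i in range(r, rows) if R[i][c]), None)
        if piv is None:
            continue
        R[r], R[piv] = R[piv], R[r]
        inv = pow(R[r][c], p - 2, p)          # inverse by Fermat, p prime
        R[r] = [(v * inv) % p for v in R[r]]
        for i in range(rows):                 # clear column c in every other row
            if i != r and R[i][c]:
                f = R[i][c]
                R[i] = [(vi - f * vr) % p for vi, vr in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
        if r == rows:
            break
    basis = []
    for fc in (c for c in range(cols) if c not in pivots):
        v = [0] * cols
        v[fc] = 1                             # one free variable set to 1
        for row_idx, pc in enumerate(pivots):
            v[pc] = (-R[row_idx][fc]) % p     # solve each pivot variable
        basis.append(v)
    return basis

def mat_vec(A, v, p=P):
    return [sum(a * b for a, b in zip(row, v)) % p for row in A]

A = [[1, 2, 3], [2, 4, 6]]
K = kernel_basis(A)
print(K)  # [[5, 1, 0], [4, 0, 1]]
v1 = [1, 1, 1]
v2 = [(a + b) % P for a, b in zip(v1, K[0])]   # v2 - v1 lies in ker(A)
print(mat_vec(A, v1) == mat_vec(A, v2))        # True
```

Dense elimination costs O(n^3) operations and fills in sparse matrices, which is the cost that Wiedemann-style methods are designed to avoid.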

Vector Space

In mathematics and physics, a vector space (also called a linear space) is a set whose elements, often called vectors, may be added together and multiplied ("scaled") by numbers called scalars. Scalars are often real numbers, but can be complex numbers or, more generally, elements of any field. The operations of vector addition and scalar multiplication must satisfy certain requirements, called vector axioms. The terms real vector space and complex vector space specify whether the scalars are real or complex numbers; the standard examples are real coordinate space and complex coordinate space. Vector spaces generalize Euclidean vectors, which allow modeling of physical quantities, such as forces and velocity, that have not only a magnitude but also a direction. The concept of vector spaces is fundamental for linear algebra, together with the concept of a matrix, which allows computing in vector spaces. This provides a concise and synthetic way of manipulating and studying systems of linear equations ...

Matrix (mathematics)

In mathematics, a matrix (plural matrices) is a rectangular array or table of numbers, symbols, or expressions, arranged in rows and columns, which is used to represent a mathematical object or a property of such an object. For example, \begin{bmatrix} 1 & 9 & -13 \\ 20 & 5 & -6 \end{bmatrix} is a matrix with two rows and three columns. This is often referred to as a "two-by-three matrix", a "2 \times 3 matrix", or a matrix of dimension 2 \times 3. Without further specifications, matrices represent linear maps, and allow explicit computations in linear algebra. Therefore, the study of matrices is a large part of linear algebra, and most properties and operations of abstract linear algebra can be expressed in terms of matrices. For example, matrix multiplication represents the composition of linear maps. Not all matrices are related to linear algebra; this is the case, in particular, for incidence matrices and adjacency matrices in graph theory. This article focuses on matrices related to linear algebra, and, unless ...
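The claim that matrix multiplication represents composition of linear maps can be checked directly: applying the product AB to a vector gives the same result as applying B and then A. This sketch uses the 2 × 3 example matrix from the text together with a hypothetical 3 × 2 matrix B and vector v; `mat_mul` and `mat_vec` are illustrative helpers over plain Python integers.

```python
def mat_mul(A, B):
    """Product of an m x k and a k x n matrix, each given as a list of rows."""
    return [[sum(A[i][t] * B[t][j] for t in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def mat_vec(A, v):
    """A v, with v treated as a column vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in A]

A = [[1, 9, -13], [20, 5, -6]]    # the 2 x 3 example: a map R^3 -> R^2
B = [[1, 0], [0, 1], [1, 1]]      # hypothetical 3 x 2 matrix: a map R^2 -> R^3
v = [2, -1]                       # hypothetical input vector

print(len(A), len(A[0]))          # 2 3  (rows, columns)
print(mat_vec(mat_mul(A, B), v))  # [-20, 29]  i.e. (AB)v
print(mat_vec(A, mat_vec(B, v)))  # [-20, 29]  i.e. A(Bv), the composed map
```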

Finite Field

In mathematics, a finite field or Galois field (so named in honor of Évariste Galois) is a field that contains a finite number of elements. As with any field, a finite field is a set on which the operations of multiplication, addition, subtraction and division are defined and satisfy certain basic rules. The most common examples of finite fields are the integers mod p when p is a prime number. The order of a finite field is its number of elements, which is either a prime number or a prime power. For every prime number p and every positive integer k there are fields of order p^k, all of which are isomorphic. Finite fields are fundamental in a number of areas of mathematics and computer science, including number theory, algebraic geometry, Galois theory, finite geometry, cryptography and coding theory.

Properties. A finite field is a finite set which is a field; this means that multiplication, addition, subtraction and division (excluding division by zero) are ...
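The integers mod p form a field because every nonzero residue has a multiplicative inverse when p is prime. The sketch below computes inverses with the extended Euclidean algorithm and, as a cross-check, with Fermat's little theorem (a^{p-2} mod p). The helpers `inverse_mod` and `is_field` are illustrative names, and the moduli 7, 6 and 4 are arbitrary examples.

```python
def inverse_mod(a, n):
    """Inverse of a modulo n via the extended Euclidean algorithm,
    or None when gcd(a, n) != 1 (no inverse exists)."""
    old_r, r = a % n, n
    old_s, s = 1, 0            # invariant: old_r = old_s * a, r = s * a (mod n)
    while r:
        q = old_r // r
        old_r, r = r, old_r - q * r
        old_s, s = s, old_s - q * s
    return old_s % n if old_r == 1 else None

def is_field(n):
    """Z/nZ is a field iff every nonzero residue has an inverse."""
    return all(inverse_mod(a, n) is not None for a in range(1, n))

print(inverse_mod(3, 7))           # 5, since 3 * 5 = 15 = 1 (mod 7)
print(pow(3, 7 - 2, 7))            # 5 as well, by Fermat's little theorem
print(is_field(7), is_field(6))    # True False
print(is_field(4))                 # False
```

The last line is a reminder that the field of order p^k with k > 1 is not the integers mod p^k: for example, 2 has no inverse mod 4, so the field with four elements has to be constructed differently.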

Don Coppersmith

Don Coppersmith (born 1950) is a cryptographer and mathematician. He was involved in the design of the Data Encryption Standard block cipher at IBM, particularly the design of the S-boxes, strengthening them against differential cryptanalysis. He also improved the quantum Fourier transform discovered by Peter Shor, in the same year as Shor's discovery (1994). He has also worked on algorithms for computing discrete logarithms, the cryptanalysis of RSA, methods for rapid matrix multiplication (see Coppersmith–Winograd algorithm) and IBM's MARS cipher. He is also a co-designer of the SEAL and Scream ciphers. In 1972, Coppersmith obtained a bachelor's degree in mathematics at the Massachusetts Institute of Technology, followed by a master's degree and a Ph.D. in mathematics from Harvard University in 1975 and 1977 respectively. He was a Putnam Fellow each year from 1968 to 1971, becoming the first four-time Putnam Fellow in history. In 1998, he started Ponder This, an online monthly column on mathematical puzzles ...

Square Matrix

In mathematics, a square matrix is a matrix with the same number of rows and columns. An n-by-n matrix is known as a square matrix of order n. Any two square matrices of the same order can be added and multiplied. Square matrices are often used to represent simple linear transformations, such as shearing or rotation. For example, if R is a square matrix representing a rotation (rotation matrix) and \mathbf{v} is a column vector describing the position of a point in space, the product R\mathbf{v} yields another column vector describing the position of that point after that rotation. If \mathbf{v} is a row vector, the same transformation can be obtained using \mathbf{v}R^{\mathsf{T}}, where R^{\mathsf{T}} is the transpose of R.

Main diagonal. The entries a_{ii} (i = 1, …, n) form the main diagonal of a square matrix. They lie on the imaginary line which runs from the top left corner to the bottom right corner of the matrix. For instance, the main diagonal of a 4×4 matrix consists of the elements a_{11}, a_{22}, a_{33}, a_{44}. The d ...
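The column-vector and row-vector conventions described above can be compared directly. This sketch assumes a standard counterclockwise 2D rotation matrix and a hypothetical 4 × 4 integer matrix for the diagonal example; the helper names are illustrative.

```python
import math

def rotation(theta):
    """2 x 2 matrix rotating column vectors counterclockwise by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]

def transpose(A):
    return [list(col) for col in zip(*A)]

def mat_vec(A, v):
    """A v, with v treated as a column vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in A]

def vec_mat(v, A):
    """v A, with v treated as a row vector."""
    return [sum(v[i] * A[i][j] for i in range(len(v))) for j in range(len(A[0]))]

R = rotation(math.pi / 2)            # quarter turn
v = [1.0, 0.0]
col = mat_vec(R, v)                  # R v
row = vec_mat(v, transpose(R))       # v R^T
print([round(t, 12) for t in col])   # [0.0, 1.0]
print(all(math.isclose(a, b, abs_tol=1e-12) for a, b in zip(col, row)))  # True

# Main diagonal entries a_ii of a hypothetical 4 x 4 matrix
A4 = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
diag = [A4[i][i] for i in range(len(A4))]
print(diag)                          # [1, 6, 11, 16]
```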

Minimal Polynomial (linear algebra)

In linear algebra, the minimal polynomial \mu_A of an n \times n matrix A over a field F is the monic polynomial P over F of least degree such that P(A) = 0. Any other polynomial Q with Q(A) = 0 is a (polynomial) multiple of \mu_A.

The following three statements are equivalent:
1. \lambda \in F is a root of \mu_A,
2. \lambda is a root of the characteristic polynomial \chi_A of A,
3. \lambda is an eigenvalue of matrix A.

The multiplicity of a root \lambda of \mu_A is the largest power m such that \ker\left((A - \lambda I_n)^m\right) strictly contains \ker\left((A - \lambda I_n)^{m-1}\right). In other words, increasing the exponent up to m will give ever larger kernels, but further increasing the exponent beyond m will just give the same kernel.

If the field F is not algebraically closed, then the minimal and characteristic polynomials need not factor according to their roots (in F) alone; in other words, they may have irreducible polynomial factors of degree greater than 1. For irreducible polynomials P one has similar equivalences:
1. P divides \mu_A,
2. P divides \chi_A,
3. the kernel of P(A) has dimension at least 1,
4. the kernel of P(A) has dimension at least \deg(P).

Like the c ...
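Directly from the definition, the minimal polynomial is given by the first power A^d that is a linear combination of I, A, …, A^{d-1}. The sketch below finds that dependency by forming the powers explicitly and computing a kernel over a hypothetical prime field GF(7). This is a brute-force illustration of the definition, not Wiedemann's method (which avoids forming matrix powers), and all function names are illustrative.

```python
P = 7  # hypothetical prime; arithmetic is over GF(7)

def mat_mul(A, B, p=P):
    n = len(A)
    return [[sum(A[i][t] * B[t][j] for t in range(n)) % p for j in range(n)] for i in range(n)]

def nullspace(cols, p=P):
    """Kernel basis of the matrix whose columns are `cols`, over GF(p)."""
    m, k = len(cols[0]), len(cols)
    R = [[cols[j][i] % p for j in range(k)] for i in range(m)]
    pivots, r = [], 0
    for c in range(k):
        piv = next((i for i in range(r, m) if R[i][c]), None)
        if piv is None:
            continue
        R[r], R[piv] = R[piv], R[r]
        inv = pow(R[r][c], p - 2, p)
        R[r] = [x * inv % p for x in R[r]]
        for i in range(m):
            if i != r and R[i][c]:
                f = R[i][c]
                R[i] = [(a - f * b) % p for a, b in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
    basis = []
    for fc in (c for c in range(k) if c not in pivots):
        v = [0] * k
        v[fc] = 1
        for row, pc in enumerate(pivots):
            v[pc] = -R[row][fc] % p
        basis.append(v)
    return basis

def minimal_polynomial(A, p=P):
    """Monic coefficients [c_0, ..., c_d] (c_d = 1) of the minimal polynomial of A."""
    n = len(A)
    power = [[int(i == j) for j in range(n)] for i in range(n)]  # A^0 = I
    flats = []
    for _ in range(n + 1):                  # degree <= n by Cayley-Hamilton
        flats.append([x for row in power for x in row])
        kernel = nullspace(flats, p)
        if kernel:
            # lower powers are independent, so the kernel is 1-dimensional
            # and its last coordinate is nonzero; scale to make it monic
            c = kernel[0]
            inv = pow(c[-1], p - 2, p)
            return [x * inv % p for x in c]
        power = mat_mul(power, A, p)
    raise AssertionError("unreachable by Cayley-Hamilton")

print(minimal_polynomial([[2, 0, 0], [0, 2, 0], [0, 0, 2]]))  # [5, 1], i.e. z - 2
print(minimal_polynomial([[2, 1], [0, 2]]))                   # [4, 3, 1], i.e. (z - 2)^2
```

The first example shows a case where the minimal polynomial z - 2 has strictly smaller degree than the characteristic polynomial (z - 2)^3; the second (a Jordan block) shows the root 2 with multiplicity m = 2.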

Cayley–Hamilton Theorem

In linear algebra, the Cayley–Hamilton theorem (named after the mathematicians Arthur Cayley and William Rowan Hamilton) states that every square matrix over a commutative ring (such as the real or complex numbers or the integers) satisfies its own characteristic equation. If A is a given n \times n matrix and I_n is the n \times n identity matrix, then the characteristic polynomial of A is defined as p_A(\lambda) = \det(\lambda I_n - A), where \det is the determinant operation and \lambda is a variable for a scalar element of the base ring. Since the entries of the matrix (\lambda I_n - A) are (linear or constant) polynomials in \lambda, the determinant is also a degree-n monic polynomial in \lambda, p_A(\lambda) = \lambda^n + c_{n-1}\lambda^{n-1} + \cdots + c_1\lambda + c_0. One can create an analogous polynomial p_A(A) in the matrix A instead of the scalar variable \lambda, defined as p_A(A) = A^n + c_{n-1}A^{n-1} + \cdots + c_1A + c_0I_n. The Cayley–Hamilton theorem states that this polynomial expression is equal to the zero matrix, which is to say that ...
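The theorem can be verified for a concrete matrix by computing the coefficients c_0, …, c_{n-1} and evaluating p_A(A). The sketch below obtains the coefficients with the Faddeev–LeVerrier recurrence (a swapped-in technique, not one described in the text) using exact `Fraction` arithmetic, then evaluates p_A(A) by Horner's rule. The 3 × 3 example matrix is hypothetical.

```python
from fractions import Fraction

def mat_mul(A, B):
    n = len(A)
    return [[sum(A[i][t] * B[t][j] for t in range(n)) for j in range(n)] for i in range(n)]

def char_poly(A):
    """Coefficients [c_0, ..., c_{n-1}, 1] of det(lambda*I - A), via Faddeev-LeVerrier:
    M_k = A M_{k-1} + c_{n-k+1} I,  c_{n-k} = -tr(A M_k) / k."""
    n = len(A)
    A = [[Fraction(x) for x in row] for row in A]
    coeffs = [Fraction(0)] * n + [Fraction(1)]    # coeffs[k] multiplies lambda^k
    M = [[Fraction(0)] * n for _ in range(n)]     # M_0 = 0
    for k in range(1, n + 1):
        AM = mat_mul(A, M)
        M = [[AM[i][j] + coeffs[n - k + 1] * (i == j) for j in range(n)] for i in range(n)]
        AM = mat_mul(A, M)
        coeffs[n - k] = -sum(AM[i][i] for i in range(n)) / k
    return coeffs

def poly_at_matrix(coeffs, A):
    """Evaluate sum_k coeffs[k] * A^k by Horner's rule."""
    n = len(A)
    result = [[Fraction(0)] * n for _ in range(n)]
    for c in reversed(coeffs):
        result = mat_mul(result, A)
        result = [[result[i][j] + c * (i == j) for j in range(n)] for i in range(n)]
    return result

A = [[2, 1, 0], [1, 3, 1], [0, 1, 4]]             # hypothetical example matrix
coeffs = char_poly(A)
print([int(c) for c in coeffs])                   # [-18, 24, -9, 1]
print(all(x == 0 for row in poly_at_matrix(coeffs, A) for x in row))  # True
```

Here p_A(\lambda) = \lambda^3 - 9\lambda^2 + 24\lambda - 18, and the second line confirms that p_A(A) is the zero matrix.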

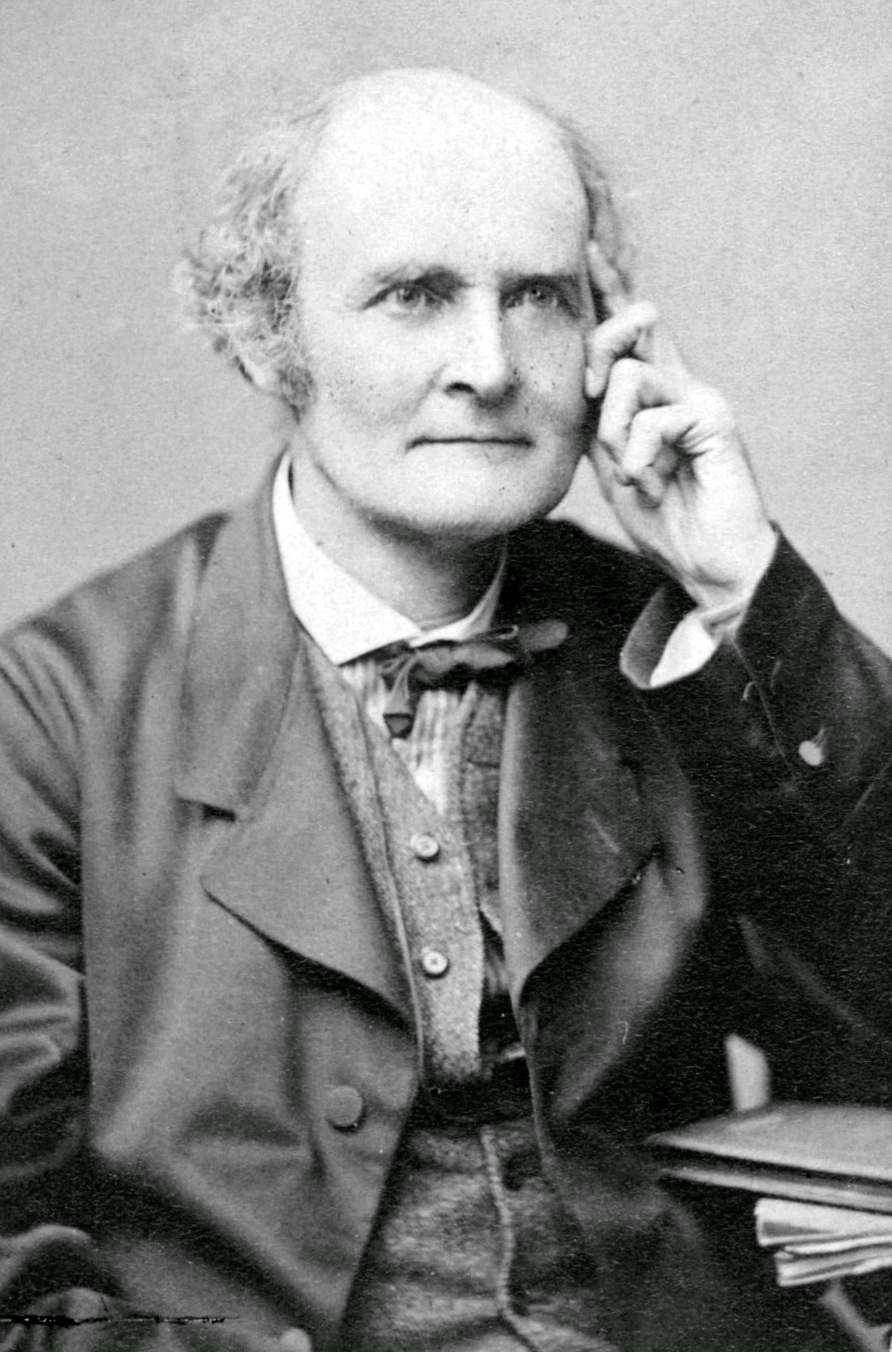

Berlekamp–Massey Algorithm

The Berlekamp–Massey algorithm is an algorithm that finds the shortest linear-feedback shift register (LFSR) for a given binary output sequence. The algorithm also finds the minimal polynomial of a linearly recurrent sequence in an arbitrary field. The field requirement means that the Berlekamp–Massey algorithm requires all non-zero elements to have a multiplicative inverse. Reeds and Sloane offer an extension to handle a ring. Elwyn Berlekamp invented an algorithm for decoding Bose–Chaudhuri–Hocquenghem (BCH) codes. James Massey recognized its application to linear-feedback shift registers and simplified the algorithm. Massey termed the algorithm the LFSR Synthesis Algorithm (Berlekamp Iterative Algorithm), but ...
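A compact version of the algorithm over a prime field GF(p) is sketched below. It assumes p is prime (so the discrepancy can be divided using a Fermat inverse) and returns the connection polynomial [1, c_1, …, c_L] of the shortest recurrence s[i] + c_1 s[i-1] + … + c_L s[i-L] = 0. The example sequences (Fibonacci numbers mod 101 and powers of 3 mod 7) are hypothetical inputs chosen because their recurrences are known.

```python
def berlekamp_massey(s, p):
    """Shortest LFSR for sequence s over GF(p), p prime.
    Returns C = [1, c_1, ..., c_L] with sum_j C[j] * s[i - j] == 0 (mod p) for i >= L."""
    n = len(s)
    C = [0] * (n + 1)      # current connection polynomial
    B = [0] * (n + 1)      # copy of C before the last length change
    C[0] = B[0] = 1
    L, m, b = 0, 0, 1      # LFSR length, shift since last change, last discrepancy
    for i in range(n):
        m += 1
        # discrepancy between s[i] and the current LFSR's prediction
        d = s[i] % p
        for j in range(1, L + 1):
            d = (d + C[j] * s[i - j]) % p
        if d == 0:
            continue
        T = C[:]
        coef = d * pow(b, p - 2, p) % p
        for j in range(m, n + 1):      # C(x) <- C(x) - (d / b) x^m B(x)
            C[j] = (C[j] - coef * B[j - m]) % p
        if 2 * L > i:
            continue
        L, B, b, m = i + 1 - L, T, d, 0
    return C[:L + 1]

fib = [1, 1, 2, 3, 5, 8, 13, 21, 34, 55]
print(berlekamp_massey(fib, 101))                 # [1, 100, 100]: s[i] = s[i-1] + s[i-2]
print(berlekamp_massey([1, 3, 2, 6, 4, 5], 7))    # [1, 4]: s[i] = 3 s[i-1]
```

In Wiedemann's setting, the input would be the scalar sequence S_y, and the returned polynomial is a candidate for (a divisor of) the minimal polynomial of M.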

Fast Fourier Transform

A fast Fourier transform (FFT) is an algorithm that computes the discrete Fourier transform (DFT) of a sequence, or its inverse (IDFT). Fourier analysis converts a signal from its original domain (often time or space) to a representation in the frequency domain and vice versa. The DFT is obtained by decomposing a sequence of values into components of different frequencies. This operation is useful in many fields, but computing it directly from the definition is often too slow to be practical. An FFT rapidly computes such transformations by factorizing the DFT matrix into a product of sparse (mostly zero) factors. As a result, it reduces the complexity of computing the DFT from O\left(N^2\right), which arises if one simply applies the definition of the DFT, to O(N \log N), where N is the data size. The difference in speed can be enormous, especially for long data sets where N may be in the thousands or millions. In the presence of round-off error, many FFT algorithms ...
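The O(N log N) factorization can be illustrated with the classic recursive radix-2 Cooley–Tukey FFT, one of many FFT variants. This sketch assumes the input length is a power of two and uses the sign convention X_k = \sum_t x_t e^{-2\pi i k t / N}; it is compared against a direct O(N^2) evaluation of the DFT definition. The test input is arbitrary.

```python
import cmath

def fft(a):
    """Recursive radix-2 Cooley-Tukey FFT; len(a) must be a power of two."""
    n = len(a)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of two")
    if n == 1:
        return [complex(a[0])]
    even, odd = fft(a[0::2]), fft(a[1::2])   # two half-size DFTs
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(-2j * cmath.pi * k / n) * odd[k]   # twiddle factor
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out

def dft(a):
    """Direct O(N^2) evaluation of the DFT definition, for comparison."""
    n = len(a)
    return [sum(a[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]

x = [1, 2, 3, 4, 0, 0, 0, 0]
print(all(abs(u - v) < 1e-9 for u, v in zip(fft(x), dft(x))))  # True
```

Each level of recursion does O(N) work on two half-size problems, which gives the O(N \log N) bound; the floating-point comparison uses a tolerance because the two methods round differently.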