
Blekko

Blekko (trademarked as blekko) was a company that provided a web search engine with the stated goal of providing better search results than those offered by Google Search, with results gathered from a set of 3 billion trusted webpages and excluding such sites as content farms. The company's site, launched to the public on November 1, 2010, used slashtags to provide results for common searches. Blekko also offered a downloadable search bar. It was acquired by IBM in March 2015, and the service was discontinued. ("Blekko: The Newest Search Engine", ''PC Magazine'', November 1, 2010. Accessed November 1, 2010.) Blekko also differentiated itself by offering richer data than its competitors. For instance, if a user accessed a domain name with ''/seo'' appended, they would be directed to a page containing the statistics of the URL. For this reason, experts cited Blekko's fit with the Big Data paradigm, since it gathers multiple data sets and presents them visually so that the user is ...

Rich Skrenta

Richard J. Skrenta Jr. (born June 6, 1967) is an American computer programmer and Silicon Valley entrepreneur who created the web search engine blekko. (Arrington, Michael (2008-01-02). "The Next Google Search Challenger: Blekko". TechCrunch, 2 January 2008. Retrieved from https://techcrunch.com/2008/01/02/the-next-google-search-challenger-blekko/.)

Early life and education

Skrenta was born in Pittsburgh on June 6, 1967. In 1982, at age 15, as a student at Mt. Lebanon High School, Skrenta wrote the Elk Cloner virus that infected Apple II computers. It is widely believed to have been one of the first large-scale self-spreading personal computer viruses ever created. In 1989, Skrenta graduated with a B.A. in computer science from Northwestern University.

Career

Between 1989 and 1991, Skrenta worked at Commodore Business Machines with Amiga Unix. In 1989, Skrenta started working on a multiplayer simulation game. In 1994, it was launched under the name ''Olympia'' a ...

Search Engine

A search engine is a software system that provides hyperlinks to web pages and other relevant information on the Web in response to a user's query. The user enters a query in a web browser or a mobile app, and the search results are typically presented as a list of hyperlinks accompanied by textual summaries and images. Users also have the option of limiting a search to specific types of results, such as images, videos, or news. For a search provider, its engine is part of a distributed computing system that can encompass many data centers throughout the world. The speed and accuracy of an engine's response to a query are based on a complex system of indexing that is continuously updated by automated web crawlers. This can include data mining the files and databases stored on web servers, although some content is not accessible to crawlers. There have been ma ...

Robots Exclusion Standard

robots.txt is the filename used for implementing the Robots Exclusion Protocol, a standard used by websites to indicate to visiting web crawlers and other web robots which portions of the website they are allowed to visit. The standard, developed in 1994, relies on voluntary compliance. Malicious bots can use the file as a directory of which pages to visit, though standards bodies discourage countering this with security through obscurity. Some archival sites ignore robots.txt. The standard was used in the 1990s to mitigate server overload. In the 2020s, websites began denying bots that collect information for generative artificial intelligence. The "robots.txt" file can be used in conjunction with sitemaps, another robot inclusion standard for websites.

History

The standard was proposed by Martijn Koster, when working for Nexor, in February 1994 on the ''www-talk'' mailing list, the main communication channel for WWW-related activities at the time. Charles Stross clai ...
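The voluntary check that a compliant crawler performs against robots.txt can be sketched with Python's standard `urllib.robotparser` module. This is a minimal illustration only: the file contents, the `ExampleBot` user agent, and the `example.com` URLs are hypothetical and not taken from any real site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, invented for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def build_parser(robots_text: str) -> RobotFileParser:
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# A well-behaved crawler asks before fetching; nothing enforces the answer.
allowed_public = parser.can_fetch("ExampleBot", "https://example.com/index.html")
allowed_private = parser.can_fetch("ExampleBot", "https://example.com/private/data.html")
```

Because compliance is voluntary, the parser only reports what the site requests; a crawler that never calls `can_fetch` is not stopped by anything in the file.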

Web Crawler

A web crawler, sometimes called a spider or spiderbot and often shortened to crawler, is an Internet bot that systematically browses the World Wide Web and that is typically operated by search engines for the purpose of Web indexing (''web spidering''). Web search engines and some other websites use Web crawling or spidering software to update their web content or indices of other sites' web content. Web crawlers copy pages for processing by a search engine, which indexes the downloaded pages so that users can search more efficiently. Crawlers consume resources on visited systems and often visit sites unprompted. Issues of schedule, load, and "politeness" come into play when large collections of pages are accessed. Mechanisms exist for public sites not wishing to be crawled to make this known to the crawling agent. For example, including a robots.txt file can request bots to index only parts of a website, or nothing at all. The number of In ...

Duplicate Content

Duplicate content is a term used in the field of search engine optimization to describe content that appears on more than one web page. The duplicate content can be substantial parts of the content within or across domains and can be either exactly duplicate or closely similar. When multiple pages contain essentially the same content, search engines such as Google and Bing can penalize or cease displaying the copying site in any relevant search results.

Types

Non-malicious

Non-malicious duplicate content may include variations of the same page, such as versions optimized for normal HTML, mobile devices, or printer-friendliness, or store items that can be shown via multiple distinct URLs. Duplicate content issues can also arise when a site is accessible under multiple subdomains, such as with or without the "www.", or where sites fail to handle the trailing slash of URLs correctly. Another common source of non-malicious duplicate content is pagination, in which content and/or corr ...
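The "www." and trailing-slash cases described above can be illustrated with a small URL normalizer. This is a sketch under stated assumptions: the function names `canonical_url` and `group_duplicates` are hypothetical, and treating the "www." and bare hosts as the same page is an assumption that only holds when the site really serves identical content on both.

```python
from urllib.parse import urlsplit, urlunsplit


def canonical_url(url: str) -> str:
    """Collapse the 'www.' prefix and trailing-slash variants of a URL."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[4:]  # assumption: www and bare host serve the same content
    path = parts.path.rstrip("/") or "/"  # keep a single "/" for the site root
    return urlunsplit((parts.scheme.lower(), host, path, parts.query, ""))


def group_duplicates(urls):
    """Map each canonical URL to the original URLs that collapse onto it."""
    groups = {}
    for url in urls:
        groups.setdefault(canonical_url(url), []).append(url)
    return groups


variants = [
    "https://www.example.com/shop/",
    "https://example.com/shop",
    "https://example.com/about",
]
groups = group_duplicates(variants)
```

Any canonical URL that maps to more than one original is a candidate for the duplicate-content issue; the sketch compares addresses only and does not inspect page bodies.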

Web Cache

A web cache (or HTTP cache) is a system for optimizing the World Wide Web. It is implemented both client-side and server-side. The caching of multimedia and other files can result in less overall delay when browsing the Web.

Parts of the system

Forward and reverse

A forward cache is a cache outside the web server's network, e.g. in the client's web browser, in an ISP, or within a corporate network. A network-aware forward cache only caches heavily accessed items. A proxy server sitting between the client and web server can evaluate HTTP headers and choose whether to store web content. A reverse cache sits in front of one or more web servers, accelerating requests from the Internet and reducing peak server load. This is usually a content delivery network (CDN) that retains copies of web content at various points throughout a network.

HTTP options

The Hypertext Transfer Protocol (HTTP) defines three basic mechanisms for controlling caches: freshness, validation, and ...
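How a proxy might evaluate HTTP headers to decide whether to store a response, and whether a stored copy is still fresh, can be sketched as follows. The helper names are hypothetical, and the logic is deliberately simplified: it looks only at the `no-store`, `private`, and `max-age` directives of `Cache-Control` and ignores everything else a real cache would consider (for example `s-maxage`, `Expires`, and heuristic freshness).

```python
def parse_cache_control(header: str) -> dict:
    """Split a Cache-Control header value into a {directive: value} mapping."""
    directives = {}
    for part in header.split(","):
        part = part.strip()
        if not part:
            continue
        name, _, value = part.partition("=")
        directives[name.strip().lower()] = value.strip().strip('"') or None
    return directives


def may_store(headers: dict) -> bool:
    """Shared-cache storage check; assumes the key is spelled 'Cache-Control'."""
    directives = parse_cache_control(headers.get("Cache-Control", ""))
    return "no-store" not in directives and "private" not in directives


def is_fresh(headers: dict, age_seconds: int) -> bool:
    """Freshness check based only on max-age."""
    directives = parse_cache_control(headers.get("Cache-Control", ""))
    max_age = directives.get("max-age")
    if max_age is None or not max_age.isdigit():
        return False  # no explicit lifetime: treated as stale in this sketch
    return age_seconds < int(max_age)


response_headers = {"Cache-Control": "public, max-age=60"}
storable = may_store(response_headers)
fresh_at_30s = is_fresh(response_headers, 30)
fresh_at_60s = is_fresh(response_headers, 60)
```

Treating a response without `max-age` as stale is a conservative choice made for this sketch; it forces a trip back to the origin server instead of guessing a lifetime.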

Big Data

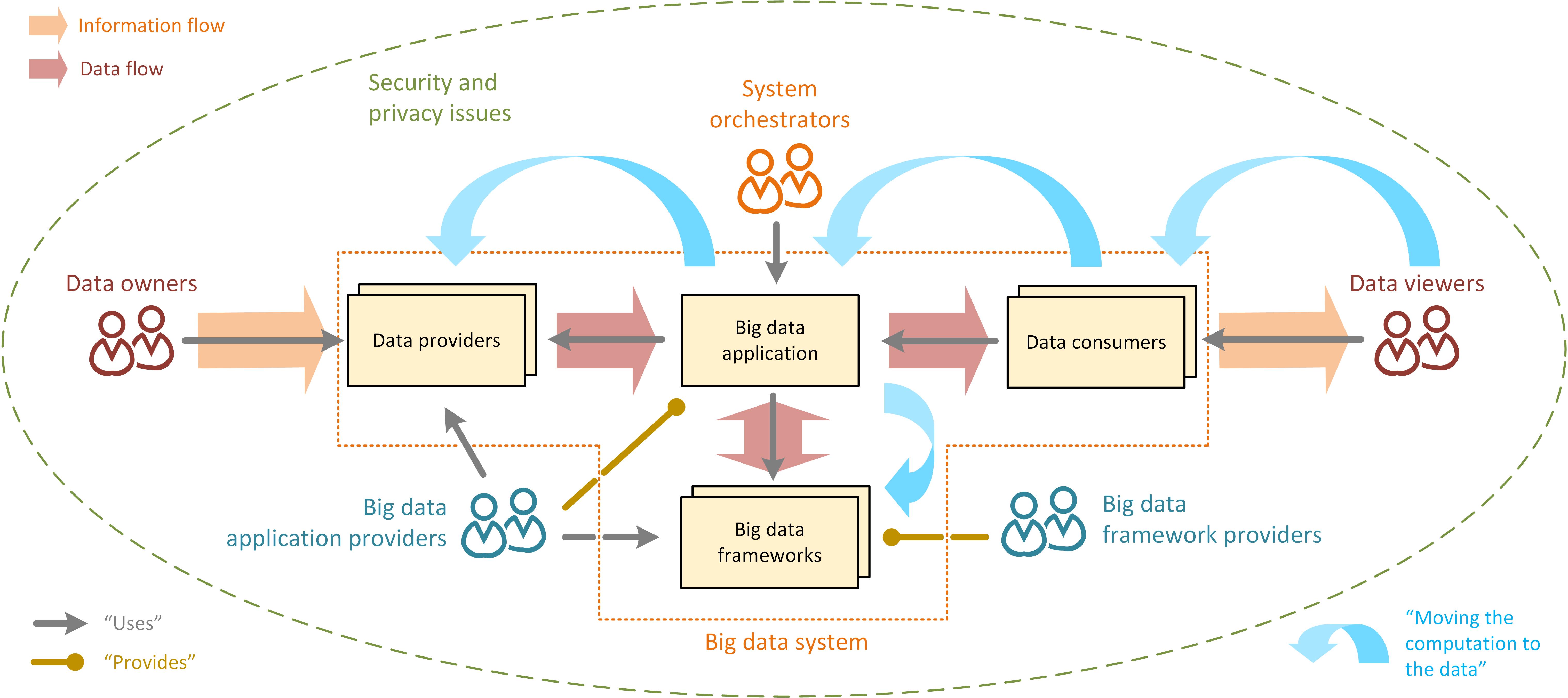

Big data primarily refers to data sets that are too large or complex to be dealt with by traditional data-processing application software. Data with many entries (rows) offer greater statistical power, while data with higher complexity (more attributes or columns) may lead to a higher false discovery rate. Big data analysis challenges include capturing data, data storage, data analysis, search, sharing, transfer, visualization, querying, updating, information privacy, and data source. Big data was originally associated with three key concepts: ''volume'', ''variety'', and ''velocity''. The analysis of big data presents challenges in sampling, and thus previously allowing for only observations and sampling. Thus a fourth concept, ''veracity,'' refers to the quality or insightfulness of the data. Without sufficient investm ...

Search Engine Optimization

Search engine optimization (SEO) is the process of improving the quality and quantity of website traffic to a website or a web page from search engines. SEO targets unpaid search traffic (usually referred to as "organic" results) rather than direct traffic, referral traffic, social media traffic, or paid traffic. Unpaid search engine traffic may originate from a variety of kinds of searches, including image search, video search, academic search, news search, and industry-specific vertical search engines. As an Internet marketing strategy, SEO considers how search engines work, the computer-programmed algorithms that dictate search engine results, what people search for, the actual search queries or keywords typed into search engines, and which search engines are preferred by a target audience. SEO is performed because a website will receive more visito ...

Watson (computer)

IBM Watson is a computer system capable of answering questions posed in natural language. It was developed as a part of IBM's DeepQA project by a research team led by principal investigator David Ferrucci. Watson was named after IBM's founder and first CEO, industrialist Thomas J. Watson. The computer system was initially developed to answer questions on the popular quiz show ''Jeopardy!'' and in 2011, the Watson computer system competed on ''Jeopardy!'' against champions Brad Rutter and Ken Jennings, winning the first-place prize of US$1 million. In February 2013, IBM announced that Watson's first commercial application would be for utilization management decisions in lung cancer treatment, at Memorial Sloan Kettering Cancer Center, New York City, in conjunction with WellPoint (now Elevance Health).

Description

Watson was created as a question answering (QA) computing system that IBM built to apply advanced natural language processing, information ...

Paywall

A paywall is a method of restricting access to content, especially news, with a purchase or a paid subscription. Beginning in the mid-2010s, newspapers started implementing paywalls on their websites as a way to increase revenue after years of decline in paid print readership and advertising revenue, partly due to the use of ad blockers. In academia, research papers are often subject to a paywall and are available via academic libraries that subscribe. Paywalls have also been used as a way of increasing the number of print subscribers; for example, some newspapers offer access to online content plus delivery of a Sunday print edition at a lower price than online access alone. Newspaper websites such as that of ''The Boston Globe'' and ''The New York Times'' use this tactic because it increases both their online revenue and their print circulation (which in turn provides more ad revenue).

History ...