
AI Explainability

Explainable AI (XAI), often overlapping with interpretable AI or explainable machine learning (XML), is a field of research within artificial intelligence (AI) that explores methods that give humans intellectual oversight over AI algorithms. The main focus is on the reasoning behind the decisions or predictions made by AI algorithms, to make them more understandable and transparent. This addresses users' need to assess safety and scrutinize automated decision-making in applications. XAI counters the "black box" tendency of machine learning, where even the AI's designers cannot explain why it arrived at a specific decision. XAI hopes to help users of AI-powered systems perform more effectively by improving their understanding of how those systems reason. XAI may be an implementation of the social right to explanation. Even if there is no such legal right or regulatory requirement, XAI can improve the user experience of a product or servic ...

Artificial Intelligence

Artificial intelligence (AI) is the capability of computational systems to perform tasks typically associated with human intelligence, such as learning, reasoning, problem-solving, perception, and decision-making. It is a field of research in computer science that develops and studies methods and software that enable machines to perceive their environment and use learning and intelligence to take actions that maximize their chances of achieving defined goals. High-profile applications of AI include advanced web search engines (e.g., Google Search); recommendation systems (used by YouTube, Amazon, and Netflix); virtual assistants (e.g., Google Assistant, Siri, and Alexa); autonomous vehicles (e.g., Waymo); generative and creative tools (e.g., ChatGPT and AI art); and superhuman play and analysis in strategy games (e.g., ...

Image Recognition

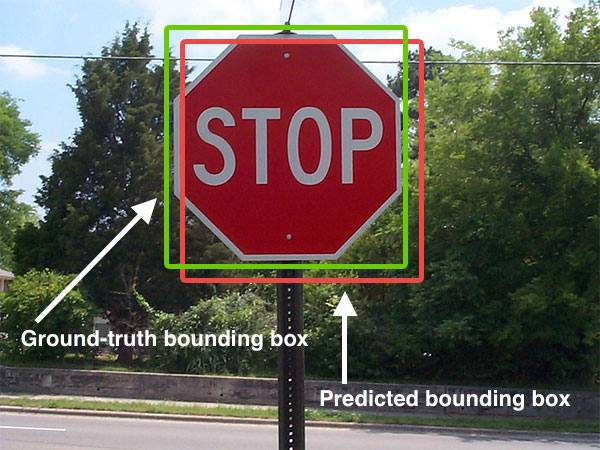

Computer vision tasks include methods for acquiring, processing, analyzing, and understanding digital images, and extraction of high-dimensional data from the real world in order to produce numerical or symbolic information, e.g. in the form of decisions. "Understanding" in this context signifies the transformation of visual images (the input to the retina) into descriptions of the world that make sense to thought processes and can elicit appropriate action. This image understanding can be seen as the disentangling of symbolic information from image data using models constructed with the aid of geometry, physics, statistics, and learning theory. The scientific discipline of computer vision is concerned with the theory behind artificial systems that extract information from images. Image data can take many forms, such as video sequences, views from multiple cameras, multi-dimensional data from a 3D scanner, 3D point clouds from LiDAR sensors, or data from medical scanning devices. The t ...

Language Model

A language model is a model of the human brain's ability to produce natural language. Language models are useful for a variety of tasks, including speech recognition, machine translation (Andreas, Vlachos, and Clark, 2013, "Semantic parsing as machine translation", Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics, Volume 2: Short Papers), natural language generation (generating more human-like text), optical character recognition, route optimization, handwriting recognition, grammar induction, and information retrieval. Large language models (LLMs), currently their most advanced form, are predominantly based on transformers trained on larger datasets (frequently using words scraped from the public internet). They have superseded recurrent neural network-based models, which had previously superseded purely statistical models such as the word n-gram language model. History: Noam Chomsky did pioneering work on lan ...

Saliency Map

In computer vision, a saliency map is an image that highlights either the region on which people's eyes focus first or the regions most relevant to a machine learning model. The goal of a saliency map is to reflect the degree of importance of a pixel to the human visual system or to an otherwise opaque ML model. For example, in this image, a person first looks at the fort and light clouds, so they should be highlighted on the saliency map. Saliency maps engineered in artificial or computer vision are typically not the same as the actual saliency map constructed by biological or natural vision. Applications: Saliency maps have applications in a variety of problems. Among the general applications based on the human eye is image and video compression: the human eye focuses only on a small region of interest in the frame, so it is not necessary to compress the entire frame with uniform quality. According to the authors, using a saliency map reduces the final size of the vid ...

The Alignment Problem

The Alignment Problem: Machine Learning and Human Values is a 2020 non-fiction book by the American writer Brian Christian. It is based on numerous interviews with experts trying to build artificial intelligence systems, particularly machine learning systems, that are aligned with human values. Summary: The book is divided into three sections: Prophecy, Agency, and Normativity. Each section covers researchers and engineers working on different challenges in the alignment of artificial intelligence with human values. Prophecy: In the first section, Christian interweaves discussions of the history of artificial intelligence research, particularly the machine learning approach of artificial neural networks such as the Perceptron and AlexNet, with examples of how AI systems can have unintended behavior. He tells the story of Julia Angwin, a journalist whose ProPublica investigation of the COMPAS algorithm, a tool for predicting recidivism among criminal defendants, led to wides ...

Multitask Learning

Multi-task learning (MTL) is a subfield of machine learning in which multiple learning tasks are solved at the same time, while exploiting commonalities and differences across tasks. This can result in improved learning efficiency and prediction accuracy for the task-specific models, when compared to training the models separately. Inherently, multi-task learning is a multi-objective optimization problem with trade-offs between different tasks. Early versions of MTL were called "hints". In a widely cited 1997 paper, Rich Caruana gave the following characterization: "Multitask Learning is an approach to inductive transfer that improves generalization by using the domain information contained in the training signals of related tasks as an inductive bias. It does this by learning tasks in parallel while using a shared representation; what is learned for each task can help other tasks be learned better." In the classification context, MTL aims to improve the performance of multiple cla ...

Shapley Value

In cooperative game theory, the Shapley value is a method (solution concept) for fairly distributing the total gains or costs among a group of players who have collaborated. For example, in a team project where each member contributed differently, the Shapley value provides a way to determine how much credit or blame each member deserves. It was named in honor of Lloyd Shapley, who introduced it in 1951 and won the Nobel Memorial Prize in Economic Sciences for it in 2012. The Shapley value determines each player's contribution by considering how much the overall outcome changes when they join each possible combination of other players, and then averaging those changes. In essence, it calculates each player's average marginal contribution across all possible coalitions. It is the only solution that satisfies four fundamental properties: efficiency, symmetry, additivity, and the dummy player (or null player) property, which are widely accepted as defining a fair distribution. This m ...
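
The averaging of marginal contributions described in this entry can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the function name `shapley_values` and the three-player "glove game" are assumptions made for the example, and the brute-force enumeration of join orders is only practical for a handful of players.

```python
from itertools import permutations
from math import factorial


def shapley_values(players, value):
    """Average each player's marginal contribution over every join order.

    `value` maps a frozenset of players to the worth of that coalition.
    Enumerating all orders costs O(n!) and is only meant for tiny games.
    """
    totals = {p: 0.0 for p in players}
    for order in permutations(players):
        coalition = frozenset()
        for p in order:
            with_p = coalition | {p}
            # Marginal contribution of p when joining the current coalition.
            totals[p] += value(with_p) - value(coalition)
            coalition = with_p
    n_orders = factorial(len(players))
    return {p: total / n_orders for p, total in totals.items()}


def glove_game(coalition):
    """Hypothetical game: A owns a left glove, B and C own right gloves.

    A coalition is worth 1 if it can form a pair, otherwise 0.
    """
    has_left = "A" in coalition
    has_right = "B" in coalition or "C" in coalition
    return 1.0 if has_left and has_right else 0.0


values = shapley_values(["A", "B", "C"], glove_game)
print(values)
```

In this toy game, A receives 2/3 and B and C receive 1/6 each. The shares sum to the worth of the full coalition, which is the efficiency property named above.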

Statistical Classification

When classification is performed by a computer, statistical methods are normally used to develop the algorithm. Often, the individual observations are analyzed into a set of quantifiable properties, known variously as explanatory variables or features. These properties may variously be categorical (e.g. "A", "B", "AB" or "O", for blood type), ordinal (e.g. "large", "medium" or "small"), integer-valued (e.g. the number of occurrences of a particular word in an email) or real-valued (e.g. a measurement of blood pressure). Other classifiers work by comparing observations to previous observations by means of a similarity or distance function. An algorithm that implements classification, especially in a concrete implementation, is known as a classifier. The term "classifier" sometimes also refers to the mathematical function, implemented by a classification algorithm, that maps input data to a category. Terminology across fields is quite varied. In statistics, where classi ...
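
As a rough illustration of a classifier that compares an observation to previous observations with a distance function, the sketch below implements a one-nearest-neighbour rule. The function name, the blood-pressure readings, and the labels are all hypothetical values chosen for the example, and Euclidean distance is only one of many possible distance functions.

```python
from math import dist


def nearest_neighbor_classify(training_data, query):
    """Return the label of the stored observation closest to `query`.

    `training_data` is a list of (features, label) pairs, where features
    are equal-length tuples of real-valued measurements.
    """
    _, label = min(training_data, key=lambda item: dist(item[0], query))
    return label


# Hypothetical (systolic, diastolic) readings with made-up labels.
observations = [
    ((118.0, 76.0), "normal"),
    ((122.0, 80.0), "normal"),
    ((158.0, 99.0), "high"),
    ((165.0, 104.0), "high"),
]

print(nearest_neighbor_classify(observations, (125.0, 82.0)))
print(nearest_neighbor_classify(observations, (160.0, 100.0)))
```

Here the function plays the role of the mapping from input data to a category: it is fully determined by the stored observations and the chosen distance.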

International Organization For Standardization

The International Organization for Standardization (ISO) is an independent, non-governmental, international standard development organization composed of representatives from the national standards organizations of member countries. Membership requirements are given in Article 3 of the ISO Statutes. ISO was founded on 23 February 1947, and it has published over 25,000 international standards covering almost all aspects of technology and manufacturing. It has over 800 technical committees (TCs) and subcommittees (SCs) to take care of standards development. The organization develops and publishes international standards in technical and nontechnical fields, including everything from manufactured products and technology to food safety, transport, IT, agriculture, and healthcare. More specialized topics like electrical and electronic engineering are instead handled by the International Electrotechnical Commission. Editors of Encyclopedia Britannica. 3 June 2021. Inte ...

Knowledge Extraction

Knowledge extraction is the creation of knowledge from structured (relational databases, XML) and unstructured (text, documents, images) sources. The resulting knowledge needs to be in a machine-readable and machine-interpretable format and must represent knowledge in a manner that facilitates inferencing. Although it is methodically similar to information extraction (NLP) and ETL (data warehouse), the main criterion is that the extraction result goes beyond the creation of structured information or the transformation into a relational schema. It requires either the reuse of existing formal knowledge (reusing identifiers or ontologies) or the generation of a schema based on the source data. The RDB2RDF W3C group is currently standardizing a language for extraction of resource description frameworks (RDF) from relational databases. Another popular example of knowledge extraction is the transformation of Wikipedia into structured data and also the mapping to existing knowl ...

Human-in-the-loop

Human-in-the-loop (HITL) is used in multiple contexts. It can be defined as a model requiring human interaction. HITL is associated with modeling and simulation (M&S) in the live, virtual, and constructive taxonomy. HITL, along with the related human-on-the-loop, is also used in relation to lethal autonomous weapons. Further, HITL is used in the context of machine learning. Machine learning: In machine learning, HITL is used in the sense of humans aiding the computer in making the correct decisions in building a model. HITL improves machine learning over random sampling by selecting the most critical data needed to refine the model. Simulation: In simulation, HITL models may conform to human factors requirements as in the case of a mockup. In this type of simulation a human is always part of the simulation and consequently influences the outcome in such a way that is difficult if not impossible to reproduce exactly. HITL also readily allows for the identification of problems and re ...