A ratio distribution (also known as a quotient distribution) is a probability distribution constructed as the distribution of the ratio of random variables having two other known distributions.

Given two (usually independent) random variables ''X'' and ''Y'', the distribution of the random variable ''Z'' that is formed as the ratio ''Z'' = ''X''/''Y'' is a ''ratio distribution''.

An example is the Cauchy distribution (also called the ''normal ratio distribution''), which comes about as the ratio of two normally distributed variables with zero mean.
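The claim can be checked informally by simulation. The following is a minimal sketch, assuming two independent standard normal variables; the helper name `sample_normal_ratio`, the seed and the sample size are illustrative choices. A standard Cauchy distribution has quartiles at -1, 0 and 1, so the empirical quartiles of the simulated ratio should land near those values.

```python
import random
import statistics


def sample_normal_ratio(n, seed=0):
    """Draw n samples of Z = X / Y with X, Y independent standard normals.

    A denominator of exactly 0.0 is astronomically unlikely with floats,
    so no special handling is included in this sketch.
    """
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) / rng.gauss(0.0, 1.0) for _ in range(n)]


samples = sample_normal_ratio(200_000)

# Quartiles of a standard Cauchy distribution are -1, 0 and 1.
q1, q2, q3 = statistics.quantiles(samples, n=4)
print("empirical quartiles:", q1, q2, q3)
```

Quartiles are used rather than the sample mean because the Cauchy distribution has no finite mean, so the sample mean does not settle down as the sample grows.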

Two other distributions often used in test statistics are also ratio distributions: the ''t''-distribution arises from a Gaussian random variable divided by an independent chi-distributed random variable (scaled by the square root of its degrees of freedom), while the ''F''-distribution originates from the ratio of two independent chi-squared distributed random variables (each divided by its degrees of freedom).
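Both constructions can be sketched in a few lines. The example below assumes the usual scaling by degrees of freedom and draws chi-squared variates as Gamma(shape = df/2, scale = 2); the function names, degrees of freedom, seed and sample size are hypothetical choices for illustration. It compares two simulated moments with the textbook values: variance ν/(ν − 2) for the ''t''-distribution and mean d₂/(d₂ − 2) for the ''F''-distribution.

```python
import math
import random
import statistics

rng = random.Random(3)


def chi_squared(df):
    """Chi-squared draw with df degrees of freedom, via Gamma(df/2, scale=2)."""
    return rng.gammavariate(df / 2.0, 2.0)


def student_t(df):
    """Standard normal divided by an independent scaled chi variate."""
    return rng.gauss(0.0, 1.0) / math.sqrt(chi_squared(df) / df)


def f_variate(d1, d2):
    """Ratio of two independent chi-squared variates, each divided by its df."""
    return (chi_squared(d1) / d1) / (chi_squared(d2) / d2)


n = 100_000
t_samples = [student_t(10) for _ in range(n)]
f_samples = [f_variate(5, 10) for _ in range(n)]

t_mean = statistics.fmean(t_samples)
t_variance = statistics.fmean((t - t_mean) ** 2 for t in t_samples)
f_mean = statistics.fmean(f_samples)

print("t(10) variance:", t_variance, "theory:", 10 / (10 - 2))
print("F(5, 10) mean:", f_mean, "theory:", 10 / (10 - 2))
```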

More general ratio distributions have been considered in the literature.

Often the ratio distributions are heavy-tailed, and it may be difficult to work with such distributions and develop an associated statistical test. A method based on the median has been suggested as a "work-around".

Algebra of random variables

The ratio is one of the operations in the algebra of random variables. Related to the ratio distribution are the product distribution, sum distribution and difference distribution. More generally, one may talk of combinations of sums, differences, products and ratios.

Many of these distributions are described in Melvin D. Springer's 1979 book ''The Algebra of Random Variables''.

The algebraic rules known from ordinary numbers do not all carry over to the algebra of random variables.

For example, if a product is ''C'' = ''AB'' and a ratio is ''D'' = ''C''/''A'', it does not necessarily follow that the distributions of ''D'' and ''B'' are the same.

Indeed, a peculiar effect is seen for the Cauchy distribution: the product and the ratio of two independent Cauchy distributions (with the same scale parameter and the location parameter set to zero) give the same distribution.

This becomes evident when regarding the Cauchy distribution as itself a ratio distribution of two Gaussian distributions of zero mean. Consider two Cauchy random variables, $C_1$ and $C_2$, each constructed from two Gaussian distributions, $C_1 = G_1/G_2$ and $C_2 = G_3/G_4$; then

: $\frac{C_1}{C_2} = \frac{G_1/G_2}{G_3/G_4} = \frac{G_1 G_4}{G_2 G_3} = \frac{G_1}{G_2} \times \frac{G_4}{G_3} = C_1 \times C_3,$

where $C_3 = G_4/G_3$. The first term is the ratio of two Cauchy distributions while the last term is the product of two such distributions.
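The equality in distribution of the ratio and the product of two independent standard Cauchy variables can be illustrated numerically. The sketch below assumes location 0 and scale 1 and draws Cauchy samples by inverse-CDF sampling; the helper name, seed and sample size are illustrative. Because the reciprocal of a standard Cauchy variable is again standard Cauchy, both the ratio and the product are symmetric about zero with upper quartile 1, so the two sets of empirical quartiles should roughly agree.

```python
import math
import random
import statistics


def standard_cauchy(rng):
    """Inverse-CDF sample of a standard Cauchy (location 0, scale 1)."""
    return math.tan(math.pi * (rng.random() - 0.5))


rng = random.Random(1)
n = 200_000
c1 = [standard_cauchy(rng) for _ in range(n)]
c2 = [standard_cauchy(rng) for _ in range(n)]

# Ratio and product of the same pair of independent Cauchy samples.
ratio = [a / b for a, b in zip(c1, c2)]
product = [a * b for a, b in zip(c1, c2)]

ratio_quartiles = statistics.quantiles(ratio, n=4)
product_quartiles = statistics.quantiles(product, n=4)

print("ratio quartiles:  ", ratio_quartiles)
print("product quartiles:", product_quartiles)
```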

Derivation

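A way of deriving the ratio distribution of $Z = X/Y$ from the joint distribution of the two other random variables ''X'', ''Y'', with joint pdf $p_{X,Y}(x,y)$, is by integration of the following form

: $p_Z(z) = \int_{-\infty}^{+\infty} |y| \, p_{X,Y}(zy, y) \, dy.$

If the two variables are independent then $p_{X,Y}(x,y) = p_X(x) \, p_Y(y)$ and this becomes

: $p_Z(z) = \int_{-\infty}^{+\infty} |y| \, p_X(zy) \, p_Y(y) \, dy.$

This may not be straightforward. By way of example take the classical problem of the ratio of two standard Gaussian samples. The joint pdf is

: $p_{X,Y}(x,y) = \frac{1}{2\pi} \exp\left(-\frac{x^2}{2}\right) \exp\left(-\frac{y^2}{2}\right).$

Defining $Z = X/Y$ we have

: $\begin{aligned} p_Z(z) &= \frac{1}{2\pi} \int_{-\infty}^{\infty} |y| \, \exp\left(-\frac{(zy)^2}{2}\right) \exp\left(-\frac{y^2}{2}\right) dy \\ &= \frac{1}{2\pi} \int_{-\infty}^{\infty} |y| \, \exp\left(-\frac{y^2 (z^2 + 1)}{2}\right) dy. \end{aligned}$

Using the known definite integral $\int_0^{\infty} x \exp\left(-c x^2\right) dx = \frac{1}{2c}$, applied to each half-line with $c = (z^2 + 1)/2$, we get

: $p_Z(z) = \frac{1}{\pi (z^2 + 1)},$

which is the Cauchy distribution, or Student's ''t'' distribution with ''n'' = 1.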
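The integral for independent variables, $p_Z(z) = \int |y| \, p_X(zy) \, p_Y(y) \, dy$, can also be evaluated numerically. The following is a rough sketch using a plain midpoint rule on a truncated interval; the function names, the interval [-10, 10] and the step count are assumptions made for illustration, not part of the derivation. For two standard Gaussians the result should agree closely with the Cauchy density $1/(\pi(z^2+1))$.

```python
import math


def normal_pdf(x):
    """Standard normal density."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def ratio_pdf(z, pdf_x=normal_pdf, pdf_y=normal_pdf,
              lo=-10.0, hi=10.0, steps=4000):
    """Midpoint-rule approximation of the integral of |y| pdf_x(z*y) pdf_y(y).

    The interval is truncated to [lo, hi]; with an even step count and a
    symmetric interval, the kink of |y| at 0 falls on a panel boundary.
    """
    h = (hi - lo) / steps
    total = 0.0
    for i in range(steps):
        y = lo + (i + 0.5) * h
        total += abs(y) * pdf_x(z * y) * pdf_y(y)
    return total * h


def cauchy_pdf(z):
    """Standard Cauchy density."""
    return 1.0 / (math.pi * (1.0 + z * z))


for z in (0.0, 0.5, 2.0):
    print(z, ratio_pdf(z), cauchy_pdf(z))
```

Passing other density functions as `pdf_x` and `pdf_y` reuses the same routine for different independent pairs, provided the truncation interval covers the bulk of the integrand.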

The Mellin transform has also been suggested for derivation of ratio distributions.

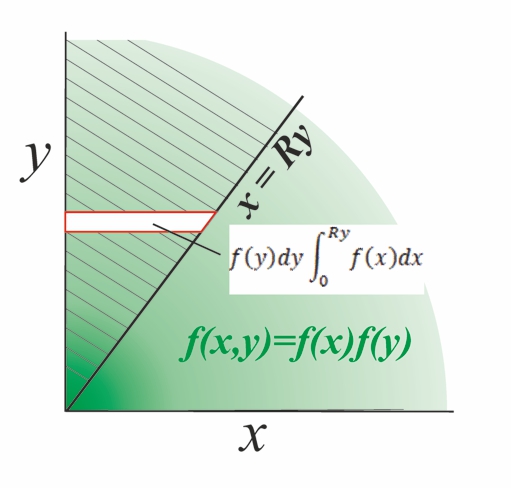

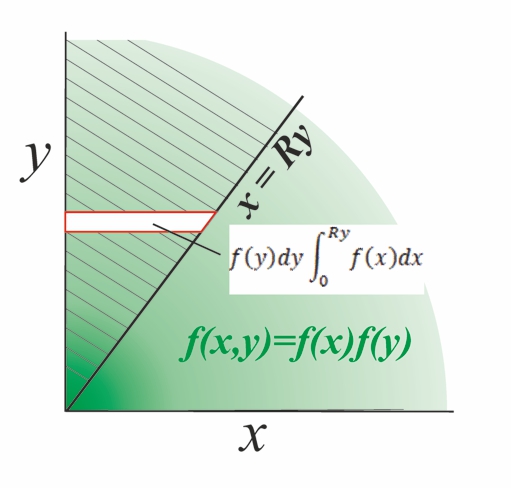

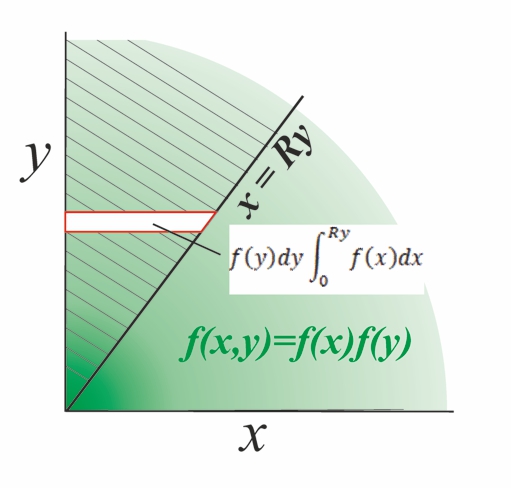

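In the case of positive independent variables, proceed as follows. The diagram shows a separable bivariate distribution $f_{x,y}(x,y) = f_x(x) f_y(y)$ which has support in the positive quadrant $x, y > 0$, and we wish to find the pdf of the ratio $R = X/Y$. The hatched volume above the line $y = x/R$ represents the cumulative distribution of the function $f_{x,y}(x,y)$ multiplied by the logical function $X/Y \le R$. The density is first integrated in horizontal strips; the horizontal strip at height ''y'' extends from ''x'' = 0 to ''x'' = ''Ry'' and has incremental probability $f_y(y) \, dy \int_0^{Ry} f_x(x) \, dx$.

Secondly, integrating the horizontal strips upward over all ''y'' yields the volume of probability above the line

: $F_R(R) = \int_0^\infty f_y(y) \left( \int_0^{Ry} f_x(x) \, dx \right) dy.$

Finally, differentiate $F_R(R)$ with respect to $R$ to get the pdf $f_R(R)$:

: $f_R(R) = \frac{d}{dR} \left[ \int_0^\infty f_y(y) \left( \int_0^{Ry} f_x(x) \, dx \right) dy \right].$

Move the differentiation inside the integral:

: $f_R(R) = \int_0^\infty f_y(y) \left( \frac{d}{dR} \int_0^{Ry} f_x(x) \, dx \right) dy,$

and since

: $\frac{d}{dR} \int_0^{Ry} f_x(x) \, dx = y \, f_x(Ry),$

then

: $f_R(R) = \int_0^\infty f_y(y) \, f_x(Ry) \, y \, dy.$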

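As an example, find the pdf of the ratio ''R'' when

: $f_x(x) = \alpha e^{-\alpha x}, \quad f_y(y) = \beta e^{-\beta y}, \quad x, y \ge 0.$

We have

: $\int_0^{Ry} f_x(x) \, dx = \left. -e^{-\alpha x} \right|_0^{Ry} = 1 - e^{-\alpha R y},$

thus

: $\begin{aligned} F_R(R) &= \int_0^\infty f_y(y) \left( 1 - e^{-\alpha R y} \right) dy = \int_0^\infty \beta e^{-\beta y} \left( 1 - e^{-\alpha R y} \right) dy \\ &= 1 - \frac{\beta}{\beta + \alpha R} \\ &= \frac{R}{\tfrac{\beta}{\alpha} + R}. \end{aligned}$

Differentiation with respect to ''R'' yields the pdf of ''R'':

: $f_R(R) = \frac{d}{dR} \left( \frac{R}{\tfrac{\beta}{\alpha} + R} \right) = \frac{\tfrac{\beta}{\alpha}}{\left( \tfrac{\beta}{\alpha} + R \right)^2}.$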
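As a numerical sanity check on the strip-integration result $f_R(R) = \int_0^\infty f_y(y) \, f_x(Ry) \, y \, dy$, the sketch below assumes exponential densities with rates `ALPHA` and `BETA` (illustrative values, not taken from any dataset) and compares a midpoint-rule evaluation of the integral with the closed form $(\beta/\alpha) / (\beta/\alpha + R)^2$ that this integral evaluates to for exponentials. The truncation point and step count are likewise assumptions.

```python
import math

ALPHA, BETA = 2.0, 3.0  # hypothetical rate parameters for X and Y


def f_x(x):
    """Exponential density with rate ALPHA on x >= 0."""
    return ALPHA * math.exp(-ALPHA * x)


def f_y(y):
    """Exponential density with rate BETA on y >= 0."""
    return BETA * math.exp(-BETA * y)


def ratio_pdf_positive(r, upper=40.0, steps=40_000):
    """Midpoint-rule approximation of the integral of f_y(y) f_x(r*y) y
    over 0 <= y <= upper (the infinite range is truncated)."""
    h = upper / steps
    total = 0.0
    for i in range(steps):
        y = (i + 0.5) * h
        total += f_y(y) * f_x(r * y) * y
    return total * h


def closed_form_pdf(r):
    """(beta/alpha) / (beta/alpha + r)^2 for the exponential example."""
    k = BETA / ALPHA
    return k / (k + r) ** 2


for r in (0.5, 1.0, 4.0):
    print(r, ratio_pdf_positive(r), closed_form_pdf(r))
```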

Moments of random ratios

From Mellin transform theory, for distributions existing only on the positive half-line $x \ge 0$, we have the product identity $\operatorname{E}[(UV)^p] = \operatorname{E}[U^p] \, \operatorname{E}[V^p]$ provided $U, V$ are independent. For the case of a ratio of samples like $\operatorname{E}[(X/Y)^p]$, in order to make use of this identity it is necessary to use moments of the inverse distribution. Set $1/Y = Z$ such that $\operatorname{E}[(XZ)^p] = \operatorname{E}[X^p] \, \operatorname{E}[Y^{-p}]$.

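The moment identity for a ratio of independent positive variables, $\operatorname{E}[(X/Y)^p] = \operatorname{E}[X^p] \, \operatorname{E}[Y^{-p}]$, can be checked by simulation. The sketch below assumes, purely for illustration, that ''X'' and ''Y'' are standard gamma variables with shapes `a` and `b`, for which $\operatorname{E}[G^p] = \Gamma(k + p)/\Gamma(k)$ when the shape is $k$ and $k + p > 0$. The shapes, the exponent `p`, the seed and the sample size are hypothetical choices.

```python
import math
import random


def gamma_moment(shape, p):
    """E[G^p] for G ~ Gamma(shape, scale=1); requires shape + p > 0."""
    return math.gamma(shape + p) / math.gamma(shape)


a, b, p = 3.0, 5.0, 1.0  # assumed shapes of X and Y, and moment order

rng = random.Random(2)
n = 200_000
xs = [rng.gammavariate(a, 1.0) for _ in range(n)]
ys = [rng.gammavariate(b, 1.0) for _ in range(n)]

# Monte Carlo estimate of E[(X/Y)^p] from independent draws.
mc_ratio_moment = sum((x / y) ** p for x, y in zip(xs, ys)) / n

# Product identity: E[X^p] * E[Y^(-p)], using the inverse moment of Y.
identity_value = gamma_moment(a, p) * gamma_moment(b, -p)

print("Monte Carlo:", mc_ratio_moment)
print("Identity:   ", identity_value)
```

Note that the inverse moment $\operatorname{E}[Y^{-p}]$ only exists here when the shape of ''Y'' exceeds ''p'', which is why the example keeps `b` well above `p`.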