In mathematics, the Łukaszyk–Karmowski metric is a function defining a distance between two random variables or two random vectors. This function is not a metric, as it does not satisfy the identity of indiscernibles condition of a metric: for two identical arguments its value is greater than zero. The concept is named after Szymon Łukaszyk and Wojciech Karmowski.

Continuous random variables

The Łukaszyk–Karmowski metric ''D'' between two continuous independent random variables ''X'' and ''Y'' is defined as:

:<math>D(X, Y) = \int_{-\infty}^\infty \int_{-\infty}^\infty |x-y| f(x) g(y) \, dx\, dy</math>

where ''f''(''x'') and ''g''(''y'') are the probability density functions of ''X'' and ''Y'' respectively.
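The definition can be checked numerically. The following is a minimal sketch, not a reference implementation: it assumes both densities vanish outside a finite interval and approximates the double integral of |''x'' − ''y''| ''f''(''x'') ''g''(''y'') with a midpoint rule. The names `lk_metric` and `uniform_pdf` are hypothetical helpers introduced only for this illustration.

```python
def lk_metric(f, g, lo, hi, n=400):
    """Midpoint-rule estimate of the double integral of
    |x - y| f(x) g(y) over the square [lo, hi] x [lo, hi]."""
    h = (hi - lo) / n
    pts = [lo + (k + 0.5) * h for k in range(n)]
    fx = [f(x) for x in pts]
    gy = [g(y) for y in pts]
    total = 0.0
    for x, fv in zip(pts, fx):
        for y, gv in zip(pts, gy):
            total += abs(x - y) * fv * gv
    return total * h * h


def uniform_pdf(a, b):
    """Density of the uniform distribution on [a, b] (illustrative choice)."""
    return lambda t: 1.0 / (b - a) if a <= t <= b else 0.0


f = uniform_pdf(0.0, 1.0)
g = uniform_pdf(2.0, 3.0)

d_xx = lk_metric(f, f, 0.0, 1.0)          # same distribution twice
d_xy = lk_metric(f, g, 0.0, 3.0, n=600)   # well-separated distributions

print(d_xx, d_xy)
```

For two independent variables uniformly distributed on [0, 1] the exact value is 1/3, so the distance of a distribution to itself is positive, while for the well-separated pair the result is simply the distance between the means.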

One may easily show that the ''metrics'' defined above do not satisfy the identity of indiscernibles condition required of the metric of a metric space. In fact they satisfy this condition if and only if both arguments ''X'', ''Y'' are certain events described by Dirac delta probability density functions. In such a case:

:<math>D_{\delta\delta}(X, Y) = \int_{-\infty}^\infty \int_{-\infty}^\infty |x-y| \delta(x-\mu_x) \delta(y-\mu_y) \, dx\, dy = |\mu_x-\mu_y|</math>

the Łukaszyk–Karmowski metric simply transforms into the metric between the expected values <math>\mu_x</math>, <math>\mu_y</math> of the variables ''X'' and ''Y'', and obviously:

:<math>D_{\delta\delta}(X, X) = |\mu_x-\mu_x| = 0</math>

For all the other real cases however:

:<math>D(X, X) > 0</math>

The Łukaszyk–Karmowski metric satisfies the remaining non-negativity and symmetry conditions of a metric directly from its definition (symmetry of the modulus), as well as the subadditivity (triangle inequality) condition. For a third independent random variable ''Z'' with probability density function ''h''(''z''):

:<math>\begin{align}
D(X, Z) &= \int_{-\infty}^\infty \int_{-\infty}^\infty |x-z| f(x) h(z) \, dx\, dz \\
&= \int_{-\infty}^\infty \int_{-\infty}^\infty \int_{-\infty}^\infty |x-z| f(x) g(y) h(z) \, dx\, dy\, dz \\
&= \int_{-\infty}^\infty \int_{-\infty}^\infty \int_{-\infty}^\infty |(x-y)+(y-z)| f(x) g(y) h(z) \, dx\, dy\, dz \\
&\le \int_{-\infty}^\infty \int_{-\infty}^\infty \int_{-\infty}^\infty (|x-y|+|y-z|) f(x) g(y) h(z) \, dx\, dy\, dz \\
&= \int_{-\infty}^\infty \int_{-\infty}^\infty |x-y| f(x) g(y) \, dx\, dy + \int_{-\infty}^\infty \int_{-\infty}^\infty |y-z| g(y) h(z) \, dy\, dz
\end{align}</math>

Thus

:<math>D(X, Z) \le D(X, Y) + D(Y, Z)</math>

In the case where ''X'' and ''Y'' are dependent on each other, having a joint probability density function ''f''(''x'', ''y''), the L–K metric has the following form:

:<math>D(X, Y) = \int_{-\infty}^\infty \int_{-\infty}^\infty |x-y| f(x, y) \, dx\, dy</math>

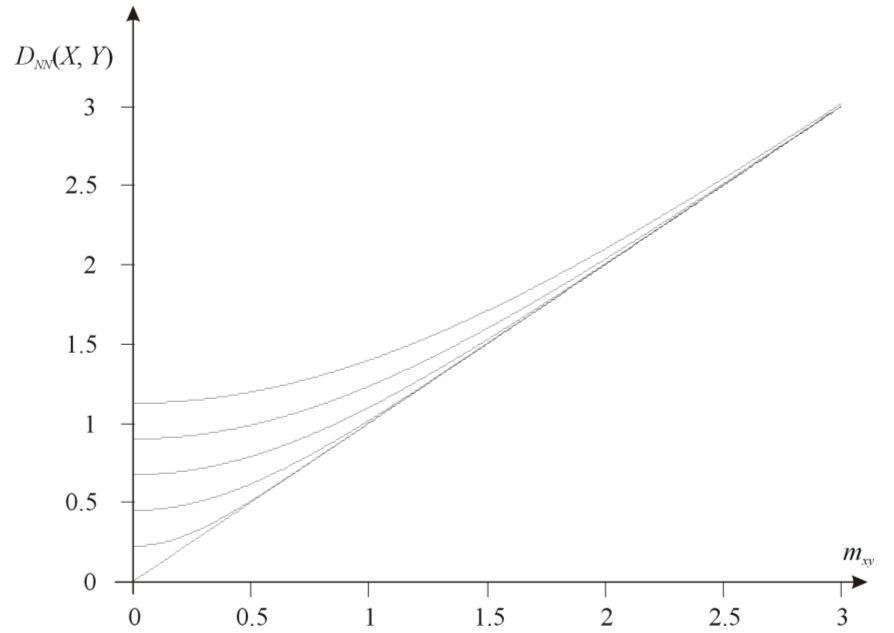

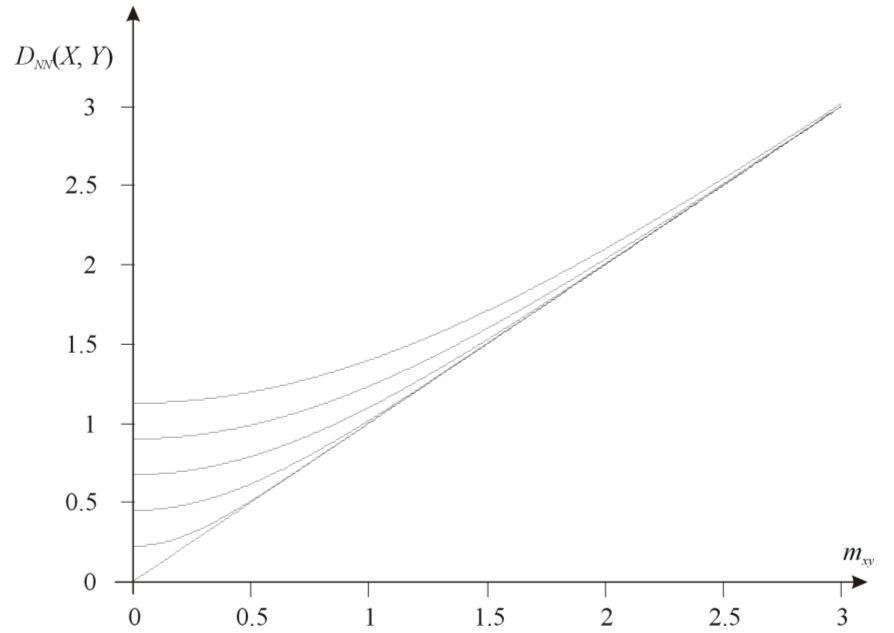

Example: two continuous random variables with normal distributions (NN)

If both random variables ''X'' and ''Y'' have normal distributions with the same standard deviation σ, and if moreover ''X'' and ''Y'' are independent, then ''D''(''X'', ''Y'') is given by

:<math>D_{NN}(X, Y) = \mu_{xy} + \frac{2\sigma}{\sqrt\pi} \exp\left(-\frac{\mu_{xy}^2}{4\sigma^2}\right) - \mu_{xy} \operatorname{erfc}\left(\frac{\mu_{xy}}{2\sigma}\right)</math>

where

:<math>\mu_{xy} = |\mu_x - \mu_y|</math>

erfc(''x'') is the complementary error function, and the subscript NN indicates the type of the L–K metric.

In this case, the lowest possible value of the function is given by

:<math>D_{NN}(X, X) = \frac{2\sigma}{\sqrt\pi}</math>
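As an illustration of the normal case, the sketch below evaluates the closed form μ + (2σ/√π)·exp(−μ²/(4σ²)) − μ·erfc(μ/(2σ)), with μ = |μ_x − μ_y|, which is the mean absolute difference of two independent normal variables sharing the standard deviation σ. The function name `d_nn` is a hypothetical helper, not part of any library.

```python
import math


def d_nn(mu_x, mu_y, sigma):
    """L-K metric of two independent normal variables with means
    mu_x, mu_y and a common standard deviation sigma > 0."""
    mu = abs(mu_x - mu_y)
    gauss_term = 2.0 * sigma / math.sqrt(math.pi) * math.exp(-mu * mu / (4.0 * sigma * sigma))
    return mu + gauss_term - mu * math.erfc(mu / (2.0 * sigma))


# Identical distributions: the value is 2*sigma/sqrt(pi), not zero.
print(d_nn(0.0, 0.0, 1.0))
# Widely separated means: the value approaches |mu_x - mu_y|.
print(d_nn(0.0, 10.0, 1.0))
```

The two calls show the curved behaviour near coinciding means and the near-linear behaviour once the distributions no longer overlap appreciably.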

Example: two continuous random variables with uniform distributions (RR)

When both random variables ''X'' and ''Y'' have uniform distributions (''R'') with the same standard deviation σ, ''D''(''X'', ''Y'') is given by

:<math>D_{RR}(X, Y) = \begin{cases} \dfrac{24\sqrt{3}\sigma^3 - \mu_{xy}^3 + 6\sqrt{3}\sigma\mu_{xy}^2}{36\sigma^2}, & \mu_{xy} < 2\sqrt{3}\sigma, \\ \mu_{xy}, & \mu_{xy} \ge 2\sqrt{3}\sigma. \end{cases}</math>

The minimal value of this kind of L–K metric is

:<math>D_{RR}(X, X) = \frac{2\sigma}{\sqrt{3}}</math>
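A small sketch of the uniform case follows. It assumes the piecewise closed form (24√3σ³ − μ³ + 6√3σμ²)/(36σ²) for μ < 2√3σ and μ otherwise, where μ = |μ_x − μ_y| and 2√3σ is the width of a uniform distribution with standard deviation σ. The name `d_rr` is hypothetical.

```python
import math


def d_rr(mu_x, mu_y, sigma):
    """L-K metric of two independent uniform variables with means
    mu_x, mu_y and a common standard deviation sigma > 0."""
    mu = abs(mu_x - mu_y)
    sqrt3 = math.sqrt(3.0)
    width = 2.0 * sqrt3 * sigma  # support width of each distribution
    if mu >= width:
        # The supports no longer overlap: plain distance between the means.
        return mu
    return (24.0 * sqrt3 * sigma**3 - mu**3 + 6.0 * sqrt3 * sigma * mu**2) / (36.0 * sigma**2)


sigma_unit = 1.0 / math.sqrt(12.0)   # standard deviation of U(0, 1)
print(d_rr(0.0, 0.0, sigma_unit))    # two copies of U(0, 1)
print(d_rr(0.0, 5.0, 1.0))           # non-overlapping supports
```

For two copies of the uniform distribution on [0, 1] the function returns 1/3, which agrees with the minimal value 2σ/√3.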

Discrete random variables

If the random variables ''X'' and ''Y'' are characterized by discrete probability distributions, the Łukaszyk–Karmowski metric ''D'' is defined as:

:<math>D(X, Y) = \sum_i \sum_j |x_i-y_j| P(X=x_i) P(Y=y_j)</math>

For example, for two discrete Poisson-distributed random variables ''X'' and ''Y'' with rates <math>\lambda_x</math> and <math>\lambda_y</math> the equation above transforms into:

:<math>D_{PP}(X, Y) = \sum_{x=0}^\infty \sum_{y=0}^\infty |x-y| \frac{\lambda_x^x \lambda_y^y e^{-(\lambda_x+\lambda_y)}}{x!\,y!}</math>
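The discrete case can be sketched for two independent Poisson variables by summing |''x'' − ''y''| weighted by the product of the two probability mass functions. The truncation point `n_max` is an assumption of this sketch (the exact sum is infinite), and the function names are hypothetical.

```python
import math


def poisson_pmf(k, lam):
    """Probability that a Poisson variable with rate lam equals k."""
    return math.exp(-lam) * lam**k / math.factorial(k)


def d_pp(lam_x, lam_y, n_max=60):
    """Truncated double sum of |x - y| P(X = x) P(Y = y) for independent
    Poisson variables; n_max should be far above both rates."""
    px = [poisson_pmf(k, lam_x) for k in range(n_max + 1)]
    py = [poisson_pmf(k, lam_y) for k in range(n_max + 1)]
    return sum(abs(x - y) * px[x] * py[y]
               for x in range(n_max + 1)
               for y in range(n_max + 1))


print(d_pp(2.0, 2.0))  # positive although both arguments are identical
print(d_pp(1.0, 5.0))  # at least the distance |1 - 5| between the means
```

With rates of a few units the neglected tail beyond `n_max = 60` is far below floating-point precision; larger rates would need a larger cutoff.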

Random vectors

The Łukaszyk–Karmowski metric of random variables may be easily extended into a metric ''D''(X, Y) of random vectors X, Y by substituting |''x'' − ''y''| with any metric operator ''d''(x, y):

:<math>D(\mathbf{X}, \mathbf{Y}) = \int_\Omega \int_\Omega d(\mathbf{x}, \mathbf{y}) F(\mathbf{x}) G(\mathbf{y}) \, d\Omega_x \, d\Omega_y</math>

where ''F''(x) and ''G''(y) are the probability density functions of X and Y. For example, substituting ''d''(x, y) with the Euclidean metric and assuming two-dimensional random vectors X, Y yields:

:<math>D(\mathbf{X}, \mathbf{Y}) = \int_{-\infty}^\infty \int_{-\infty}^\infty \int_{-\infty}^\infty \int_{-\infty}^\infty \sqrt{(x_1-y_1)^2+(x_2-y_2)^2} \, F(x_1, x_2) G(y_1, y_2) \, dx_1\, dx_2\, dy_1\, dy_2</math>

This form of the L–K metric is also greater than zero for the same vectors being measured (with the exception of two vectors having Dirac delta coefficients) and satisfies the non-negativity and symmetry conditions of a metric. The proofs are analogous to the ones provided for the L–K metric of random variables discussed above.

If the random vectors X and Y are dependent on each other, sharing a common joint probability distribution ''F''(X, Y), the L–K metric has the form:

:<math>D(\mathbf{X}, \mathbf{Y}) = \int_\Omega \int_\Omega d(\mathbf{x}, \mathbf{y}) F(\mathbf{x}, \mathbf{y}) \, d\Omega_x \, d\Omega_y</math>

Random vectors – the Euclidean form

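If the random vectors X and Y are not only mutually independent but also all components of each vector are mutually independent, the Łukaszyk–Karmowski metric for random vectors is defined as:

:<math>D_{**}^{(p)}(\mathbf{X}, \mathbf{Y}) = \left( \sum_i D_{**}(X_i, Y_i)^p \right)^{\frac{1}{p}}</math>

where:

:<math>D_{**}(X_i, Y_i)</math>

is a particular form of the L–K metric of random variables, chosen depending on the distributions of the particular coefficients <math>X_i</math> and <math>Y_i</math> of the vectors X, Y.

Such a form of the L–K metric also shares the common properties of all L–K metrics.

* It does not satisfy the identity of indiscernibles condition:

:<math>D_{**}^{(p)}(\mathbf{X}, \mathbf{X}) \ne 0</math>

:since:

:<math>D_{**}^{(p)}(\mathbf{X}, \mathbf{X}) = \left( \sum_i D_{**}(X_i, X_i)^p \right)^{\frac{1}{p}}</math>

:but from the properties of the L–K metric for random variables it follows that:

:<math>D_{**}(X_i, X_i) > 0</math>

* It is non-negative and symmetric since the particular coefficients are also non-negative and symmetric:

:<math>D_{**}(X_i, Y_i) \ge 0</math>

:<math>D_{**}(X_i, Y_i) = D_{**}(Y_i, X_i)</math>

* It satisfies the triangle inequality:

:<math>D_{**}^{(p)}(\mathbf{X}, \mathbf{Z}) \le D_{**}^{(p)}(\mathbf{X}, \mathbf{Y}) + D_{**}^{(p)}(\mathbf{Y}, \mathbf{Z})</math>

:since (cf. Minkowski inequality):

:<math>\left( \sum_i D_{**}(X_i, Z_i)^p \right)^{\frac{1}{p}} \le \left( \sum_i \left( D_{**}(X_i, Y_i) + D_{**}(Y_i, Z_i) \right)^p \right)^{\frac{1}{p}} \le \left( \sum_i D_{**}(X_i, Y_i)^p \right)^{\frac{1}{p}} + \left( \sum_i D_{**}(Y_i, Z_i)^p \right)^{\frac{1}{p}}</math>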
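The component-wise construction can be sketched as a ''p''-norm of per-component distances. The helper below is hypothetical and deliberately agnostic about how each component distance was obtained: it only assumes that a list of non-negative component-wise L–K values and an exponent ''p'' ≥ 1 are supplied.

```python
import math


def lk_vector(component_distances, p=2.0):
    """Combine component-wise L-K distances D(X_i, Y_i) into
    (sum_i D(X_i, Y_i)**p) ** (1/p)."""
    if p < 1.0:
        raise ValueError("p must be at least 1 for the triangle inequality to hold")
    return sum(d**p for d in component_distances) ** (1.0 / p)


# A 2-D vector compared with itself, each component normal with sigma = 1:
# every component contributes 2*sigma/sqrt(pi), so the result is not zero.
self_distance = 2.0 / math.sqrt(math.pi)
print(lk_vector([self_distance, self_distance], p=2.0))
```

Because every component distance of a non-degenerate vector with itself is positive, the combined value is positive as well, which is the failure of the identity of indiscernibles stated in the list above.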

Physical interpretation

The Łukaszyk–Karmowski metric may be considered as a distance between quantum-mechanical particles described by wavefunctions ''ψ'', where the probability ''dP'' that a given particle is present in a given volume of space ''dV'' amounts to:

:<math>dP = |\psi(x, y, z)|^2 \, dV</math>

A quantum particle in a box

For example, the wavefunction of a quantum particle (''X'') in a box of length ''L'' has the form:

:<math>\psi_m(x) = \sqrt{\frac{2}{L}} \sin\left(\frac{m \pi x}{L}\right)</math>

In this case the L–K metric between this particle and any point ''ξ'' of the box amounts to:

:<math>D(\xi, X) = \int_0^L |x-\xi| \, |\psi_m(x)|^2 \, dx = \frac{\xi^2}{L} - \xi + L \left( \frac{1}{2} + \frac{\cos\left(\frac{2 m \pi \xi}{L}\right) - 1}{2 m^2 \pi^2} \right)</math>

From the properties of the L–K metric it follows that the sum of the distance between the edge of the box (''ξ'' = 0 or ''ξ'' = ''L'') and any given point and the L–K metric between this point and the particle ''X'' is greater than the L–K metric between the edge of the box and the particle. E.g. for a quantum particle ''X'' at an energy level ''m'' = 2 and point ''ξ'' = 0.2''L'':

:<math>d(0, 0.2L) + D(0.2L, X) \approx 0.2L + 0.3171L > D(0, X) = 0.5L</math>

The L–K metric between the particle and the edge of the box (''D''(0, ''X'') or ''D''(''L'', ''X'')) amounts to 0.5''L'' and is independent of the particle's energy level.
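The worked example for ''m'' = 2 can be reproduced with a short sketch. It assumes the box wavefunction ψ_m(x) = √(2/L)·sin(mπx/L), so that |ψ_m|² is the position density, and compares the closed form ξ²/L − ξ + L(1/2 + (cos(2mπξ/L) − 1)/(2m²π²)) with a midpoint-rule integral of |x − ξ|·|ψ_m(x)|². Both function names are hypothetical.

```python
import math


def d_point_particle(xi, m, length=1.0):
    """Closed-form L-K distance between the point xi and a particle
    at energy level m in a box [0, length]."""
    osc = (math.cos(2.0 * m * math.pi * xi / length) - 1.0) / (2.0 * m * m * math.pi**2)
    return xi * xi / length - xi + length * (0.5 + osc)


def d_point_particle_numeric(xi, m, length=1.0, n=20000):
    """Midpoint-rule integral of |x - xi| * |psi_m(x)|^2 over the box."""
    h = length / n
    total = 0.0
    for k in range(n):
        x = (k + 0.5) * h
        density = 2.0 / length * math.sin(m * math.pi * x / length) ** 2
        total += abs(x - xi) * density
    return total * h


print(d_point_particle(0.2, 2))          # about 0.3171 for L = 1
print(d_point_particle_numeric(0.2, 2))  # numerical cross-check
print(d_point_particle(0.0, 2))          # distance to the edge
```

At either edge the cosine term vanishes for every integer ''m'', which is why the edge distance stays at 0.5''L'' regardless of the energy level.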


Two quantum particles in a box

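The distance between two particles bouncing in a one-dimensional box of length ''L'', having time-independent wavefunctions:

:<math>\psi_m(x) = \sqrt{\frac{2}{L}} \sin\left(\frac{m \pi x}{L}\right)</math>

:<math>\psi_n(y) = \sqrt{\frac{2}{L}} \sin\left(\frac{n \pi y}{L}\right)</math>

may be defined in terms of the Łukaszyk–Karmowski metric of independent random variables as:

:<math>D(X, Y) = \int_0^L \int_0^L |x-y| \, |\psi_m(x)|^2 \, |\psi_n(y)|^2 \, dx\, dy = \begin{cases} L \dfrac{4\pi^2 m^2 n^2 - 6m^2 - 6n^2}{12\pi^2 m^2 n^2}, & m \ne n, \\ L \dfrac{4\pi^2 m^2 - 15}{12\pi^2 m^2}, & m = n. \end{cases}</math>

The distance between particles ''X'' and ''Y'' is minimal for ''m'' = 1 and ''n'' = 1, that is for the minimum energy levels of these particles, and amounts to:

:<math>\min(D(X, Y)) = L \frac{4\pi^2 - 15}{12\pi^2} \approx 0.2067L</math>

According to the properties of this function, the minimum distance is nonzero. For greater energy levels ''m'', ''n'' it approaches ''L''/3.

Popular explanation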

Suppose we have to measure the distance between point ''µx'' and point ''µy'', which are collinear with some point ''0''. Suppose further that we assigned this task to two independent and large groups of surveyors equipped with tape measures, wherein each surveyor of the first group measures the distance between ''0'' and ''µx'' and each surveyor of the second group measures the distance between ''0'' and ''µy''.

Under these assumptions we may consider the two sets of received observations ''xi'', ''yj'' as random variables ''X'' and ''Y'' having normal distributions of the same variance ''σ''² and distributed over the "factual locations" of points ''µx'', ''µy''.

Calculating the arithmetic mean of |''xi'' − ''yj''| for all pairs, we should then obtain the value of the L–K metric ''DNN''(''X'', ''Y''). Its characteristic curvilinearity arises from the symmetry of the modulus and the overlapping of the distributions ''f''(''x''), ''g''(''y'') when their means approach each other.
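The surveyor thought experiment can be simulated directly. The sketch below is only an illustration under assumed values (1000 surveyors per group, points at 0 and 1, σ = 1, a fixed random seed): it draws normally distributed readings, averages |x_i − y_j| over all pairs, and compares the result with the closed form for two independent normal variables of equal standard deviation. The function names are hypothetical.

```python
import math
import random


def d_nn(mu_x, mu_y, sigma):
    """Closed-form mean absolute difference of two independent normal
    variables with a common standard deviation sigma."""
    mu = abs(mu_x - mu_y)
    return (mu
            + 2.0 * sigma / math.sqrt(math.pi) * math.exp(-mu * mu / (4.0 * sigma * sigma))
            - mu * math.erfc(mu / (2.0 * sigma)))


def mean_abs_pairs(xs, ys):
    """Arithmetic mean of |x - y| over every pair of readings."""
    return sum(abs(x - y) for x in xs for y in ys) / (len(xs) * len(ys))


rng = random.Random(0)              # fixed seed so the run is repeatable
mu_x, mu_y, sigma = 0.0, 1.0, 1.0   # assumed "factual locations" and spread
xs = [rng.gauss(mu_x, sigma) for _ in range(1000)]  # first group of surveyors
ys = [rng.gauss(mu_y, sigma) for _ in range(1000)]  # second group of surveyors

estimate = mean_abs_pairs(xs, ys)
print(estimate, d_nn(mu_x, mu_y, sigma))
```

The pairwise average exceeds the plain distance |µx − µy| between the points because readings from overlapping distributions contribute positive differences on both sides.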

An interesting experiment, the results of which coincide with the properties of the L–K metric, was performed in 1967 by Robert Moyer and Thomas Landauer, who measured the precise time an adult took to decide which of two Arabic digits was the larger. When the two digits were numerically distant, such as 2 and 9, subjects responded quickly and accurately. But their response time slowed by more than 100 milliseconds when the digits were closer, such as 5 and 6, and subjects then erred as often as once in every ten trials. The distance effect was present both among highly intelligent persons and among those who were trained to escape it.

Practical applications

A Łukaszyk–Karmowski metric may be used instead of a metric operator (commonly the Euclidean distance) in various numerical methods, and in particular in approximation algorithms such as radial basis function networks, inverse distance weighting or Kohonen self-organizing maps.

This approach is physically based, allowing the real uncertainty in the location of the sample points to be considered.

The Łukaszyk–Karmowski metric is the only metric that can be used in the context of observer-dependent measurements (Massimiliano Proietti, Alexander Pickston, Francesco Graffitti, Peter Barrow, Dmytro Kundys, Cyril Branciard, Martin Ringbauer, Alessandro Fedrizzi, ''Experimental test of local observer independence'', Science Advances, 20 Sep 2019, Vol. 5, no. 9, eaaw9832, DOI: 10.1126/sciadv.aaw9832). It is zero only for two measurements having the same spatiotemporal coordinates for a given observer.

See also

* Probabilistic metric space
* Statistical distance
