
Particle In A Box

In quantum mechanics, the particle in a box model (also known as the infinite potential well or the infinite square well) describes a particle free to move in a small space surrounded by impenetrable barriers. The model is mainly used as a hypothetical example to illustrate the differences between classical and quantum systems. In classical systems, for example, a particle trapped inside a large box can move at any speed within the box and it is no more likely to be found at one position than another. However, when the well becomes very narrow (on the scale of a few nanometers), quantum effects become important. The particle may only occupy certain positive energy levels. Likewise, it can never have zero energy, meaning that the particle can never "sit still". Additionally, it is more likely to be found at certain positions than at others, depending on its energy level. The particle may never be detected at certain positions, known as spatial nodes. The particle in a box mo ...
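
The discrete energy levels and spatial nodes described above can be illustrated with a minimal sketch. It assumes the standard one-dimensional textbook result E_n = n²π²ħ²/(2mL²) with wavefunctions ψ_n(x) = sqrt(2/L)·sin(nπx/L); the function names, the choice of an electron, and the 1 nm box width are illustrative assumptions, not part of the snippet.

```python
import math

HBAR = 1.054571817e-34            # reduced Planck constant, J*s
ELECTRON_MASS = 9.1093837015e-31  # electron rest mass, kg
EV = 1.602176634e-19              # joules per electronvolt


def box_energy(n, length, mass=ELECTRON_MASS):
    """Energy (J) of level n >= 1 in a 1D infinite well of width `length` (m)."""
    if n < 1:
        raise ValueError("n must be a positive integer; there is no zero-energy state")
    return (n * math.pi * HBAR) ** 2 / (2.0 * mass * length ** 2)


def box_wavefunction(n, x, length):
    """Stationary-state amplitude psi_n(x) for 0 <= x <= length."""
    return math.sqrt(2.0 / length) * math.sin(n * math.pi * x / length)


# Hypothetical example: an electron in a 1 nm wide well.
WIDTH = 1e-9
ground_state_ev = box_energy(1, WIDTH) / EV
# The n = 2 state has a node at the centre of the box.
node_amplitude = box_wavefunction(2, WIDTH / 2, WIDTH)
```

The energies scale as n², so the second level is four times the ground-state energy, and the lowest allowed energy is strictly positive.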

Dispersion Relation

In the physical sciences and electrical engineering, dispersion relations describe the effect of dispersion on the properties of waves in a medium. A dispersion relation relates the wavelength or wavenumber of a wave to its frequency. Given the dispersion relation, one can calculate the phase velocity and group velocity of waves in the medium, as a function of frequency. In addition to the geometry-dependent and material-dependent dispersion relations, the overarching Kramers–Kronig relations describe the frequency dependence of wave propagation and attenuation. Dispersion may be caused either by geometric boundary conditions (waveguides, shallow water) or by interaction of the waves with the transmitting medium. Elementary particles, considered as matter waves, have a nontrivial dispersion relation even in the absence of geometric constraints and other media. In the presence of dispersion, wave velocity is no longer uniquely defined, giving rise to the distinction ...
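
As a rough sketch of how phase and group velocity follow from a dispersion relation ω(k), the code below uses the usual definitions v_p = ω/k and v_g = dω/dk, with the derivative taken numerically. The deep-water gravity-wave relation ω = sqrt(g·k) is an assumed example chosen for illustration; the function names are hypothetical.

```python
import math


def phase_velocity(omega, k):
    """Phase velocity omega(k) / k for a dispersion relation omega(k)."""
    return omega(k) / k


def group_velocity(omega, k, rel_step=1e-5):
    """Group velocity d(omega)/dk, estimated with a central difference."""
    h = k * rel_step
    return (omega(k + h) - omega(k - h)) / (2.0 * h)


def deep_water(k, g=9.81):
    """Assumed example: deep-water gravity waves, omega = sqrt(g * k)."""
    return math.sqrt(g * k)


k = 2.0  # wavenumber in rad/m (illustrative value)
v_phase = phase_velocity(deep_water, k)
v_group = group_velocity(deep_water, k)
```

For this particular relation the group velocity comes out at half the phase velocity, which is one concrete way the two notions of "wave velocity" can differ in a dispersive medium.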

Confined Particle Dispersion - Positive

The Abandoned (also known as The Confines, and Confined) is a 2015 American supernatural horror film directed by Eytan Rockaway, and written by Ido Fluk. It stars Jason Patric, Louisa Krause and Mark Margolis. The film premiered at the Los Angeles Film Festival on June 13, 2015, and was released in theaters and video on demand on January 8, 2016 by IFC Films. The film concerns a struggling young woman, who tries to reacquire her normal life by taking a job as a security guard at an abandoned apartment building. The Abandoned marked Rockaway's debut as director. Plot: Julia Streak (Louisa Krause) is a troubled, antipsychotic-dependent young woman who takes a job as a night guard at a grand but abandoned apartment complex so she can support her daughter, Clara. She is accepted by master keeper Dixon Boothe (Ezra Knight) and introduced to the only other security guard for the complex, the rude paraplegic Dennis Cooper (Jason Patric), who has been working at the com ...

Nat (unit)

The natural unit of information (symbol: nat), sometimes also nit or nepit, is a unit of information based on natural logarithms and powers of e, rather than the powers of 2 and base-2 logarithms that define the shannon. This unit is also known by its unit symbol, the nat. One nat is the information content of an event when the probability of that event occurring is 1/e. One nat is equal to 1/ln 2 shannons ≈ 1.44 Sh or, equivalently, 1/ln 10 hartleys ≈ 0.434 Hart. History: Boulton and Wallace used the term "nit" in conjunction with minimum message length, which was subsequently changed by the minimum description length community to "nat" to avoid confusion with the nit used as a unit of luminance. Alan Turing used the "natural ban". Entropy: Shannon entropy (information entropy), being the expected value of the information of an event, is a quantity of the same type and with the same units as information. The International System ...
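
The unit conversions quoted above amount to a change of logarithm base. A minimal sketch, with hypothetical function names:

```python
import math


def nats_to_shannons(nats):
    """Convert nats to shannons (bits): divide by ln 2."""
    return nats / math.log(2)


def nats_to_hartleys(nats):
    """Convert nats to hartleys: divide by ln 10."""
    return nats / math.log(10)


def information_content_nats(probability):
    """Information content, in nats, of an event with the given probability."""
    return -math.log(probability)


one_nat_in_sh = nats_to_shannons(1.0)      # about 1.44 Sh
one_nat_in_hart = nats_to_hartleys(1.0)    # about 0.434 Hart
```

An event with probability 1/e carries exactly one nat under this definition.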

Uncertainty Principle

In quantum mechanics, the uncertainty principle (also known as Heisenberg's uncertainty principle) is any of a variety of mathematical inequalities asserting a fundamental limit to the accuracy with which the values for certain pairs of physical quantities of a particle, such as position, x, and momentum, p, can be predicted from initial conditions. Such variable pairs are known as complementary variables or canonically conjugate variables; and, depending on interpretation, the uncertainty principle limits to what extent such conjugate properties maintain their approximate meaning, as the mathematical framework of quantum physics does not support the notion of simultaneously well-defined conjugate properties expressed by a single value. The uncertainty principle implies that it is in general not possible to predict the value of a quantity with arbitrary certainty, even if all initial conditions are specified. Introduced first in 1927 by the German physicist Wern ...

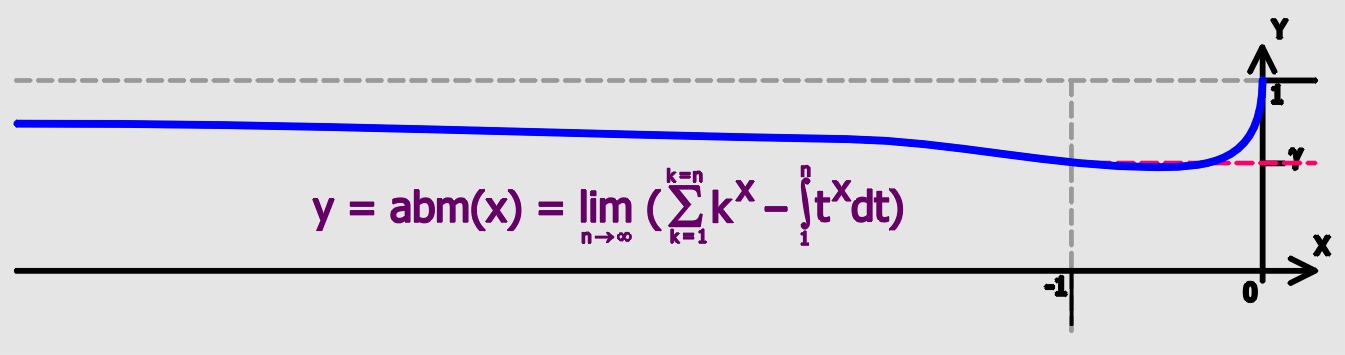

Euler-Mascheroni Constant

Euler's constant (sometimes also called the Euler–Mascheroni constant) is a mathematical constant usually denoted by the lowercase Greek letter gamma (γ). It is defined as the limiting difference between the harmonic series and the natural logarithm, denoted here by $\log$: $$\gamma = \lim_{n\to\infty}\left(-\log n + \sum_{k=1}^n \frac{1}{k}\right) = \int_1^\infty\left(-\frac{1}{x}+\frac{1}{\lfloor x\rfloor}\right)\,dx.$$ Here, $\lfloor x\rfloor$ represents the floor function. The numerical value of Euler's constant, to 50 decimal places, is: 0.57721566490153286060651209008240243104215933593992... History: The constant first appeared in a 1734 paper by the Swiss mathematician Leonhard Euler, titled "De Progressionibus harmonicis observationes" (Eneström Index 43). Euler used the notations C and O for the constant. In 1790, Italian mathematician Lorenzo Mascheroni used the notations A and a for the constant. The notation γ appears nowhere in the writings of either Euler or Mascheroni, and was chosen at a later time perhaps because of the constant's connect ...
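
The limit definition can be checked numerically. The sketch below simply evaluates the harmonic sum minus log n for a large n; it is a slow, illustrative approximation (the error shrinks roughly like 1/(2n)), not an efficient way to compute the constant. The function name and the choice of n are assumptions.

```python
import math


def euler_gamma_estimate(n):
    """Approximate Euler's constant as H_n - log(n), where H_n = 1 + 1/2 + ... + 1/n."""
    harmonic = math.fsum(1.0 / k for k in range(1, n + 1))
    return harmonic - math.log(n)


# With n = 10**6 the estimate agrees with 0.5772156649... to about six decimals.
gamma_estimate = euler_gamma_estimate(10**6)
```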

Entropy (information)

In information theory, the entropy of a random variable is the average level of "information", "surprise", or "uncertainty" inherent to the variable's possible outcomes. Given a discrete random variable $X$, which takes values in the alphabet $\mathcal{X}$ and is distributed according to $p: \mathcal{X}\to[0, 1]$: $$\mathrm{H}(X) := -\sum_{x \in \mathcal{X}} p(x) \log p(x) = \mathbb{E}[-\log p(X)],$$ where $\Sigma$ denotes the sum over the variable's possible values. The choice of base for $\log$, the logarithm, varies for different applications. Base 2 gives the unit of bits (or "shannons"), while base e gives "natural units" nat, and base 10 gives units of "dits", "bans", or "hartleys". An equivalent definition of entropy is the expected value of the self-information of a variable. The concept of information entropy was introduced by Claude Shannon in his 1948 paper "A Mathematical Theory of Communication", and is also referred to as Shannon entropy. Shannon's theory ...
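
A minimal sketch of the entropy definition for a finite distribution, with the logarithm base as a parameter so the same function returns bits, nats, or hartleys. The function name is hypothetical, and zero-probability outcomes are skipped on the usual convention that 0·log 0 = 0.

```python
import math


def shannon_entropy(probabilities, base=2.0):
    """Entropy -sum p*log(p) of a discrete distribution, in units set by `base`."""
    if any(p < 0 for p in probabilities):
        raise ValueError("probabilities must be non-negative")
    if abs(sum(probabilities) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    # Terms with p == 0 contribute nothing (convention: 0 * log 0 = 0).
    return -sum(p * math.log(p, base) for p in probabilities if p > 0)


fair_coin_bits = shannon_entropy([0.5, 0.5])             # base 2: bits (shannons)
fair_coin_nats = shannon_entropy([0.5, 0.5], math.e)     # base e: nats
biased_coin_bits = shannon_entropy([0.9, 0.1])           # less uncertain than a fair coin
```

A fair coin gives one bit, and any biased coin gives less, which matches the reading of entropy as average uncertainty.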

Heisenberg Uncertainty Principle

In quantum mechanics, the uncertainty principle (also known as Heisenberg's uncertainty principle) is any of a variety of mathematical inequalities asserting a fundamental limit to the accuracy with which the values for certain pairs of physical quantities of a particle, such as position, x, and momentum, p, can be predicted from initial conditions. Such variable pairs are known as complementary variables or canonically conjugate variables; and, depending on interpretation, the uncertainty principle limits to what extent such conjugate properties maintain their approximate meaning, as the mathematical framework of quantum physics does not support the notion of simultaneously well-defined conjugate properties expressed by a single value. The uncertainty principle implies that it is in general not possible to predict the value of a quantity with arbitrary certainty, even if all initial conditions are specified. Introduced first in 1927 by the German physicist Werner ...

Expectation Value

In probability theory, the expected value (also called expectation, expectancy, mathematical expectation, mean, average, or first moment) is a generalization of the weighted average. Informally, the expected value is the arithmetic mean of a large number of independently selected outcomes of a random variable. The expected value of a random variable with a finite number of outcomes is a weighted average of all possible outcomes. In the case of a continuum of possible outcomes, the expectation is defined by integration. In the axiomatic foundation for probability provided by measure theory, the expectation is given by Lebesgue integration. The expected value of a random variable $X$ is often denoted by $E(X)$, $E[X]$, or $EX$, with $E$ also often stylized as $\mathbb{E}$. History: The idea of the expected value originated in the middle of the 17th century from the study of the so-called problem of points, which seeks to divide the stakes in a fair way between two players, who have to end t ...
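
For a random variable with finitely many outcomes, the weighted-average definition can be sketched directly. The function name and the fair-die example are illustrative assumptions.

```python
def expected_value(outcomes, probabilities):
    """Weighted average sum(x * p) over a finite set of outcomes."""
    if len(outcomes) != len(probabilities):
        raise ValueError("each outcome needs exactly one probability")
    if abs(sum(probabilities) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(x * p for x, p in zip(outcomes, probabilities))


# Assumed example: one roll of a fair six-sided die.
die_faces = [1, 2, 3, 4, 5, 6]
die_mean = expected_value(die_faces, [1.0 / 6.0] * 6)
```

The result for the die is 3.5, a value the die itself can never show, which is why the expectation is better read as a long-run average than as a "typical" outcome.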

Sinc Function

In mathematics, physics and engineering, the sinc function, denoted by $\operatorname{sinc}(x)$, has two forms, normalized and unnormalized. In mathematics, the historical unnormalized sinc function is defined for $x \neq 0$ by $\operatorname{sinc} x = \frac{\sin x}{x}$. Alternatively, the unnormalized sinc function is often called the sampling function, indicated as Sa(x). In digital signal processing and information theory, the normalized sinc function is commonly defined for $x \neq 0$ by $\operatorname{sinc} x = \frac{\sin(\pi x)}{\pi x}$. In either case, the value at $x = 0$ is defined to be the limiting value $\operatorname{sinc} 0 := \lim_{x \to 0}\frac{\sin(a x)}{a x} = 1$ for all real $a \neq 0$. The normalization causes the definite integral of the function over the real numbers to equal 1 (whereas the same integral of the unnormalized sinc function has a value of $\pi$). As a further useful property, the zeros of the normalized sinc function are the nonzero integer values of $x$. The normalized sinc function is the Fourier transform of the rectangular function with no scaling. It is used in the conc ...
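
Both forms of the sinc function, including the limiting value at zero, can be sketched in a few lines. The function names are hypothetical.

```python
import math


def sinc_unnormalized(x):
    """sin(x) / x, with the limiting value 1 at x = 0."""
    return 1.0 if x == 0 else math.sin(x) / x


def sinc_normalized(x):
    """sin(pi * x) / (pi * x), with the limiting value 1 at x = 0."""
    return sinc_unnormalized(math.pi * x)


# The normalized form vanishes (up to rounding) at the nonzero integers.
values_at_integers = [sinc_normalized(n) for n in (-2, -1, 1, 2, 3)]
```

Defining the normalized form through the unnormalized one keeps the special case at zero in a single place.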

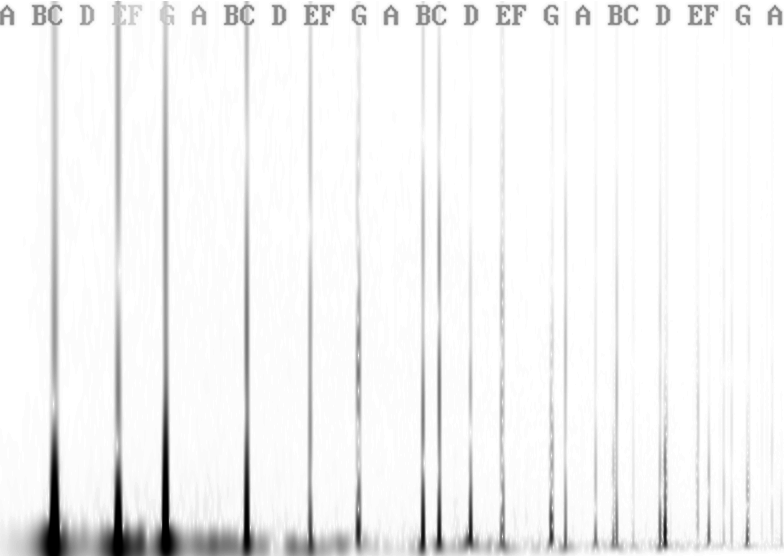

Fourier Transform

A Fourier transform (FT) is a mathematical transform that decomposes functions into frequency components, which are represented by the output of the transform as a function of frequency. Most commonly functions of time or space are transformed, which will output a function depending on temporal frequency or spatial frequency respectively. That process is also called analysis. An example application would be decomposing the waveform of a musical chord into terms of the intensity of its constituent pitches. The term "Fourier transform" refers to both the frequency domain representation and the mathematical operation that associates the frequency domain representation to a function of space or time. The Fourier transform of a function is a complex-valued function representing the complex sinusoids that comprise the original function. For each frequency, the magnitude (absolute value) of the complex value represents the amplitude of a constituent complex sinusoid wi ...
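
The idea that the magnitude at each frequency measures the strength of a constituent sinusoid can be illustrated with a discrete analogue. The sketch below is a naive O(N²) discrete Fourier transform of a sampled signal, which is an assumption made for illustration; the snippet itself describes the continuous transform. The signal, its length, and the function name are hypothetical.

```python
import cmath
import math


def dft(samples):
    """Naive discrete Fourier transform: X[k] = sum_t x[t] * exp(-2*pi*i*k*t/N)."""
    n = len(samples)
    return [
        sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(samples))
        for k in range(n)
    ]


# Assumed test signal: a cosine completing 3 cycles over 16 samples.
N = 16
signal = [math.cos(2.0 * math.pi * 3 * t / N) for t in range(N)]
magnitudes = [abs(c) for c in dft(signal)]
```

For this real cosine the magnitude is concentrated in bin 3 and its mirror image, bin 13, with every other bin close to zero.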

Absolute Value

In mathematics, the absolute value or modulus of a real number $x$, denoted $|x|$, is the non-negative value of $x$ without regard to its sign. Namely, $|x| = x$ if $x$ is a positive number, and $|x| = -x$ if $x$ is negative (in which case negating $x$ makes $-x$ positive), and $|0| = 0$. For example, the absolute value of 3 is 3, and the absolute value of −3 is also 3. The absolute value of a number may be thought of as its distance from zero. Generalisations of the absolute value for real numbers occur in a wide variety of mathematical settings. For example, an absolute value is also defined for the complex numbers, the quaternions, ordered rings, fields and vector spaces. The absolute value is closely related to the notions of magnitude, distance, and norm in various mathematical and physical contexts. Terminology and notation: In 1806, Jean-Robert Argand introduced the term "module", meaning "unit of measure" in French, specifically for the complex absolute value, Oxford English Dictionary, Draft Revision, Ju ...