Hierarchical temporal memory (HTM) is a biologically constrained machine intelligence technology developed by Numenta. Originally described in the 2004 book ''On Intelligence'' by Jeff Hawkins with Sandra Blakeslee, HTM is primarily used today for anomaly detection in streaming data. The technology is based on neuroscience and the physiology and interaction of pyramidal neurons in the neocortex of the mammalian (in particular, human) brain.

At the core of HTM are learning algorithms that can store, learn, infer, and recall high-order sequences. Unlike most other machine learning methods, HTM constantly learns (in an unsupervised process) time-based patterns in unlabeled data. HTM is robust to noise, and has high capacity (it can learn multiple patterns simultaneously). When applied to computers, HTM is well suited for prediction, anomaly detection, classification, and ultimately sensorimotor applications.

HTM has been tested and implemented in software through example applications from Numenta and a few commercial applications from Numenta's partners.

Structure and algorithms

A typical HTM network is a tree-shaped hierarchy of ''levels'' (not to be confused with the "''layers''" of the neocortex, as described below). These levels are composed of smaller elements called ''region''s (or nodes). A single level in the hierarchy may contain several regions. Higher hierarchy levels often have fewer regions. Higher hierarchy levels can reuse patterns learned at the lower levels by combining them to memorize more complex patterns.

Each HTM region has the same basic function. In learning and inference modes, sensory data (e.g. data from the eyes) comes into bottom-level regions. In generation mode, the bottom level regions output the generated pattern of a given category. The top level usually has a single region that stores the most general and most permanent categories (concepts); these determine, or are determined by, smaller concepts at lower levels—concepts that are more restricted in time and space. When set in inference mode, a region (in each level) interprets information coming up from its "child" regions as probabilities of the categories it has in memory.

Each HTM region learns by identifying and memorizing spatial patterns—combinations of input bits that often occur at the same time. It then identifies temporal sequences of spatial patterns that are likely to occur one after another.
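The tree-shaped arrangement of levels and regions described in this section can be pictured with a small data structure. The following is only an illustrative sketch, not Numenta's implementation: the `Region` class, its `children` field, and the `levels` helper are hypothetical names introduced here, and the regions do no learning.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Region:
    """Hypothetical stand-in for an HTM region (node) in the hierarchy."""
    name: str
    children: List["Region"] = field(default_factory=list)


def levels(root: Region) -> List[List[Region]]:
    """Group regions by level, listing the top level (the root) first."""
    result, current = [], [root]
    while current:
        result.append(current)
        current = [child for region in current for child in region.children]
    return result


# Four bottom-level regions feed two mid-level regions, which feed one top region.
bottom = [Region(f"bottom-{i}") for i in range(4)]
middle = [Region("middle-0", bottom[:2]), Region("middle-1", bottom[2:])]
top = Region("top", middle)

regions_per_level = [len(level) for level in levels(top)]
print(regions_per_level)  # [1, 2, 4]
```

The printed counts reflect the pattern noted above: higher levels have fewer regions, and the top level has a single one.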

As an evolving model

HTM is the algorithmic component to Jeff Hawkins' Thousand Brains Theory of Intelligence. New findings on the neocortex are therefore progressively incorporated into the HTM model, which changes over time in response. The new findings do not necessarily invalidate the previous parts of the model, so ideas from one generation are not necessarily excluded from the next. Because of the evolving nature of the theory, there have been several generations of HTM algorithms, which are briefly described below.

First generation: zeta 1

The first generation of HTM algorithms is sometimes referred to as ''zeta 1''.

Training

During ''training'', a node (or region) receives a temporal sequence of spatial patterns as its input. The learning process consists of two stages:

# The spatial pooling identifies (in the input) frequently observed patterns and memorises them as "coincidences". Patterns that are significantly similar to each other are treated as the same coincidence. A large number of possible input patterns are reduced to a manageable number of known coincidences.
# The temporal pooling partitions coincidences that are likely to follow each other in the training sequence into temporal groups. Each group of patterns represents a "cause" of the input pattern (or "name" in ''On Intelligence'').

The concepts of ''spatial pooling'' and ''temporal pooling'' are still quite important in the current HTM algorithms. Temporal pooling is not yet well understood, and its meaning has changed over time (as the HTM algorithms evolved).

Inference

During inference, the node calculates the set of probabilities that a pattern belongs to each known coincidence. Then it calculates the probabilities that the input represents each temporal group. The set of probabilities assigned to the groups is called a node's "belief" about the input pattern. (In a simplified implementation, a node's belief consists of only one winning group.) This belief is the result of the inference that is passed to one or more "parent" nodes in the next higher level of the hierarchy.

Patterns that are "unexpected" to the node do not have a dominant probability of belonging to any one temporal group but have nearly equal probabilities of belonging to several of the groups. If sequences of patterns are similar to the training sequences, then the probabilities assigned to the groups will not change as often as patterns are received. The output of the node will not change as much, and a resolution in time is lost.

In a more general scheme, the node's belief can be sent to the input of any node(s) at any level(s), but the connections between the nodes are still fixed. The higher-level node combines this output with the output from other child nodes, thus forming its own input pattern.

Since resolution in space and time is lost in each node as described above, beliefs formed by higher-level nodes represent an even larger range of space and time. This is meant to reflect the organisation of the physical world as it is perceived by the human brain. Larger concepts (e.g. causes, actions, and objects) are perceived to change more slowly and consist of smaller concepts that change more quickly. Jeff Hawkins postulates that brains evolved this type of hierarchy to match, predict, and affect the organisation of the external world.

More details about the functioning of Zeta 1 HTM can be found in Numenta's old documentation.

Second generation: cortical learning algorithms

The second generation of HTM learning algorithms, often referred to as cortical learning algorithms (CLA), was drastically different from zeta 1. It relies on a data structure called sparse distributed representations (that is, a data structure whose elements are binary, 1 or 0, and whose number of 1 bits is small compared to the number of 0 bits) to represent the brain activity and a more biologically-realistic neuron model (often also referred to as cell, in the context of HTM). There are two core components in this HTM generation: a spatial pooling algorithm, which outputs sparse distributed representations (SDR), and a sequence memory algorithm, which learns to represent and predict complex sequences.
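To make the definition concrete, the sketch below builds one such binary structure and measures how sparse it is. It is an illustration under stated assumptions rather than any library's API: the helper names `random_sdr` and `to_bits` are hypothetical, the SDR is stored as a set of active indices purely for convenience, and the size and number of active bits are example values chosen for this sketch.

```python
import math
import random


def random_sdr(size: int, active: int, rng: random.Random) -> frozenset:
    """Return an SDR as the set of indices of its 1 bits."""
    return frozenset(rng.sample(range(size), active))


def to_bits(sdr: frozenset, size: int) -> list:
    """Expand the index set into an explicit list of 0s and 1s."""
    return [1 if i in sdr else 0 for i in range(size)]


SIZE, ACTIVE = 2048, 40   # example values assumed for this sketch
rng = random.Random(0)    # fixed seed so runs are repeatable

sdr = random_sdr(SIZE, ACTIVE, rng)
bits = to_bits(sdr, SIZE)

sparsity = sum(bits) / SIZE          # fraction of bits that are 1
capacity = math.comb(SIZE, ACTIVE)   # distinct patterns with exactly ACTIVE ones

print(f"active bits: {sum(bits)}, sparsity: {sparsity:.4f}")
print(f"distinct patterns: a {len(str(capacity))}-digit number")
```

Even though only a small fraction of the bits are 1, the number of distinct patterns with that many active bits is astronomically large.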

In this new generation, the layers and minicolumns of the cerebral cortex are addressed and partially modeled. Each HTM layer (not to be confused with an HTM level of an HTM hierarchy, as described above) consists of a number of highly interconnected minicolumns. An HTM layer creates a sparse distributed representation from its input, so that a fixed percentage of ''minicolumns'' are active at any one time. A minicolumn is understood as a group of cells that have the same receptive field. Each minicolumn has a number of cells that are able to remember several previous states. A cell can be in one of three states: ''active'', ''inactive'' and ''predictive''.
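One way to picture how a layer could keep a fixed percentage of minicolumns active is a k-winners-take-all selection over overlap scores. The sketch below is a simplification and not the NuPIC algorithm: it assumes each minicolumn watches a random subset of input bits, lets all minicolumns compete globally rather than within a neighbourhood, and performs no learning. The function names and parameters are hypothetical.

```python
import random


def build_receptive_fields(num_columns, input_size, field_size, rng):
    """Give every minicolumn a fixed, randomly chosen set of input indices."""
    return [frozenset(rng.sample(range(input_size), field_size))
            for _ in range(num_columns)]


def active_columns(input_bits, fields, fraction):
    """Return the indices of the best-matching minicolumns.

    A column's score is the number of active input bits inside its
    receptive field; the top `fraction` of columns win and all others
    are treated as inhibited.
    """
    on = {i for i, bit in enumerate(input_bits) if bit}
    scores = [len(field & on) for field in fields]
    k = max(1, round(fraction * len(fields)))
    # Sort by descending score, breaking ties by column index.
    ranked = sorted(range(len(fields)), key=lambda c: (-scores[c], c))
    return set(ranked[:k])


rng = random.Random(1)
INPUT_SIZE, NUM_COLUMNS = 200, 100
fields = build_receptive_fields(NUM_COLUMNS, INPUT_SIZE, field_size=40, rng=rng)

pattern = [1 if rng.random() < 0.2 else 0 for _ in range(INPUT_SIZE)]
winners = active_columns(pattern, fields, fraction=0.05)
print(len(winners))  # 5 columns out of 100 stay active
```

Because the number of winners depends only on `fraction` and the number of columns, the output sparsity stays constant regardless of how many input bits are on.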

Spatial pooling

The receptive field of each minicolumn is a fixed number of inputs that are randomly selected from a much larger number of node inputs. Based on the (specific) input pattern, some minicolumns will be more or less associated with the active input values. Spatial pooling selects a relatively constant number of the most active minicolumns and inactivates (inhibits) other minicolumns in the vicinity of the active ones. Similar input patterns tend to activate a stable set of minicolumns. The amount of memory used by each layer can be increased to learn more complex spatial patterns or decreased to learn simpler patterns.

Active, inactive and predictive cells

As mentioned above, a cell (or a neuron) of a minicolumn, at any point in time, can be in an active, inactive or predictive state. Initially, cells are inactive.

How do cells become active? If one or more cells in the active minicolumn are in the ''predictive'' state (see below), they will be the only cells to become active in the current time step. If none of the cells in the active minicolumn are in the predictive state (which happens during the initial time step or when the activation of this minicolumn was not expected), all cells are made active.

How do cells become predictive? When a cell becomes active, it gradually forms connections to nearby cells that tend to be active during several previous time steps. Thus a cell learns to recognize a known sequence by checking whether the connected cells are active. If a large number of connected cells are active, this cell switches to the ''predictive'' state in anticipation of one of the few next inputs of the sequence.
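The two rules above can be condensed into a short sketch. It is a deliberate simplification, not the CLA as implemented by Numenta: a cell is identified by a hypothetical `(column, index)` pair, the connections between cells are written by hand instead of being learned gradually, and the activation threshold is an assumed parameter.

```python
def activate_cells(active_columns, predictive_cells, cells_per_column):
    """Choose the active cells for the current time step.

    In an active minicolumn, predicted cells become active alone; if no
    cell was predicted, every cell in the minicolumn becomes active.
    """
    active = set()
    for column in active_columns:
        predicted = {(column, i) for i in range(cells_per_column)
                     if (column, i) in predictive_cells}
        if predicted:
            active |= predicted
        else:
            active |= {(column, i) for i in range(cells_per_column)}
    return active


def predict_cells(active_cells, connections, threshold):
    """Mark cells predictive when enough of their connected cells are active.

    `connections` maps a cell to the set of cells it is connected to;
    here it is supplied by hand rather than learned.
    """
    return {cell for cell, sources in connections.items()
            if len(sources & active_cells) >= threshold}


CELLS_PER_COLUMN = 4
# Hand-written connections: cell (1, 2) listens to two cells of column 0.
connections = {(1, 2): {(0, 0), (0, 1)}}

# Step 1: column 0 is active with no prior prediction, so all its cells fire.
step1 = activate_cells({0}, set(), CELLS_PER_COLUMN)
predictive = predict_cells(step1, connections, threshold=2)

# Step 2: column 1 becomes active and was predicted, so only cell (1, 2) fires.
step2 = activate_cells({1}, predictive, CELLS_PER_COLUMN)
print(sorted(step1))  # [(0, 0), (0, 1), (0, 2), (0, 3)]
print(sorted(step2))  # [(1, 2)]
```

The contrast between the two steps shows why a predicted input yields a much sparser set of active cells than an unexpected one.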

The output of a minicolumn

The output of a layer includes minicolumns in both active and predictive states. Thus minicolumns are active over long periods of time, which leads to greater temporal stability seen by the parent layer.

Inference and online learning

Cortical learning algorithms are able to learn continuously from each new input pattern, so no separate inference mode is necessary. During inference, HTM tries to match the stream of inputs to fragments of previously learned sequences. This allows each HTM layer to be constantly predicting the likely continuation of the recognized sequences. The index of the predicted sequence is the output of the layer. Since predictions tend to change less frequently than the input patterns, this leads to increasing temporal stability of the output in higher hierarchy levels. Prediction also helps to fill in missing patterns in the sequence and to interpret ambiguous data by biasing the system to infer what it predicted.

Applications of the CLAs

Cortical learning algorithms are currently being offered as commercial SaaS by Numenta (such as Grok).

The validity of the CLAs

The following question was posed to Jeff Hawkins in September 2011 with regard to cortical learning algorithms: "How do you know if the changes you are making to the model are good or not?" To which Jeff's response was "There are two categories for the answer: one is to look at neuroscience, and the other is methods for machine intelligence. In the neuroscience realm, there are many predictions that we can make, and those can be tested. If our theories explain a vast array of neuroscience observations then it tells us that we’re on the right track. In the machine learning world, they don’t care about that, only how well it works on practical problems. In our case that remains to be seen. To the extent you can solve a problem that no one was able to solve before, people will take notice."

Third generation: sensorimotor inference

The third generation builds on the second generation and adds in a theory of sensorimotor inference in the neocortex. This theory proposes that cortical columns at every level of the hierarchy can learn complete models of objects over time and that features are learned at specific locations on the objects. The theory was expanded in 2018 and referred to as the Thousand Brains Theory.

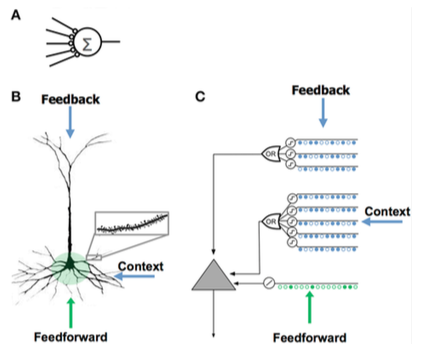

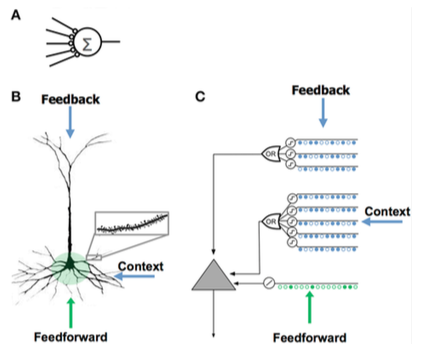

Comparison of neuron models


Comparing HTM and neocortex

HTM attempts to implement the functionality that is characteristic of a hierarchically related group of cortical regions in the neocortex. A ''region'' of the neocortex corresponds to one or more ''levels'' in the HTM hierarchy, while the hippocampus is remotely similar to the highest HTM level. A single HTM node may represent a group of cortical columns within a certain region.

Although it is primarily a functional model, several attempts have been made to relate the algorithms of the HTM with the structure of neuronal connections in the layers of neocortex. The neocortex is organized in vertical columns of 6 horizontal layers. The 6 layers of cells in the neocortex should not be confused with levels in an HTM hierarchy.

HTM nodes attempt to model a portion of cortical columns (80 to 100 neurons) with approximately 20 HTM "cells" per column. HTMs model only layers 2 and 3 to detect spatial and temporal features of the input, with 1 cell per column in layer 2 for spatial "pooling", and 1 to 2 dozen per column in layer 3 for temporal pooling. A key property of both HTMs and the cortex is their ability to deal with noise and variation in the input, which results from using a "sparse distributed representation" in which only about 2% of the columns are active at any given time.

An HTM attempts to model a portion of the cortex's learning and plasticity as described above. Differences between HTMs and neurons include:

* strictly binary signals and synapses

* no direct inhibition of synapses or dendrites (but simulated indirectly)

* currently only models layers 2/3 and 4 (no 5 or 6)

* no "motor" control (layer 5)

* no feed-back between regions (layer 6 of high to layer 1 of low)

Sparse distributed representations

Integrating a memory component with neural networks has a long history dating back to early research in distributed representations and self-organizing maps. For example, in sparse distributed memory (SDM), the patterns encoded by neural networks are used as memory addresses for content-addressable memory, with "neurons" essentially serving as address encoders and decoders.

Computers store information in ''dense'' representations such as a 32-bit word, where all combinations of 1s and 0s are possible. By contrast, brains use ''sparse'' distributed representations (SDRs). The human neocortex has roughly 16 billion neurons, but at any given time only a small percentage are active. The activities of neurons are like bits in a computer, and so the representation is sparse. Like SDM, developed at NASA in the 1980s, and the vector space models used in latent semantic analysis, HTM uses sparse distributed representations.

The SDRs used in HTM are binary representations of data consisting of many bits with a small percentage of the bits active (1s); a typical implementation might have 2048 columns and 64K artificial neurons where as few as 40 might be active at once. Although it may seem less efficient for the majority of bits to go "unused" in any given representation, SDRs have two major advantages over traditional dense representations. First, SDRs are tolerant of corruption and ambiguity because the meaning of the representation is shared (''distributed'') across a small percentage (''sparse'') of active bits. In a dense representation, flipping a single bit completely changes the meaning, while in an SDR a single bit may not affect the overall meaning much. This leads to the second advantage of SDRs: because the meaning of a representation is distributed across all active bits, the similarity between two representations can be used as a measure of semantic similarity in the objects they represent. That is, if two vectors in an SDR have 1s in the same position, then they are semantically similar in that attribute. The bits in SDRs have semantic meaning, and that meaning is distributed across the bits.
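Both advantages can be illustrated by counting shared active bits. The sketch below is a hedged illustration, not an HTM library call: `overlap` and `add_noise` are hypothetical helpers, the SDRs are random rather than produced by an encoder (so their bits carry no real semantic meaning), and the size, number of active bits, and amount of corruption are assumed values.

```python
import random


def overlap(a: frozenset, b: frozenset) -> int:
    """Count the positions where both SDRs have a 1 bit."""
    return len(a & b)


def add_noise(sdr: frozenset, size: int, flips: int, rng: random.Random) -> frozenset:
    """Move `flips` active bits to positions that were previously 0."""
    dropped = set(rng.sample(sorted(sdr), flips))
    unused = [i for i in range(size) if i not in sdr]
    added = set(rng.sample(unused, flips))
    return frozenset((sdr - dropped) | added)


SIZE, ACTIVE = 2048, 40
rng = random.Random(42)

original = frozenset(rng.sample(range(SIZE), ACTIVE))
unrelated = frozenset(rng.sample(range(SIZE), ACTIVE))
noisy = add_noise(original, SIZE, flips=8, rng=rng)

print(overlap(original, noisy))      # 32: most active bits survive the corruption
print(overlap(original, unrelated))  # expected to be very small for random SDRs
```

A corrupted copy still shares most of its active bits with the original, whereas two unrelated random SDRs of this size rarely share more than a few, so a simple overlap threshold is enough to tell them apart.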

The semantic folding theory builds on these SDR properties to propose a new model for language semantics, where words are encoded into word-SDRs and the similarity between terms, sentences, and texts can be calculated with simple distance measures.

Similarity to other models

Bayesian networks

Likened to a Bayesian network, an HTM comprises a collection of nodes that are arranged in a tree-shaped hierarchy. Each node in the hierarchy discovers an array of causes in the input patterns and temporal sequences it receives. A Bayesian belief revision algorithm is used to propagate feed-forward and feedback beliefs from child to parent nodes and vice versa. However, the analogy to Bayesian networks is limited, because HTMs can be self-trained (such that each node has an unambiguous family relationship), cope with time-sensitive data, and grant mechanisms for covert attention.

A theory of hierarchical cortical computation based on Bayesian belief propagation was proposed earlier by Tai Sing Lee and David Mumford. While HTM is mostly consistent with these ideas, it adds details about handling invariant representations in the visual cortex.

Neural networks

Like any system that models details of the neocortex, HTM can be viewed as an artificial neural network. The tree-shaped hierarchy commonly used in HTMs resembles the usual topology of traditional neural networks. HTMs attempt to model cortical columns (80 to 100 neurons) and their interactions with fewer HTM "neurons". The goal of current HTMs is to capture as much of the functions of neurons and the network (as they are currently understood) within the capability of typical computers and in areas that can be made readily useful such as image processing. For example, feedback from higher levels and motor control are not attempted because it is not yet understood how to incorporate them, and binary instead of variable synapses are used because they were determined to be sufficient in the current HTM capabilities.

LAMINART and similar neural networks researched by Stephen Grossberg attempt to model both the infrastructure of the cortex and the behavior of neurons in a temporal framework to explain neurophysiological and psychophysical data. However, these networks are, at present, too complex for realistic application.

HTM is also related to work by Tomaso Poggio, including an approach for modeling the ventral stream of the visual cortex known as HMAX. Similarities of HTM to various AI ideas are described in the December 2005 issue of the Artificial Intelligence journal.

Neocognitron

The neocognitron, a hierarchical multilayered neural network proposed by Professor Kunihiko Fukushima in 1979, is one of the first deep learning neural network models.

NuPIC platform and development tools

The Numenta Platform for Intelligent Computing (NuPIC) is one of several available HTM implementations. Some are provided by Numenta, while some are developed and maintained by the HTM open source community.

NuPIC includes implementations of Spatial Pooling and Temporal Memory in both C++ and Python. It also includes 3 APIs. Users can construct HTM systems using direct implementations of the algorithms, or construct a Network using the Network API, which is a flexible framework for constructing complicated associations between different Layers of cortex.

NuPIC 1.0 was released in July 2017, after which the codebase was put into maintenance mode. Current research continues in Numenta research codebases.

Applications

The following commercial applications are available using NuPIC:
* Grok – anomaly detection for IT servers, see www.grokstream.com
* Cortical.io – advanced natural language processing, see www.cortical.io

The following tools are available on NuPIC:
* HTM Studio – find anomalies in time series using your own data, see numenta.com/htm-studio/
* Numenta Anomaly Benchmark – compare HTM anomalies with other anomaly detection techniques, see numenta.com/numenta-anomaly-benchmark/

The following example applications are available on NuPIC, see numenta.com/applications/:
* HTM for stocks – example of tracking anomalies in the stock market (sample code)
* Rogue behavior detection – example of finding anomalies in human behavior (white paper and sample code)
* Geospatial tracking – example of finding anomalies in objects moving through space and time (white paper and sample code)

See also

* Neocognitron
* Deep learning
* Convolutional neural network
* Strong AI
* Artificial consciousness
* Cognitive architecture
* ''On Intelligence''
* Memory-prediction framework
* Belief revision
* Belief propagation
* Bionics
* List of artificial intelligence projects
* Memory Network
* Neural Turing Machine
* Multiple trace theory

Related models

* Hierarchical hidden Markov model
* Bayesian networks
* Neural networks

External links

Official

* Cortical Learning Algorithm overview (Accessed May 2013)
* HTM Cortical Learning Algorithms (PDF Sept. 2011)
* Numenta, Inc.
* OnIntelligence.org Forum, an Internet forum for the discussion of relevant topics, especially relevant being the Models and Simulation Topics forum.
* Hierarchical Temporal Memory (Microsoft PowerPoint presentation)
* Cortical Learning Algorithm Tutorial: CLA Basics, talk about the cortical learning algorithm (CLA) used by the HTM model on YouTube

Other

* Pattern Recognition by Hierarchical Temporal Memory by Davide Maltoni, April 13, 2011
* Vicarious, startup rooted in HTM by Dileep George
* The Gartner Fellows: Jeff Hawkins Interview by Tom Austin, ''Gartner'', March 2, 2006
* Emerging Tech: Jeff Hawkins reinvents artificial intelligence by Debra D'Agostino and Edward H. Baker, ''CIO Insight'', May 1, 2006
* by Stefanie Olsen, ''CNET News.com'', May 12, 2006
* "The Thinking Machine" by Evan Ratliff, Wired, March 2007
* Think like a human by Jeff Hawkins, IEEE Spectrum, April 2007
* Neocortex – Memory-Prediction Framework: Open Source Implementation with GNU General Public License
* Hierarchical Temporal Memory related Papers and Books
