Multivariate analysis of variance


In statistics

Statistics (from German language, German: ', "description of a State (polity), state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a s ...

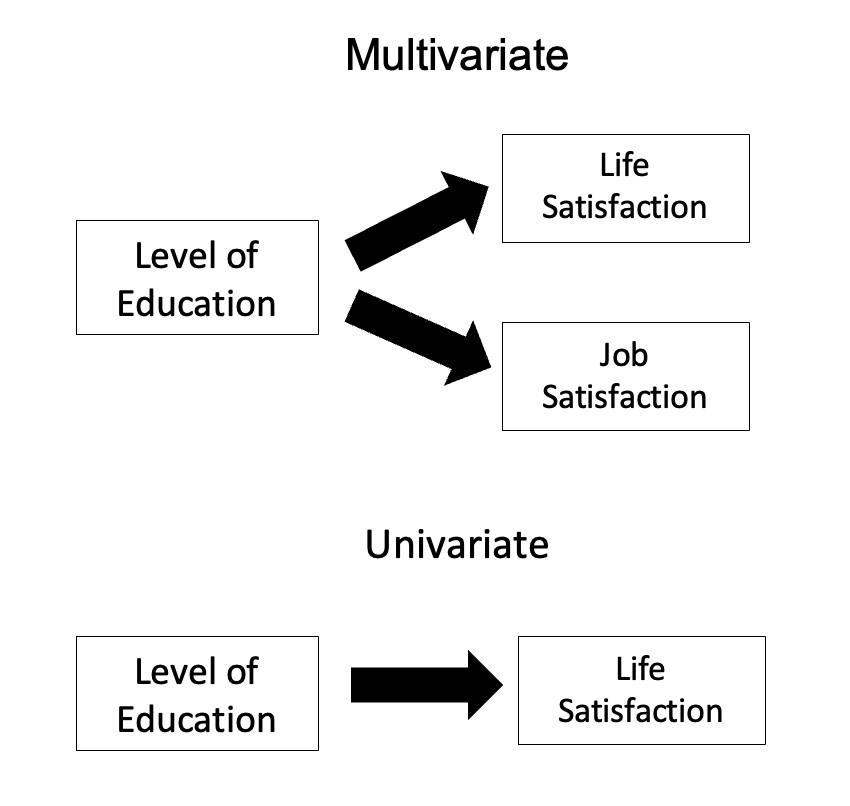

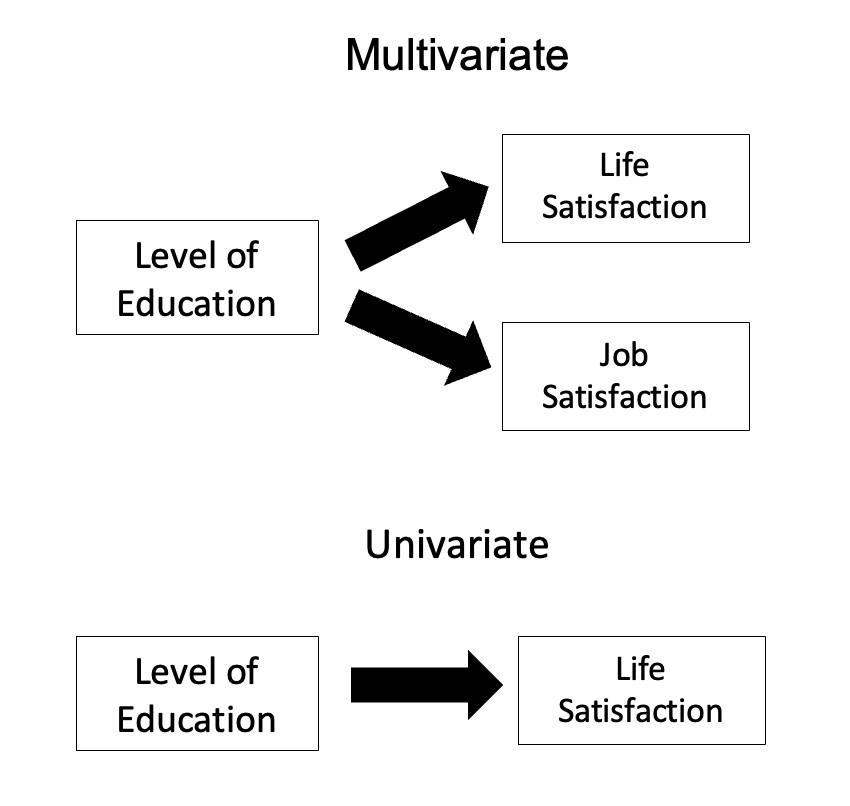

, multivariate analysis of variance (MANOVA) is a procedure for comparing multivariate sample means. As a multivariate procedure, it is used when there are two or more dependent variables, and is often followed by significance tests involving individual dependent variables separately.

Without relation to the image, the dependent variables may be k life satisfaction scores measured at sequential time points and p job satisfaction scores measured at sequential time points. In this case there are k+p dependent variables. The analysis assumes that their linear combinations follow a multivariate normal distribution, that the variance-covariance matrices are homogeneous across groups, that the relationships among the variables are linear with no multicollinearity, and that none of the variables has outliers.

Model

Assume $n$ $q$-dimensional observations, where the $i$-th observation $y_i$ is assigned to the group $g(i) \in \{1, \dots, m\}$ and is distributed around the group center $\mu^{(g(i))} \in \mathbb{R}^q$ with multivariate Gaussian noise:

$$y_i = \mu^{(g(i))} + \varepsilon_i, \quad \varepsilon_i \overset{\text{i.i.d.}}{\sim} \mathcal{N}_q(0, \Sigma) \quad \text{for } i = 1, \dots, n,$$

where $\Sigma$ is the covariance matrix. Then we formulate our null hypothesis as

$$H_0\colon \mu^{(1)} = \mu^{(2)} = \dots = \mu^{(m)}.$$
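As a minimal sketch of the model above, the following Python snippet draws observations around group centers with shared Gaussian noise. The function name `simulate_groups`, the group centers, the covariance matrix, and the group sizes are all illustrative assumptions, and group labels are 0-based here rather than 1-based.

```python
import numpy as np

def simulate_groups(centers, cov, sizes, seed=0):
    """Draw y_i = center[g(i)] + eps_i with eps_i ~ N(0, cov), i.i.d."""
    rng = np.random.default_rng(seed)
    centers = np.asarray(centers, dtype=float)
    # g[i] is the (0-based) group label of observation i.
    g = np.repeat(np.arange(len(sizes)), sizes)
    noise = rng.multivariate_normal(np.zeros(centers.shape[1]), cov, size=g.size)
    return centers[g] + noise, g

# Hypothetical setup: m = 3 groups, q = 2 dependent variables.
centers = [[0.0, 0.0], [0.5, 0.0], [0.5, 1.0]]
cov = [[1.0, 0.3], [0.3, 1.0]]
Y, g = simulate_groups(centers, cov, sizes=[20, 25, 30])
print(Y.shape, np.bincount(g))
```

Under the null hypothesis all rows of `centers` would be identical, so data for that case can be simulated by passing the same center for every group.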

Relationship with ANOVA

MANOVA is a generalized form of univariate analysis of variance (ANOVA), although, unlike univariate ANOVA, it uses the covariance between outcome variables in testing the statistical significance of the mean differences.

Where sums of squares appear in univariate analysis of variance, in multivariate analysis of variance certain positive-definite matrices appear. The diagonal entries are the same kinds of sums of squares that appear in univariate ANOVA. The off-diagonal entries are corresponding sums of products. Under normality assumptions about error distributions, the counterpart of the sum of squares due to error has a Wishart distribution.

Hypothesis Testing

First, define the following $n \times q$ matrices (one row per observation, one column per dependent variable):

* $Y$: where the $i$-th row is equal to $y_i$.
* $\hat{Y}$: where the $i$-th row is the best prediction given the group membership $g(i)$. That is the mean over all observations in group $g(i)$: $\frac{1}{\text{size of group } g(i)} \sum_{k : g(k) = g(i)} y_k$.
* $\bar{Y}$: where the $i$-th row is the best prediction given no information. That is the empirical mean over all $n$ observations: $\frac{1}{n} \sum_{k=1}^{n} y_k$.

Then the matrix $S_{\text{model}} := (\hat{Y} - \bar{Y})^T (\hat{Y} - \bar{Y})$ is a generalization of the sum of squares explained by the group, and $S_{\text{res}} := (Y - \hat{Y})^T (Y - \hat{Y})$ is a generalization of the residual sum of squares.

Note that alternatively one could also speak about covariances when the abovementioned matrices are scaled by 1/(n-1), since the subsequent test statistics do not change by multiplying $S_{\text{model}}$ and $S_{\text{res}}$ by the same non-zero constant.

The most common statistics are summaries based on the roots (or eigenvalues) $\lambda_p$ of the matrix $A := S_{\text{model}} S_{\text{res}}^{-1}$:

* Samuel Stanley Wilks' $\Lambda_{\text{Wilks}} = \prod_{p} \frac{1}{1 + \lambda_p} = \det(I + A)^{-1} = \frac{\det(S_{\text{res}})}{\det(S_{\text{res}} + S_{\text{model}})}$, distributed as lambda (Λ),
* the K. C. Sreedharan Pillai–M. S. Bartlett trace, $\Lambda_{\text{Pillai}} = \sum_{p} \frac{\lambda_p}{1 + \lambda_p} = \operatorname{tr}\left(A (I + A)^{-1}\right)$,
* the Lawley–Hotelling trace, $\Lambda_{\text{LH}} = \sum_{p} \lambda_p = \operatorname{tr}(A)$,
* Roy's greatest root (also called ''Roy's largest root''), $\Lambda_{\text{Roy}} = \max_{p} \lambda_p$.
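The construction above can be sketched in a few lines of NumPy. This is an illustrative sketch rather than a reference implementation: the function name `manova_statistics` and the toy data are assumptions, and no p-values are computed.

```python
import numpy as np

def manova_statistics(Y, g):
    """Return the four classical MANOVA statistics plus S_model and S_res."""
    Y = np.asarray(Y, dtype=float)
    g = np.asarray(g)
    # Y_bar: every row is the grand mean (prediction with no information).
    Y_bar = np.tile(Y.mean(axis=0), (Y.shape[0], 1))
    # Y_hat: every row is the mean of that observation's group.
    Y_hat = np.empty_like(Y)
    for label in np.unique(g):
        Y_hat[g == label] = Y[g == label].mean(axis=0)
    S_model = (Y_hat - Y_bar).T @ (Y_hat - Y_bar)
    S_res = (Y - Y_hat).T @ (Y - Y_hat)
    # S_res^{-1} S_model has the same eigenvalues as S_model S_res^{-1}.
    lam = np.linalg.eigvals(np.linalg.solve(S_res, S_model)).real
    lam = np.clip(lam, 0.0, None)  # remove tiny negative rounding errors
    stats = {
        "wilks": float(np.prod(1.0 / (1.0 + lam))),
        "pillai": float(np.sum(lam / (1.0 + lam))),
        "lawley_hotelling": float(np.sum(lam)),
        "roy": float(np.max(lam)),
    }
    return stats, S_model, S_res

# Toy data: three groups of 10 observations on two dependent variables.
rng = np.random.default_rng(0)
g = np.repeat([0, 1, 2], 10)
shifts = np.array([[0.0, 0.0], [1.0, 0.0], [1.0, 1.0]])
Y = rng.normal(size=(30, 2)) + shifts[g]
stats, S_model, S_res = manova_statistics(Y, g)
print(stats)
```

Working with the eigenvalues directly keeps the four statistics visibly parallel; in practice the determinant and trace forms give the same values without an eigendecomposition.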

Discussion continues over the merits of each, although the greatest root leads only to a bound on significance which is not generally of practical interest. A further complication is that, except for Roy's greatest root, the distribution of these statistics under the null hypothesis is not straightforward and can only be approximated except in a few low-dimensional cases.

An algorithm for the distribution of Roy's largest root under the null hypothesis has been derived, and its distribution under the alternative has also been studied.

The best-known approximation for Wilks' lambda was derived by C. R. Rao.
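The article does not give Rao's approximation itself. The sketch below follows the form in which it is commonly stated in statistical software documentation, so treat the exact expressions as an assumption to check against a reference. The function name and argument names are hypothetical: `p` is the number of dependent variables, `q` the hypothesis degrees of freedom (number of groups minus one), and `v` the error degrees of freedom (observations minus number of groups).

```python
import math

def rao_f_approximation(wilks_lambda, p, q, v):
    """Convert Wilks' lambda into an approximate F statistic.

    Returns (F, df1, df2). Formula as commonly stated for Rao's
    approximation; it is exact when min(p, q) <= 2.
    """
    denom = p**2 + q**2 - 5
    # t falls back to 1 when the usual expression is undefined.
    t = math.sqrt((p**2 * q**2 - 4) / denom) if denom > 0 else 1.0
    r = v - (p - q + 1) / 2
    df1 = p * q
    df2 = r * t - (p * q - 2) / 2
    root = wilks_lambda ** (1.0 / t)
    F = (1.0 - root) / root * df2 / df1
    return F, df1, df2

# Hypothetical example: 2 dependent variables, 3 groups, 23 observations.
F, df1, df2 = rao_f_approximation(0.5, p=2, q=2, v=20)
print(F, df1, df2)
```

A p-value would then come from the upper tail of an F distribution with `df1` and `df2` degrees of freedom, for example via a statistics library.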

In the case of two groups, all the statistics are equivalent and the test reduces to Hotelling's T-square.
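The two-group equivalence can be checked numerically. With two groups the model matrix has rank one, so there is a single non-zero eigenvalue, equal to T-square divided by n - 2, and every statistic is a monotone function of it. The sketch below uses made-up random data and assumes the usual pooled-covariance form of Hotelling's T-square.

```python
import numpy as np

rng = np.random.default_rng(1)
X1 = rng.normal(size=(12, 3))          # group 1: 12 observations, 3 variables
X2 = rng.normal(size=(15, 3)) + 0.5    # group 2: shifted means
n1, n2 = len(X1), len(X2)
n = n1 + n2

d = X1.mean(axis=0) - X2.mean(axis=0)  # difference of group means
R1 = X1 - X1.mean(axis=0)
R2 = X2 - X2.mean(axis=0)
S_res = R1.T @ R1 + R2.T @ R2          # within-group sums of squares and products
S_pooled = S_res / (n - 2)             # pooled covariance estimate

# Hotelling's two-sample T-square statistic.
T2 = n1 * n2 / n * d @ np.linalg.solve(S_pooled, d)

# For two groups, S_model is the rank-one matrix (n1*n2/n) d d^T.
S_model = n1 * n2 / n * np.outer(d, d)
lam = np.trace(np.linalg.solve(S_res, S_model))  # the single non-zero eigenvalue

wilks = np.linalg.det(S_res) / np.linalg.det(S_res + S_model)
print(wilks, 1.0 / (1.0 + T2 / (n - 2)))  # these two numbers agree
print(lam, T2 / (n - 2))                  # and so do these
```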

Introducing covariates (MANCOVA)

One can also test if there is a group effect after adjusting for covariates. For this, follow the procedure above but substitute $\hat{Y}$ (the group-based predictions) with the predictions of the general linear model containing the group and the covariates, and substitute $\bar{Y}$ (the no-information predictions) with the predictions of the general linear model containing only the covariates (and an intercept). Then $S_{\text{model}}$ is the additional sum of squares explained by adding the grouping information and $S_{\text{res}}$ is the residual sum of squares of the model containing the grouping and the covariates.

Note that in case of unbalanced data, the order of adding the covariates matters.
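The substitution described in this section can be sketched with ordinary least squares. The helper names `fitted` and `mancova_matrices`, the dummy coding of the groups, and the toy data are assumptions made for illustration.

```python
import numpy as np

def fitted(design, Y):
    """Least-squares predictions of Y from the columns of the design matrix."""
    coef, *_ = np.linalg.lstsq(design, Y, rcond=None)
    return design @ coef

def mancova_matrices(Y, g, covariates):
    """S_model and S_res for a group effect adjusted for covariates."""
    Y = np.asarray(Y, dtype=float)
    g = np.asarray(g)
    n = Y.shape[0]
    C = np.asarray(covariates, dtype=float).reshape(n, -1)
    labels = np.unique(g)
    # Indicator columns for every group except the first (absorbed by the intercept).
    D = (g[:, None] == labels[None, 1:]).astype(float)
    intercept = np.ones((n, 1))
    Y_full = fitted(np.hstack([intercept, C, D]), Y)   # group and covariates
    Y_reduced = fitted(np.hstack([intercept, C]), Y)   # covariates only
    S_model = (Y_full - Y_reduced).T @ (Y_full - Y_reduced)
    S_res = (Y - Y_full).T @ (Y - Y_full)
    return S_model, S_res

# Toy data: 3 groups, 2 dependent variables, 1 covariate.
rng = np.random.default_rng(2)
g = np.repeat([0, 1, 2], 12)
x = rng.normal(size=36)
Y = rng.normal(size=(36, 2)) + 0.8 * x[:, None] + 0.5 * g[:, None]
S_model, S_res = mancova_matrices(Y, g, x)
print(S_model, S_res, sep="\n")
```

The resulting matrices can be passed through the same eigenvalue summaries as in the case without covariates.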

Correlation of dependent variables

MANOVA's power is affected by the correlations of the dependent variables and by the effect sizes associated with those variables. For example, when there are two groups and two dependent variables, MANOVA's power is lowest when the correlation equals the ratio of the smaller to the larger standardized effect size.
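A short numerical check of the two-group, two-variable example: for fixed sample sizes, the power of the test grows with the squared Mahalanobis distance between the group means, so the correlation that minimizes this distance also minimizes power. The effect sizes 0.8 and 0.4 and the function name `mahalanobis_sq` are arbitrary illustrative choices.

```python
import numpy as np

def mahalanobis_sq(d_large, d_small, rho):
    """Squared Mahalanobis distance for two standardized effects with correlation rho."""
    delta = np.array([d_large, d_small])
    R = np.array([[1.0, rho], [rho, 1.0]])
    return float(delta @ np.linalg.solve(R, delta))

d_large, d_small = 0.8, 0.4             # hypothetical standardized effect sizes
rhos = np.linspace(-0.9, 0.9, 181)      # grid of correlations in steps of 0.01
dist = np.array([mahalanobis_sq(d_large, d_small, r) for r in rhos])
rho_min = rhos[np.argmin(dist)]

print(rho_min, d_small / d_large)       # the minimizer matches the ratio of effect sizes
print(dist.min(), d_large**2)           # at the minimum only the larger effect contributes
```

At the minimizing correlation the second variable adds no separation beyond the first, which is why the distance equals the square of the larger effect alone.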


See also

* Permutational analysis of variance for a non-parametric alternative
* Discriminant function analysis
* Canonical correlation analysis
* Multivariate analysis of variance (Wikiversity)
* Repeated measures design
