In computer engineering, microarchitecture, also called computer organization and sometimes abbreviated as µarch or uarch, is the way a given instruction set architecture (ISA) is implemented in a particular processor. A given ISA may be implemented with different microarchitectures; implementations may vary due to different goals of a given design or due to shifts in technology.

Computer architecture is the combination of microarchitecture and instruction set architecture.

Relation to instruction set architecture

The ISA is roughly the same as the programming model of a processor as seen by an assembly language programmer or compiler writer. The ISA includes the instructions, execution model, processor registers, address and data formats among other things. The microarchitecture includes the constituent parts of the processor and how these interconnect and interoperate to implement the ISA.

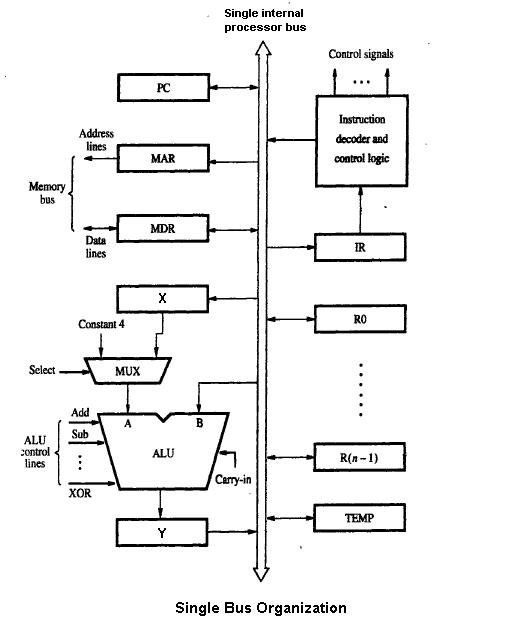

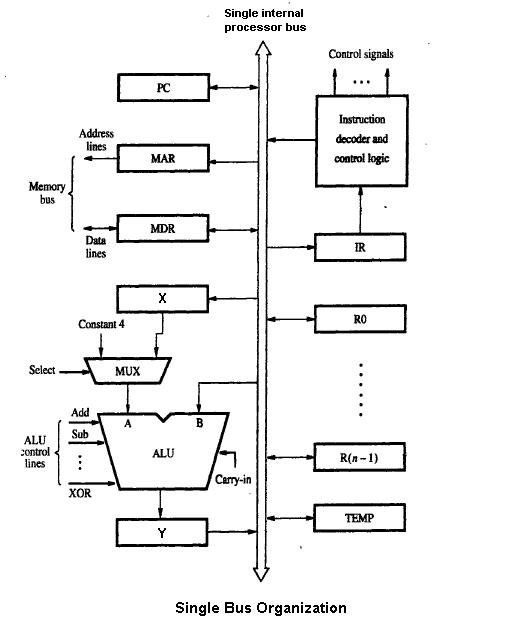

The microarchitecture of a machine is usually represented as (more or less detailed) diagrams that describe the interconnections of the various microarchitectural elements of the machine, which may be anything from single gates and registers, to complete arithmetic logic units (ALUs) and even larger elements. These diagrams generally separate the datapath (where data is placed) and the control path (which can be said to steer the data).

The person designing a system usually draws the specific microarchitecture as a kind of data flow diagram. Like a block diagram, the microarchitecture diagram shows microarchitectural elements such as the arithmetic and logic unit and the register file as a single schematic symbol. Typically, the diagram connects those elements with arrows, thick lines and thin lines to distinguish between three-state buses (which require a three-state buffer for each device that drives the bus), unidirectional buses (always driven by a single source, such as the way the address bus on simpler computers is always driven by the memory address register), and individual control lines. Very simple computers have a single data bus organization: they have a single three-state bus. The diagram of more complex computers usually shows multiple three-state buses, which help the machine do more operations simultaneously.

Each microarchitectural element is in turn represented by a schematic describing the interconnections of logic gates used to implement it. Each logic gate is in turn represented by a circuit diagram describing the connections of the transistors used to implement it in some particular logic family. Machines with different microarchitectures may have the same instruction set architecture, and thus be capable of executing the same programs. New microarchitectures and/or circuitry solutions, along with advances in semiconductor manufacturing, are what allow newer generations of processors to achieve higher performance while using the same ISA.

In principle, a single microarchitecture could execute several different ISAs with only minor changes to the microcode.

Aspects

The pipelined datapath is the most commonly used datapath design in microarchitecture today. This technique is used in most modern microprocessors, microcontrollers, and DSPs. The pipelined architecture allows multiple instructions to overlap in execution, much like an assembly line. The pipeline includes several different stages which are fundamental in microarchitecture designs. Some of these stages include instruction fetch, instruction decode, execute, and write back. Some architectures include other stages such as memory access. The design of pipelines is one of the central microarchitectural tasks.

Execution units are also essential to microarchitecture. Execution units include arithmetic logic units (ALU), floating point units (FPU), load/store units, branch prediction, and SIMD. These units perform the operations or calculations of the processor. The choice of the number of execution units, their latency and throughput is a central microarchitectural design task. The size, latency, throughput and connectivity of memories within the system are also microarchitectural decisions.

System-level design decisions such as whether or not to include peripherals, such as memory controllers, can be considered part of the microarchitectural design process. This includes decisions on the performance-level and connectivity of these peripherals.

Unlike architectural design, where achieving a specific performance level is the main goal, microarchitectural design pays closer attention to other constraints. Since microarchitecture design decisions directly affect what goes into a system, attention must be paid to issues such as chip area/cost, power consumption, logic complexity, ease of connectivity, manufacturability, ease of debugging, and testability.

Microarchitectural concepts

Instruction cycles

To run programs, all single- or multi-chip CPUs:

1. Read an instruction and decode it
2. Find any associated data that is needed to process the instruction
3. Process the instruction
4. Write the results out

The instruction cycle is repeated continuously until the power is turned off.

Multicycle microarchitecture

Historically, the earliest computers were multicycle designs. The smallest, least-expensive computers often still use this technique. Multicycle architectures often use the least total number of logic elements and reasonable amounts of power. They can be designed to have deterministic timing and high reliability. In particular, they have no pipeline to stall when taking conditional branches or interrupts. However, other microarchitectures often perform more instructions per unit time, using the same logic family. When discussing "improved performance," an improvement is often relative to a multicycle design.

In a multicycle computer, the computer does the four steps in sequence, over several cycles of the clock. Some designs can perform the sequence in two clock cycles by completing successive stages on alternate clock edges, possibly with longer operations occurring outside the main cycle. For example, stage one on the rising edge of the first cycle, stage two on the falling edge of the first cycle, etc.

In the control logic, the combination of cycle counter, cycle state (high or low) and the bits of the instruction decode register determine exactly what each part of the computer should be doing. To design the control logic, one can create a table of bits describing the control signals to each part of the computer in each cycle of each instruction. Then, this logic table can be tested in a software simulation running test code. If the logic table is placed in a memory and used to actually run a real computer, it is called a microprogram. In some computer designs, the logic table is optimized into the form of combinational logic made from logic gates, usually using a computer program that optimizes logic. Early computers used ad-hoc logic design for control until Maurice Wilkes invented this tabular approach and called it microprogramming.
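The tabular approach to control logic can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not any real machine's microcode: the two-instruction set (`LOAD`, `ADD`), the control-signal names, the single accumulator, and the `run` helper are all hypothetical.

```python
# Hypothetical control table: for each opcode, one row of control
# signals per clock cycle. Signal names are illustrative assumptions.
CONTROL_TABLE = {
    "LOAD": [  # acc <- memory[addr]
        {"mem_read": True},                 # cycle 0: fetch operand from memory
        {"reg_write": True, "src": "mem"},  # cycle 1: write it to the register
    ],
    "ADD": [   # acc <- acc + memory[addr]
        {"mem_read": True},                 # cycle 0: fetch operand from memory
        {"alu_add": True},                  # cycle 1: ALU adds operand to register
        {"reg_write": True, "src": "alu"},  # cycle 2: write ALU result back
    ],
}


def run(program, memory):
    """Execute (opcode, address) pairs, one control-table row per clock cycle.

    Returns the final accumulator value and the total cycle count.
    """
    acc = 0      # single accumulator register (assumption)
    cycles = 0
    for opcode, addr in program:
        operand = alu_out = 0
        for signals in CONTROL_TABLE[opcode]:  # the cycle counter selects the row
            if signals.get("mem_read"):
                operand = memory[addr]
            if signals.get("alu_add"):
                alu_out = acc + operand
            if signals.get("reg_write"):
                acc = operand if signals["src"] == "mem" else alu_out
            cycles += 1
    return acc, cycles


result = run([("LOAD", 0), ("ADD", 1), ("ADD", 2)], memory=[5, 7, 30])
```

Because the table is plain data, the same `run` loop can be tested in simulation with different tables, which mirrors the idea of testing the logic table in software before committing it to hardware.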

Increasing execution speed

Complicating this simple-looking series of steps is the fact that the memory hierarchy, which includes caching, main memory and non-volatile storage like hard disks (where the program instructions and data reside), has always been slower than the processor itself. Step (2) often introduces a lengthy (in CPU terms) delay while the data arrives over the computer bus. A considerable amount of research has been put into designs that avoid these delays as much as possible. Over the years, a central goal was to execute more instructions in parallel, thus increasing the effective execution speed of a program. These efforts introduced complicated logic and circuit structures. Initially, these techniques could only be implemented on expensive mainframes or supercomputers due to the amount of circuitry needed for these techniques. As semiconductor manufacturing progressed, more and more of these techniques could be implemented on a single semiconductor chip. See Moore's law.

Instruction set choice

Instruction sets have shifted over the years, from originally very simple to sometimes very complex (in various respects). In recent years, load–store architectures, VLIW and EPIC types have been in fashion. Architectures that deal with data parallelism include SIMD and vector processors. Some labels used to denote classes of CPU architectures are not particularly descriptive, especially so the CISC label; many early designs retroactively denoted "CISC" are in fact significantly simpler than modern RISC processors (in several respects).

However, the choice of instruction set architecture may greatly affect the complexity of implementing high-performance devices. The prominent strategy, used to develop the first RISC processors, was to simplify instructions to a minimum of individual semantic complexity combined with high encoding regularity and simplicity. Such uniform instructions were easily fetched, decoded and executed in a pipelined fashion, and the simple design reduced the number of logic levels so that high operating frequencies could be reached; instruction cache memories compensated for the higher operating frequency and inherently low code density, while large register sets were used to factor out as many of the (slow) memory accesses as possible.

Instruction pipelining

One of the first, and most powerful, techniques to improve performance is the use of instruction pipelining. Early processor designs would carry out all of the steps above for one instruction before moving onto the next. Large portions of the circuitry were left idle at any one step; for instance, the instruction decoding circuitry would be idle during execution and so on.

Pipelining improves performance by allowing a number of instructions to work their way through the processor at the same time. In the same basic example, the processor would start to decode (step 1) a new instruction while the last one was waiting for results. This would allow up to four instructions to be "in flight" at one time, making the processor look four times as fast. Although any one instruction takes just as long to complete (there are still four steps) the CPU as a whole "retires" instructions much faster.
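The "four times as fast" figure can be checked with a little arithmetic. The sketch below assumes an idealized pipeline in which every stage takes one cycle and nothing ever stalls; the function names are illustrative, not from any library.

```python
def unpipelined_cycles(n_instructions, n_stages):
    """Each instruction finishes all stages before the next one starts."""
    return n_instructions * n_stages


def pipelined_cycles(n_instructions, n_stages):
    """Ideal pipeline: fill it once, then retire one instruction per cycle."""
    if n_instructions == 0:
        return 0
    return n_stages + (n_instructions - 1)


def speedup(n_instructions, n_stages):
    """Ratio of unpipelined to pipelined cycle counts."""
    return unpipelined_cycles(n_instructions, n_stages) / pipelined_cycles(
        n_instructions, n_stages
    )
```

Under these assumptions, 100 instructions on a four-stage pipeline take 103 cycles instead of 400, and the speedup approaches (but never quite reaches) the number of stages as the instruction count grows.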

RISC makes pipelines smaller and much easier to construct by cleanly separating each stage of the instruction process and making them take the same amount of time: one cycle. The processor as a whole operates in an assembly line fashion, with instructions coming in one side and results out the other. Due to the reduced complexity of the classic RISC pipeline, the pipelined core and an instruction cache could be placed on the same size die that would otherwise fit the core alone on a CISC design. This was the real reason that RISC was faster. Early designs like the SPARC and MIPS often ran over 10 times as fast as Intel and Motorola CISC solutions at the same clock speed and price.

Pipelines are by no means limited to RISC designs. By 1986 the top-of-the-line VAX implementation (VAX 8800) was a heavily pipelined design, slightly predating the first commercial MIPS and SPARC designs. Most modern CPUs (even embedded CPUs) are now pipelined, and microcoded CPUs with no pipelining are seen only in the most area-constrained embedded processors. Large CISC machines, from the VAX 8800 to the modern Pentium 4 and Athlon, are implemented with both microcode and pipelines. Improvements in pipelining and caching are the two major microarchitectural advances that have enabled processor performance to keep pace with the circuit technology on which they are based.

Cache

It was not long before improvements in chip manufacturing allowed for even more circuitry to be placed on the die, and designers started looking for ways to use it. One of the most common was to add an ever-increasing amount of cache memory on-die. Cache is very fast and expensive memory. It can be accessed in a few cycles as opposed to the many needed to "talk" to main memory. The CPU includes a cache controller which automates reading and writing from the cache. If the data is already in the cache it is accessed from there, at considerable time savings, whereas if it is not the processor is "stalled" while the cache controller reads it in.
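The hit-or-stall behaviour can be illustrated with a toy model. The sketch below assumes a direct-mapped organization (one of several possible cache designs, chosen here only for simplicity); the class name, the 2-cycle hit latency and the 100-cycle miss latency are illustrative assumptions rather than figures from any real processor.

```python
class DirectMappedCache:
    """Toy direct-mapped cache that only tracks which block sits in each line."""

    def __init__(self, n_lines, block_size):
        self.n_lines = n_lines
        self.block_size = block_size
        self.tags = [None] * n_lines  # tag currently stored in each cache line
        self.hits = 0
        self.misses = 0

    def access(self, address):
        """Return True on a hit; on a miss, load the block and return False."""
        block = address // self.block_size
        index = block % self.n_lines
        tag = block // self.n_lines
        if self.tags[index] == tag:
            self.hits += 1
            return True
        self.tags[index] = tag        # the "cache controller" reads the block in
        self.misses += 1
        return False


def total_cycles(cache, addresses, hit_cycles=2, miss_cycles=100):
    """Sum access latencies; both latencies are assumed, not measured."""
    return sum(hit_cycles if cache.access(a) else miss_cycles for a in addresses)


# Walk a 64-byte region twice in 4-byte steps.
cache = DirectMappedCache(n_lines=4, block_size=16)
cycles = total_cycles(cache, list(range(0, 64, 4)) * 2)
```

In this run only the first touch of each 16-byte block misses, so 4 of the 32 accesses stall and the remaining 28 are served from the cache.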

RISC designs started adding cache in the mid-to-late 1980s, often only 4 KB in total. This number grew over time, and typical CPUs now have at least 2 MB, while more powerful CPUs come with 4 or 6 or 12 MB or even 32 MB or more, with the most being 768 MB in the newly released EPYC Milan-X line, organized in multiple levels of a memory hierarchy. Generally speaking, more cache means more performance, due to reduced stalling.

Caches and pipelines were a perfect match for each other. Previously, it didn't make much sense to build a pipeline that could run faster than the access latency of off-chip memory. Using on-chip cache memory instead meant that a pipeline could run at the speed of the cache access latency, a much smaller length of time. This allowed the operating frequencies of processors to increase at a much faster rate than that of off-chip memory.

Branch prediction

One barrier to achieving higher performance through instruction-level parallelism stems from pipeline stalls and flushes due to branches. Normally, whether a conditional branch will be taken isn't known until late in the pipeline as conditional branches depend on results coming from a register. From the time that the processor's instruction decoder has figured out that it has encountered a conditional branch instruction to the time that the deciding register value can be read out, the pipeline needs to be stalled for several cycles, or if it's not and the branch is taken, the pipeline needs to be flushed. As clock speeds increase the depth of the pipeline increases with them, and some modern processors may have 20 stages or more. On average, every fifth instruction executed is a branch, so without any intervention, that's a high amount of stalling.

Techniques such as branch prediction and speculative execution are used to lessen these branch penalties. Branch prediction is where the hardware makes educated guesses on whether a particular branch will be taken. In reality one side or the other of the branch will be taken much more often than the other. Modern designs have rather complex statistical prediction systems, which watch the results of past branches to predict the future with greater accuracy. The guess allows the hardware to prefetch instructions without waiting for the register read. Speculative execution is a further enhancement in which the code along the predicted path is not just prefetched but also executed before it is known whether the branch should be taken or not. This can yield better performance when the guess is good, with the risk of a huge penalty when the guess is bad because instructions need to be undone.
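As a rough illustration of predicting from past outcomes, the sketch below uses a two-bit saturating counter, a classic textbook scheme that is far simpler than the prediction systems in modern designs. The class name, the starting state and the loop-shaped branch history are assumptions made for the example.

```python
class TwoBitPredictor:
    """Saturating counter: states 0-1 predict not taken, 2-3 predict taken."""

    def __init__(self):
        self.counter = 1  # start weakly not-taken (arbitrary choice)

    def predict(self):
        return self.counter >= 2

    def update(self, taken):
        """Move one step toward the observed outcome, saturating at 0 and 3."""
        if taken:
            self.counter = min(3, self.counter + 1)
        else:
            self.counter = max(0, self.counter - 1)


def accuracy(outcomes):
    """Fraction of branch outcomes the predictor guesses correctly."""
    predictor = TwoBitPredictor()
    correct = 0
    for taken in outcomes:
        if predictor.predict() == taken:
            correct += 1
        predictor.update(taken)
    return correct / len(outcomes)


# A loop branch taken 9 times, then falling through once, repeated 10 times.
loop_branch = ([True] * 9 + [False]) * 10
```

On this history the counter mispredicts once while warming up and once at each loop exit, so it guesses 89 of the 100 outcomes correctly; a single surprising outcome does not flip the prediction, which is the point of using two bits instead of one.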

Superscalar

Even with all of the added complexity and gates needed to support the concepts outlined above, improvements in semiconductor manufacturing soon allowed even more logic gates to be used. In the outline above the processor processes parts of a single instruction at a time. Computer programs could be executed faster if multiple instructions were processed simultaneously. This is what superscalar processors achieve, by replicating functional units such as ALUs. The replication of functional units was only made possible when the die area of a single-issue processor no longer stretched the limits of what could be reliably manufactured. By the late 1980s, superscalar designs started to enter the market place.

In modern designs it is common to find two load units, one store (many instructions have no results to store), two or more integer math units, two or more floating point units, and often a SIMD unit of some sort. The instruction issue logic grows in complexity by reading in a huge list of instructions from memory and handing them off to the different execution units that are idle at that point. The results are then collected and re-ordered at the end.

Out-of-order execution

The addition of caches reduces the frequency or duration of stalls due to waiting for data to be fetched from the memory hierarchy, but does not get rid of these stalls entirely. In early designs a cache miss would force the cache controller to stall the processor and wait. Of course there may be some other instruction in the program whose data is available in the cache at that point. Out-of-order execution allows that ready instruction to be processed while an older instruction waits on the cache, then re-orders the results to make it appear that everything happened in the programmed order. This technique is also used to avoid other operand dependency stalls, such as an instruction awaiting a result from a long latency floating-point operation or other multi-cycle operations.
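The benefit can be seen in a deliberately simplified model. The sketch below assumes one instruction issues per cycle, that each instruction is described only by the cycle at which its data becomes available, and that the instructions are independent of one another; it ignores result re-ordering entirely. The function names and timings are illustrative.

```python
def in_order_finish(ready_times):
    """Issue one instruction per cycle, strictly in program order."""
    cycle = 0
    for ready in ready_times:
        cycle = max(cycle, ready) + 1  # stall until this instruction is ready
    return cycle


def out_of_order_finish(ready_times):
    """Issue one instruction per cycle, oldest ready instruction first."""
    waiting = list(ready_times)
    cycle = 0
    while waiting:
        ready_now = [i for i, r in enumerate(waiting) if r <= cycle]
        if ready_now:
            waiting.pop(ready_now[0])  # oldest instruction that can go now
        cycle += 1
    return cycle


# Instruction 0 misses the cache (data ready at cycle 10); the rest hit.
ready_times = [10, 0, 0, 0]
```

With these numbers the in-order machine finishes at cycle 14, because everything queues behind the miss, while the out-of-order machine finishes at cycle 11 by doing the three ready instructions during the wait.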

Register renaming

Register renaming refers to a technique used to avoid unnecessary serialized execution of program instructions because of the reuse of the same registers by those instructions. Suppose we have two groups of instructions that will use the same register. Normally, one set of instructions is executed first to leave the register to the other set, but if the other set is assigned to a different, similar register, both sets of instructions can be executed in parallel rather than in series.
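A minimal sketch of the renaming step follows. It assumes a three-operand instruction format `(dest, src1, src2)`, an unlimited pool of physical registers, and a hypothetical `rename` helper; real hardware has a finite pool and must also free registers, which is omitted here.

```python
def rename(instructions, n_arch_regs):
    """Rename (dest, src1, src2) architectural registers to physical ones.

    Every write gets a fresh physical register, so a later write to the
    same architectural register no longer conflicts with earlier uses.
    """
    mapping = {r: r for r in range(n_arch_regs)}  # arch reg -> physical reg
    next_free = n_arch_regs                       # unlimited pool (assumption)
    renamed = []
    for dest, src1, src2 in instructions:
        p1, p2 = mapping[src1], mapping[src2]     # read current mappings first
        mapping[dest] = next_free                 # then allocate a new dest
        renamed.append((next_free, p1, p2))
        next_free += 1
    return renamed


# Two independent groups that both reuse architectural register r1.
program = [
    (1, 2, 3),  # r1 = r2 op r3
    (4, 1, 1),  # r4 = r1 op r1   (group A reads r1)
    (1, 5, 6),  # r1 = r5 op r6   (group B overwrites r1)
    (7, 1, 1),  # r7 = r1 op r1   (group B reads the new r1)
]
renamed = rename(program, n_arch_regs=8)
```

After renaming, group A works on physical register 8 and group B on physical register 10, so the two groups share no register and nothing forces one to wait for the other.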

Multiprocessing and multithreading

Computer architects have become stymied by the growing mismatch in CPU operating frequencies and DRAM access times. None of the techniques that exploited instruction-level parallelism (ILP) within one program could make up for the long stalls that occurred when data had to be fetched from main memory. Additionally, the large transistor counts and high operating frequencies needed for the more advanced ILP techniques required power dissipation levels that could no longer be cheaply cooled. For these reasons, newer generations of computers have started to exploit higher levels of parallelism that exist outside of a single program or program thread.

This trend is sometimes known as ''throughput computing''. The idea originated in the mainframe market, where online transaction processing emphasized not just the execution speed of one transaction but the capacity to deal with massive numbers of transactions. With transaction-based applications such as network routing and web-site serving greatly increasing in the last decade, the computer industry has re-emphasized capacity and throughput issues.

One way this parallelism is achieved is through multiprocessing systems, computer systems with multiple CPUs. Once reserved for high-end mainframes and supercomputers, small-scale (2–8) multiprocessor servers have become commonplace in the small business market. For large corporations, large-scale (16–256) multiprocessors are common. Even personal computers with multiple CPUs have appeared since the 1990s.

With further transistor size reductions made available by semiconductor technology advances, multi-core CPUs have appeared, in which multiple CPUs are implemented on the same silicon chip. They were initially used in chips targeting embedded markets, where simpler and smaller CPUs allowed multiple instantiations to fit on one piece of silicon. By 2005, semiconductor technology allowed dual high-end desktop CPU ''CMP'' chips to be manufactured in volume. Some designs, such as Sun Microsystems' UltraSPARC T1, have reverted to simpler (scalar, in-order) designs in order to fit more processors on one piece of silicon.

Another technique that has become more popular recently is multithreading. In multithreading, when the processor has to fetch data from slow system memory, instead of stalling for the data to arrive, the processor switches to another program or program thread which is ready to execute. Though this does not speed up a particular program/thread, it increases the overall system throughput by reducing the time the CPU is idle.

Conceptually, multithreading is equivalent to a context switch at the operating system level. The difference is that a multithreaded CPU can do a thread switch in one CPU cycle instead of the hundreds or thousands of CPU cycles a context switch normally requires. This is achieved by replicating the state hardware (such as the register file and program counter) for each active thread.
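The effect of switching threads on a memory stall can be sketched with a small cycle-counting model in Python. Everything here is an illustrative assumption rather than a model of a real CPU: the function names, the two operation kinds (`"alu"` taking one cycle, `"mem"` taking `miss_latency` cycles), the fixed thread priority, and a thread switch that costs no extra cycles. The per-thread `pc` and `ready_at` lists stand in for the replicated state hardware.

```python
def run_blocking(threads, miss_latency):
    """Run threads one after another; the core idles during each memory access."""
    cycles = 0
    for ops in threads:
        for op in ops:
            cycles += miss_latency if op == "mem" else 1
    return cycles


def run_switch_on_stall(threads, miss_latency):
    """Issue from another ready thread whenever the current one waits on memory."""
    pc = [0] * len(threads)        # per-thread program counter (replicated state)
    ready_at = [0] * len(threads)  # cycle at which each thread can issue again
    cycle = 0
    while any(pc[t] < len(ops) for t, ops in enumerate(threads)):
        # At most one instruction issues per cycle (scalar core); the
        # lowest-numbered ready thread wins.
        for t, ops in enumerate(threads):
            if pc[t] < len(ops) and ready_at[t] <= cycle:
                op = ops[pc[t]]
                pc[t] += 1
                ready_at[t] = cycle + (miss_latency if op == "mem" else 1)
                break
        cycle += 1
    # The run ends when the last outstanding operation completes.
    return max(ready_at)


workload = [["mem", "alu", "alu"], ["mem", "alu", "alu"]]
blocking_cycles = run_blocking(workload, miss_latency=10)
threaded_cycles = run_switch_on_stall(workload, miss_latency=10)
```

With this workload the blocking core needs 24 cycles and the switch-on-stall core needs 14. Neither thread finishes sooner than it would alone; the gain comes from overlapping one thread's memory wait with the other's work.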

A further enhancement is simultaneous multithreading. This technique allows superscalar CPUs to execute instructions from different programs/threads simultaneously in the same cycle.
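As a rough illustration of why sharing issue slots helps, the following Python sketch counts how many issue slots of a superscalar core are filled in a single cycle. The function name, the `width` parameter, and the per-thread counts of ready instructions are hypothetical; real SMT designs use more elaborate fetch and issue policies.

```python
def slots_filled(ready_per_thread, width, smt):
    """Count issue slots used in one cycle on a core with `width` slots."""
    if not smt:
        # Without SMT only one thread may issue in a given cycle; assume the
        # thread with the most ready instructions is chosen.
        return min(width, max(ready_per_thread))
    filled = 0
    for ready in ready_per_thread:
        # With SMT, leftover slots are offered to the next thread.
        filled += min(ready, width - filled)
    return filled


ready = [2, 1, 2]  # ready, independent instructions in each of three threads
without_smt = slots_filled(ready, width=4, smt=False)
with_smt = slots_filled(ready, width=4, smt=True)
```

In this example a 4-wide core fills 2 slots without SMT and all 4 with it, because no single thread has enough independent instructions to use the full width on its own.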

See also

* Control unit
* Hardware architecture
* Hardware description language (HDL)
* Instruction-level parallelism (ILP)
* List of AMD CPU microarchitectures
* List of Intel CPU microarchitectures
* Processor design
* Stream processing
* VHDL
* Very large-scale integration (VLSI)
* Verilog
