History of randomness

In ancient history, the concepts of chance and randomness were intertwined with that of fate. Many ancient peoples threw dice to determine fate, and this later evolved into games of chance. At the same time, most ancient cultures used various methods of divination to attempt to circumvent randomness and fate. Beyond religion and games of chance, randomness has been attested for sortition since at least ancient Athenian democracy in the form of a kleroterion.

The formalization of odds and chance was perhaps earliest done by the Chinese 3,000 years ago. The Greek philosophers discussed randomness at length, but only in non-quantitative forms. It was only in the sixteenth century that Italian mathematicians began to formalize the odds associated with various games of chance. The invention of modern calculus had a positive impact on the formal study of randomness. In the 19th century the concept of entropy was introduced in physics.

The early part of the twentieth century saw a rapid growth in the formal analysis of randomness, and mathematical foundations for probability were introduced, leading to its axiomatization in 1933. At the same time, the advent of quantum mechanics changed the scientific perspective on determinacy. In the mid to late 20th century, ideas of algorithmic information theory introduced new dimensions to the field via the concept of algorithmic randomness.

Although randomness had often been viewed as an obstacle and a nuisance for many centuries, in the twentieth century computer scientists began to realize that the ''deliberate'' introduction of randomness into computations can be an effective tool for designing better algorithms. In some cases, such randomized algorithms are able to outperform the best deterministic methods.

Antiquity to the Middle Ages

Pre-Christian people along the Mediterranean threw dice to determine fate, and this later evolved into games of chance. There is also evidence of games of chance played by ancient Egyptians, Hindus and Chinese, dating back to 2100 BC. The Chinese used dice before the Europeans, and have a long history of playing games of chance.

Over 3,000 years ago, problems concerning the tossing of several coins were considered in the I Ching, one of the oldest Chinese mathematical texts, which probably dates to 1150 BC. The two principal elements yin and yang were combined in the I Ching in various forms to produce ''Heads and Tails'' permutations of the type HH, TH, HT, etc., and the Chinese seem to have been aware of Pascal's triangle long before the Europeans formalized it in the 17th century. However, Western philosophy focused on the non-mathematical aspects of chance and randomness until the 16th century.
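The link between heads-and-tails permutations and Pascal's triangle can be checked by brute force. The following is a minimal sketch, not a reconstruction of any historical method: the function name `heads_distribution` is hypothetical, and the enumeration simply lists every H/T sequence of a given length and groups the sequences by their number of heads.

```python
from collections import Counter
from itertools import product
from math import comb


def heads_distribution(n):
    """Count the H/T sequences of length n by their number of heads."""
    counts = Counter(seq.count("H") for seq in product("HT", repeat=n))
    return [counts[k] for k in range(n + 1)]


for n in range(1, 5):
    row = heads_distribution(n)
    # Each row should agree with the binomial coefficients C(n, k),
    # i.e. with row n of Pascal's triangle.
    assert row == [comb(n, k) for k in range(n + 1)]
    print(n, row)
```

For two tosses the four permutations HH, HT, TH and TT fall into groups of sizes 1, 2 and 1, which is the second row of the triangle.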

The development of the concept of chance throughout history has been very gradual. Historians have wondered why progress in the field of randomness was so slow, given that humans have encountered chance since antiquity. Deborah J. Bennett suggests that ordinary people face an inherent difficulty in understanding randomness, although the concept is often taken as being obvious and self-evident. She cites studies by Kahneman and Tversky; these concluded that statistical principles are not learned from everyday experience because people do not attend to the detail necessary to gain such knowledge.

The Greek philosophers were the earliest Western thinkers to address chance and randomness. Around 400 BC, Democritus presented a view of the world as governed by the unambiguous laws of order and considered randomness a subjective concept that originated only from the inability of humans to understand the nature of events. He used the example of two men who send their servants to fetch water at the same time so that they will meet. The servants, unaware of the plan, would view the meeting as random.

Aristotle saw chance and necessity as opposite forces. He argued that nature had rich and constant patterns that could not be the result of chance alone, but that these patterns never displayed the machine-like uniformity of necessary determinism. He viewed randomness as a genuine and widespread part of the world, but as subordinate to necessity and order. Aristotle classified events into three types: ''certain'' events that happen necessarily; ''probable'' events that happen in most cases; and ''unknowable'' events that happen by pure chance. He considered the outcome of games of chance as unknowable.

Around 300 BC Epicurus proposed the concept that randomness exists by itself, independent of human knowledge. He believed that in the atomic world, atoms would ''swerve'' at random along their paths, bringing about randomness at higher levels.

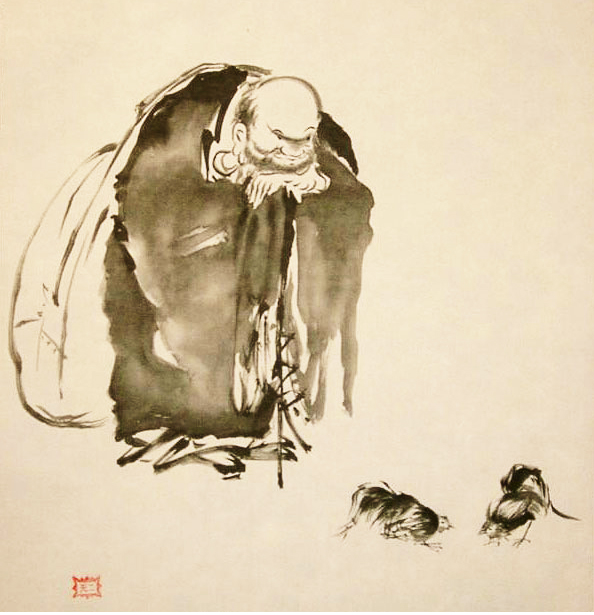

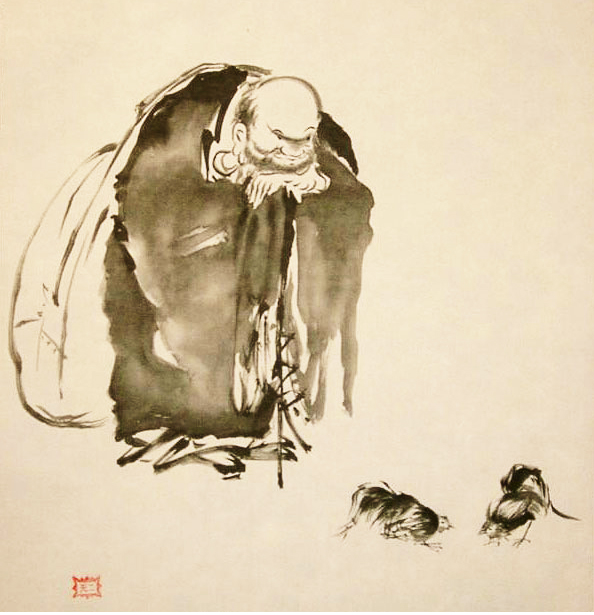

For several centuries thereafter, the idea of chance continued to be intertwined with fate. Divination was practiced in many cultures, using diverse methods. The Chinese analyzed the cracks in turtle shells, while the Germans, who according to Tacitus had the highest regard for lots and omens, utilized strips of bark. In the Roman Empire, chance was personified by the goddess Fortuna. The Romans would partake in games of chance to simulate what Fortuna would have decided. In 49 BC, Julius Caesar allegedly made his fateful decision to cross the Rubicon after throwing dice.

Aristotle's classification of events into three classes (''certain'', ''probable'' and ''unknowable'') was adopted by Roman philosophers, but they had to reconcile it with deterministic Christian teachings in which even events unknowable to man were considered to be predetermined by God. Around 960, Bishop Wibold of Cambrai correctly enumerated the 56 different outcomes (without permutations) of playing with three dice. No reference to playing cards has been found in Europe before 1350. The Church preached against card playing, and card games spread much more slowly than games based on dice. The Christian Church specifically forbade divination, and wherever Christianity went, divination lost most of its old-time power.
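Wibold's count of 56 can be verified by direct enumeration. The sketch below is a modern illustration only, not a description of how Wibold arrived at the figure: it lists the outcomes of three dice first without regard to order and then with order taken into account.

```python
from itertools import combinations_with_replacement, product

faces = range(1, 7)

# Outcomes "without permutations": (1, 2, 3) and (3, 2, 1) count once.
unordered = list(combinations_with_replacement(faces, 3))

# Outcomes with permutations: each die is tracked separately.
ordered = list(product(faces, repeat=3))

print(len(unordered))  # 56
print(len(ordered))    # 216
```

The 56 unordered outcomes are not equally likely: a triple such as (1, 1, 1) corresponds to a single ordered throw, whereas (1, 2, 3) corresponds to six. That distinction became central once outcomes began to be treated as ratios.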

Over the centuries, many Christian scholars wrestled with the conflict between the belief in free will and its implied randomness, and the idea that God knows everything that happens. Saints Augustine and Aquinas tried to reach an accommodation between foreknowledge and free will, but Martin Luther argued against randomness and took the position that God's omniscience renders human actions unavoidable and determined. In the 13th century, Thomas Aquinas viewed randomness not as the result of a single cause, but of several causes coming together by chance. While he believed in the existence of randomness, he rejected it as an explanation of the end-directedness of nature, for he saw too many patterns in nature to have been obtained by chance.

The Greeks and Romans had not noticed the magnitudes of the relative frequencies of the games of chance. For centuries, chance was discussed in Europe with no mathematical foundation and it was only in the 16th century that Italian mathematicians began to discuss the outcomes of games of chance as ratios. In his ''Liber de Ludo Aleae'' (a gambler's manual written around 1565 and published after his death), Gerolamo Cardano wrote one of the first formal tracts to analyze the odds of winning at various games.

17th–19th centuries

Around 1620 Galileo wrote a paper called ''On a discovery concerning dice'' that used an early probabilistic model to address specific questions. In 1654, prompted by Chevalier de Méré's interest in gambling, Blaise Pascal corresponded with Pierre de Fermat, and much of the groundwork for probability theory was laid. Pascal's Wager was noted for its early use of the concept of infinity, and the first formal use of decision theory. The work of Pascal and Fermat influenced Leibniz's work on the infinitesimal calculus, which in turn provided further momentum for the formal analysis of probability and randomness.

The first known suggestion for viewing randomness in terms of complexity was made by Leibniz in an obscure 17th-century document discovered after his death. Leibniz asked how one could know if a set of points on a piece of paper were selected at random (e.g. by splattering ink) or not. Given that for any finite set of points there is always a mathematical equation that can describe the points (e.g. by Lagrange interpolation), the question focuses on the way the points are expressed mathematically. Leibniz viewed the points as random if the function describing them had to be extremely complex. Three centuries later, the same concept was formalized as algorithmic randomness by A. N. Kolmogorov and Gregory Chaitin, in terms of the minimal length of a computer program needed to describe a finite string.
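Leibniz's observation that some equation always fits a finite set of points can be illustrated with Lagrange interpolation. The following is a minimal sketch under stated assumptions: the function name `lagrange_interpolate` and the sample points are hypothetical, and the points are assumed to have pairwise distinct x-coordinates. Exact rational arithmetic is used so that the fit can be checked without rounding error.

```python
from fractions import Fraction


def lagrange_interpolate(points, x):
    """Evaluate at x the lowest-degree polynomial through the given points.

    points: list of (x_i, y_i) pairs with pairwise distinct x_i.
    """
    x = Fraction(x)
    total = Fraction(0)
    for i, (xi, yi) in enumerate(points):
        # Basis polynomial: equals 1 at x_i and 0 at every other x_j.
        term = Fraction(yi)
        for j, (xj, _) in enumerate(points):
            if j != i:
                term *= (x - xj) / Fraction(xi - xj)
        total += term
    return total


# Hypothetical "ink splatter": arbitrary points chosen for illustration.
splatter = [(0, 3), (1, -2), (2, 7), (4, 1), (5, 5)]

# The polynomial passes through every point, however the points arose.
for px, py in splatter:
    assert lagrange_interpolate(splatter, px) == py
```

Because a fitting polynomial exists for any such set, the existence of a formula says nothing about randomness. What matters in Leibniz's framing is how complicated the description has to be relative to the data it describes.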

''The Doctrine of Chances'', the first textbook on probability theory, was published in 1718 and the field continued to grow thereafter. The frequency theory approach to probability was first developed by Robert Ellis and John Venn late in the 19th century.

While the mathematical elite was making progress in understanding randomness from the 17th to the 19th century, the public at large continued to rely on practices such as fortune telling in the hope of taming chance. Fortunes were told in a multitude of ways both in the Orient (where fortune telling was later termed an addiction) and in Europe by gypsies and others. English practices such as the reading of eggs dropped in a glass were exported to Puritan communities in North America.

The term entropy, which is now a key element in the study of randomness, was coined by Rudolf Clausius in 1865 as he studied heat engines in the context of the second law of thermodynamics. Clausius was the first to state "entropy always increases".

From the time of Newton until about 1890, it was generally believed that if one knows the initial state of a system with great accuracy, and if all the forces acting on the system can be formulated with equal accuracy, it would be possible, in principle, to make predictions of the state of the universe for an infinitely long time. The limits to such predictions in physical systems became clear as early as 1893 when Henri Poincaré showed that in the three-body problem in astronomy, small changes to the initial state could result in large changes in trajectories during the numerical integration of the equations.

During the 19th century, as probability theory was formalized and better understood, the attitude towards "randomness as nuisance" began to be questioned. Goethe wrote:

"The tissue of the world is built from necessities and randomness; the intellect of men places itself between both and can control them; it considers the necessity and the reason of its existence; it knows how randomness can be managed, controlled, and used."

The words of Goethe proved prophetic when, in the 20th century, randomized algorithms were discovered as powerful tools. By the end of the 19th century, Newton's model of a mechanical universe was fading away as the statistical view of the collision of molecules in gases was studied by Maxwell and Boltzmann. Boltzmann's equation ''S'' = ''k'' log ''W'' (inscribed on his tombstone, with log denoting the natural logarithm) first related entropy with logarithms.
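As a small worked illustration of Boltzmann's equation, the sketch below evaluates ''S'' = ''k'' log ''W'' for a given number of microstates ''W''. The function name `boltzmann_entropy` and the microstate count are hypothetical and chosen only for illustration; the value of ''k'' is the Boltzmann constant as fixed in the SI.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def boltzmann_entropy(microstates):
    """Entropy S = k ln W for W equally likely microstates."""
    if microstates < 1:
        raise ValueError("W must be at least 1")
    return K_B * math.log(microstates)


w = 10**20  # hypothetical microstate count, for illustration only
print(boltzmann_entropy(w))
# Doubling W adds a fixed amount, k ln 2, regardless of W itself.
print(boltzmann_entropy(2 * w) - boltzmann_entropy(w))
```

The logarithm is what makes entropy additive: combining two independent systems multiplies their microstate counts, and the logarithm turns that product into a sum.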

20th century

During the 20th century, the five main interpretations of probability theory (''classical'', ''logical'', ''frequency'', ''propensity'' and ''subjective'') became better understood and were discussed, compared and contrasted. A significant number of application areas were developed in this century, from finance to physics. In 1900 Louis Bachelier applied Brownian motion to evaluate stock options, effectively launching the fields of financial mathematics and stochastic processes.

In 1909 Émile Borel became one of the first mathematicians to formally address randomness, and introduced normal numbers. In 1919 Richard von Mises gave the first definition of algorithmic randomness via the impossibility of a gambling system. He advanced the frequency theory of randomness in terms of what he called ''the collective'', i.e. a random sequence. Von Mises regarded the randomness of a collective as an empirical law, established by experience. He related the "disorder" or randomness of a collective to the lack of success of attempted gambling systems. This approach led him to suggest a definition of randomness that was later refined and made mathematically rigorous by Alonzo Church by using computable functions in 1940. Von Mises likened the principle of the ''impossibility of a gambling system'' to the principle of the conservation of energy, a law that cannot be proven, but has held true in repeated experiments.

Von Mises never fully formalized his rules for sub-sequence selection, but in his 1940 paper "On the concept of a random sequence", Alonzo Church suggested that the functions used for place selections in the formalism of von Mises be computable functions rather than arbitrary functions of the initial segments of the sequence, appealing to the Church–Turing thesis on effectiveness.

The advent of quantum mechanics in the early 20th century and the formulation of the Heisenberg uncertainty principle in 1927 marked the end of the Newtonian mindset among physicists regarding the ''determinacy of nature''. In quantum mechanics, there is not even a way to consider all observable elements in a system as random variables ''at once'', since many observables do not commute.

By the early 1940s, the frequency theory approach to probability was well accepted within the Vienna Circle, but in the 1950s Karl Popper proposed the propensity theory. Given that the frequency approach cannot deal with "a single toss" of a coin, and can only address large ensembles or collectives, the single-case probabilities were treated as propensities or chances. The concept of propensity was also driven by the desire to handle single-case probability settings in quantum mechanics, e.g. the probability of decay of a specific atom at a specific moment. In more general terms, the frequency approach cannot deal with the probability of the death of a ''specific person'', given that the death cannot be repeated multiple times for that person. Popper echoed the same sentiment as Aristotle in viewing randomness as subordinate to order when he wrote that "the concept of chance is not opposed to the concept of law" in nature, provided one considers the laws of chance.

Claude Shannon's development of information theory in 1948 gave rise to the ''entropy view'' of randomness. In this view, randomness is the opposite of ''determinism'' in a stochastic process. Hence if a stochastic system has entropy zero it has no randomness, and any increase in entropy increases randomness. Shannon's formulation reduces to Boltzmann's 19th-century formulation of entropy in the case where all probabilities are equal. Entropy is now widely used in diverse fields of science, from thermodynamics to quantum chemistry.
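As an illustrative sketch of the entropy view, the following computes the entropy of a few discrete distributions using the standard formula H = -Σ p·log2(p). The function name `shannon_entropy` and the example distributions are assumptions chosen for illustration and do not come from the historical sources.

```python
import math


def shannon_entropy(probs):
    """Return the Shannon entropy, in bits, of a discrete distribution.

    `probs` is assumed to be a sequence of non-negative numbers summing to 1.
    Terms with probability zero contribute nothing to the sum.
    """
    return sum(-p * math.log2(p) for p in probs if p > 0)


# A fully determined outcome carries no randomness in this view.
certain = shannon_entropy([1.0, 0.0])

# A fair coin is the most random two-outcome system.
fair_coin = shannon_entropy([0.5, 0.5])

# A biased coin sits between the two extremes.
biased_coin = shannon_entropy([0.9, 0.1])

# With n equally likely outcomes the entropy equals log2(n),
# the equal-probability case mentioned in the text.
uniform_eight = shannon_entropy([1 / 8] * 8)

print(certain, fair_coin, biased_coin, uniform_eight)
```

Under these assumptions, the deterministic case gives zero, and the equal-probability case depends only on the number of outcomes.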

Martingales for the study of chance and betting strategies were introduced by Paul Lévy in the 1930s and were formalized by Joseph L. Doob in the 1950s. The application of the random walk hypothesis in financial theory was first proposed by Maurice Kendall in 1953. It was later promoted by Eugene Fama and Burton Malkiel.

Random strings were first studied in the 1960s by A. N. Kolmogorov (who had provided the first axiomatic definition of probability theory in 1933), Chaitin and Martin-Löf. The algorithmic randomness of a string was defined as the minimum size of a program (e.g. in bits) executed on a universal computer that yields the string. Chaitin's Omega number later related randomness and the halting probability for programs.
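The minimum program size cannot be computed in general, so the sketch below uses the compressed length produced by Python's `zlib` module as a crude upper-bound proxy. This substitution is an assumption made purely for illustration, and the helper name `compressed_size` is hypothetical. The sketch contrasts a highly patterned string with bytes drawn from the operating system's entropy source.

```python
import os
import zlib


def compressed_size(data: bytes) -> int:
    """Length in bytes of `data` after zlib compression at the highest level.

    This is only a rough stand-in for algorithmic complexity: a general
    purpose compressor can miss regularities that a short program would exploit.
    """
    return len(zlib.compress(data, 9))


# 10,000 bytes with an obvious repeating pattern: a short description exists.
patterned = b"01" * 5000

# 10,000 bytes from the OS entropy source: no short description is expected.
unpatterned = os.urandom(10000)

print("patterned:  ", compressed_size(patterned), "bytes")
print("unpatterned:", compressed_size(unpatterned), "bytes")
```

The patterned string should compress to a small fraction of its length, while the unpatterned bytes should remain close to their original size.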

In 1964, Benoît Mandelbrot suggested that most statistical models approached only a first stage of dealing with indeterminism, and that they ignored many aspects of real-world turbulence. In his 1997 book he defined ''seven states of randomness'' ranging from "mild to wild", with traditional randomness being at the mild end of the scale.

Despite mathematical advances, reliance on other methods of dealing with chance, such as fortune telling and astrology, continued in the 20th century. The government of Myanmar reportedly shaped 20th-century economic policy based on fortune telling and planned the move of the capital of the country based on the advice of astrologers. White House Chief of Staff Donald Regan criticized the involvement of astrologer Joan Quigley in decisions made during Ronald Reagan's presidency in the 1980s. Quigley claimed to have been the White House astrologer for seven years.

During the 20th century, limits in dealing with randomness were better understood. The best-known example of both theoretical and operational limits on predictability is weather forecasting, simply because models have been used in the field since the 1950s. Predictions of weather and climate are necessarily uncertain. Observations of weather and climate are uncertain and incomplete, and the models into which the data are fed are uncertain. In 1961, Edward Lorenz noticed that a very small change to the initial data submitted to a computer program for weather simulation could result in a completely different weather scenario. This later became known as the butterfly effect, often paraphrased as the question: "''Does the flap of a butterfly's wings in Brazil set off a tornado in Texas?''". A key example of serious practical limits on predictability is in geology, where the ability to predict earthquakes either on an individual or on a statistical basis remains a remote prospect.
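Sensitivity to initial data can be sketched with a much simpler system than a weather simulation. The example below uses the logistic map x → r·x·(1 − x) with r = 4 as a stand-in; it is not Lorenz's model. The starting value, the size of the perturbation, and the function names are all assumptions chosen for illustration.

```python
def logistic_orbit(x0, steps, r=4.0):
    """Iterate the logistic map from x0 and return all visited values."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


def divergence(x0=0.2, delta=1e-10, steps=100):
    """Absolute gap, step by step, between two orbits started `delta` apart."""
    a = logistic_orbit(x0, steps)
    b = logistic_orbit(x0 + delta, steps)
    return [abs(p - q) for p, q in zip(a, b)]


gaps = divergence()

# Report the gap at a few steps to show how it grows.
for step in (0, 5, 20, 40, 60):
    print(f"step {step:3d}: gap = {gaps[step]:.3e}")
```

In this toy system the two orbits start out indistinguishable for practical purposes, yet the gap between them grows until the orbits are unrelated.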

In the late 1970s and early 1980s, computer scientists began to realize that the ''deliberate'' introduction of randomness into computations can be an effective tool for designing better algorithms. In some cases, such randomized algorithms outperform the best deterministic methods.
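One commonly cited example of this idea is Freivalds' algorithm, which checks whether a claimed matrix product A·B = C is correct by multiplying both sides by random 0/1 vectors instead of recomputing the full product. It is chosen here only as an illustration and is not named in the sources above. The sketch assumes square matrices given as lists of lists of integers, and the parameter names are hypothetical.

```python
import random


def freivalds(a, b, c, rounds=20, rng=random):
    """Probabilistically check whether a @ b == c for square integer matrices.

    Each round costs O(n^2) work. A correct product is always accepted; an
    incorrect one is wrongly accepted with probability at most 2 ** -rounds.
    """
    n = len(a)
    for _ in range(rounds):
        # Random 0/1 vector used to probe both sides of the equation.
        r = [rng.randint(0, 1) for _ in range(n)]
        # Compute b @ r, then a @ (b @ r), and compare with c @ r.
        br = [sum(b[i][j] * r[j] for j in range(n)) for i in range(n)]
        abr = [sum(a[i][j] * br[j] for j in range(n)) for i in range(n)]
        cr = [sum(c[i][j] * r[j] for j in range(n)) for i in range(n)]
        if abr != cr:
            return False  # A witness was found: the product is wrong.
    return True  # No witness found: the product is probably correct.


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
correct = [[19, 22], [43, 50]]
wrong = [[19, 22], [43, 51]]

print(freivalds(a, b, correct))
print(freivalds(a, b, wrong))
```

The trade-off is that a "probably correct" answer is accepted in exchange for less work per check, with the error probability shrinking as the number of rounds grows.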

See also

* Randomness
* Sheynin, O.B. (1991). "The notion of randomness from Aristotle to Poincaré". ''Mathématiques et sciences humaines''. 114: 41–55. http://www.numdam.org/article/MSH_1991__114__41_0.pdf