A CPU cache is a memory which holds the data recently used by the processor. A block of memory cannot necessarily be placed at an arbitrary location in the cache and may be restricted to a single cache line or a set of cache lines by the cache placement policy. In other words, the cache placement policy determines where a particular memory block can be placed when it goes into the cache.

There are three different policies available for placement of a memory block in the cache: direct-mapped, fully associative, and set-associative. Originally this space of cache organizations was described using the term "congruence mapping".

Direct-mapped cache

In a direct-mapped cache structure, the cache is organized into multiple sets with a single cache line per set. Based on the address of the memory block, it can only occupy a single cache line. The cache can be framed as a column matrix.

To place a block in the cache

* The set is determined by the index bits derived from the address of the memory block.

* The memory block is placed in the set identified and the tag is stored in the tag field associated with the set.

* If the cache line is previously occupied, then the new data replaces the memory block in the cache.

To search a word in the cache

* The set is identified by the index bits of the address.

* The tag bits derived from the memory block address are compared with the tag bits associated with the set. If the tag matches, then there is a cache hit and the cache block is returned to the processor. Otherwise there is a cache miss and the memory block is fetched from the lower memory (main memory, disk).
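The placement and search steps above can be sketched in a few lines of Python. This is a minimal illustration, not a hardware model: the class name `DirectMappedCache`, the `access` method, and the sizes used (64 sets, 4-byte blocks) are assumptions chosen for the example, and only tags are tracked, not the cached data itself.

```python
class DirectMappedCache:
    """Toy direct-mapped cache: one line per set, tracked as (valid, tag)."""

    def __init__(self, num_sets, block_size):
        # Both sizes are assumed to be powers of two.
        self.num_sets = num_sets
        self.block_size = block_size
        self.valid = [False] * num_sets
        self.tags = [0] * num_sets

    def access(self, address):
        """Return True on a hit; on a miss, install the block and return False."""
        block = address // self.block_size  # drop the offset bits
        index = block % self.num_sets       # index bits select the set
        tag = block // self.num_sets        # remaining high bits form the tag
        if self.valid[index] and self.tags[index] == tag:
            return True
        # Miss: whatever occupied the line is replaced unconditionally.
        self.valid[index] = True
        self.tags[index] = tag
        return False


cache = DirectMappedCache(num_sets=64, block_size=4)
results = [cache.access(a) for a in (0x0000, 0x0000, 0x0100, 0x0000)]
print(results)  # [False, True, False, False]
```

Addresses 0x0000 and 0x0100 share set 0 in this configuration, so the third access evicts the first block and the fourth access misses again.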

Advantages

* This placement policy is power efficient as it avoids the search through all the cache lines.

* The placement policy and the replacement policy are simple.

* It requires cheap hardware as only one tag needs to be checked at a time.

Disadvantage

* It has a lower cache hit rate, as there is only one cache line available in a set. Every time a new memory block is referenced that maps to the same set, the cache line is replaced, which causes a conflict miss.

Example

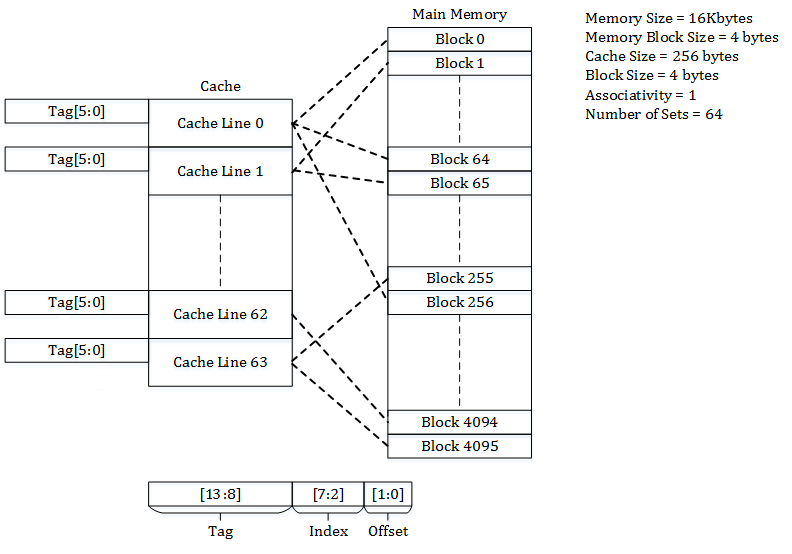

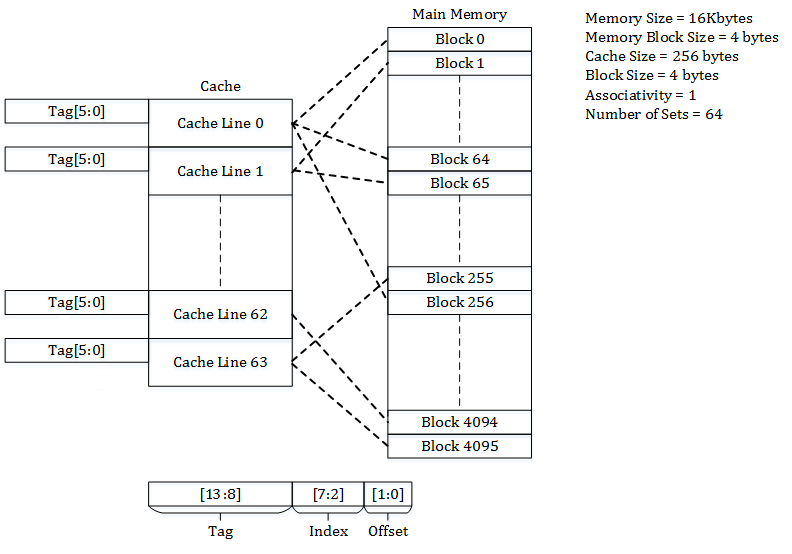

Consider a main memory of 16 kilobytes, which is organized as 4-byte blocks, and a direct-mapped cache of 256 bytes with a block size of 4 bytes. Because the main memory is 16kB, we need a minimum of 14 bits to uniquely represent a memory address.

Since each cache block is of size 4 bytes, the total number of sets in the cache is 256/4, which equals 64 sets.

The incoming address to the cache is divided into bits for Offset, Index and Tag.

* ''Offset'' corresponds to the bits used to determine the byte to be accessed from the cache line. Because the cache lines are 4 bytes long, there are ''2 offset bits''.

* ''Index'' corresponds to bits used to determine the set of the Cache. There are 64 sets in the cache, and because 2^6 = 64, there are ''6 index bits.''

* ''Tag'' corresponds to the remaining bits. This means there are 14 – (6+2) = ''6 tag bits'', which are stored in tag field to match the address on cache request.
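The field widths above follow mechanically from the three sizes. As a rough sketch (the function name `address_fields` and its `ways` parameter are hypothetical, and all sizes are assumed to be powers of two), the arithmetic can be written as:

```python
def address_fields(memory_bytes, cache_bytes, block_bytes, ways=1):
    """Return (tag_bits, index_bits, offset_bits) for the given sizes."""
    address_bits = (memory_bytes - 1).bit_length()  # 16 kB -> 14 bits
    offset_bits = (block_bytes - 1).bit_length()    # 4-byte block -> 2 bits
    num_sets = cache_bytes // (block_bytes * ways)  # lines are grouped into sets
    index_bits = (num_sets - 1).bit_length()        # 64 sets -> 6 bits
    tag_bits = address_bits - index_bits - offset_bits
    return tag_bits, index_bits, offset_bits


print(address_fields(16 * 1024, 256, 4))  # (6, 6, 2)
```

With `ways=1` the cache is direct-mapped, which matches the example here; larger values of `ways` shrink the number of sets and move bits from the index to the tag.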

Below are memory addresses and an explanation of which cache line they map to:

# Address 0x0000 (tag - 0b00_0000, index – 0b00_0000, offset – 0b00) corresponds to block 0 of the memory and maps to the set 0 of the cache.

# Address 0x0004 (tag - 0b00_0000, index – 0b00_0001, offset – 0b00) corresponds to block 1 of the memory and maps to the set 1 of the cache.

# Address 0x00FF (tag – 0b00_0000, index – 0b11_1111, offset – 0b11) corresponds to block 63 of the memory and maps to the set 63 of the cache.

# Address 0x0100 (tag – 0b00_0001, index – 0b00_0000, offset – 0b00) corresponds to block 64 of the memory and maps to the set 0 of the cache.
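The four mappings above can be reproduced with shifts and masks. The following sketch assumes the field widths of this example (2 offset bits, 6 index bits); `split_address` is a name introduced here for illustration.

```python
OFFSET_BITS = 2  # 4-byte blocks
INDEX_BITS = 6   # 64 sets


def split_address(address):
    """Split a 14-bit address into (tag, index, offset)."""
    offset = address & ((1 << OFFSET_BITS) - 1)
    index = (address >> OFFSET_BITS) & ((1 << INDEX_BITS) - 1)
    tag = address >> (OFFSET_BITS + INDEX_BITS)
    return tag, index, offset


for address in (0x0000, 0x0004, 0x00FF, 0x0100):
    tag, index, offset = split_address(address)
    print(f"0x{address:04X}: tag={tag:06b} index={index:06b} offset={offset:02b}")
```

The index value is the set number, so 0x0000 and 0x0100 both land in set 0 and differ only in their tags.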

Fully associative cache

In a fully associative cache, the cache is organized into a single cache set with multiple cache lines. A memory block can occupy any of the cache lines. The cache organization can be framed as a row matrix.

To place a block in the cache

* The cache line is selected based on the valid bit associated with it. If the valid bit is 0, the new memory block can be placed in the cache line, else it has to be placed in another cache line with valid bit 0.

* If the cache is completely occupied then a block is evicted and the memory block is placed in that cache line.

* The eviction of memory block from the cache is decided by the replacement policy.

To search a word in the cache

* The Tag field of the memory address is compared with tag bits associated with all the cache lines. If it matches, the block is present in the cache and is a cache hit. If it doesn't match, then it's a cache miss and has to be fetched from the lower memory.

* Based on the Offset, a byte is selected and returned to the processor.
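A minimal sketch of these steps follows. The text leaves the replacement policy open, so the least-recently-used (LRU) eviction used here is an assumption of the example, as are the names `FullyAssociativeCache` and `access`. A dictionary lookup stands in for the parallel comparison of all tags that hardware would perform.

```python
from collections import OrderedDict


class FullyAssociativeCache:
    """Toy fully associative cache; LRU replacement is an assumption of this sketch."""

    def __init__(self, num_lines, block_size):
        self.num_lines = num_lines
        self.block_size = block_size
        self.lines = OrderedDict()  # tag -> None, ordered from oldest to newest use

    def access(self, address):
        """Return True on a hit; on a miss, install the block and return False."""
        tag = address // self.block_size  # no index bits: all bits above the offset
        if tag in self.lines:
            self.lines.move_to_end(tag)   # mark as most recently used
            return True
        if len(self.lines) >= self.num_lines:
            self.lines.popitem(last=False)  # evict the least recently used line
        self.lines[tag] = None
        return False


cache = FullyAssociativeCache(num_lines=2, block_size=4)
results = [cache.access(a) for a in (0x0000, 0x0100, 0x0000, 0x0200, 0x0100)]
print(results)  # [False, False, True, False, False]
```

A two-line cache is used only to make evictions visible in a short trace; the blocks at 0x0000 and 0x0100 coexist until a third block forces one of them out.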

Advantages

* Fully associative cache structure provides us the flexibility of placing memory block in any of the cache lines and hence full utilization of the cache.

* The placement policy provides better cache hit rate.

* It offers the flexibility of utilizing a wide variety of replacement algorithms if a cache miss occurs.

Disadvantages

* The placement policy is slow as it takes time to iterate through all the lines.

* The placement policy is power hungry as it has to iterate over entire cache set to locate a block.

* The most expensive of all methods, due to the high cost of associative-comparison hardware.

Example

Consider a main memory of 16 kilobytes, which is organized as 4-byte blocks, and a fully associative cache of 256 bytes with a block size of 4 bytes. Because the main memory is 16kB, we need a minimum of 14 bits to uniquely represent a memory address. Since each cache block is of size 4 bytes, the total number of cache lines is 256/4, which equals 64.

The incoming address to the cache is divided into bits for offset and tag.

* ''Offset'' corresponds to the bits used to determine the byte to be accessed from the cache line. In the example, there are ''2 offset bits'', which are used to address the 4 bytes of the cache line.

* ''Tag'' corresponds to the remaining bits. This means there are 14 – 2 = ''12 tag bits'', which are stored in tag field to match the address on cache request.

Since any block of memory can be mapped to any cache line, the memory block can occupy one of the cache lines based on the replacement policy.

Set-associative cache

Set-associative cache is a trade-off between direct-mapped cache and fully associative cache. A set-associative cache can be imagined as a matrix. The cache is divided into ‘n’ sets and each set contains ‘m’ cache lines. A memory block is first mapped onto a set and then placed into any cache line of the set. The range of caches from direct-mapped to fully associative is a continuum of levels of set associativity. (A direct-mapped cache is one-way set-associative and a fully associative cache with ''m'' cache lines is ''m''-way set-associative.) Many processor caches in today's designs are either direct-mapped, two-way set-associative, or four-way set-associative.

To place a block in the cache

* The set is determined by the index bits derived from the address of the memory block.

* The memory block is placed in an available cache line in the set identified, and the tag is stored in the tag field associated with the line. If all the cache lines in the set are occupied, then the new data replaces the block identified through the replacement policy.

To locate a word in the cache

* The set is determined by the index bits derived from the address of the memory block.

* The tag bits are compared with the tags of all cache lines present in selected set. If the tag matches any of the cache lines, it is a cache hit and the appropriate line is returned. If the tag doesn't match any of the lines, then it is a cache miss and the data is requested from next level in the memory hierarchy.

Advantages

* The placement policy is a trade-off between direct-mapped and fully associative cache.

* It offers the flexibility of using replacement algorithms if a cache miss occurs.

Disadvantages

* The placement policy will not effectively use all the available cache lines in the cache and suffers from conflict misses.

Example

Consider a main memory of 16 kilobytes, which is organized as 4-byte blocks, and a 2-way set-associative cache of 256 bytes with a block size of 4 bytes. Because the main memory is 16kB, we need a minimum of 14 bits to uniquely represent a memory address.

Since each cache block is of size 4 bytes and the cache is 2-way set-associative, the total number of sets in the cache is 256/(4 * 2), which equals 32 sets.

The incoming address to the cache is divided into bits for Offset, Index and Tag.

* ''Offset'' corresponds to the bits used to determine the byte to be accessed from the cache line. Because the cache lines are 4 bytes long, there are ''2 offset bits''.

* ''Index'' corresponds to bits used to determine the set of the Cache. There are 32 sets in the cache, and because 2^5 = 32, there are ''5 index bits.''

* ''Tag'' corresponds to the remaining bits. This means there are 14 – (5+2) = ''7 bits'', which are stored in tag field to match the address on cache request.

Below are memory addresses and an explanation of which cache line on which set they map to:

# Address 0x0000 (tag - 0b000_0000, index – 0b0_0000, offset – 0b00) corresponds to block 0 of the memory and maps to the set 0 of the cache. The block occupies a cache line in set 0, determined by the replacement policy for the cache.

# Address 0x0004 (tag - 0b000_0000, index – 0b0_0001, offset – 0b00) corresponds to block 1 of the memory and maps to the set 1 of the cache. The block occupies a cache line in set 1, determined by the replacement policy for the cache.

# Address 0x00FF (tag – 0b000_0001, index – 0b1_1111, offset – 0b11) corresponds to block 63 of the memory and maps to the set 31 of the cache. The block occupies a cache line in set 31, determined by the replacement policy for the cache.

# Address 0x0100 (tag – 0b000_0010, index – 0b0_0000, offset – 0b00) corresponds to block 64 of the memory and maps to the set 0 of the cache. The block occupies a cache line in set 0, determined by the replacement policy for the cache.
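To see the effect of the second way, the sketch below simulates the 2-way configuration from this example (32 sets, 4-byte blocks). The class name `SetAssociativeCache` is hypothetical, and LRU is assumed as the replacement policy because the text does not fix one.

```python
class SetAssociativeCache:
    """Toy set-associative cache; LRU within each set is an assumption of this sketch."""

    def __init__(self, num_sets, ways, block_size):
        self.num_sets = num_sets
        self.ways = ways
        self.block_size = block_size
        # Each set holds its tags ordered from least to most recently used.
        self.sets = [[] for _ in range(num_sets)]

    def access(self, address):
        """Return True on a hit; on a miss, install the block and return False."""
        block = address // self.block_size
        index = block % self.num_sets   # index bits select the set
        tag = block // self.num_sets    # remaining high bits form the tag
        lines = self.sets[index]
        if tag in lines:
            lines.remove(tag)
            lines.append(tag)           # refresh the LRU position
            return True
        if len(lines) == self.ways:
            lines.pop(0)                # set is full: evict the least recently used tag
        lines.append(tag)
        return False


cache = SetAssociativeCache(num_sets=32, ways=2, block_size=4)
trace = (0x0000, 0x0100, 0x0000, 0x0100, 0x0080, 0x0000)
results = [cache.access(a) for a in trace]
print(results)  # [False, False, True, True, False, False]
```

Addresses 0x0000 and 0x0100 both map to set 0 but can occupy its two lines at the same time, so the repeated accesses hit. Address 0x0080 is a third block for set 0 and forces an eviction.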

Two-way skewed associative cache

Other schemes have been suggested, such as the ''skewed cache'', where the index for way 0 is direct, as above, but the index for way 1 is formed with a hash function. A good hash function has the property that addresses which conflict with the direct mapping tend not to conflict when mapped with the hash function, and so it is less likely that a program will suffer from an unexpectedly large number of conflict misses due to a pathological access pattern. The downside is extra latency from computing the hash function. Additionally, when it comes time to load a new line and evict an old line, it may be difficult to determine which existing line was least recently used, because the new line conflicts with data at different indexes in each way; LRU tracking for non-skewed caches is usually done on a per-set basis. Nevertheless, skewed-associative caches have major advantages over conventional set-associative ones.
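The idea can be illustrated with a deliberately simple hash. In the sketch below, way 0 uses the ordinary index bits while way 1 XORs them with the low tag bits; this particular function is an assumption made for illustration and is not the hash used by any specific skewed-cache design.

```python
INDEX_BITS = 5
NUM_SETS = 1 << INDEX_BITS


def way0_index(block):
    """Direct index: the low INDEX_BITS bits of the block number."""
    return block % NUM_SETS


def way1_index(block):
    """Hypothetical skewing hash: XOR the index bits with the low tag bits."""
    tag = block // NUM_SETS
    return (block ^ tag) % NUM_SETS


blocks = [0, 32, 64, 96]  # these blocks all collide in way 0
print([way0_index(b) for b in blocks])  # [0, 0, 0, 0]
print([way1_index(b) for b in blocks])  # [0, 1, 2, 3]
```

Blocks that compete for a single line in way 0 are spread over different lines in way 1, which is the property the paragraph above attributes to a good hash function.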

Pseudo-associative cache

A true set-associative cache tests all the possible ways simultaneously, using something like a content-addressable memory. A pseudo-associative cache tests each possible way one at a time. A hash-rehash cache and a column-associative cache are examples of a pseudo-associative cache.

In the common case of finding a hit in the first way tested, a pseudo-associative cache is as fast as a direct-mapped cache, but it has a much lower conflict miss rate than a direct-mapped cache, closer to the miss rate of a fully associative cache.
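A rough sketch of the one-at-a-time probing is given below. The rehash function (flipping the top index bit), the policy of moving the displaced block to the alternate slot, and the class name `PseudoAssociativeCache` are all assumptions of this example; each line stores the whole block number rather than a separate tag to keep the code short.

```python
class PseudoAssociativeCache:
    """Toy pseudo-associative cache that probes two candidate lines in sequence."""

    def __init__(self, index_bits, block_size):
        self.index_bits = index_bits
        self.block_size = block_size
        self.lines = [None] * (1 << index_bits)  # each line stores a block number

    def access(self, address):
        """Return the number of probes on a hit (1 or 2), or 0 on a miss."""
        block = address // self.block_size
        first = block % len(self.lines)
        second = first ^ (1 << (self.index_bits - 1))  # assumed rehash: flip top index bit
        if self.lines[first] == block:
            return 1  # as fast as a direct-mapped hit
        if self.lines[second] == block:
            return 2  # slower hit found on the second probe
        # Miss: keep the displaced block by moving it to the alternate line.
        if self.lines[first] is not None:
            self.lines[second] = self.lines[first]
        self.lines[first] = block
        return 0


cache = PseudoAssociativeCache(index_bits=6, block_size=4)
results = [cache.access(a) for a in (0x0000, 0x0100, 0x0100, 0x0000)]
print(results)  # [0, 0, 1, 2]
```

In a plain direct-mapped cache of the same size the last access would miss, because 0x0100 would have evicted 0x0000; here it hits on the second probe instead.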

See also

* Associativity

* Cache replacement policy

* Cache hierarchy

* Writing Policies

* Cache coloring
