Data lineage refers to the process of tracking how data is generated, transformed, transmitted and used across a system over time. It documents data's origins, transformations and movements, providing detailed visibility into its life cycle. This process simplifies the identification of errors in data analytics workflows by enabling users to trace issues back to their root causes.

Data lineage facilitates the ability to replay specific segments or inputs of the dataflow. This can be used in debugging or regenerating lost outputs. In database systems, this concept is closely related to data provenance, which involves maintaining records of inputs, entities, systems and processes that influence data.

Data provenance provides a historical record of data origins and transformations. It supports forensic activities such as data-dependency analysis, error/compromise detection, recovery, auditing and compliance analysis: "''Lineage'' is a simple type of ''why provenance''." (De, Soumyarupa (2012). Newt: an architecture for lineage-based replay and debugging in DISC systems. UC San Diego: b7355202. Retrieved from https://escholarship.org/uc/item/3170p7zn)

Data governance plays a critical role in managing metadata by establishing guidelines, strategies and policies. Enhancing data lineage with data quality measures and master data management adds business value. Although data lineage is typically represented through a graphical user interface (GUI), the methods for gathering and exposing metadata to this interface can vary. Based on the metadata collection approach, data lineage can be categorized into three types: those involving software packages for structured data, programming languages and big data systems.

Data lineage information includes technical metadata about data transformations. Enriched data lineage may include additional elements such as data quality test results, reference data, data models, business terminology, data stewardship information, program management details and enterprise systems associated with data points and transformations. Data lineage visualization tools often include masking features that allow users to focus on information relevant to specific use cases. To unify representations across disparate systems, metadata normalization or standardization may be required.

Representation of data lineage

Representation broadly depends on the scope of the metadata management and the reference point of interest. Backward data lineage shows the sources of the data and the intermediate data flow hops leading to the reference point, while forward data lineage shows the final destination's data points and the intermediate data flows leading to them. These views can be combined into end-to-end lineage for a reference point, which provides a complete audit trail of that data point of interest from its sources to its final destinations. As the number of data points or hops increases, such a representation becomes too complex to comprehend. Thus, a key feature of a data lineage view is the ability to simplify the view by temporarily masking unwanted peripheral data points. Tools with a masking feature make the view scalable and improve analysis for both technical and business users. Data lineage also enables companies to trace the sources of specific business data in order to track errors, implement changes in processes and implement system migrations, saving significant amounts of time and resources. Data lineage can improve efficiency in business intelligence (BI) processes.

Data lineage can be represented visually to show the flow and movement of data from its source to its destination, through the various changes and hops on its way through the enterprise environment. This includes how the data is transformed along the way, how the representation and parameters change and how the data splits or converges after each hop. A simple representation of data lineage can be shown with dots and lines, where dots represent data containers for data points, and the lines connecting them represent the transformations the data undergoes between the data containers.
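The dots-and-lines picture, together with the backward, forward and end-to-end views described earlier, can be sketched as a small directed graph. This is only an illustrative sketch: the `LineageGraph` class and the container names (`crm_export`, `customer_dim` and so on) are hypothetical and do not come from any particular lineage tool.

```python
from collections import defaultdict, deque


class LineageGraph:
    """Dots are data containers; lines are transformations between them."""

    def __init__(self):
        self.downstream = defaultdict(set)  # container -> containers it feeds
        self.upstream = defaultdict(set)    # container -> containers it is derived from

    def add_transformation(self, source, target):
        self.downstream[source].add(target)
        self.upstream[target].add(source)

    def _walk(self, start, edges):
        # Breadth-first traversal collecting every container reachable from start.
        seen, queue = set(), deque([start])
        while queue:
            node = queue.popleft()
            for nxt in edges[node]:
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append(nxt)
        return seen

    def backward(self, point):
        """Sources and intermediate hops leading to the reference point."""
        return self._walk(point, self.upstream)

    def forward(self, point):
        """Intermediate hops and destinations fed by the reference point."""
        return self._walk(point, self.downstream)

    def end_to_end(self, point):
        """Combined audit trail around the reference point."""
        return self.backward(point) | {point} | self.forward(point)


graph = LineageGraph()
graph.add_transformation("crm_export", "staging_customers")
graph.add_transformation("web_logs", "staging_customers")
graph.add_transformation("staging_customers", "customer_dim")
graph.add_transformation("customer_dim", "sales_report")

print(sorted(graph.backward("customer_dim")))
print(sorted(graph.forward("customer_dim")))
```

Masking peripheral data points would then amount to filtering the returned set before drawing it.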

Data lineage can be visualized at various levels based on the granularity of the view. At a very high level, data lineage is visualized as systems that the data interacts with before it reaches its destination. At its most granular, visualizations at the data point level can provide the details of the data point and its historical behavior, attribute properties and trends and data quality of the data passed through that specific data point in the data lineage.

The scope of the data lineage determines the volume of metadata required to represent it. Usually, the data governance and data management of an organization determine the scope of the data lineage based on their regulations, enterprise data management strategy, data impact, reporting attributes and critical data elements of the organization.

Rationale

Distributed systems like Google MapReduce, Microsoft Dryad, Apache Hadoop (an open-source project) and Google Pregel provide platforms for large-scale data processing to businesses and users. However, even with these systems, big data analytics can take several hours, days or weeks to run, simply due to the data volumes involved. For example, a ratings prediction algorithm for the Netflix Prize challenge took nearly 20 hours to execute on 50 cores, and a large-scale image processing task to estimate geographic information took 3 days to complete using 400 cores. "The Large Synoptic Survey Telescope is expected to generate terabytes of data every night and eventually store more than 50 petabytes, while in the bioinformatics sector, the 12 largest genome sequencing houses in the world now store petabytes of data apiece."

It is very difficult for a data scientist to trace an unknown or an unanticipated result.

Big data debugging

Big data analytics is the process of examining large data sets to uncover hidden patterns, unknown correlations, market trends, customer preferences and other useful business information. Machine learning, among other algorithms, is used to transform and analyze the data. Due to the large size of the data, there could be unknown features in the data.

The massive scale and unstructured nature of data, the complexity of these analytics pipelines, and long runtimes pose significant manageability and debugging challenges. Even a single error in these analytics can be extremely difficult to identify and remove. While one may debug them by re-running the entire analytics through a debugger for stepwise debugging, this can be expensive due to the amount of time and resources needed.

Auditing and data validation are other major problems due to the growing ease of access to relevant data sources for use in experiments, the sharing of data between scientific communities and the use of third-party data in business enterprises. (Ian Foster, Jens Vockler, Michael Wilde and Yong Zhao. Chimera: A Virtual Data System for Representing, Querying, and Automating Data Derivation. In 14th International Conference on Scientific and Statistical Database Management, July 2002; Benjamin H. Sigelman, Luiz André Barroso, Mike Burrows, Pat Stephenson, Manoj Plakal, Donald Beaver, Saul Jaspan and Chandan Shanbhag. Dapper, a large-scale distributed systems tracing infrastructure. Technical report, Google Inc, 2010; Peter Buneman, Sanjeev Khanna and Wang-Chiew Tan. Data provenance: Some basic issues. In Proceedings of the 20th Conference on Foundations of Software Technology and Theoretical Computer Science, FST TCS 2000, pages 87–93, London, UK, 2000. Springer-Verlag.) As such, more cost-efficient ways of analyzing data-intensive scalable computing (DISC) systems are crucial to their continued effective use.

Challenges in Big Data debugging

Massive scale

According to an EMC/IDC study, 2.8 ZB of data were created and replicated in 2012. The same study states that the digital universe will double every two years between 2012 and 2020, and that there will be approximately 5.2 TB of data for every person in 2020. Based on current technology, the storage of this much data will mean greater energy usage by data centers.
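The growth figures above can be turned into a rough back-of-the-envelope projection. The sketch below assumes that "double every two years" holds exactly from the 2012 baseline and that ZB and TB are decimal units; the variable names are illustrative, and the study's own projections may differ from what this simple compounding gives.

```python
ZB = 10**21  # zettabyte in bytes (decimal units, an assumption)
TB = 10**12  # terabyte in bytes (decimal units, an assumption)

created_2012 = 2.8 * ZB              # figure quoted from the study
doublings = (2020 - 2012) // 2       # one doubling every two years
projected_2020 = created_2012 * 2**doublings

# Head count implied by combining the projection with 5.2 TB per person.
implied_people = projected_2020 / (5.2 * TB)

print(f"doublings between 2012 and 2020: {doublings}")
print(f"projected volume in 2020: {projected_2020 / ZB:.1f} ZB")
print(f"implied population: {implied_people / 1e9:.1f} billion")
```

The implied population is an artifact of the exact-doubling assumption, so it should be read as a consistency check on the quoted figures rather than as a result from the study.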

Unstructured data

Unstructured data usually refers to information that doesn't reside in a traditional row-column database. Unstructured data files often include text and multimedia content, such as e-mail messages, word processing documents, videos, photos, audio files, presentations, web pages and many other kinds of business documents. While these types of files may have an internal structure, they are still considered "unstructured" because the data they contain doesn't fit neatly into a database. The amount of unstructured data in enterprises is growing many times faster than structured databases are growing. Big data can include both structured and unstructured data, but IDC estimates that 90 percent of big data is unstructured data.

The fundamental challenge of unstructured data sources is that they are difficult for non-technical business users and data analysts alike to unbox, understand and prepare for analytic use. Beyond issues of structure, the sheer volume of this type of data contributes to such difficulty. Because of this, current data mining techniques often leave out valuable information and make analyzing unstructured data laborious and expensive.

In today's competitive business environment, companies have to find and analyze the relevant data they need quickly. The challenge is going through the volumes of data and accessing the level of detail needed, all at a high speed. The challenge only grows as the degree of granularity increases. One possible solution is hardware. Some vendors are using increased memory and parallel processing to crunch large volumes of data quickly. Another method is putting data in-memory but using a grid computing approach, where many machines are used to solve a problem. Both approaches allow organizations to explore huge data volumes. Even with this level of sophisticated hardware and software, some large-scale image processing tasks take a few days to a few weeks. Debugging the data processing is extremely hard due to the long run times.

A third approach of advanced data discovery solutions combines self-service data prep with visual data discovery, enabling analysts to simultaneously prepare and visualize data side-by-side in an interactive analysis environment offered by newer companies, such as Trifacta, Alteryx and others.

Another method to track data lineage is to use spreadsheet programs such as Excel, which offer users cell-level lineage, or the ability to see which cells depend on one another. However, the structure of the transformation is lost. Similarly, ETL or mapping software provides transform-level lineage, yet this view typically doesn't display data and is too coarse-grained to distinguish between transforms that are logically independent (e.g. transforms that operate on distinct columns) and those that are dependent.

Big data platforms have a very complicated structure, in which data is distributed across many machines. Typically, jobs are mapped onto several machines and their results are later combined by reduce operations. Debugging a big data pipeline becomes very challenging due to the very nature of the system. It is not an easy task for the data scientist to figure out which machine's data has the outliers and unknown features that cause a particular algorithm to give unexpected results.

Proposed solution

Data provenance or data lineage can be used to make the debugging of a big data pipeline easier. This necessitates the collection of data about data transformations. The section below explains data provenance in more detail.

Data provenance

Scientific ''data provenance'' provides a historical record of the data and its origins. The provenance of data which is generated by complex transformations such as workflows is of considerable value to scientists. From it, one can ascertain the quality of the data based on its ancestral data and derivations, track back sources of errors, allow automated re-enactment of derivations to update data, and provide attribution of data sources. Provenance is also essential to the business domain, where it can be used to drill down to the source of data in a data warehouse, track the creation of intellectual property and provide an audit trail for regulatory purposes.

The use of data provenance is proposed in distributed systems to trace records through a dataflow, replay the dataflow on a subset of its original inputs and debug data flows. To do so, one needs to keep track of the set of inputs to each operator, which were used to derive each of its outputs. Although there are several forms of provenance, such as copy-provenance and how-provenance, the information we need is a simple form of why-provenance, or lineage, as defined by Cui et al. (Y. Cui and J. Widom. Lineage tracing for general data warehouse transformations. VLDB Journal, 12(1), 2003.)
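As a minimal sketch of this idea, an operator can record, for each output, the set of inputs that derived it, and that record can then drive a replay on just those inputs. The `TracedOperator` class, the sensor readings and the "largest mean is suspect" heuristic are all hypothetical, chosen only to make the example small; they are not taken from any of the cited systems.

```python
from collections import defaultdict


class TracedOperator:
    """Groups inputs by key, combines each group, and records why-provenance."""

    def __init__(self, name, key_fn, combine_fn):
        self.name = name
        self.key_fn = key_fn
        self.combine_fn = combine_fn
        self.lineage = {}  # output -> frozenset of inputs used to derive it

    def run(self, inputs):
        groups = defaultdict(list)
        for item in inputs:
            groups[self.key_fn(item)].append(item)
        outputs = []
        for key, members in groups.items():
            out = (key, self.combine_fn(members))
            self.lineage[out] = frozenset(members)  # inputs must be hashable
            outputs.append(out)
        return outputs

    def why(self, output):
        """Backward trace: the inputs that derived this output."""
        return self.lineage[output]


readings = [("s1", 20), ("s1", 22), ("s2", 21), ("s2", 950)]
mean_by_sensor = TracedOperator(
    "mean_by_sensor",
    key_fn=lambda r: r[0],
    combine_fn=lambda rs: sum(v for _, v in rs) / len(rs),
)

outputs = mean_by_sensor.run(readings)
suspect = max(outputs, key=lambda o: o[1])     # hypothetical "bad output" heuristic
culprits = mean_by_sensor.why(suspect)         # only the inputs behind that output
replayed = mean_by_sensor.run(sorted(culprits))  # replay on the subset only

print(suspect, sorted(culprits), replayed)
```

Replaying on the two culprit readings instead of the full input is what keeps debugging cheap when the original dataflow is large.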

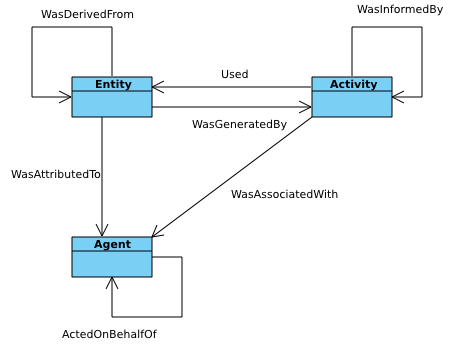

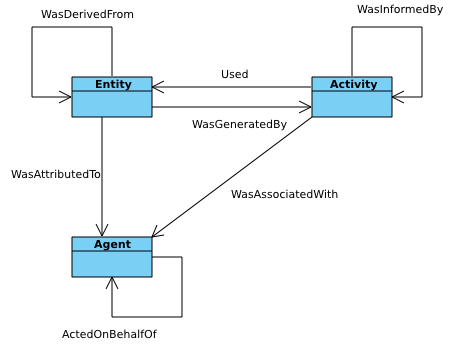

PROV Data Model

PROV is a W3C recommendation of 2013:

: ''Provenance is information about entities, activities and people involved in producing a piece of data or thing, which can be used to form assessments about its quality, reliability or trustworthiness. The PROV Family of Documents defines a model, corresponding serializations and other supporting definitions to enable the inter-operable interchange of provenance information in heterogeneous environments such as the Web.'' ("PROV-Overview, An Overview of the PROV Family of Documents")

: ''Provenance is defined as a record that describes the people, institutions, entities and activities involved in producing, influencing, or delivering a piece of data or something. In particular, the provenance of information is crucial in deciding whether information is to be trusted, how it should be integrated with other diverse information sources, and how to give credit to its originators when reusing it. In an open and inclusive environment such as the Web, where users find information that is often contradictory or questionable, provenance can help those users to make trust judgements.'' ("PROV-DM: The PROV Data Model")
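To make the vocabulary of entities, activities and people more concrete, the following sketch stores a few PROV-style statements and answers two simple questions about them. It is loosely modeled on PROV-DM's core types and on the relation names `used`, `wasGeneratedBy`, `wasAssociatedWith` and `wasDerivedFrom`; the `ProvRecord` class, its methods and the example identifiers are hypothetical, and the code is neither a conforming PROV implementation nor a PROV serialization. It also assumes derivations contain no cycles.

```python
from dataclasses import dataclass, field


@dataclass
class ProvRecord:
    """Hypothetical container for PROV-style statements (not a W3C API)."""
    entities: set = field(default_factory=set)
    activities: set = field(default_factory=set)
    agents: set = field(default_factory=set)
    relations: list = field(default_factory=list)  # (relation, subject, object)

    def used(self, activity, entity):
        self.activities.add(activity)
        self.entities.add(entity)
        self.relations.append(("used", activity, entity))

    def was_generated_by(self, entity, activity):
        self.entities.add(entity)
        self.activities.add(activity)
        self.relations.append(("wasGeneratedBy", entity, activity))

    def was_associated_with(self, activity, agent):
        self.activities.add(activity)
        self.agents.add(agent)
        self.relations.append(("wasAssociatedWith", activity, agent))

    def was_derived_from(self, derived, source):
        self.entities.update({derived, source})
        self.relations.append(("wasDerivedFrom", derived, source))

    def original_sources(self, entity):
        """Follow wasDerivedFrom links back to entities with no recorded source."""
        parents = [o for r, s, o in self.relations
                   if r == "wasDerivedFrom" and s == entity]
        if not parents:
            return {entity}
        return set().union(*(self.original_sources(p) for p in parents))

    def responsible_agents(self, entity):
        """Agents associated with the activities that generated the entity."""
        generating = {o for r, s, o in self.relations
                      if r == "wasGeneratedBy" and s == entity}
        return {o for r, s, o in self.relations
                if r == "wasAssociatedWith" and s in generating}


record = ProvRecord()
record.used("clean", "raw_survey")
record.was_generated_by("cleaned_survey", "clean")
record.was_associated_with("clean", "alice")
record.was_derived_from("cleaned_survey", "raw_survey")
record.used("summarise", "cleaned_survey")
record.was_generated_by("report", "summarise")
record.was_associated_with("summarise", "bob")
record.was_derived_from("report", "cleaned_survey")

print(record.original_sources("report"))
print(record.responsible_agents("report"))
```

The two queries correspond to the uses named in the quotation: deciding what a piece of information was built from, and giving credit to whoever produced it.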

Lineage capture

Intuitively, for an operator $T$ producing output $o$, lineage consists of triplets of form $\{I, T, o\}$, where $I$ is the set of inputs to $T$ used to derive $o$. A query that finds the inputs deriving an output is called a ''backward tracing query'', while one that finds the outputs produced by an input is called a ''forward tracing query'' (Robert Ikeda, Hyunjung Park and Jennifer Widom. Provenance for generalized map and reduce workflows. In Proc. of CIDR, January 2011). Backward tracing is useful for debugging, while forward tracing is useful for tracking error propagation. Tracing queries also form the basis for replaying an original dataflow. However, to efficiently use lineage in a DISC system, we need to be able to capture lineage at multiple levels (or granularities) of operators and data, capture accurate lineage for DISC processing constructs and be able to trace through multiple dataflow stages efficiently.

A DISC system consists of several levels of operators and data, and different use cases of lineage can dictate the level at which lineage needs to be captured. Lineage can be captured at the level of the job, using files and giving lineage tuples of form $\{IF_i, \mathit{MRJob}, OF_i\}$, where $IF_i$ and $OF_i$ are input and output files. Lineage can also be captured at the level of each task, using records and giving, for example, lineage tuples of form $\{(k_{rr}, v_{rr}), \mathit{map}, (k_m, v_m)\}$, where $(k_{rr}, v_{rr})$ is an input key-value record and $(k_m, v_m)$ is a key-value record emitted by the map task. The first form of lineage is called coarse-grain lineage, while the second form is called fine-grain lineage. Integrating lineage across different granularities enables users to ask questions such as "Which file read by a MapReduce job produced this particular output record?" and can be useful in debugging across different operators and data granularities within a dataflow.
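A small sketch can show how lineage triplets support backward and forward tracing queries across several dataflow stages, at both granularities. The `LineageStore` class and the record, file and operator identifiers below are hypothetical placeholders; a real DISC system would capture these triplets from the running job rather than from hand-written calls.

```python
from typing import NamedTuple


class Lineage(NamedTuple):
    inputs: frozenset   # the set of inputs used to derive the output
    operator: str       # the operator that produced the output
    output: str         # the derived output


class LineageStore:
    def __init__(self):
        self.triplets = []

    def record(self, inputs, operator, output):
        self.triplets.append(Lineage(frozenset(inputs), operator, output))

    def backward(self, output):
        """Backward tracing query: everything upstream of the output."""
        found, stack = set(), [output]
        while stack:
            current = stack.pop()
            for t in self.triplets:
                if t.output == current:
                    for item in t.inputs - found:
                        found.add(item)
                        stack.append(item)
        return found

    def forward(self, item):
        """Forward tracing query: everything derived from the item."""
        found, stack = set(), [item]
        while stack:
            current = stack.pop()
            for t in self.triplets:
                if current in t.inputs and t.output not in found:
                    found.add(t.output)
                    stack.append(t.output)
        return found


# Fine-grain lineage: one triplet per record, per task.
fine = LineageStore()
fine.record({"r1"}, "map", "m1")
fine.record({"r2"}, "map", "m2")
fine.record({"r3"}, "map", "m3")
fine.record({"m1", "m2"}, "reduce", "out_a")
fine.record({"m3"}, "reduce", "out_b")

# Coarse-grain lineage: one triplet per job, over files.
coarse = LineageStore()
coarse.record({"input.txt"}, "wordcount_job", "part-00000")

print(sorted(fine.backward("out_a")))
print(sorted(fine.forward("r3")))
print(sorted(coarse.backward("part-00000")))
```

The coarse store needs a single triplet where the fine store needs five, which illustrates the capture-cost difference between the two granularities.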

To capture end-to-end lineage in a DISC system, we use the Ibis model, which introduces the notion of containment hierarchies for operators and data. Specifically, Ibis proposes that an operator can be contained within another; such a relationship between two operators is called ''operator containment''. Operator containment implies that the contained (or child) operator performs a part of the logical operation of the containing (or parent) operator. For example, a MapReduce task is contained in a job. Similar containment relationships exist for data as well, known as ''data containment''. Data containment implies that the contained data is a subset of the containing data (superset).
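As a rough illustration of how containment connects granularities, the sketch below lifts a fine-grain (task and record level) association to a coarse-grain (job and file level) one by following child-to-parent mappings. The dictionaries, identifiers and function names are assumptions made for this example and are not part of the Ibis model itself.

```python
# Hypothetical containment hierarchies, stored as child -> parent mappings.
operator_parent = {"task-0": "job-1", "task-1": "job-1"}
data_parent = {
    "rec-a": "input-1.txt",
    "rec-b": "input-2.txt",
    "out-rec-x": "part-0000",
}

# One fine-grain association: (input records, task, output record).
fine = (frozenset({"rec-a", "rec-b"}), "task-0", "out-rec-x")


def lift(assoc):
    """Map a task/record association to its containing job and files."""
    inputs, operator, output = assoc
    return (
        frozenset(data_parent[r] for r in inputs),
        operator_parent[operator],
        data_parent[output],
    )


def source_files(output_record, fine_lineage):
    """Answer: which files contain the records that produced this output record?"""
    files = set()
    for inputs, _operator, output in fine_lineage:
        if output == output_record:
            files.update(data_parent[r] for r in inputs)
    return files


print(lift(fine))
print(source_files("out-rec-x", [fine]))
```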

Eager versus lazy lineage

Data lineage systems can be categorized as either eager or lazy. Eager collection systems capture the entire lineage of the data flow at run time. The kind of lineage they capture may be coarse-grain or fine-grain, but they do not require any further computations on the data flow after its execution. Lazy lineage collection typically captures only coarse-grain lineage at run time. These systems incur low capture overheads due to the small amount of lineage they capture. However, to answer fine-grain tracing queries, they must replay the data flow on all (or a large part) of its input and collect fine-grain lineage during the replay. This approach is suitable for forensic systems, where a user wants to debug an observed bad output. Eager fine-grain lineage collection systems incur higher capture overheads than lazy collection systems. However, they enable sophisticated replay and debugging.

Actors

An actor is an entity that transforms data; it may be a Dryad vertex, individual map and reduce operators, a MapReduce job, or an entire dataflow pipeline. Actors act as black boxes, and the inputs and outputs of an actor are tapped to capture lineage in the form of associations, where an association is a triplet {i, T, o} that relates an input i with an output o for an actor T. The instrumentation thus captures lineage in a dataflow one actor at a time, piecing it into a set of associations for each actor. The system developer needs to capture the data an actor reads (from other actors) and the data an actor writes (to other actors). For example, a developer can treat the Hadoop Job Tracker as an actor by recording the set of files read and written by each job. (Dionysios Logothetis, Soumyarupa De and Kenneth Yocum. 2013. Scalable lineage capture for debugging DISC analytics. In Proceedings of the 4th annual Symposium on Cloud Computing (SOCC '13). ACM, New York, NY, USA, Article 17, 15 pages.)

Associations

An association is a combination of the inputs, the outputs and the operation itself. The operation is represented as a black box, also known as the actor. Associations describe the transformations that are applied to the data and are stored in association tables, with one association table for each unique actor. An association has the form {i, T, o}, where i is the set of inputs to the actor T and o is the set of outputs produced by the actor. Associations are the basic units of data lineage. Individual associations are later combined to construct the entire history of transformations that were applied to the data.

Architecture

Big data systems increase capacity by adding new hardware or software entities into the distributed system. This process is called ''horizontal scaling''. The distributed system acts as a single entity at the logical level even though it comprises multiple hardware and software entities, and it should continue to maintain this property after horizontal scaling. An important advantage of horizontal scalability is that capacity can be increased on the fly. A further advantage is that horizontal scaling can be done using commodity hardware.

The horizontal scaling of big data systems should be taken into account when designing the architecture of the lineage store. This is essential because the lineage store itself should be able to scale in parallel with the big data system. The number of associations and the amount of storage required to store lineage increase with the size and capacity of the system. The architecture of big data systems makes a single lineage store inappropriate and impossible to scale. The immediate solution to this problem is to distribute the lineage store itself.

The best-case scenario is to use a local lineage store for every machine in the distributed system network. This allows the lineage store to scale horizontally as well. In this design, the lineage of the data transformations applied to the data on a particular machine is stored in the local lineage store of that machine. The lineage store typically stores association tables. Each actor is represented by its own association table. The rows are the associations themselves, and the columns represent inputs and outputs. This design solves two problems. First, it allows horizontal scaling of the lineage store. Second, it avoids additional network latency: if a single centralized lineage store were used, lineage information would have to be carried over the network to that store.
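A minimal in-memory sketch of this design follows. The `LocalLineageStore` class, the `traced` wrapper and the machine names are hypothetical; they only illustrate one association table per actor on each machine, with an actor treated as a black box whose inputs and outputs are tapped.

```python
from collections import defaultdict


class LocalLineageStore:
    """Hypothetical per-machine store holding one association table per actor."""

    def __init__(self, machine):
        self.machine = machine
        # actor name -> rows; each row has an inputs column and an outputs column
        self.tables = defaultdict(list)

    def record(self, actor, inputs, outputs):
        self.tables[actor].append((tuple(inputs), tuple(outputs)))


def traced(store, actor, fn):
    """Wrap a black-box actor so that every call records an association locally."""
    def wrapper(inputs):
        outputs = fn(inputs)
        store.record(actor, inputs, outputs)
        return outputs
    return wrapper


def merge_tables(stores, actor):
    """Combine one actor's tables from several machines, keyed on the actor name."""
    rows = []
    for store in stores:
        rows.extend(store.tables.get(actor, []))
    return rows


# Two machines each run an instance of the same actor and log lineage locally.
node_a = LocalLineageStore("node-a")
node_b = LocalLineageStore("node-b")
upper_a = traced(node_a, "upper", lambda xs: [x.upper() for x in xs])
upper_b = traced(node_b, "upper", lambda xs: [x.upper() for x in xs])

upper_a(["a", "b"])
upper_b(["c"])
print(merge_tables([node_a, node_b], "upper"))
```

Writes stay on the machine that ran the actor, so capture adds no network traffic; the cost is deferred to query time, when the per-machine tables have to be brought together.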

Data flow reconstruction

The information stored in terms of associations needs to be combined to obtain the data flow of a particular job. In a distributed system a job is broken down into multiple tasks, and one or more instances run a particular task. The results produced on these individual machines are later combined to finish the job. Tasks running on different machines perform multiple transformations on the data in the machine. All the transformations applied to the data on a machine are stored in the local lineage store of that machine. This information needs to be combined to obtain the lineage of the entire job. The lineage of the entire job should help the data scientist understand the data flow of the job, which they can then use to debug the big data pipeline. The data flow is reconstructed in three stages.

Association tables

The first stage of the data flow reconstruction is the computation of the association tables. An association table exists for each actor in each local lineage store. The entire association table for an actor can be computed by combining these individual association tables. This is generally done using a series of equality joins based on the actors themselves. In a few scenarios the tables might also be joined using inputs as the key. Indexes can be used to improve the efficiency of a join. The joined tables need to be stored on a single instance or machine for further processing. There are multiple schemes for picking the machine on which a join is computed, the simplest being to choose the one with the minimum CPU load. Space constraints should also be kept in mind when picking the instance where the join happens.

Association graph

The second step in data flow reconstruction is computing an association graph from the lineage information. The graph represents the steps in the data flow. The actors act as vertices and the associations act as edges. Each actor T is linked to its upstream and downstream actors in the data flow. An upstream actor of T is one that produced the input of T, while a downstream actor is one that consumes the output of T. Containment relationships are always considered while creating the links. The graph consists of three types of links or edges.

Explicitly specified links

The simplest link is an explicitly specified link between two actors. These links are explicitly specified in the code of a machine learning algorithm. When an actor is aware of its exact upstream or downstream actor, it can communicate this information to the lineage API. This information is later used to link these actors during the tracing query. For example, in the MapReduce architecture, each map instance knows the exact record reader instance whose output it consumes.

Logically inferred links

Developers can attach data flow archetypes to each logical actor. A data flow archetype explains how the child types of an actor type arrange themselves in a data flow. With the help of this information, one can infer a link between each actor of a source type and a destination type. For example, in the MapReduce architecture, the map actor type is the source for reduce, and reduce is the destination for map. The system infers this from the data flow archetypes and duly links map instances with reduce instances. However, there may be several MapReduce jobs in the data flow, and linking all map instances with all reduce instances can create false links. To prevent this, such links are restricted to actor instances contained within a common actor instance of a containing (or parent) actor type. Thus, map and reduce instances are only linked to each other if they belong to the same job.
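The restriction to a common parent can be sketched as follows. The archetype is represented here as a list of (source type, destination type) pairs and each actor instance carries the job that contains it; both representations, and all identifiers, are assumptions made for this example.

```python
from itertools import product

# Hypothetical archetype: map instances feed reduce instances.
archetype = [("map", "reduce")]

# Hypothetical actor instances with their containing (parent) job.
instances = [
    {"id": "map-1", "type": "map", "job": "job-A"},
    {"id": "map-2", "type": "map", "job": "job-A"},
    {"id": "reduce-1", "type": "reduce", "job": "job-A"},
    {"id": "map-3", "type": "map", "job": "job-B"},
    {"id": "reduce-2", "type": "reduce", "job": "job-B"},
]


def infer_links(instances, archetype):
    """Link source-type to destination-type instances that share a parent job."""
    links = set()
    for src_type, dst_type in archetype:
        sources = [a for a in instances if a["type"] == src_type]
        dests = [a for a in instances if a["type"] == dst_type]
        for src, dst in product(sources, dests):
            # Without this check, map-1 would be falsely linked to reduce-2.
            if src["job"] == dst["job"]:
                links.add((src["id"], dst["id"]))
    return links


print(sorted(infer_links(instances, archetype)))
```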

Implicit links through data set sharing

In distributed systems, there are sometimes implicit links, which are not specified during execution. For example, an implicit link exists between an actor that wrote to a file and another actor that read from it. Such links connect actors which use a common data set for execution. The data set is the output of the first actor and the input of the actor that follows it.

Topological sorting

The final step in the data flow reconstruction is the topological sorting of the association graph. The directed graph created in the previous step is topologically sorted to obtain the order in which the actors have modified the data. This record of modifications by the different actors involved is used to track the data flow of the big data pipeline or task.
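The sketch below builds a small association graph from implicit links through data set sharing and then sorts it topologically using `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later). The `io_log` structure and the actor and file names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical record of which data sets each actor read and wrote.
io_log = {
    "report": {"reads": {"totals.csv", "clean.csv"}, "writes": {"report.txt"}},
    "extract": {"reads": {"raw.csv"}, "writes": {"clean.csv"}},
    "aggregate": {"reads": {"clean.csv"}, "writes": {"totals.csv"}},
}


def implicit_links(io_log):
    """Map each actor to its upstream actors: those that wrote a data set it reads."""
    upstream = {actor: set() for actor in io_log}
    for writer, w in io_log.items():
        for reader, r in io_log.items():
            if writer != reader and w["writes"] & r["reads"]:
                upstream[reader].add(writer)
    return upstream


graph = implicit_links(io_log)

# TopologicalSorter expects a mapping from each node to its predecessors.
order = list(TopologicalSorter(graph).static_order())
print(order)
```

`TopologicalSorter` raises `graphlib.CycleError` if the graph contains a cycle, which in this setting would indicate incorrectly inferred links rather than a valid data flow.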

Tracing and replay

This is the most crucial step in big data debugging. The captured lineage is combined and processed to obtain the data flow of the pipeline. The data flow helps the data scientist or developer to look deeply into the actors and their transformations. This step allows the data scientist to identify the part of the algorithm that is generating the unexpected output. A big data pipeline can go wrong in two broad ways. The first is the presence of a suspicious actor in the dataflow. The second is the existence of outliers in the data.

The first case can be debugged by tracing the dataflow. By using lineage and data-flow information together, a data scientist can work out how the inputs are converted into outputs. During this process, actors that behave unexpectedly can be caught. These actors can either be removed from the data flow or be augmented by new actors to change the dataflow. The improved dataflow can then be replayed to test its validity. Debugging faulty actors includes recursively performing coarse-grain replay on actors in the dataflow, which can be expensive in resources for long dataflows. Another approach is to manually inspect lineage logs to find anomalies, which can be tedious and time-consuming across several stages of a dataflow. Furthermore, these approaches work only when the data scientist can discover bad outputs.

To debug analytics without known bad outputs, the data scientist needs to analyze the dataflow for suspicious behavior in general. Often, however, a user may not know the expected normal behavior and cannot specify predicates. This section describes a debugging methodology for retrospectively analyzing lineage to identify faulty actors in a multi-stage dataflow. We believe that sudden changes in an actor's behavior, such as its average selectivity, processing rate or output size, are characteristic of an anomaly. Lineage can reflect such changes in actor behavior over time and across different actor instances. Thus, mining lineage to identify such changes can be useful in debugging faulty actors in a dataflow.
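One simple way to look for such changes is to compare the selectivity (outputs written per input read) of each instance of an actor against the median across instances. The sketch below does this under stated assumptions: the counts are invented, and the median-based rule and the `tolerance` parameter are illustrative choices rather than a method prescribed by any lineage system.

```python
from statistics import median

# Hypothetical counts mined from lineage: (actor instance, inputs read, outputs written).
counts = [
    ("map-0", 1000, 510),
    ("map-1", 1000, 495),
    ("map-2", 1000, 20),
    ("map-3", 1000, 505),
]


def suspicious_instances(counts, tolerance=0.5):
    """Flag instances whose selectivity deviates from the median by more than
    `tolerance` times the median."""
    selectivity = {name: out / inp for name, inp, out in counts if inp > 0}
    typical = median(selectivity.values())
    return sorted(
        name
        for name, value in selectivity.items()
        if abs(value - typical) > tolerance * typical
    )


print(suspicious_instances(counts))
```

Here `map-2` emits far fewer outputs per input than its peers, so it is the instance a data scientist would examine first. The same comparison could be applied to processing rate or output size.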

The second problem, the existence of outliers, can be identified by running the dataflow stepwise and looking at the transformed outputs. The data scientist finds a subset of outputs that are not in accordance with the rest of the outputs. The inputs that cause these bad outputs are outliers in the data. This problem can be solved by removing the set of outliers from the data and replaying the entire dataflow. It can also be solved by modifying the machine learning algorithm by adding, removing or moving actors in the dataflow. The changes in the dataflow are successful if the replayed dataflow does not produce bad outputs.
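The remove-and-replay loop can be sketched with a toy dataflow. Everything here is a stand-in: `run_dataflow` doubles each value and records which input produced each output, and `is_bad` is a hypothetical predicate that the data scientist would supply.

```python
def run_dataflow(inputs):
    """Toy dataflow: doubles each value and records (input, output) lineage pairs."""
    outputs = [x * 2 for x in inputs]
    lineage = list(zip(inputs, outputs))
    return outputs, lineage


def is_bad(output):
    """Hypothetical predicate marking outputs that disagree with the rest."""
    return output < 0


inputs = [3, -7, 5, -1]
outputs, lineage = run_dataflow(inputs)

# Backward tracing: find the inputs that produced the bad outputs.
outliers = {inp for inp, out in lineage if is_bad(out)}

# Remove the outliers and replay the dataflow on the remaining inputs.
cleaned = [x for x in inputs if x not in outliers]
replayed, _ = run_dataflow(cleaned)

# The change is successful if the replay produces no bad outputs.
print(outliers, replayed, not any(is_bad(o) for o in replayed))
```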

Challenges

Although the use of data lineage methodologies represents a novel approach to the debugging of big data pipelines, the process is not straightforward. A number of challenges must be addressed, including the scalability of the lineage store, the fault tolerance of the lineage store, the accurate capture of lineage for black-box operators, and numerous other considerations. These challenges must be carefully evaluated in order to develop a realistic design for data lineage capture, taking into account the inherent trade-offs between them.

Scalability

DISC systems are primarily batch processing systems designed for high throughput. They execute several jobs per analytics, with several tasks per job. The overall number of operators executing at any time in a cluster can range from hundreds to thousands depending on the cluster size. Lineage capture for these systems must be able to scale to both large volumes of data and numerous operators to avoid being a bottleneck for the DISC analytics.

Fault tolerance

Lineage capture systems must also be fault tolerant to avoid rerunning data flows to capture lineage. At the same time, they must accommodate failures in the DISC system. To do so, they must be able to identify a failed DISC task and avoid storing duplicate copies of lineage, which can arise from the partial lineage generated by the failed task and the lineage produced by the restarted task. A lineage system should also be able to gracefully handle multiple instances of local lineage systems going down. This can be achieved by storing replicas of lineage associations on multiple machines. A replica can act as a backup in the event of the original copy being lost.

Black-box operators

Lineage systems for DISC dataflows must be able to capture accurate lineage across black-box operators to enable fine-grain debugging. Current approaches to this include Prober, which seeks to find the minimal set of inputs that can produce a specified output for a black-box operator by replaying the dataflow several times to deduce the minimal set, and dynamic slicing to capture lineage for NoSQL operators through binary rewriting to compute dynamic slices. Although they produce highly accurate lineage, such techniques can incur significant time overheads for capture or tracing, and it may be preferable to instead trade some accuracy for better performance. Thus, there is a need for a lineage collection system for DISC dataflows that can capture lineage from arbitrary operators with reasonable accuracy, and without significant overheads in capture or tracing.

Efficient tracing

Tracing is essential for debugging, during which a user can issue multiple tracing queries. Thus, it is important that tracing has fast turnaround times. The approach of Ikeda et al. can perform efficient backward tracing queries for MapReduce dataflows, but it is not generic to different DISC systems and does not perform efficient forward queries. Lipstick, a lineage system for Pig, is able to perform both backward and forward tracing, but it is specific to Pig and SQL operators and can only perform coarse-grain tracing for black-box operators. Thus, there is a need for a lineage system that enables efficient forward and backward tracing for generic DISC systems and dataflows with black-box operators.

Sophisticated replay

Replaying only specific inputs or portions of a dataflow is crucial for efficient debugging and simulating what-if scenarios. Ikeda et al. present a methodology for a lineage-based refresh, which selectively replays updated inputs to recompute affected outputs. (Robert Ikeda, Semih Salihoglu and Jennifer Widom. Provenance-based refresh in data-oriented workflows. In Proceedings of the 20th ACM international conference on Information and Knowledge Management, CIKM '11, pages 1659–1668, New York, NY, USA, 2011. ACM.) This is useful during debugging for re-computing outputs when a bad input has been fixed. However, sometimes a user may want to remove the bad input and replay the lineage of outputs previously affected by the error to produce error-free outputs. We call this an exclusive replay. Another use of replay in debugging involves replaying bad inputs for stepwise debugging (called selective replay). Current approaches to using lineage in DISC systems do not address these. Thus, there is a need for a lineage system that can perform both exclusive and selective replays to address different debugging needs.

Anomaly detection

One of the primary debugging concerns in DISC systems is identifying faulty operators. In long dataflows with several hundreds of operators or tasks, manual inspection can be tedious and prohibitive. Even if lineage is used to narrow the subset of operators to examine, the lineage of a single output can still span several operators. There is a need for an inexpensive automated debugging system, which can substantially narrow the set of potentially faulty operators, with reasonable accuracy, to minimize the amount of manual examination required.

See also

* Directed acyclic graph

* Persistent staging area, a staging area that tracks the whole change history of a source table or query
