|

Data Provenance

Data lineage includes the data origin, what happens to it, and where it moves over time. Data lineage gives visibility while greatly simplifying the ability to trace errors back to the root cause in a data analytics process. It also enables replaying specific portions or inputs of the data flow for step-wise debugging or regenerating lost output. Database systems use such information, called data provenance, to address similar validation and debugging challenges.De, Soumyarupa. (2012). Newt : an architecture for lineage based replay and debugging in DISC systems. UC San Diego: b7355202. Retrieved from: https://escholarship.org/uc/item/3170p7zn Data provenance refers to records of the inputs, entities, systems, and processes that influence data of interest, providing a historical record of the data and its origins. The generated evidence supports forensic activities such as data-dependency analysis, error/compromise detection and recovery, auditing, and compliance analysis. "''Li ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Data

In the pursuit of knowledge, data (; ) is a collection of discrete values that convey information, describing quantity, quality, fact, statistics, other basic units of meaning, or simply sequences of symbols that may be further interpreted. A datum is an individual value in a collection of data. Data is usually organized into structures such as tables that provide additional context and meaning, and which may themselves be used as data in larger structures. Data may be used as variables in a computational process. Data may represent abstract ideas or concrete measurements. Data is commonly used in scientific research, economics, and in virtually every other form of human organizational activity. Examples of data sets include price indices (such as consumer price index), unemployment rates, literacy rates, and census data. In this context, data represents the raw facts and figures which can be used in such a manner in order to capture the useful information out of it. ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Data Governance

Data governance is a term used on both a macro and a micro level. The former is a political concept and forms part of international relations and Internet governance; the latter is a data management concept and forms part of corporate data governance. Macro level On the macro level, data governance refers to the governing of cross-border data flows by countries, and hence is more precisely called ''international data governance''. This new field consists of "norms, principles and rules governing various types of data." Micro level Here the focus is on an individual company. Here data governance is a data management concept concerning the capability that enables an organization to ensure that high data quality exists throughout the complete lifecycle of the data, and data controls are implemented that support business objectives. The key focus areas of data governance include availability, usability, consistency, data integrity and data security, standard compliance and incl ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Machine Learning

Machine learning (ML) is a field of inquiry devoted to understanding and building methods that 'learn', that is, methods that leverage data to improve performance on some set of tasks. It is seen as a part of artificial intelligence. Machine learning algorithms build a model based on sample data, known as training data, in order to make predictions or decisions without being explicitly programmed to do so. Machine learning algorithms are used in a wide variety of applications, such as in medicine, email filtering, speech recognition, agriculture, and computer vision, where it is difficult or unfeasible to develop conventional algorithms to perform the needed tasks.Hu, J.; Niu, H.; Carrasco, J.; Lennox, B.; Arvin, F.,Voronoi-Based Multi-Robot Autonomous Exploration in Unknown Environments via Deep Reinforcement Learning IEEE Transactions on Vehicular Technology, 2020. A subset of machine learning is closely related to computational statistics, which focuses on making predicti ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Big Data

Though used sometimes loosely partly because of a lack of formal definition, the interpretation that seems to best describe Big data is the one associated with large body of information that we could not comprehend when used only in smaller amounts. In it primary definition though, Big data refers to data sets that are too large or complex to be dealt with by traditional data-processing application software. Data with many fields (rows) offer greater statistical power, while data with higher complexity (more attributes or columns) may lead to a higher false discovery rate. Big data analysis challenges include capturing data, data storage, data analysis, search, sharing, transfer, visualization, querying, updating, information privacy, and data source. Big data was originally associated with three key concepts: ''volume'', ''variety'', and ''velocity''. The analysis of big data presents challenges in sampling, and thus previously allowing for only observations and sampling. ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Map Reduce

MapReduce is a programming model and an associated implementation for processing and generating big data sets with a parallel, distributed algorithm on a cluster. A MapReduce program is composed of a ''map'' procedure, which performs filtering and sorting (such as sorting students by first name into queues, one queue for each name), and a ''reduce'' method, which performs a summary operation (such as counting the number of students in each queue, yielding name frequencies). The "MapReduce System" (also called "infrastructure" or "framework") orchestrates the processing by marshalling the distributed servers, running the various tasks in parallel, managing all communications and data transfers between the various parts of the system, and providing for redundancy and fault tolerance. The model is a specialization of the ''split-apply-combine'' strategy for data analysis. It is inspired by the map and reduce functions commonly used in functional programming,"Our abstraction is in ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Enterprise System

Enterprise software, also known as enterprise application software (EAS), is computer software used to satisfy the needs of an organization rather than individual users. Such organizations include businesses, schools, interest-based user groups, clubs, charities, and governments. Enterprise software is an integral part of a (computer-based) information system; a collection of such software is called an enterprise system. These systems handle a number of operations in an organization to enhance the business and management reporting tasks. The systems must process the information at a relatively high speed and can be deployed across a variety of networks. Services provided by enterprise software are typically business-oriented tools. As enterprises have similar departments and systems in common, enterprise software is often available as a suite of customizable programs. Generally, the complexity of these tools requires specialist capabilities and specific knowledge. Enterprise com ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Program Management

Program management, is the process of managing several related projects, often with the intention of improving an organization's performance. It is distinct from ''project'' management. In practice and in its aims, program management is often closely related to systems engineering, industrial engineering, change management, and business transformation. In the defense sector, it is the dominant approach to managing large projects. Because major defense programs entail working with contractors, it is also called acquisition management, indicating that the government buyer ''acquires'' goods and services by means of contractors. The program manager has oversight of the purpose and status of the projects in a program and can use this oversight to support project-level activity to ensure the program goals are met by providing a decision-making capacity that cannot be achieved at project level or by providing the project manager with a program perspective when required, or as a so ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Data Steward

A data steward is an oversight or data governance role within an organization, and is responsible for ensuring the quality and fitness for purpose of the organization's data assets, including the metadata for those data assets. A data steward may share some responsibilities with a data custodian, such as the awareness, accessibility, release, appropriate use, security and management of data. A data steward would also participate in the development and implementation of data assets. A data steward may seek to improve the quality and fitness for purpose of other data assets their organization depends upon but is not responsible for. Data stewards have a specialist role that utilizes an organization's data governance processes, policies, guidelines and responsibilities for administering an organizations' entire data in compliance with policy and/or regulatory obligations. The overall objective of a data steward is the data quality of the data assets, datasets, data records and data e ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Glossary Of Business And Management Terms

The following terms are in everyday use in financial regions, such as commercial business and the management of large organisations such as corporations. Noun phrases Verb phrases See also * Buzzwords * Corporate communication * Corporate jargon * Doublespeak * Euphemism * Obfuscation References * {{DEFAULTSORT:Business terms Terms Business Business is the practice of making one's living or making money by producing or Trade, buying and selling Product (business), products (such as goods and Service (economics), services). It is also "any activity or enterprise entered into for pr ... Wikipedia glossaries using tables ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Data Model

A data model is an abstract model that organizes elements of data and standardizes how they relate to one another and to the properties of real-world entities. For instance, a data model may specify that the data element representing a car be composed of a number of other elements which, in turn, represent the color and size of the car and define its owner. The term data model can refer to two distinct but closely related concepts. Sometimes it refers to an abstract formalization of the objects and relationships found in a particular application domain: for example the customers, products, and orders found in a manufacturing organization. At other times it refers to the set of concepts used in defining such formalizations: for example concepts such as entities, attributes, relations, or tables. So the "data model" of a banking application may be defined using the entity-relationship "data model". This article uses the term in both senses. A data model explicitly determines the ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Big Data

Though used sometimes loosely partly because of a lack of formal definition, the interpretation that seems to best describe Big data is the one associated with large body of information that we could not comprehend when used only in smaller amounts. In it primary definition though, Big data refers to data sets that are too large or complex to be dealt with by traditional data-processing application software. Data with many fields (rows) offer greater statistical power, while data with higher complexity (more attributes or columns) may lead to a higher false discovery rate. Big data analysis challenges include capturing data, data storage, data analysis, search, sharing, transfer, visualization, querying, updating, information privacy, and data source. Big data was originally associated with three key concepts: ''volume'', ''variety'', and ''velocity''. The analysis of big data presents challenges in sampling, and thus previously allowing for only observations and sampling. ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

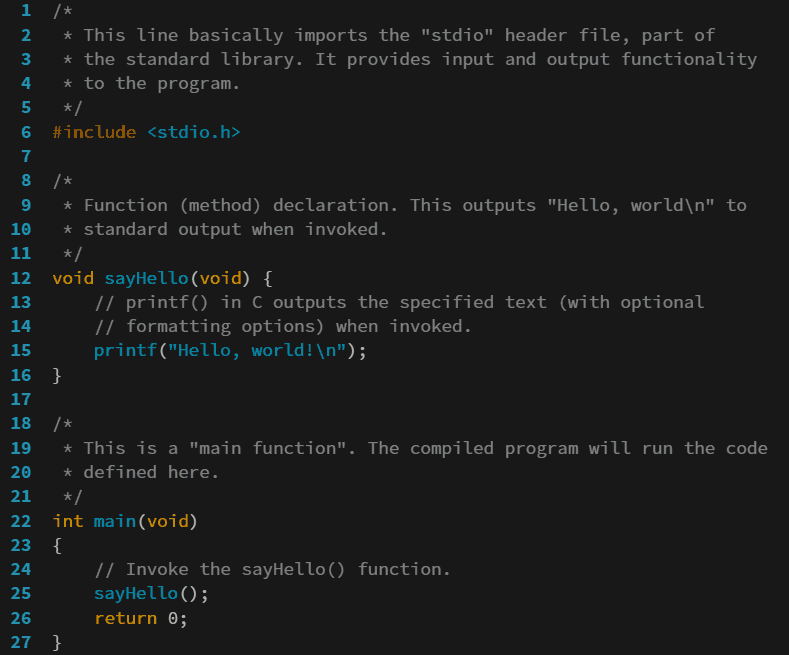

Programming Language

A programming language is a system of notation for writing computer programs. Most programming languages are text-based formal languages, but they may also be graphical. They are a kind of computer language. The description of a programming language is usually split into the two components of syntax (form) and semantics (meaning), which are usually defined by a formal language. Some languages are defined by a specification document (for example, the C programming language is specified by an ISO Standard) while other languages (such as Perl) have a dominant implementation that is treated as a reference. Some languages have both, with the basic language defined by a standard and extensions taken from the dominant implementation being common. Programming language theory is the subfield of computer science that studies the design, implementation, analysis, characterization, and classification of programming languages. Definitions There are many considerations when defini ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |