Correlation (statistics)

In statistics, correlation or dependence is any statistical relationship, whether causal or not, between two random variables or bivariate data. Although in the broadest sense "correlation" may indicate any type of association, in statistics it usually refers to the degree to which a pair of variables are ''linearly'' related.

Familiar examples of dependent phenomena include the correlation between the height of parents and their offspring, and the correlation between the price of a good and the quantity that consumers are willing to purchase, as depicted in the so-called demand curve.

Correlations are useful because they can indicate a predictive relationship that can be exploited in practice. For example, an electrical utility may produce less power on a mild day based on the correlation between electricity demand and weather. In this example, there is a causal relationship, because extreme weather causes people to use more electricity for heating or cooling. However, in general, the presence of a correlation is not sufficient to infer the presence of a causal relationship (i.e., correlation does not imply causation).

Formally, random variables are ''dependent'' if they do not satisfy a mathematical property of probabilistic independence. In informal parlance, ''correlation'' is synonymous with ''dependence''. However, when used in a technical sense, correlation refers to any of several specific types of mathematical operations between the tested variables and their respective expected values. Essentially, correlation is the measure of how two or more variables are related to one another. There are several correlation coefficients, often denoted $\rho$ or $r$, measuring the degree of correlation. The most common of these is the ''Pearson correlation coefficient'', which is sensitive only to a linear relationship between two variables (which may be present even when one variable is a nonlinear function of the other). Other correlation coefficients – such as ''Spearman's rank correlation'' – have been developed to be more robust than Pearson's, that is, more sensitive to nonlinear relationships. Mutual information can also be applied to measure dependence between two variables.
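The difference between Pearson's and Spearman's coefficients can be illustrated with a relationship that is monotonic but far from linear. The following is a minimal sketch using only the Python standard library; the helper names `pearson`, `ranks`, and `spearman` and the sample data are illustrative assumptions, and the ranking helper assumes there are no tied values.

```python
import math

def pearson(xs, ys):
    """Pearson's r from centered sums (equal-length, non-constant inputs assumed)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

def ranks(values):
    """Ranks 1..n in ascending order; this sketch assumes no ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, index in enumerate(order, start=1):
        result[index] = rank
    return result

def spearman(xs, ys):
    """Spearman's rho computed as Pearson's r of the ranks."""
    return pearson(ranks(xs), ranks(ys))

xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
ys = [math.exp(x) for x in xs]  # strictly increasing, strongly nonlinear

r = pearson(xs, ys)     # noticeably below 1: the relationship is not linear
rho = spearman(xs, ys)  # 1 up to rounding: the relationship is perfectly monotonic
print(f"Pearson r = {r:.3f}, Spearman rho = {rho:.3f}")
```

Because the ranks of both variables coincide, the rank-based coefficient reports a perfect relationship while Pearson's coefficient does not.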

Pearson's product-moment coefficient

The most familiar measure of dependence between two quantities is the Pearson product-moment correlation coefficient (PPMCC), or "Pearson's correlation coefficient", commonly called simply "the correlation coefficient". It is obtained by dividing the covariance of the two variables by the product of their standard deviations, that is, by the square root of the product of their variances. Karl Pearson developed the coefficient from a similar but slightly different idea by Francis Galton.

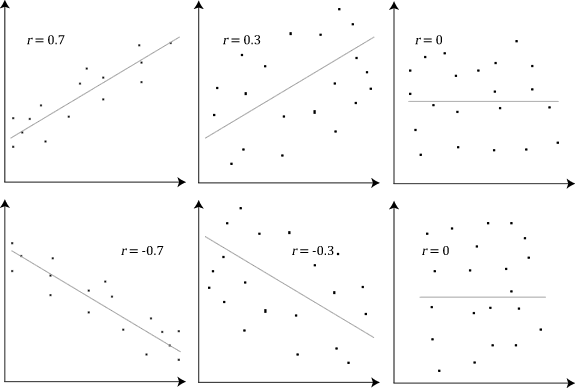

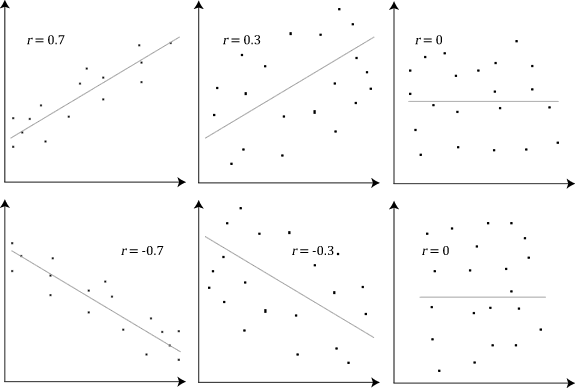

Pearson's correlation coefficient is closely related to the line of best fit through a dataset of two variables: its magnitude indicates how closely the data points cluster around that line, and its sign indicates the direction of the relationship. A positive coefficient means that the variables tend to increase together, while a negative coefficient means that one tends to decrease as the other increases.

The population correlation coefficient $\rho_{X,Y}$ between two random variables $X$ and $Y$ with expected values $\mu_X$ and $\mu_Y$ and standard deviations $\sigma_X$ and $\sigma_Y$ is defined as:

$$\rho_{X,Y} = \operatorname{corr}(X,Y) = \frac{\operatorname{cov}(X,Y)}{\sigma_X \sigma_Y} = \frac{\operatorname{E}[(X-\mu_X)(Y-\mu_Y)]}{\sigma_X \sigma_Y}$$

where $\operatorname{E}$ is the expected value operator, $\operatorname{cov}$ means covariance, and $\operatorname{corr}$ is a widely used alternative notation for the correlation coefficient. The Pearson correlation is defined only if both standard deviations are finite and positive. An alternative formula purely in terms of moments is:

$$\rho_{X,Y} = \frac{\operatorname{E}(XY) - \operatorname{E}(X)\operatorname{E}(Y)}{\sqrt{\operatorname{E}(X^2) - \operatorname{E}(X)^2}\,\sqrt{\operatorname{E}(Y^2) - \operatorname{E}(Y)^2}}$$
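As a rough numerical check, the covariance-based definition and the moment-based form can be evaluated on a small discrete joint distribution. The sketch below is only an illustration: the joint probabilities in `pmf` and the helper `expect` are assumptions made up for this example, not part of the source.

```python
import math

# Hypothetical joint distribution of (X, Y): maps each outcome to its probability.
pmf = {(0, 0): 0.4, (0, 1): 0.1, (1, 0): 0.1, (1, 1): 0.4}

def expect(f):
    """Expected value E[f(X, Y)] under the joint distribution above."""
    return sum(p * f(x, y) for (x, y), p in pmf.items())

mu_x = expect(lambda x, y: x)
mu_y = expect(lambda x, y: y)
sigma_x = math.sqrt(expect(lambda x, y: (x - mu_x) ** 2))
sigma_y = math.sqrt(expect(lambda x, y: (y - mu_y) ** 2))

# Definition: covariance divided by the product of the standard deviations.
cov_xy = expect(lambda x, y: (x - mu_x) * (y - mu_y))
rho_definition = cov_xy / (sigma_x * sigma_y)

# Moment form: uses only E[XY], E[X], E[Y], E[X^2], and E[Y^2].
e_xy = expect(lambda x, y: x * y)
e_x2 = expect(lambda x, y: x * x)
e_y2 = expect(lambda x, y: y * y)
rho_moments = (e_xy - mu_x * mu_y) / (
    math.sqrt(e_x2 - mu_x ** 2) * math.sqrt(e_y2 - mu_y ** 2)
)

print(rho_definition, rho_moments)  # the two forms agree up to rounding
```

For this particular distribution both means are 0.5, both variances are 0.25, and the covariance is 0.15, so both forms give a correlation of 0.6.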

Correlation and independence

It is a corollary of the Cauchy–Schwarz inequality that the absolute value of the Pearson correlation coefficient is not bigger than 1. Therefore, the value of a correlation coefficient ranges between −1 and +1. The correlation coefficient is +1 in the case of a perfect direct (increasing) linear relationship (correlation), −1 in the case of a perfect inverse (decreasing) linear relationship (anti-correlation), and some value in the open interval $(-1, 1)$ in all other cases, indicating the degree of linear dependence between the variables. As it approaches zero there is less of a relationship (closer to uncorrelated). The closer the coefficient is to either −1 or 1, the stronger the correlation between the variables.
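The bound can be made explicit with a short derivation. The centered variables $U$ and $V$ below are notation introduced only for this sketch. Writing $U = X - \mu_X$ and $V = Y - \mu_Y$, the Cauchy–Schwarz inequality for expectations gives

$$\left|\operatorname{cov}(X,Y)\right|^2 = \left|\operatorname{E}[UV]\right|^2 \le \operatorname{E}[U^2]\,\operatorname{E}[V^2] = \sigma_X^2\,\sigma_Y^2 ,$$

and dividing by $\sigma_X^2 \sigma_Y^2 > 0$ and taking square roots yields

$$\left|\rho_{X,Y}\right| = \frac{\left|\operatorname{cov}(X,Y)\right|}{\sigma_X \sigma_Y} \le 1 .$$

Equality in the Cauchy–Schwarz step holds exactly when $V = aU$ almost surely for some constant $a$, which corresponds to $Y$ being a linear function of $X$; the sign of $a$ determines whether the coefficient is +1 or −1.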

If the variables are independent, Pearson's correlation coefficient is 0, but the converse is not true because the correlation coefficient detects only linear dependencies between two variables.

For example, suppose the random variable $X$ is symmetrically distributed about zero, and $Y = X^2$. Then $Y$ is completely determined by $X$, so that $X$ and $Y$ are perfectly dependent, but their correlation is zero; they are uncorrelated. However, in the special case when $X$ and $Y$ are jointly normal, uncorrelatedness is equivalent to independence.
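The symmetric example can be checked exactly on a small discrete distribution. In this sketch the choice of a uniform distribution on five integer points is an assumption made purely for illustration; exact fractions are used so that the zero covariance is not obscured by rounding.

```python
from fractions import Fraction

# Assumed distribution: X is uniform on five points symmetric about zero, and Y = X**2.
support = [-2, -1, 0, 1, 2]
p = Fraction(1, len(support))  # P(X = x) for every x in the support

e_x = sum(p * x for x in support)             # E[X]
e_y = sum(p * x ** 2 for x in support)        # E[Y] = E[X^2]
e_xy = sum(p * x * x ** 2 for x in support)   # E[XY] = E[X^3]
cov_xy = e_xy - e_x * e_y                     # zero, so the correlation is zero

# Dependence check: knowing X changes the distribution of Y.
p_y4 = sum(p for x in support if x ** 2 == 4)  # P(Y = 4)
p_x2_and_y4 = p                                # P(X = 2, Y = 4): only the outcome X = 2
p_y4_given_x2 = p_x2_and_y4 / p                # P(Y = 4 | X = 2)

print(cov_xy, p_y4, p_y4_given_x2)
```

The covariance vanishes because the odd moments of a symmetric distribution are zero, yet the conditional probability of $Y = 4$ given $X = 2$ differs from the unconditional one, so the variables are not independent.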

Even though uncorrelated data does not necessarily imply independence, independence can be checked through mutual information: two random variables are independent if and only if their mutual information is 0.
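For discrete variables with a known joint distribution, the mutual information can be computed directly from its definition. The sketch below assumes the joint distribution is given as a dictionary; the function name `mutual_information` and both example distributions are illustrative. Estimating mutual information from sampled data is a harder problem that this example does not address.

```python
import math
from collections import defaultdict

def mutual_information(joint):
    """Mutual information in nats of a discrete joint pmf given as {(x, y): p}."""
    px, py = defaultdict(float), defaultdict(float)
    for (x, y), p in joint.items():
        px[x] += p  # marginal distribution of X
        py[y] += p  # marginal distribution of Y
    return sum(
        p * math.log(p / (px[x] * py[y]))
        for (x, y), p in joint.items()
        if p > 0
    )

# X uniform on {-1, 0, 1} with Y = X**2: uncorrelated, yet dependent.
dependent = {(x, x * x): 1 / 3 for x in (-1, 0, 1)}

# Two independent fair bits: the joint pmf is the product of the marginals.
independent = {(x, y): 0.25 for x in (0, 1) for y in (0, 1)}

mi_dependent = mutual_information(dependent)      # strictly positive
mi_independent = mutual_information(independent)  # zero
print(mi_dependent, mi_independent)
```

In the dependent case $Y$ is a function of $X$, so the mutual information equals the entropy of $Y$, which is positive even though the correlation is zero.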

Sample correlation coefficient

Given a series of $n$ measurements of the pair $(X_i, Y_i)$ indexed by $i = 1, \ldots, n$, the ''sample correlation coefficient'' can be used to estimate the population Pearson correlation $\rho_{X,Y}$ between $X$ and $Y$. The sample correlation coefficient is defined as:

$$r_{xy} = \frac{\sum_{i=1}^n (x_i - \bar{x})(y_i - \bar{y})}{(n-1)\, s_x s_y} = \frac{\sum_{i=1}^n (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^n (x_i - \bar{x})^2 \, \sum_{i=1}^n (y_i - \bar{y})^2}}$$

where $\bar{x}$ and $\bar{y}$ are the sample means of $X$ and $Y$, and $s_x$ and $s_y$ are the corrected sample standard deviations of $X$ and $Y$.
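A direct translation of this definition, using the sample means and the corrected (n − 1) sample standard deviations, might look as follows. This is a minimal sketch using Python's standard `statistics` module; the function name `sample_correlation` and the data are made up for illustration.

```python
import statistics

def sample_correlation(xs, ys):
    """Sample Pearson correlation from means and corrected standard deviations."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length with at least 2 points")
    x_bar, y_bar = statistics.mean(xs), statistics.mean(ys)
    s_x, s_y = statistics.stdev(xs), statistics.stdev(ys)  # divide by n - 1
    cross = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    return cross / ((n - 1) * s_x * s_y)

# Illustrative measurements (not taken from the source).
xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]

r_xy = sample_correlation(xs, ys)
print(f"r_xy = {r_xy:.4f}")
```

For these values the centered cross-product sum is 6 and the centered sums of squares are 10 and 6, so the coefficient equals 6 divided by the square root of 60.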

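Equivalent expressions for $r_{xy}$ are:

$$r_{xy} = \frac{\sum x_i y_i - n \bar{x} \bar{y}}{n\, s'_x s'_y} = \frac{n \sum x_i y_i - \sum x_i \sum y_i}{\sqrt{n \sum x_i^2 - \left(\sum x_i\right)^2}\, \sqrt{n \sum y_i^2 - \left(\sum y_i\right)^2}}$$

where $s'_x$ and $s'_y$ are the ''uncorrected'' sample standard deviations of $X$ and $Y$.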
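An expression written purely in terms of running sums allows the coefficient to be accumulated in a single pass over the data, without first computing the means. The sketch below illustrates that idea; the function name `correlation_from_sums` and the data are assumptions. Sum-based formulas of this kind can lose precision through cancellation when the values are large relative to their spread, so this is an illustration of the algebra, not a recommendation for numerical work.

```python
import math

def correlation_from_sums(pairs):
    """Pearson's r from running sums of x, y, x*x, y*y, and x*y (single pass)."""
    n = 0
    sum_x = sum_y = sum_xx = sum_yy = sum_xy = 0.0
    for x, y in pairs:
        n += 1
        sum_x += x
        sum_y += y
        sum_xx += x * x
        sum_yy += y * y
        sum_xy += x * y
    numerator = n * sum_xy - sum_x * sum_y
    denominator = math.sqrt(n * sum_xx - sum_x ** 2) * math.sqrt(
        n * sum_yy - sum_y ** 2
    )
    return numerator / denominator

# Illustrative measurements (not taken from the source).
pairs = [(1, 2), (2, 4), (3, 5), (4, 4), (5, 5)]
r_sums = correlation_from_sums(pairs)
print(f"r = {r_sums:.4f}")
```

Here the numerator is 5 · 66 − 15 · 20 = 30 and the denominator is the square root of 50 · 30, which gives the same value as the centered definition applied to the same data.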

If $x$ and $y$ are results of measurements that contain measurement error, the realistic limits on the correlation coefficient are not −1 to +1 but a smaller range. For the case of a linear model with a single independent variable, the coefficient of determination (R squared) is the square of $r_{xy}$, Pearson's product-moment coefficient.
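The relationship between R squared and the correlation coefficient can be checked numerically for an ordinary least-squares line with one predictor and an intercept. This is a minimal sketch with made-up data; the variable names are illustrative assumptions.

```python
import math

# Illustrative measurements (not taken from the source).
xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]
n = len(xs)

mean_x, mean_y = sum(xs) / n, sum(ys) / n
sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
sxx = sum((x - mean_x) ** 2 for x in xs)
syy = sum((y - mean_y) ** 2 for y in ys)

# Ordinary least-squares fit of y = intercept + slope * x.
slope = sxy / sxx
intercept = mean_y - slope * mean_x

# Coefficient of determination: 1 - (residual sum of squares / total sum of squares).
ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
r_squared = 1 - ss_res / syy

# Pearson's sample correlation coefficient for the same data.
r = sxy / math.sqrt(sxx * syy)

print(f"R^2 = {r_squared:.4f}, r^2 = {r ** 2:.4f}")
```

For this data the fitted line is y = 2.2 + 0.6x, the residual sum of squares is 2.4 against a total of 6, and both quantities come out to 0.6.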

Example

Consider the