Weighted Averages

The weighted arithmetic mean is similar to an ordinary arithmetic mean (the most common type of average), except that instead of each data point contributing equally to the final average, some data points contribute more than others. The notion of weighted mean plays a role in descriptive statistics and also occurs in a more general form in several other areas of mathematics. If all the weights are equal, then the weighted mean is the same as the arithmetic mean. While weighted means generally behave similarly to arithmetic means, they do have a few counterintuitive properties, as captured for instance in Simpson's paradox.

Examples

Basic example

Given two school classes, one with 20 students and one with 30 students, suppose the mean test grade for the morning class is 80 and the mean for the afternoon class is 90. The unweighted mean of the two means is 85. However, this does not account for the difference in number ...
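The basic example can be checked numerically. The following is a minimal sketch, assuming the morning class is the one with 20 students and the afternoon class the one with 30 (the excerpt does not say which is which); the function name `weighted_mean` is chosen here for illustration.

```python
def weighted_mean(values, weights):
    """Return sum(w * v) / sum(w) for paired values and weights."""
    total_weight = sum(weights)
    if total_weight == 0:
        raise ValueError("weights must not sum to zero")
    return sum(w * v for v, w in zip(values, weights)) / total_weight


class_means = [80, 90]   # morning and afternoon class means from the text
class_sizes = [20, 30]   # assumption: morning has 20 students, afternoon has 30

# Unweighted mean of the two class means: each class counts equally.
unweighted = sum(class_means) / len(class_means)

# Weighted mean: each class mean counts in proportion to its number of students.
weighted = weighted_mean(class_means, class_sizes)

print(unweighted, weighted)
```

Under that assumption the weighted mean is (20 × 80 + 30 × 90) / 50 = 86, slightly above the unweighted 85 because the larger class has the higher mean.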

Arithmetic Mean

In mathematics and statistics, the arithmetic mean or arithmetic average, or just the mean or the average (when the context is clear), is the sum of a collection of numbers divided by the count of numbers in the collection. The collection is often a set of results of an experiment or an observational study, or frequently a set of results from a survey. The term "arithmetic mean" is preferred in some contexts in mathematics and statistics, because it helps distinguish it from other means, such as the geometric mean and the harmonic mean. In addition to mathematics and statistics, the arithmetic mean is used frequently in many diverse fields such as economics, anthropology and history, and it is used in almost every academic field to some extent. For example, per capita income is the arithmetic average income of a nation's population. While the arithmetic mean is often used to report central tendencies, it is not a robust statistic, meaning that it is greatly influe ...

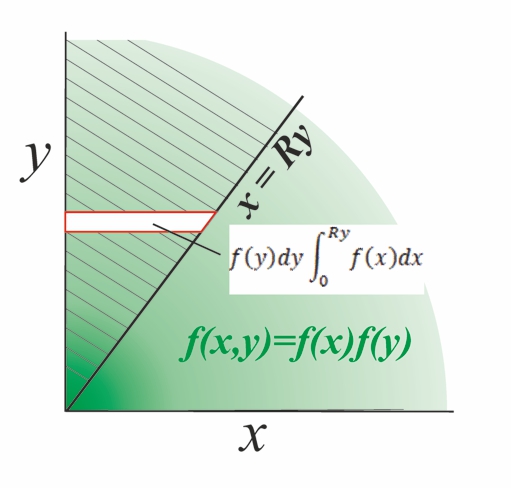

Independent And Identically Distributed Random Variables

In probability theory and statistics, a collection of random variables is independent and identically distributed if each random variable has the same probability distribution as the others and all are mutually independent. This property is usually abbreviated as i.i.d., iid, or IID. IID was first defined in statistics and finds application in different fields such as data mining and signal processing.

Introduction

In statistics, we commonly deal with random samples. A random sample can be thought of as a set of objects that are chosen randomly. Or, more formally, it’s “a sequence of independent, identically distributed (IID) random variables”. In other words, the terms "random sample" and "IID" are basically one and the same. In statistics, we usually say “random sample,” but in probability it’s more common to say “IID.”

* Identically Distributed means that there are no overall trends: the distribution doesn’t fluctuate and all items in t ...

Ratio Distribution

A ratio distribution (also known as a quotient distribution) is a probability distribution constructed as the distribution of the ratio of random variables having two other known distributions. Given two (usually independent) random variables X and Y, the distribution of the random variable Z that is formed as the ratio Z = X/Y is a ratio distribution. An example is the Cauchy distribution (also called the normal ratio distribution), which comes about as the ratio of two normally distributed variables with zero mean. Two other distributions often used in test statistics are also ratio distributions: the t-distribution arises from a Gaussian random variable divided by an independent chi-distributed random variable, while the F-distribution originates from the ratio of two independent chi-squared distributed random variables. More general ratio distributions have been considered in the literature. Often the ratio distributions are heavy- ...

Ratio Estimator

The ratio estimator is a statistical estimator for the ratio of the means of two random variables. Ratio estimates are biased, and corrections must be made when they are used in experimental or survey work. Ratio estimates are asymmetrical, so symmetrical tests such as the t test should not be used to generate confidence intervals. The bias is of the order O(1/n) (see big O notation), so as the sample size (n) increases, the bias will asymptotically approach 0. Therefore, the estimator is approximately unbiased for large sample sizes.

Definition

Assume there are two characteristics, x and y, that can be observed for each sampled element in the data set. The ratio R is : R = \bar{y} / \bar{x} where \bar{y} and \bar{x} are the sample means of the two characteristics. The ratio estimate of a value of the y variate (\theta_y) is : \theta_y = R \theta_x where \theta_x is the corresponding value of the x variate. \theta_y is known to be asymptotically normally distributed. Scott AJ, Wu CFJ (198 ...
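The definition above can be sketched in a few lines. This is an illustrative sketch only: the paired observations and the value `theta_x` are hypothetical numbers invented for the example, the function name `ratio_estimate` is not from the source, and no bias correction is applied.

```python
from statistics import fmean


def ratio_estimate(x, y):
    """Return R = mean(y) / mean(x) for paired observations x and y."""
    if len(x) != len(y) or len(x) == 0:
        raise ValueError("x and y must be non-empty and of equal length")
    mean_x = fmean(x)
    if mean_x == 0:
        raise ZeroDivisionError("mean of x is zero; the ratio is undefined")
    return fmean(y) / mean_x


# Hypothetical paired sample (not from the source).
x_sample = [10.0, 20.0, 30.0]
y_sample = [12.0, 18.0, 33.0]

R = ratio_estimate(x_sample, y_sample)  # mean(y) = 21, mean(x) = 20

# Hypothetical known value of the x variate; the ratio estimate of the
# corresponding y value is theta_y = R * theta_x.
theta_x = 200.0
theta_y = R * theta_x

print(R, theta_y)
```

Because the estimator is biased to order 1/n, a small sample like this one is where a correction would matter most; the sketch leaves that out.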

Estimand

An estimand is a quantity that is to be estimated in a statistical analysis. The term is used to more clearly distinguish the target of inference from the method used to obtain an approximation of this target (i.e., the estimator) and the specific value obtained from a given method and dataset (i.e., the estimate). For instance, a normally distributed random variable X has two defining parameters, its mean \mu and variance \sigma^2. Suppose a variance estimator, s^2 = \sum_{i=1}^{n} \left( x_i - \bar{x} \right)^2 / (n-1), yields an estimate of 7 for a given data set x; then s^2 is called an estimator of \sigma^2, and \sigma^2 is called the estimand.

Definition

In relation to an estimator, an estimand is the outcome of different treatments of interest. It can formally be thought of as any quantity that is to be estimated in any type of experiment.

Overview

An estimand is closely linked to the purpose or objective of an analysis. It describes what is to be estimated based on the questio ...
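To make the estimator, estimate and estimand distinction concrete, here is a small sketch of the sample variance with the n - 1 denominator. The data set is hypothetical, chosen here only so that the estimate comes out to 7; it is not claimed to be the data set used in the original example.

```python
from statistics import fmean


def sample_variance(data):
    """Estimator s^2: sum of squared deviations from the mean, divided by n - 1."""
    n = len(data)
    if n < 2:
        raise ValueError("at least two observations are required")
    mean = fmean(data)
    return sum((x - mean) ** 2 for x in data) / (n - 1)


# Hypothetical data set (an assumption for illustration).
data = [2, 3, 7]

# The function is the estimator, the returned number is the estimate,
# and the unknown population variance sigma^2 is the estimand.
estimate = sample_variance(data)
print(estimate)
```

With these numbers the mean is 4 and the squared deviations sum to 14, so the estimate is 14 / 2 = 7.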

Horvitz–Thompson Estimator

In statistics, the Horvitz–Thompson estimator, named after Daniel G. Horvitz and Donovan J. Thompson, is a method for estimating the total and mean of a pseudo-population in a stratified sample. Inverse probability weighting is applied to account for different proportions of observations within strata in a target population. The Horvitz–Thompson estimator is frequently applied in survey analyses and can be used to account for missing data, as well as many sources of unequal selection probabilities.

The method

Formally, let Y_i, i = 1, 2, \ldots, n be an independent sample from n of N ≥ n distinct strata with a common mean \mu. Suppose further that \pi_i is the inclusion probability that a randomly sampled individual in a superpopulation belongs to the i-th stratum. The Hansen and Hurwitz (1943) estimator of the total is given by: : \hat{Y}_{HT} = \sum_{i=1}^n \pi_i^{-1} Y_i, and the Horvitz–Thompson estimate of the mean is given by: : \hat{\mu}_{HT} = N^{-1}\hat{Y}_{HT} = N^{-1}\sum_{i=1}^n ...
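The inverse-probability weighting described above can be sketched as follows. The observations, inclusion probabilities and population size are hypothetical values made up for the example, and the function names are illustrative rather than taken from any library.

```python
def horvitz_thompson_total(y, pi):
    """Estimate a total as the sum of Y_i / pi_i (inverse probability weighting)."""
    if len(y) != len(pi):
        raise ValueError("y and pi must have the same length")
    if any(not (0 < p <= 1) for p in pi):
        raise ValueError("inclusion probabilities must lie in (0, 1]")
    return sum(yi / p for yi, p in zip(y, pi))


def horvitz_thompson_mean(y, pi, population_size):
    """Estimate the mean as the estimated total divided by N."""
    return horvitz_thompson_total(y, pi) / population_size


# Hypothetical sample: observed values and their inclusion probabilities.
y = [10.0, 20.0, 30.0]
pi = [0.5, 0.25, 0.5]
N = 10  # hypothetical population size

total_hat = horvitz_thompson_total(y, pi)   # 10/0.5 + 20/0.25 + 30/0.5
mean_hat = horvitz_thompson_mean(y, pi, N)

print(total_hat, mean_hat)
```

An observation with a small inclusion probability stands in for many unsampled units, which is why the middle value, sampled with probability 0.25, contributes the most to the estimated total.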

Probability-proportional-to-size Sampling

In survey methodology, probability-proportional-to-size (pps) sampling is a sampling process where each element of the population (of size N) has some (independent) chance p_i of being selected into the sample when performing one draw. This p_i is proportional to some known quantity x_i, so that p_i = \frac{x_i}{\sum_{i=1}^N x_i}. One case in which this occurs, as developed by Hansen and Hurwitz in 1943 (Hansen, Morris H., and William N. Hurwitz. "On the theory of sampling from finite populations." The Annals of Mathematical Statistics 14.4 (1943): 333-362), is when we have several clusters of units, each with a different (known upfront) number of units; each cluster can then be selected with a probability that is proportional to the number of units inside it (Cochran, W. G. (1977). Sampling Techniques (3rd ed.). Nashville, TN: John Wiley & Sons). So, for example, if we have 3 clusters with 10, 20 and 30 units each, then the chance of selecting the first cluster will be 1/6, the second would be 1/3, and the ...
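The three-cluster example can be reproduced directly from the proportionality rule. This is a minimal sketch: the function name `pps_probabilities` is illustrative, exact fractions are used so the results print as 1/6, 1/3 and 1/2, and the single draw at the end uses the standard library's `random.choices` with the sizes as weights.

```python
import random
from fractions import Fraction


def pps_probabilities(sizes):
    """Return p_i = x_i / sum(x) for each size x_i, as exact fractions."""
    total = sum(sizes)
    if total <= 0 or any(s < 0 for s in sizes):
        raise ValueError("sizes must be non-negative with a positive sum")
    return [Fraction(s, total) for s in sizes]


cluster_sizes = [10, 20, 30]  # the three clusters from the example
probs = pps_probabilities(cluster_sizes)
print([str(p) for p in probs])  # selection chance of each cluster in one draw

# One draw of a cluster index with probability proportional to size.
rng = random.Random(0)  # seeded only to make the sketch repeatable
chosen = rng.choices(range(len(cluster_sizes)), weights=cluster_sizes, k=1)[0]
print(chosen)
```

The probabilities sum to 1 by construction, since each size is divided by the total of all sizes.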

Sampling Design

Sampling may refer to:

* Sampling (signal processing), converting a continuous signal into a discrete signal
* Sampling (graphics), converting continuous colors into discrete color components
* Sampling (music), the reuse of a sound recording in another recording
** Sampler (musical instrument), an electronic musical instrument used to record and play back samples
* Sampling (statistics), selection of observations to acquire some knowledge of a statistical population
* Sampling (case studies), selection of cases for single or multiple case studies
* Sampling (audit), application of audit procedures to less than 100% of population to be audited
* Sampling (medicine), gathering of matter from the body to aid in the process of a medical diagnosis and/or evaluation of an indication for treatment, further medical tests or other procedures
* Sampling (occupational hygiene), detection of hazardous materials in the workplace
* Sampling (for testing or analysis), taking a representative portion of ...

Cluster Sampling

In statistics, cluster sampling is a sampling plan used when mutually homogeneous yet internally heterogeneous groupings are evident in a statistical population. It is often used in marketing research. In this sampling plan, the total population is divided into these groups (known as clusters) and a simple random sample of the groups is selected. The elements in each cluster are then sampled. If all elements in each sampled cluster are sampled, then this is referred to as a "one-stage" cluster sampling plan. If a simple random subsample of elements is selected within each of these groups, this is referred to as a "two-stage" cluster sampling plan. A common motivation for cluster sampling is to reduce the total number of interviews and costs given the desired accuracy. For a fixed sample size, the expected random error is smaller when most of the variation in the population is present internally within the groups, and not between the groups.

Cluster elements

The population with ...

Poisson Sampling

In survey methodology, Poisson sampling (sometimes denoted as PO sampling) is a sampling process where each element of the population is subjected to an independent Bernoulli trial which determines whether the element becomes part of the sample (Ghosh, Dhiren, and Andrew Vogt. "Sampling methods related to Bernoulli and Poisson Sampling." Proceedings of the Joint Statistical Meetings. American Statistical Association, Alexandria, VA, 2002). Each element of the population may have a different probability of being included in the sample (\pi_i). The probability of being included in a sample during the drawing of a single sample is denoted as the first-order inclusion probability of that element (p_i). If all first-order inclusion probabilities are equal, Poisson sampling becomes equivalent to Bernoulli sampling, which can therefore be considered to be a special case of Poisson sampling.

A mathematical consequence of Poisson sampling

Mathematically, the first-order in ...
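The sampling process described above, one independent Bernoulli trial per element, can be sketched with the standard library alone. The population labels and inclusion probabilities below are hypothetical, and `poisson_sample` is an illustrative name rather than a library function.

```python
import random


def poisson_sample(population, inclusion_probs, rng):
    """Keep each element independently with its own inclusion probability."""
    if len(population) != len(inclusion_probs):
        raise ValueError("one inclusion probability is needed per element")
    if any(not (0 <= p <= 1) for p in inclusion_probs):
        raise ValueError("inclusion probabilities must lie in [0, 1]")
    # rng.random() is uniform on [0, 1), so the test succeeds with probability p.
    return [unit for unit, p in zip(population, inclusion_probs) if rng.random() < p]


rng = random.Random(42)  # seeded only so the sketch is repeatable

# Hypothetical population with unequal inclusion probabilities.
population = ["a", "b", "c", "d", "e"]
inclusion_probs = [0.9, 0.5, 0.5, 0.2, 0.1]
sample = poisson_sample(population, inclusion_probs, rng)
print(sample)

# Equal probabilities give Bernoulli sampling as a special case.
bernoulli = poisson_sample(population, [0.5] * len(population), rng)
print(bernoulli)
```

Because every element is decided independently, the size of the resulting sample is itself random and can differ from one run to the next.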

Bernoulli Distribution

In probability theory and statistics, the Bernoulli distribution, named after Swiss mathematician Jacob Bernoulli (James Victor Uspensky: Introduction to Mathematical Probability, McGraw-Hill, New York 1937, page 45), is the discrete probability distribution of a random variable which takes the value 1 with probability p and the value 0 with probability q = 1-p. Less formally, it can be thought of as a model for the set of possible outcomes of any single experiment that asks a yes–no question. Such questions lead to outcomes that are boolean-valued: a single bit whose value is success/yes/true/one with probability p and failure/no/false/zero with probability q. It can be used to represent a (possibly biased) coin toss where 1 and 0 would represent "heads" and "tails", respectively, and p would be the probability of the coin landing on heads (or vice versa where 1 would represent tails and p would be the probability of tails). In particular, unfair coins ...

Survey Sampling

In statistics, survey sampling describes the process of selecting a sample of elements from a target population to conduct a survey. The term "survey" may refer to many different types or techniques of observation. In survey sampling it most often involves a questionnaire used to measure the characteristics and/or attitudes of people. The different ways of contacting members of a sample once they have been selected are the subject of survey data collection. The purpose of sampling is to reduce the cost and/or the amount of work that it would take to survey the entire target population. A survey that measures the entire target population is called a census. A sample refers to a group or section of a population from which information is to be obtained. Survey samples can be broadly divided into two types: probability samples and super samples. Probability-based samples implement a sampling plan with specified probabilities (perhaps adapted probabilities specified by an adaptive procedur ...