
W-shingling

In natural language processing a ''w''-shingling is a set of ''unique'' ''shingles'' (that is, ''n''-grams), each of which is a contiguous subsequence of tokens within a document; the set can then be used to gauge the similarity between documents. The symbol ''w'' denotes the number of tokens in each shingle. The document "a rose is a rose is a rose" can be tokenized as follows: :(a,rose,is,a,rose,is,a,rose) The list of all contiguous ''sequences of 4 tokens'' (thus ''n'' = 4, i.e. 4-''grams'') is : (a,rose,is,a), (rose,is,a,rose), (is,a,rose,is), (a,rose,is,a), (rose,is,a,rose) which, once duplicates are removed, reduces to the 4-shingling : { (a,rose,is,a), (rose,is,a,rose), (is,a,rose,is) }. Resemblance For a given shingle size, the degree to which two documents ''A'' and ''B'' resemble each other can be expressed as the ratio of the magnitudes of their shinglings' intersection ...
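The shingling step can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function names `shingles` and `resemblance` are hypothetical, tokens are obtained by whitespace splitting, and the resemblance is assumed to be the size of the intersection divided by the size of the union, since the excerpt is cut off before it finishes that definition.

```python
def shingles(tokens, w):
    """Return the w-shingling: the set of unique contiguous w-token tuples."""
    return {tuple(tokens[i:i + w]) for i in range(len(tokens) - w + 1)}


def resemblance(tokens_a, tokens_b, w):
    """Assumed definition: |S(A) & S(B)| / |S(A) | S(B)| for shingle size w."""
    sa, sb = shingles(tokens_a, w), shingles(tokens_b, w)
    union = sa | sb
    # Two documents too short to yield any shingle are treated as identical.
    return len(sa & sb) / len(union) if union else 1.0


doc = "a rose is a rose is a rose".split()
four_shingling = shingles(doc, 4)
```

For the example document, `four_shingling` holds three tuples, because two of the five 4-token windows are repeats.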

Vector Space Model

Vector space model or term vector model is an algebraic model for representing text documents (or more generally, items) as vectors such that the distance between vectors represents the relevance between the documents. It is used in information filtering, information retrieval, indexing and relevancy rankings. Its first use was in the SMART Information Retrieval System. Definitions In this section we consider a particular vector space model based on the bag-of-words representation. Documents and queries are represented as vectors. :d_j = ( w_{1,j}, w_{2,j}, \dotsc, w_{n,j} ) :q = ( w_{1,q}, w_{2,q}, \dotsc, w_{n,q} ) Each dimension corresponds to a separate term. If a term occurs in the document, its value in the vector is non-zero. Several different ways of computing these values, also known as (term) weights, have been developed. One of the best known schemes is tf-idf weighting (see the example below). The definition of ' ...
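As a rough illustration of documents and queries as vectors, the sketch below builds one vector per text over a fixed vocabulary and compares them by cosine similarity. Several choices here are assumptions made for the example: raw term counts stand in for the weights (the snippet names tf-idf as one common alternative), tokenization is lowercase whitespace splitting, and the names `term_vector` and `cosine` are hypothetical.

```python
import math
from collections import Counter


def term_vector(text, vocabulary):
    """One dimension per vocabulary term; the weight is the raw term count."""
    counts = Counter(text.lower().split())
    return [counts[term] for term in vocabulary]


def cosine(u, v):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


vocabulary = ["rose", "is", "a", "flower"]
d = term_vector("a rose is a rose", vocabulary)
q = term_vector("rose flower", vocabulary)
score = cosine(d, q)
```

A term that does not occur in a text contributes a zero in its dimension, which matches the statement that only occurring terms have non-zero values.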

MinHash

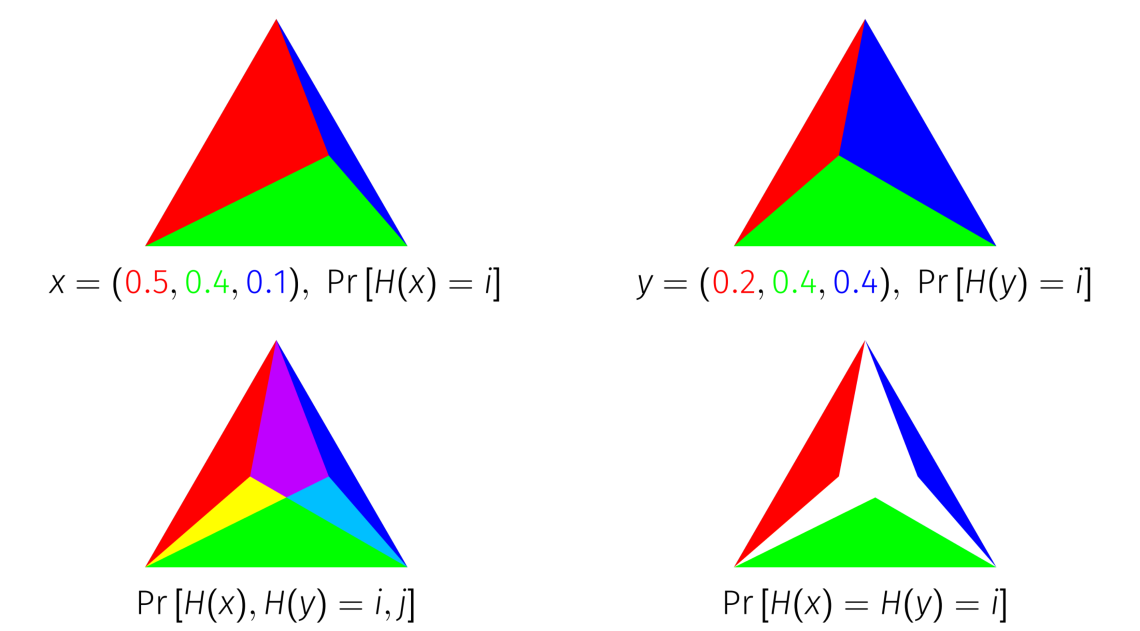

In computer science and data mining, MinHash (or the min-wise independent permutations locality sensitive hashing scheme) is a technique for quickly estimating how similar two sets are. The scheme was published by Andrei Broder in a 1997 conference, and initially used in the AltaVista search engine to detect duplicate web pages and eliminate them from search results. It has also been applied in large-scale clustering problems, such as clustering documents by the similarity of their sets of words. Jaccard similarity and minimum hash values The Jaccard similarity coefficient is a commonly used indicator of the similarity between two sets. Let U be a set and A and B be subsets of U, then the Jaccard index is defined to be the ratio of the number of elements of their intersection and the number of elements of their union: : J(A,B) = \frac{|A \cap B|}{|A \cup B|}. This value is 0 when the two sets are disjoint, 1 when they are equal, and strictly between 0 and 1 otherwise. Two sets are more similar (i.e. have ...
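The idea behind the estimate is that, for a well-mixed hash function, the minimum hash value over A equals the minimum over B with probability J(A,B), so the fraction of agreeing minima across many hash functions approximates the Jaccard index. The sketch below is one simple way to do this, under stated assumptions: it derives a family of hash functions by salting BLAKE2b with a seed (a convenience for the example, not the min-wise independent permutations of the original scheme), and the names `minhash_signature` and `estimate_jaccard` are hypothetical.

```python
import hashlib


def _seeded_hash(item, seed):
    """64-bit hash of str(item), salted with the seed to simulate a hash family."""
    data = f"{seed}:{item}".encode("utf-8")
    return int.from_bytes(hashlib.blake2b(data, digest_size=8).digest(), "big")


def minhash_signature(items, num_hashes=128):
    """Minimum hash value of a non-empty set under each of num_hashes functions."""
    return [min(_seeded_hash(x, seed) for x in items) for seed in range(num_hashes)]


def estimate_jaccard(sig_a, sig_b):
    """Fraction of positions where the two signatures agree."""
    matches = sum(a == b for a, b in zip(sig_a, sig_b))
    return matches / len(sig_a)


a = {"the", "quick", "brown", "fox"}
b = {"the", "quick", "red", "fox"}
estimate = estimate_jaccard(minhash_signature(a), minhash_signature(b))
```

The estimate is a random quantity whose accuracy improves with `num_hashes`; identical sets always produce identical signatures and therefore an estimate of exactly 1.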

Bag-of-words Model

The bag-of-words (BoW) model is a model of text which uses an unordered collection (a "bag", i.e. a multiset) of words. It is used in natural language processing and information retrieval (IR). It disregards word order (and thus most of syntax or grammar) but captures multiplicity. The bag-of-words model is commonly used in methods of document classification where, for example, the (frequency of) occurrence of each word is used as a feature for training a classifier. It has also been used for computer vision. An early reference to "bag of words" in a linguistic context can be found in Zellig Harris's 1954 article on ''Distributional Structure''. Definition The following models a text document using bag-of-words. Here are two simple text documents: (1) John likes to watch movies. Mary likes movies too. (2) Mary also likes to watch football games. Based on ...
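A bag of words for the two example documents can be sketched with Python's `collections.Counter`, which behaves as a multiset. The tokenization (runs of word characters, case preserved) and the function name `bag_of_words` are assumptions made for this illustration.

```python
import re
from collections import Counter


def bag_of_words(text):
    """Map each word to its number of occurrences; word order is discarded."""
    return Counter(re.findall(r"\w+", text))


bow1 = bag_of_words("John likes to watch movies. Mary likes movies too.")
bow2 = bag_of_words("Mary also likes to watch football games.")
```

In `bow1`, "likes" and "movies" each have a count of 2, which is the multiplicity that the model keeps while discarding order.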

Jaccard Coefficient

The Jaccard index is a statistic used for gauging the similarity and diversity of sample sets. It is defined in general as the ratio of two sizes (areas or volumes), the intersection size divided by the union size, also called intersection over union (IoU). It was developed by Grove Karl Gilbert in 1884 as his ratio of verification (v) and now is often called the critical success index in meteorology. It was later developed independently by Paul Jaccard, originally giving the French name (coefficient of community), and independently formulated again by Taffee Tadashi Tanimoto. Thus, it is also called Tanimoto index or Tanimoto coefficient in some fields. Overview The Jaccard index measures similarity between finite non-empty sample sets and is defined as the size of the intersection divided by the size of the union of the sample sets: : J(A, B) = \frac{|A \cap B|}{|A \cup B|} = \frac{|A \cap B|}{|A| + |B| - |A \cap B|}. Note that by design, 0 \le J(A, B) \le 1. If the sets A and B have no elements in common, their intersectio ...
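The definition translates directly into code. The sketch below uses the second form of the formula, which avoids building the union; the function name `jaccard` is hypothetical, and raising an error when both sets are empty is a choice made here because the definition covers only non-empty sets.

```python
def jaccard(a, b):
    """Jaccard index |A & B| / (|A| + |B| - |A & B|) of two finite sets."""
    a, b = set(a), set(b)
    if not a and not b:
        raise ValueError("Jaccard index is undefined for two empty sets")
    intersection = len(a & b)
    return intersection / (len(a) + len(b) - intersection)


similarity = jaccard({1, 2, 3}, {2, 3, 4})  # 2 shared elements out of 4 distinct
```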

Rolling Hash

Rolling is a type of motion that combines rotation (commonly, of an axially symmetric object) and translation of that object with respect to a surface (either one or the other moves), such that, if ideal conditions exist, the two are in contact with each other without sliding. Rolling where there is no sliding is referred to as ''pure rolling''. By definition, there is no sliding when there is a frame of reference in which all points of contact on the rolling object have the same velocity as their counterparts on the surface on which the object rolls; in particular, for a frame of reference in which the rolling plane is at rest (see animation), the instantaneous velocity of all the points of contact (for instance, a generating line segment of a cylinder) of the rolling object is zero. In practice, due to small deformations near the contact area, some sliding and energy dissipation occur. Nevertheless, the resulting rolling resistance is much lower than sliding friction, and ...

Rabin Fingerprint

The Rabin fingerprinting scheme (also known as polynomial fingerprinting) is a method for implementing fingerprints using polynomials over a finite field. It was proposed by Michael O. Rabin. Scheme Given an ''n''-bit message m_0, \ldots, m_{n-1}, we view it as a polynomial of degree ''n''-1 over the finite field GF(2). : f(x) = m_0 + m_1 x + \ldots + m_{n-1} x^{n-1} We then pick a random irreducible polynomial p(x) of degree ''k'' over GF(2), and we define the fingerprint of the message ''m'' to be the remainder r(x) after division of f(x) by p(x) over GF(2), which can be viewed as a polynomial of degree at most ''k''-1 or as a ''k''-bit number. Applications Many implementations of the Rabin–Karp algorithm internally use Rabin fingerprints. The ''Low Bandwidth Network Filesystem'' (LBFS) from MIT uses Rabin fingerprints to implement variable size shift-resistant blocks (Athicha Muthitacharoen, Benjie Chen, and David Mazières, "A Low-bandwidth Network File System"). The basic idea is that the filesystem compu ...
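The remainder computation can be sketched with Python integers, where bit i of an integer holds the coefficient of x^i and addition over GF(2) is XOR. This is an illustration under assumptions rather than a production scheme: the helper names are hypothetical, and the modulus is fixed to x^8 + x^4 + x^3 + x + 1 (a well-known irreducible polynomial of degree 8) instead of being chosen at random as the scheme prescribes.

```python
def poly_from_bits(bits):
    """Pack message bits m_0, ..., m_{n-1} into an int; bit i is the coefficient of x^i."""
    value = 0
    for i, bit in enumerate(bits):
        if bit:
            value |= 1 << i
    return value


def gf2_mod(f, p):
    """Remainder of f(x) divided by p(x) over GF(2), by long division with XOR."""
    deg_p = p.bit_length() - 1
    while f.bit_length() - 1 >= deg_p:
        # Cancel the leading term of f by subtracting (XOR) a shifted copy of p.
        f ^= p << (f.bit_length() - 1 - deg_p)
    return f


# Assumed fixed modulus for the example: x^8 + x^4 + x^3 + x + 1, so k = 8.
P = 0b100011011


def fingerprint(bits, p=P):
    """k-bit fingerprint r(x) = f(x) mod p(x) of the message bits."""
    return gf2_mod(poly_from_bits(bits), p)
```

Because the remainder always has degree below ''k'', the result fits in ''k'' bits, which is what lets it serve as a compact fingerprint.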

K-mer

In bioinformatics, ''k''-mers are substrings of length k contained within a biological sequence. Primarily used within the context of computational genomics and sequence analysis, in which ''k''-mers are composed of nucleotides (''i.e.'' A, T, G, and C), ''k''-mers are used to assemble DNA sequences, improve heterologous gene expression, identify species in metagenomic samples, and create attenuated vaccines. Usually, the term ''k''-mer refers to all of a sequence's subsequences of length k, such that the sequence AGAT would have four monomers (A, G, A, and T), three 2-mers (AG, GA, AT), two 3-mers (AGA and GAT) and one 4-mer (AGAT). More generally, a sequence of length L will have L - k + 1 ''k''-mers and there exist n^k total possible ''k''-mers, where n is the number of possible monomers (e.g. four in the case of DNA). Introduction ''k''-mers are simply length k subsequences. For example, all the possible ''k''-mers of a DNA sequence are shown below: A metho ...
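The counts quoted for AGAT can be checked with a short sliding-window sketch. The function name `kmers` is hypothetical, and the sequence is treated as a plain string.

```python
def kmers(sequence, k):
    """All length-k substrings of the sequence, in order of position."""
    return [sequence[i:i + k] for i in range(len(sequence) - k + 1)]


two_mers = kmers("AGAT", 2)
three_mers = kmers("AGAT", 3)
possible_dna_2mers = 4 ** 2  # n^k with n = 4 nucleotides and k = 2
```

A window of length k fits at L - k + 1 starting positions, which is where the count in the text comes from.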

Jaccard Index

The Jaccard index is a statistic used for gauging the similarity and diversity of sample sets. It is defined in general as the ratio of two sizes (areas or volumes), the intersection size divided by the union size, also called intersection over union (IoU). It was developed by Grove Karl Gilbert in 1884 as his ratio of verification (v) and now is often called the critical success index in meteorology. It was later developed independently by Paul Jaccard, originally giving the French name (coefficient of community), and independently formulated again by Taffee Tadashi Tanimoto. Thus, it is also called Tanimoto index or Tanimoto coefficient in some fields. Overview The Jaccard index measures similarity between finite non-empty sample sets and is defined as the size of the intersection divided by the size of the union of the sample sets: : J(A, B) = \frac{|A \cap B|}{|A \cup B|} = \frac{|A \cap B|}{|A| + |B| - |A \cap B|}. Note that by design, 0 \le J(A, B) \le 1. If the sets A and B have no elements in common, their intersectio ...

Natural Language Processing

Natural language processing (NLP) is a subfield of computer science and especially artificial intelligence. It is primarily concerned with providing computers with the ability to process data encoded in natural language and is thus closely related to information retrieval, knowledge representation and computational linguistics, a subfield of linguistics. Major tasks in natural language processing are speech recognition, text classification, natural-language understanding, and natural language generation. History Natural language processing has its roots in the 1950s. Already in 1950, Alan Turing published an article titled "Computing Machinery and Intelligence" which proposed what is now called the Turing test as a criterion of intelligence, though at the time that was not articulated as a problem separate from artificial intelligence. The proposed test includes a task that involves the automated interpretation and generation of natural language ...

N-gram

An ''n''-gram is a sequence of ''n'' adjacent symbols in a particular order. The symbols may be ''n'' adjacent letters (including punctuation marks and blanks), syllables, or rarely whole words found in a language dataset; or adjacent phonemes extracted from a speech-recording dataset, or adjacent base pairs extracted from a genome. They are collected from a text corpus or speech corpus. If Latin numerical prefixes are used, then an ''n''-gram of size 1 is called a "unigram", size 2 a "bigram" (or, less commonly, a "digram"), etc. If English cardinal numbers are used instead of the Latin ones, then they are called "four-gram", "five-gram", etc. Similarly, Greek numerical prefixes such as "monomer", "dimer", "trimer", "tetramer", "pentamer", etc., or English cardinal numbers, "one-mer", "two-mer", "three-mer", etc., are used in computational biology for polymers or oligomers of a known size, called k-mer, ''k'' ...

Intersection (set Theory)

In set theory, the intersection of two sets A and B, denoted by A \cap B, is the set containing all elements of A that also belong to B or, equivalently, all elements of B that also belong to A. Notation and terminology Intersection is written using the symbol "\cap" between the terms; that is, in infix notation. For example: \Z \cap \N = \N. The intersection of more than two sets (generalized intersection) can be written as: \bigcap_{i=1}^n A_i which is similar to capital-sigma notation. For an explanation of the symbols used in this article, refer to the table of mathematical symbols. Definition The intersection of two sets A and B, denoted by A \cap B, is the set of all objects that are members of both the sets A and B. In symbols: A \cap B = \{x : x \in A \text{ and } x \in B\}. That is, x is an element of the intersection A \cap B if and only if x is both an element of A and an element of B. For example: ...