Topic Modeling

In statistics and natural language processing, a topic model is a type of statistical model for discovering the abstract "topics" that occur in a collection of documents. Topic modeling is a frequently used text-mining tool for discovery of hidden semantic structures in a text body. Intuitively, given that a document is about a particular topic, one would expect particular words to appear in the document more or less frequently: "dog" and "bone" will appear more often in documents about dogs, "cat" and "meow" will appear in documents about cats, and "the" and "is" will appear approximately equally in both. A document typically concerns multiple topics in different proportions; thus, in a document that is 10% about cats and 90% about dogs, there would probably be about 9 times more dog words than cat words. The "topics" produced by topic modeling techniques are clusters of similar words. A topic model captures this intuition in a mathematical framework, which allows examining a set o ...
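
The 10% cats / 90% dogs intuition above can be checked with a small calculation. The following is a minimal sketch, assuming each topic is a probability distribution over words and a document mixes topics by its proportions; the vocabulary and all probabilities are made up for illustration.

```python
# Hypothetical two-topic vocabulary; every probability here is illustrative.
topics = {
    "dogs": {"dog": 0.4, "bone": 0.3, "the": 0.2, "is": 0.1},
    "cats": {"cat": 0.4, "meow": 0.3, "the": 0.2, "is": 0.1},
}


def word_distribution(mixture, topics):
    """Mix per-topic word distributions by the document's topic proportions."""
    dist = {}
    for topic, weight in mixture.items():
        for word, p in topics[topic].items():
            dist[word] = dist.get(word, 0.0) + weight * p
    return dist


# A document that is 90% about dogs and 10% about cats.
doc_mixture = {"dogs": 0.9, "cats": 0.1}
dist = word_distribution(doc_mixture, topics)

dog_mass = dist["dog"] + dist["bone"]  # probability of a dog-specific word
cat_mass = dist["cat"] + dist["meow"]  # probability of a cat-specific word
ratio = dog_mass / cat_mass

print(f"dog words are about {ratio:.1f} times as likely as cat words")
```

Because both hypothetical topics give their topic-specific words the same total probability, the ratio of dog words to cat words equals the ratio of the topic proportions, while function words such as "the" keep the same probability regardless of the mixture.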

Statistics

Statistics (from German: ''Statistik'', "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments (Dodge, Y. (2006), ''The Oxford Dictionary of Statistical Terms'', Oxford University Press). When census data cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling as ...

American Civil War

The American Civil War (April 12, 1861 – May 26, 1865; also known by other names) was a civil war in the United States. It was fought between the Union ("the North") and the Confederacy ("the South"), the latter formed by states that had seceded. The central cause of the war was the dispute over whether slavery would be permitted to expand into the western territories, leading to more slave states, or be prevented from doing so, which was widely believed would place slavery on a course of ultimate extinction. Decades of political controversy over slavery were brought to a head by the victory in the 1860 U.S. presidential election of Abraham Lincoln, who opposed slavery's expansion into the west. An initial seven southern slave states responded to Lincoln's victory by seceding from the United States and, in 1861, forming the Confederacy. The Confederacy seized U.S. forts and other federal assets within their borders. Led by Confederate President Jefferson Davis, ...

Statistical Natural Language Processing

Natural language processing (NLP) is an interdisciplinary subfield of linguistics, computer science, and artificial intelligence concerned with the interactions between computers and human language, in particular how to program computers to process and analyze large amounts of natural language data. The goal is a computer capable of "understanding" the contents of documents, including the contextual nuances of the language within them. The technology can then accurately extract information and insights contained in the documents as well as categorize and organize the documents themselves. Challenges in natural language processing frequently involve speech recognition, natural-language understanding, and natural-language generation. History: Natural language processing has its roots in the 1950s. Already in 1950, Alan Turing published an article titled "Computing Machinery and Intelligence" which proposed what is now called the Turing test as a criterion of intelligence, tho ...

Gensim

Gensim is an open-source library for unsupervised topic modeling, document indexing, retrieval by similarity, and other natural language processing functionalities, using modern statistical machine learning. Gensim is implemented in Python and Cython for performance. Gensim is designed to handle large text collections using data streaming and incremental online algorithms, which differentiates it from most other machine learning software packages that target only in-memory processing. Main features: Gensim includes streamed parallelized implementations of fastText, word2vec and doc2vec algorithms, as well as latent semantic analysis (LSA, LSI, SVD), non-negative matrix factorization (NMF), latent Dirichlet allocation (LDA), tf-idf and random projections. Some of the novel online algorithms in Gensim were also published in the 2011 PhD dissertation ''Scalability of Semantic Analysis in Natural Language Processing'' of Radim Řehůřek, the creator of Gensim. Uses of Gensim ...

Mallet (software Project)

MALLET is a Java "Machine Learning for Language Toolkit". Description: MALLET is an integrated collection of Java code useful for statistical natural language processing, document classification, cluster analysis, information extraction, topic modeling and other machine learning applications to text. History: MALLET was developed primarily by Andrew McCallum, of the University of Massachusetts Amherst, with assistance from graduate students and faculty from both UMASS and the University of Pennsylvania. External links: the official website of the project at the University of Massachusetts Amherst; the Topic Modeling Tool, an independently developed GUI that outputs MALLET results in CSV and HTML files.

Unsupervised Learning

Unsupervised learning is a type of algorithm that learns patterns from untagged data. The hope is that through mimicry, which is an important mode of learning in people, the machine is forced to build a concise representation of its world and then generate imaginative content from it. In contrast to supervised learning where data is tagged by an expert, e.g. tagged as a "ball" or "fish", unsupervised methods exhibit self-organization that captures patterns as probability densities or a combination of neural feature preferences encoded in the machine's weights and activations. The other levels in the supervision spectrum are reinforcement learning where the machine is given only a numerical performance score as guidance, and semi-supervised learning where a small portion of the data is tagged. Neural networks, tasks vs. methods: Neural network tasks are often categorized as discriminative (recognition) or generative (imagination). Often but not always, discriminative tas ...

Statistical Classification

In statistics, classification is the problem of identifying which of a set of categories (sub-populations) an observation (or observations) belongs to. Examples are assigning a given email to the "spam" or "non-spam" class, and assigning a diagnosis to a given patient based on observed characteristics of the patient (sex, blood pressure, presence or absence of certain symptoms, etc.). Often, the individual observations are analyzed into a set of quantifiable properties, known variously as explanatory variables or ''features''. These properties may variously be categorical (e.g. "A", "B", "AB" or "O", for blood type), ordinal (e.g. "large", "medium" or "small"), integer-valued (e.g. the number of occurrences of a particular word in an email) or real-valued (e.g. a measurement of blood pressure). Other classifiers work by comparing observations to previous observations by means of a similarity or distance function. An algorithm that implements classification, especially in a ...
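
A classifier that compares a new observation to previous ones through a distance function can be sketched as a one-nearest-neighbour rule. This is only an illustration under stated assumptions: the two integer-valued features (counts of suspicious words and of links in an email), the training data, and the function name `nearest_neighbor` are all hypothetical, and Euclidean distance is just one possible choice.

```python
import math


def nearest_neighbor(train, query):
    """Return the label of the training observation closest to `query`."""
    _, label = min(train, key=lambda pair: math.dist(pair[0], query))
    return label


# Hypothetical labelled observations:
# (suspicious-word count, link count) -> class
train = [
    ((8.0, 5.0), "spam"),
    ((7.0, 6.0), "spam"),
    ((1.0, 0.0), "non-spam"),
    ((0.0, 1.0), "non-spam"),
]

prediction = nearest_neighbor(train, (6.0, 4.0))
print(prediction)
```

Swapping `math.dist` for another similarity or distance function changes which previous observation counts as "closest" without changing the rest of the rule.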

Non-negative Matrix Factorization

Non-negative matrix factorization (NMF or NNMF), also non-negative matrix approximation, is a group of algorithms in multivariate analysis and linear algebra where a matrix V is factorized into (usually) two matrices W and H, with the property that all three matrices have no negative elements. This non-negativity makes the resulting matrices easier to inspect. Also, in applications such as processing of audio spectrograms or muscular activity, non-negativity is inherent to the data being considered. Since the problem is not exactly solvable in general, it is commonly approximated numerically. NMF finds applications in such fields as astronomy, computer vision, document clustering, missing data imputation, chemometrics, audio signal processing, recommender systems, and bioinformatics. History: In chemometrics non-negative matrix factorization has a long history under the name "self modeling curve resolution". In this framework the vectors in the right matrix are continuous curves ...
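
One common way to approximate the factorization numerically is the multiplicative update rule of Lee and Seung, which keeps every entry non-negative by construction. The sketch below is a plain-Python illustration of that one algorithm, not the only NMF method; the small count matrix, the rank, the iteration count and the helper names are assumptions chosen for the example.

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    return [list(col) for col in zip(*m)]


def frobenius_error(v, w, h):
    """Frobenius norm of V - WH, the quantity the updates try to reduce."""
    wh = matmul(w, h)
    return sum((x - y) ** 2 for rv, rwh in zip(v, wh) for x, y in zip(rv, rwh)) ** 0.5


def nmf(v, rank, iterations=200, seed=0, eps=1e-9):
    """Approximate V (n x m) by W (n x rank) times H (rank x m), all non-negative."""
    rng = random.Random(seed)
    n, m = len(v), len(v[0])
    # Strictly positive random start so the multiplicative updates can move.
    w = [[rng.random() + 0.1 for _ in range(rank)] for _ in range(n)]
    h = [[rng.random() + 0.1 for _ in range(m)] for _ in range(rank)]
    for _ in range(iterations):
        # H <- H * (W^T V) / (W^T W H), element-wise
        wt = transpose(w)
        num, den = matmul(wt, v), matmul(matmul(wt, w), h)
        h = [[h[i][j] * num[i][j] / (den[i][j] + eps) for j in range(m)]
             for i in range(rank)]
        # W <- W * (V H^T) / (W H H^T), element-wise
        ht = transpose(h)
        num, den = matmul(v, ht), matmul(w, matmul(h, ht))
        w = [[w[i][j] * num[i][j] / (den[i][j] + eps) for j in range(rank)]
             for i in range(n)]
    return w, h


# Hypothetical non-negative data matrix (e.g. word counts per document).
v = [
    [3, 0, 1],
    [2, 0, 0],
    [0, 4, 1],
    [0, 3, 2],
]

w0, h0 = nmf(v, rank=2, iterations=0)  # random starting point only
w, h = nmf(v, rank=2)                  # same start, after the updates
print(frobenius_error(v, w0, h0), frobenius_error(v, w, h))
```

The small `eps` in the denominators only guards against division by zero; because each update multiplies non-negative numbers, W and H never acquire negative entries.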

Hierarchical Dirichlet Process

In statistics and machine learning, the hierarchical Dirichlet process (HDP) is a nonparametric Bayesian approach to clustering grouped data. It uses a Dirichlet process for each group of data, with the Dirichlet processes for all groups sharing a base distribution which is itself drawn from a Dirichlet process. This method allows groups to share statistical strength via sharing of clusters across groups. The base distribution being drawn from a Dirichlet process is important, because draws from a Dirichlet process are atomic probability measures, and the atoms will appear in all group-level Dirichlet processes. Since each atom corresponds to a cluster, clusters are shared across all groups. It was developed by Yee Whye Teh, Michael I. Jordan, Matthew J. Beal and David Blei and published in the ''Journal of the American Statistical Association'' in 2006, as a formalization and generalization of the infinite hidden Markov model published in 2002. Model: This model descriptio ...

Latent Semantic Analysis

Latent semantic analysis (LSA) is a technique in natural language processing, in particular distributional semantics, of analyzing relationships between a set of documents and the terms they contain by producing a set of concepts related to the documents and terms. LSA assumes that words that are close in meaning will occur in similar pieces of text (the distributional hypothesis). A matrix containing word counts per document (rows represent unique words and columns represent each document) is constructed from a large piece of text and a mathematical technique called singular value decomposition (SVD) is used to reduce the number of rows while preserving the similarity structure among columns. Documents are then compared by cosine similarity between any two columns. Values close to 1 represent very similar documents while values close to 0 represent very dissimilar documents. An information retrieval technique using latent semantic structure was patented in 1988, US Patent 4,83 ...
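
The comparison step described above, cosine similarity between two document columns, can be sketched as follows. This illustration deliberately works on the raw word-count matrix and omits the SVD reduction that LSA applies first; the terms, counts and helper names are hypothetical.

```python
import math


def column(matrix, j):
    """Extract document j as a vector of word counts."""
    return [row[j] for row in matrix]


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Rows are unique words, columns are documents (made-up counts).
terms = ["dog", "bone", "cat", "meow"]
counts = [
    [2, 3, 0],  # dog
    [1, 2, 0],  # bone
    [0, 0, 3],  # cat
    [0, 0, 1],  # meow
]

sim_01 = cosine_similarity(column(counts, 0), column(counts, 1))
sim_02 = cosine_similarity(column(counts, 0), column(counts, 2))
print(f"doc0 vs doc1: {sim_01:.3f}, doc0 vs doc2: {sim_02:.3f}")
```

Documents 0 and 1 use the same words in similar proportions, so their similarity is close to 1, while documents 0 and 2 share no words and score 0. In full LSA the same comparison would be made on the columns of the rank-reduced matrix, which lets documents with related but non-identical vocabulary score above 0.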

Explicit Semantic Analysis

In natural language processing and information retrieval, explicit semantic analysis (ESA) is a vector representation of text (individual words or entire documents) that uses a document corpus as a knowledge base. Specifically, in ESA, a word is represented as a column vector in the tf–idf matrix of the text corpus and a document (string of words) is represented as the centroid of the vectors representing its words. Typically, the text corpus is English Wikipedia, though other corpora including the Open Directory Project have been used. ESA was designed by Evgeniy Gabrilovich and Shaul Markovitch as a means of improving text categorization and has been used by this pair of researchers to compute what they refer to as "semantic relatedness" by means of cosine similarity between the aforementioned vectors, collectively interpreted as a space of "concepts explicitly defined and described by humans", where Wikipedia articles (or ODP entries, or otherwise titles of documents in the ...
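
The representation described above can be sketched on a toy knowledge base. Everything concrete here is an assumption for illustration: the three "concept" articles stand in for Wikipedia, the weighting uses one common tf–idf variant (raw term count times log(N/df)), and the function names are hypothetical.

```python
import math
from collections import Counter

# Hypothetical knowledge base: concept title -> article text.
concepts = {
    "Dog": "dog bone bark dog leash",
    "Cat": "cat meow purr cat whiskers",
    "Pet": "dog cat pet food",
}


def build_word_vectors(concepts):
    """Map each word to its column of the tf-idf matrix (one entry per concept)."""
    names = list(concepts)
    counts = [Counter(concepts[name].split()) for name in names]
    vocab = sorted(set().union(*counts))
    vectors = {}
    for word in vocab:
        df = sum(1 for c in counts if word in c)  # concepts containing the word
        idf = math.log(len(names) / df)
        vectors[word] = [c[word] * idf for c in counts]
    return names, vectors


def text_vector(text, vectors, dim):
    """Represent a text as the centroid of the vectors of its known words."""
    known = [vectors[w] for w in text.split() if w in vectors]
    if not known:
        return [0.0] * dim
    return [sum(v[i] for v in known) / len(known) for i in range(dim)]


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def relatedness(text_a, text_b, vectors, dim):
    return cosine_similarity(text_vector(text_a, vectors, dim),
                             text_vector(text_b, vectors, dim))


names, vectors = build_word_vectors(concepts)
rel_same = relatedness("dog bone", "bark leash", vectors, len(names))
rel_diff = relatedness("dog bone", "meow purr", vectors, len(names))
print(f"dog bone ~ bark leash: {rel_same:.3f}; dog bone ~ meow purr: {rel_diff:.3f}")
```

Each coordinate of a vector corresponds to one human-written concept article, which is what makes the space "explicit": two texts are related to the extent that their words are characteristic of the same articles.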

Stochastic Block Model

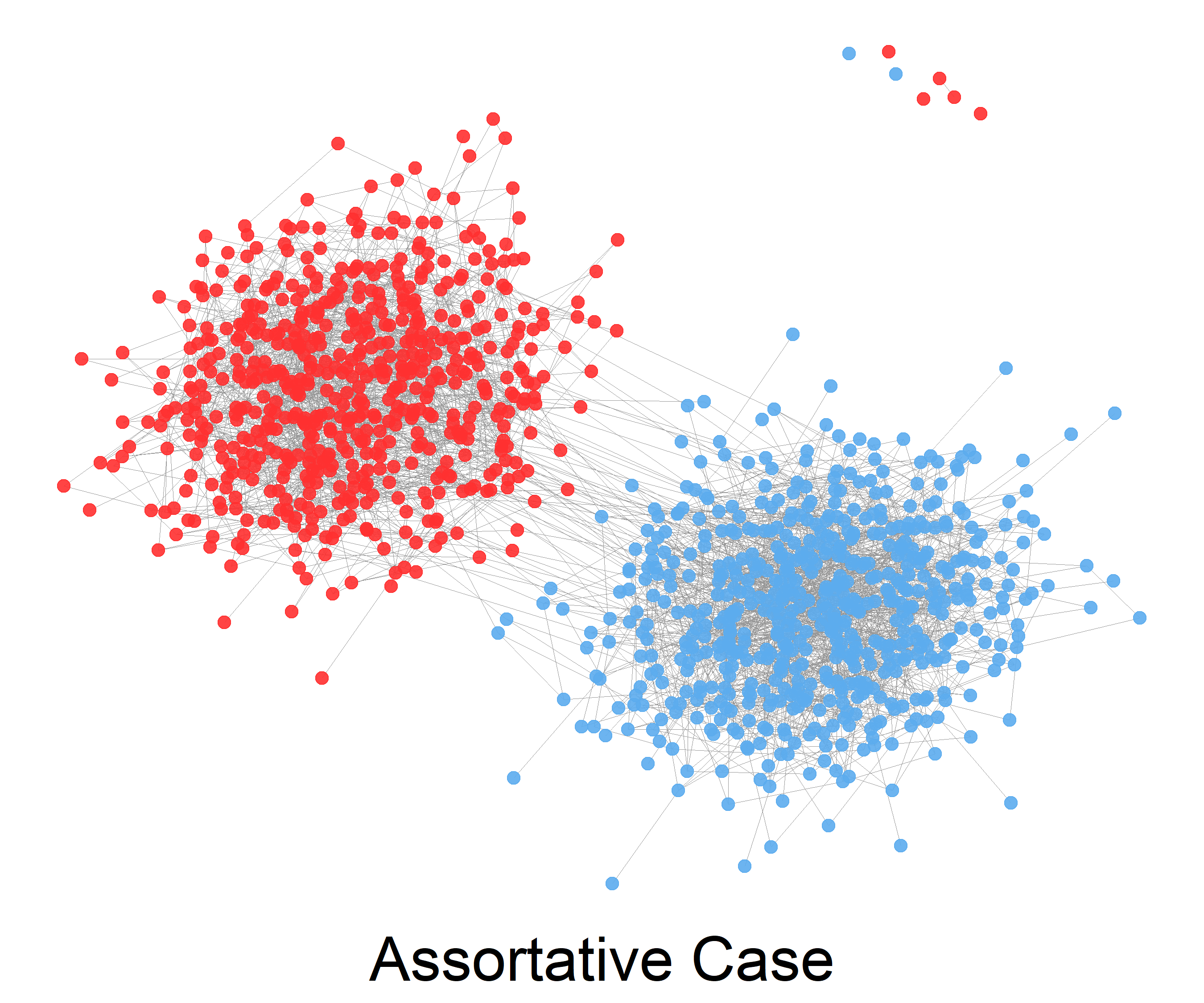

The stochastic block model is a generative model for random graphs. This model tends to produce graphs containing ''communities'', subsets of nodes characterized by being connected with one another with particular edge densities. For example, edges may be more common within communities than between communities. Its mathematical formulation was first introduced in 1983 in the field of social network analysis by Holland et al. The stochastic block model is important in statistics, machine learning, and network science, where it serves as a useful benchmark for the task of recovering community structure in graph data. Definition: The stochastic block model takes the following parameters: the number $n$ of vertices; a partition of the vertex set $\{1,\ldots,n\}$ into disjoint subsets $C_1,\ldots,C_r$, called ''communities''; and a symmetric $r \times r$ matrix $P$ of edge probabilities. The edge set is then sampled at random as follows: any two vertices $u \in C_i$ and $v \in C_j$ are connected by an edge wi ...
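
Sampling a graph from these parameters can be sketched as below. The summary above is cut off before stating the edge rule, so the sketch assumes the standard one: vertices in communities i and j are joined independently with probability P[i][j]. The function name `sample_sbm` and the example parameters are hypothetical.

```python
import random


def sample_sbm(communities, p, seed=None):
    """Sample an undirected edge set from a stochastic block model.

    communities: list of disjoint vertex lists C_1, ..., C_r
    p: symmetric r x r matrix of edge probabilities
    Assumes each pair (u, v) is joined independently with probability
    p[i][j], where u is in community i and v is in community j.
    """
    rng = random.Random(seed)
    block = {v: i for i, members in enumerate(communities) for v in members}
    vertices = sorted(block)
    edges = set()
    for a, u in enumerate(vertices):
        for v in vertices[a + 1:]:  # each unordered pair is visited once
            if rng.random() < p[block[u]][block[v]]:
                edges.add((u, v))
    return edges


# Two hypothetical communities with dense internal and sparse external links.
communities = [[0, 1, 2, 3], [4, 5, 6, 7]]
p = [
    [0.9, 0.1],
    [0.1, 0.8],
]
edges = sample_sbm(communities, p, seed=42)
print(sorted(edges))
```

With diagonal entries of P larger than the off-diagonal ones, as in this example, sampled graphs tend to show the community structure that recovery algorithms are benchmarked on.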