|

Staging (data)

A staging area, or landing zone, is an intermediate storage area used for data processing during the extract, transform and load (ETL) process. The data staging area sits between the data source(s) and the data target(s), which are often data warehouses, data marts, or other data repositories.Oracle 9i Data Warehousing Guide, ''Data Warehousing Concepts'' Oracle Corp. Data staging areas are often transient in nature, with their contents being erased prior to running an ETL process or immediately following successful completion of an ETL process. There are staging area architectures, however, which are designed to hold data for extended periods of time for archival or troubleshooting purposes. Implementation Staging areas can be implemented i ...[...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Extract, Transform, Load

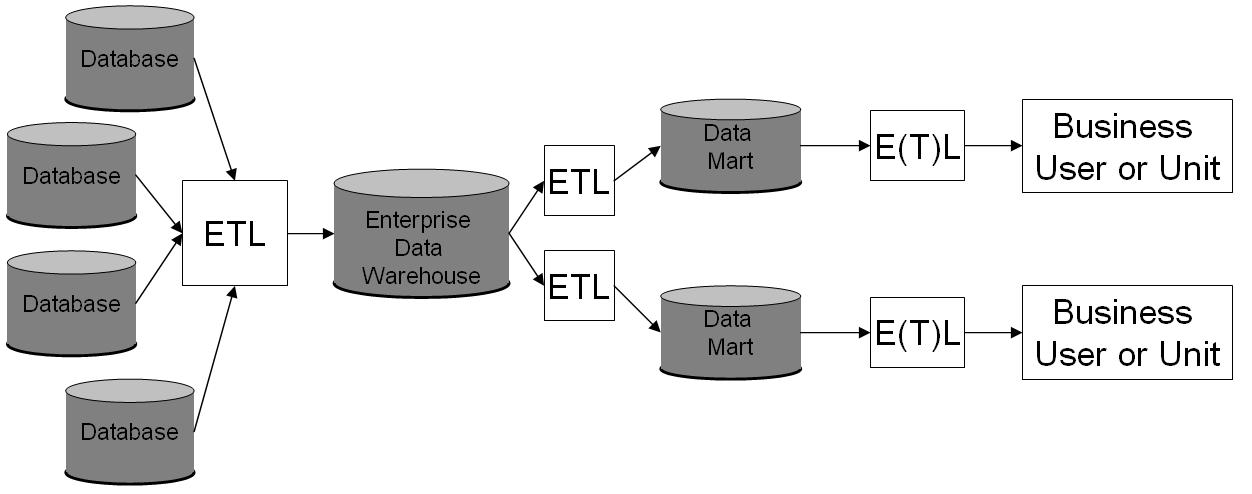

In computing, extract, transform, load (ETL) is a three-phase process where data is extracted, transformed (cleaned, sanitized, scrubbed) and loaded into an output data container. The data can be collated from one or more sources and it can also be outputted to one or more destinations. ETL processing is typically executed using software applications but it can also be done manually by system operators. ETL software typically automates the entire process and can be run manually or on reoccurring schedules either as single jobs or aggregated into a batch of jobs. A properly designed ETL system extracts data from source systems and enforces data type and data validity standards and ensures it conforms structurally to the requirements of the output. Some ETL systems can also deliver data in a presentation-ready format so that application developers can build applications and end users can make decisions. The ETL process became a popular concept in the 1970s and is often used in d ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Data Warehouse

In computing, a data warehouse (DW or DWH), also known as an enterprise data warehouse (EDW), is a system used for Business reporting, reporting and data analysis and is considered a core component of business intelligence. DWs are central Repository (version control), repositories of integrated data from one or more disparate sources. They store current and historical data in one single place that are used for creating analytical reports for workers throughout the enterprise. The data stored in the warehouse is uploaded from the operational systems (such as marketing or sales). The data may pass through an operational data store and may require data cleansing for additional operations to ensure data quality before it is used in the DW for reporting. Extract, transform, load (ETL) and extract, load, transform (ELT) are the two main approaches used to build a data warehouse system. ETL-based data warehousing The typical extract, transform, load (ETL)-based data warehouse uses ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Data Mart

A data mart is a structure/access pattern specific to ''data warehouse'' environments, used to retrieve client-facing data. The data mart is a subset of the data warehouse and is usually oriented to a specific business line or team. Whereas data warehouses have an enterprise-wide depth, the information in data marts pertains to a single department. In some deployments, each department or business unit is considered the ''owner'' of its data mart including all the ''hardware'', ''software'' and ''data''. This enables each department to isolate the use, manipulation and development of their data. In other deployments where conformed dimensions are used, this business unit owner will not hold true for shared dimensions like customer, product, etc. Warehouses and data marts are built because the information in the database is not organized in a way that makes it readily accessible. This organization requires queries that are too complicated, difficult to access or resource intensive. ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Reference Data

Reference data is data used to classify or categorize other data. Typically, they are static or slowly changing over time. Examples of reference data include: * Units of measurement * Country codes * Corporate codes * Fixed conversion rates e.g., weight, temperature, and length * Calendar structure and constraints Reference data sets are sometimes alternatively referred to as a "controlled vocabulary" or "lookup" data. Reference data differs from master data. While both provide context for business transactions, reference data is concerned with classification and categorisation, while master data is concerned with business entities. A further difference between reference data and master data is that a change to the reference data values may require an associated change in business process to support the change, while a change in master data will always be managed as part of existing business processes. For example, adding a new customer or sales product is part of the standard ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Master Data Management

Master data management (MDM) is a technology-enabled discipline in which business and information technology work together to ensure the uniformity, accuracy, stewardship, semantic consistency and accountability of the enterprise's official shared master data assets. Drivers for master data management Organisations, or groups of organisations, may establish the need for master data management when they hold more than one copy of data about a business entity. Holding more than one copy of this master data inherently means that there is an inefficiency in maintaining a "single version of the truth" across all copies. Unless people, processes and technology are in place to ensure that the data values are kept aligned across all copies, it is almost inevitable that different versions of information about a business entity will be held. This causes inefficiencies in operational data use, and hinders the ability of organisations to report and analyze. At a basic level, master data ma ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Concurrency Control

In information technology and computer science, especially in the fields of computer programming, operating systems, multiprocessors, and databases, concurrency control ensures that correct results for Concurrent computing, concurrent operations are generated, while getting those results as quickly as possible. Computer systems, both software and computer hardware, hardware, consist of modules, or components. Each component is designed to operate correctly, i.e., to obey or to meet certain consistency rules. When components that operate concurrently interact by messaging or by sharing accessed data (in Computer memory, memory or Computer data storage, storage), a certain component's consistency may be violated by another component. The general area of concurrency control provides rules, methods, design methodologies, and Scientific theory, theories to maintain the consistency of components operating concurrently while interacting, and thus the consistency and correctness of the who ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Operational Data Store

An operational data store (ODS) is used for operational reporting and as a source of data for the enterprise data warehouse (EDW). It is a complementary element to an EDW in a decision support environment, and is used for operational reporting, controls, and decision making, as opposed to the EDW, which is used for tactical and strategic decision support. An ODS is a database designed to integrate data from multiple sources for additional operations on the data, for reporting, controls and operational decision support. Unlike a production master data store, the data is not passed back to operational systems. It may be passed for further operations and to the data warehouse for reporting. An ODS should not be confused with an enterprise data hub (EDH). An operational data store will take transactional data from one or more production systems and loosely integrate it, in some respects it is still subject oriented, integrated and time variant, but without the volatility constrain ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Change Data Capture

In databases, change data capture (CDC) is a set of software design patterns used to determine and track the data that has changed so that action can be taken using the changed data. CDC is an approach to data integration that is based on the identification, capture and delivery of the changes made to enterprise data sources. CDC occurs often in data-warehouse environments since capturing and preserving the state of data across time is one of the core functions of a data warehouse, but CDC can be utilized in any database or data repository system. Methodology System developers can set up CDC mechanisms in a number of ways and in any one or a combination of system layers from application logic down to physical storage. In a simplified CDC context, one computer system has data believed to have changed from a previous point in time, and a second computer system needs to take action based on that changed data. The former is the source, the latter is the target. It is possible ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Data Cleansing

Data cleansing or data cleaning is the process of detecting and correcting (or removing) corrupt or inaccurate records from a record set, table, or database and refers to identifying incomplete, incorrect, inaccurate or irrelevant parts of the data and then replacing, modifying, or deleting the dirty or coarse data. Data cleansing may be performed interactively with data wrangling tools, or as batch processing through scripting or a data quality firewall. After cleansing, a data set should be consistent with other similar data sets in the system. The inconsistencies detected or removed may have been originally caused by user entry errors, by corruption in transmission or storage, or by different data dictionary definitions of similar entities in different stores. Data cleaning differs from data validation in that validation almost invariably means data is rejected from the system at entry and is performed at the time of entry, rather than on batches of data. The actual process of ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |