Sitemap

A sitemap is a list of pages of a web site within a domain. There are three primary kinds of sitemap:

* Sitemaps used during the planning of a website by its designers.
* Human-visible listings, typically hierarchical, of the pages on a site.
* Structured listings intended for web crawlers such as search engines.

Types of sitemaps

Sitemaps may be addressed to users or to software. Many sites have user-visible sitemaps which present a systematic view, typically hierarchical, of the site. These are intended to help visitors find specific pages, and can also be used by crawlers. They also act as a navigation aid by providing an overview of a site's content at a single glance. Alphabetically organized sitemaps, sometimes called site indexes, are a different approach. For use by search engines and other crawlers, there is a structured format, the XML Sitemap, which lists the pages in a site, their relative importance, and how often they are updated. This is pointed to from the r ...

Sitemaps

The Sitemaps protocol allows a webmaster to inform search engines about URLs on a website that are available for crawling. A Sitemap is an XML file that lists the URLs for a site. It allows webmasters to include additional information about each URL: when it was last updated, how often it changes, and how important it is in relation to other URLs of the site. This allows search engines to crawl the site more efficiently and to find URLs that may be isolated from the rest of the site's content. The Sitemaps protocol is a URL inclusion protocol and complements robots.txt, a URL exclusion protocol.

History

Google first introduced Sitemaps 0.84 in June 2005 so web developers could publish lists of links from across their sites. Google, Yahoo! and Microsoft announced joint support for the Sitemaps protocol in November 2006. The schema version was changed to "Sitemap 0.90", but no other changes were made. In April 2007, Ask.com and IBM announced support for Sitemaps. Also, Google ...
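As an illustration of the per-URL information described above, the following is a minimal sketch that builds a Sitemap XML document with Python's standard library. The `build_sitemap` helper, the entry dictionaries, and the `www.example.com` URLs are hypothetical names chosen for this example; the element names (`urlset`, `url`, `loc`, `lastmod`, `changefreq`, `priority`) and the namespace follow the published Sitemap 0.90 schema.

```python
import xml.etree.ElementTree as ET

# Namespace of the Sitemap 0.90 schema.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Return a Sitemap XML document (as a str) for the given URL entries.

    Each entry is a dict with a required "loc" key and optional
    "lastmod", "changefreq" and "priority" keys (hypothetical input shape).
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for entry in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = entry["loc"]
        # Optional hints: last update, change frequency, relative importance.
        for tag in ("lastmod", "changefreq", "priority"):
            if tag in entry:
                ET.SubElement(url, tag).text = str(entry[tag])
    return ET.tostring(urlset, encoding="unicode")


example = build_sitemap([
    {"loc": "https://www.example.com/", "lastmod": "2024-01-15",
     "changefreq": "weekly", "priority": 1.0},
    {"loc": "https://www.example.com/about", "priority": 0.5},
])
print(example)
```

Only `loc` is mandatory in each `url` element; the other three fields are hints that a crawler is free to ignore.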

Biositemap

A Biositemap is a way for a biomedical research institution or organisation to show how biological information is distributed throughout its information technology systems and networks. This information may be shared with other organisations and researchers. The Biositemap enables web browsers, crawlers and robots to easily access and process the information for use in other systems, media and computational formats. Biositemaps protocols provide clues for the Biositemap web harvesters, allowing them to find resources and content across the whole interlink of the Biositemap system. This means that human or machine users can access any relevant information on any topic across all organisations throughout the Biositemap system and bring it to their own systems for assimilation or analysis.

File framework

The information is normally stored in a biositemap.rdf or biositemap.xml file which contains lists of information about the data, software, tools, material and services provided or ...

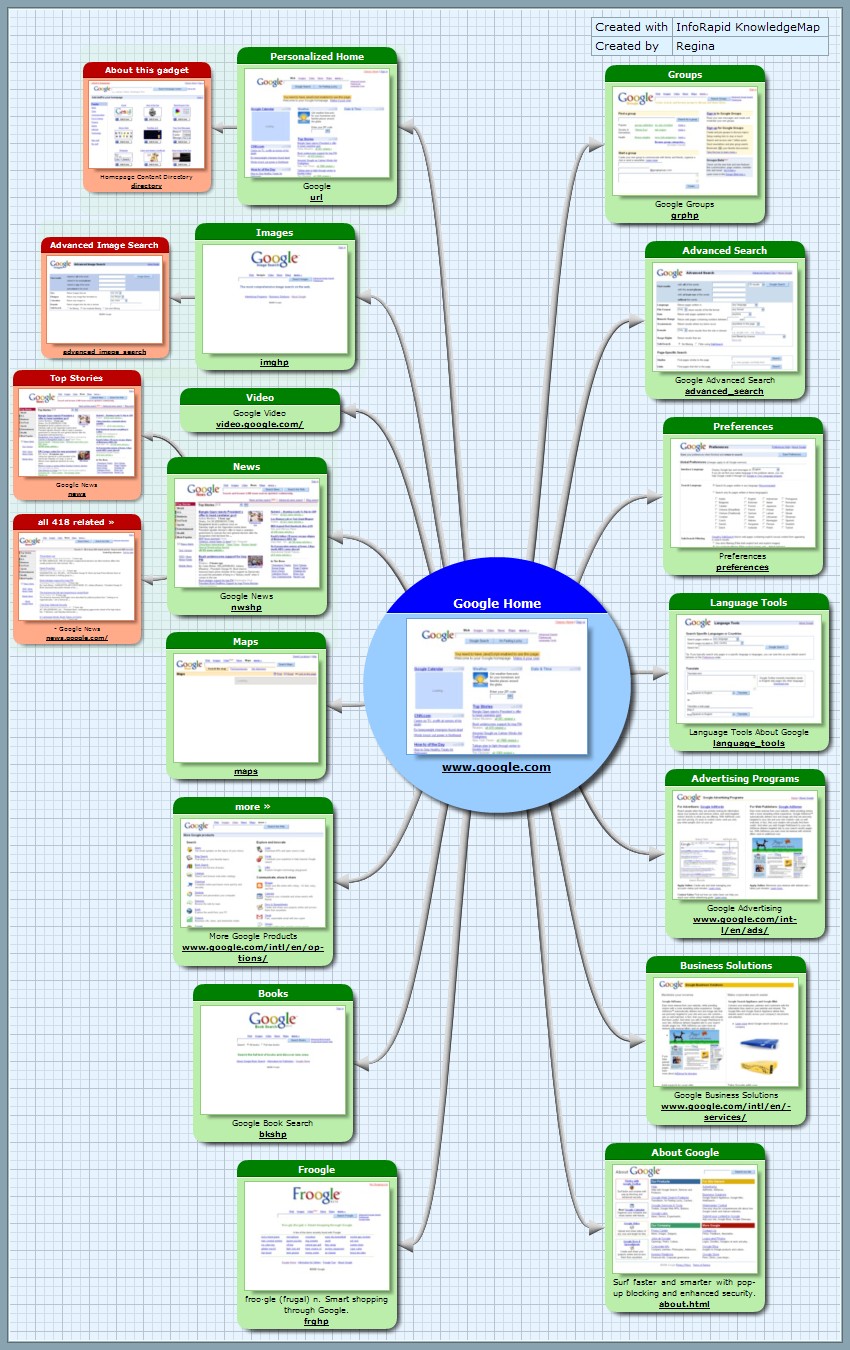

Robots Exclusion Standard

The robots exclusion standard, also known as the robots exclusion protocol or simply robots.txt, is a standard used by websites to indicate to visiting web crawlers and other web robots which portions of the site they are allowed to visit. This relies on voluntary compliance. Not all robots comply with the standard; email harvesters, spambots, malware and robots that scan for security vulnerabilities may even start with the portions of the website they have been told to stay out of. The "robots.txt" file can be used in conjunction with sitemaps, another robot inclusion standard for websites.

History

The standard was proposed by Martijn Koster, then working for Nexor, in February 1994 on the www-talk mailing list, the main communication channel for WWW-related activities at the time. Charles Stross claims to have provoked Koster to suggest robots.txt, after he wrote a badly behaved web crawler that inadvertently caused a denial-of-service attack on Koster's server. I ...
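To make the voluntary-compliance point concrete, here is a small sketch of how a well-behaved crawler might consult robots.txt rules before fetching a page, using `urllib.robotparser` from the Python standard library. The robots.txt content, the `ExampleBot` user agent, and the `www.example.com` URLs are made up for illustration; `site_maps()` requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an example site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
# parse() accepts the file's lines directly, so no network access is needed.
parser.parse(ROBOTS_TXT.splitlines())

# A compliant crawler asks before each fetch; nothing enforces the answer.
allowed = parser.can_fetch("ExampleBot", "https://www.example.com/index.html")
blocked = parser.can_fetch("ExampleBot", "https://www.example.com/private/data.html")

# Sitemap locations advertised in robots.txt (Python 3.8+).
sitemaps = parser.site_maps()

print(allowed, blocked, sitemaps)
```

The parser only reports what the rules say. A robot that never calls `can_fetch`, or ignores its result, is not stopped by the file in any way.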

Search Engine Optimization

Search engine optimization (SEO) is the process of improving the quality and quantity of website traffic to a website or a web page from search engines. SEO targets unpaid traffic (known as "natural" or "organic" results) rather than direct traffic or paid traffic. Unpaid traffic may originate from different kinds of searches, including image search, video search, academic search, news search, and industry-specific vertical search engines. As an Internet marketing strategy, SEO considers how search engines work, the computer-programmed algorithms that dictate search engine behavior, what people search for, the actual search terms or keywords typed into search engines, and which search engines are preferred by their targeted audience. SEO is performed because a website will receive more visitors from a search engine when websites rank higher on the sear ...

Google

Google LLC is an American multinational technology company focusing on search engine technology, online advertising, cloud computing, computer software, quantum computing, e-commerce, artificial intelligence, and consumer electronics. It has been referred to as "the most powerful company in the world" and one of the world's most valuable brands due to its market dominance, data collection, and technological advantages in the area of artificial intelligence. Its parent company Alphabet is considered one of the Big Five American information technology companies, alongside Amazon, Apple, Meta, and Microsoft. Google was founded on September 4, 1998, by Larry Page and Sergey Brin while they were PhD students at Stanford University in California. Together they own about 14% of its publicly listed shares and control 56% of its stockholder voting power through super-voting stock. The company went public via an initial public offering (IPO) in 2004. In 2015, Google was reor ...

Web Search Engine

A search engine is a software system designed to carry out web searches. It searches the World Wide Web in a systematic way for particular information specified in a textual web search query. The search results are generally presented in a line of results, often referred to as search engine results pages (SERPs). When a user enters a query into a search engine, the engine scans its index of web pages to find those that are relevant to the user's query. The results are then ranked by relevancy and displayed to the user. The information may be a mix of links to web pages, images, videos, infographics, articles, research papers, and other types of files. Some search engines also mine data available in databases or open directories. Unlike web directories and social bookmarking sites, which are maintained by human editors, search engines also maintain real-time information by running an algorithm on a web crawler. Any internet-based content that can't be indexed and searched ...
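The "scan the index, then rank by relevancy" step can be sketched with a toy inverted index. This is only an illustration under simplifying assumptions: the `build_index` and `search` helpers and the sample pages are hypothetical, and relevancy is approximated by raw term counts, which is far simpler than the ranking used by real search engines.

```python
from collections import Counter, defaultdict


def build_index(pages):
    """Map each lowercase term to a Counter of {page_id: occurrences}."""
    index = defaultdict(Counter)
    for page_id, text in pages.items():
        for term in text.lower().split():
            index[term][page_id] += 1
    return index


def search(index, query):
    """Return page ids ranked by total occurrences of the query terms."""
    scores = Counter()
    for term in query.lower().split():
        # Look up only the pages that contain this term.
        for page_id, count in index.get(term, {}).items():
            scores[page_id] += count
    # most_common() orders pages from highest to lowest score.
    return [page_id for page_id, _ in scores.most_common()]


# Hypothetical page contents keyed by page id.
pages = {
    "a": "sitemap protocol for web crawlers",
    "b": "web search engine and web crawler basics",
    "c": "computational biology",
}
index = build_index(pages)
results = search(index, "web search")
print(results)
```

Because lookups go from term to pages, the query never touches pages that share no term with it, which is the point of building an index ahead of time.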

Computational Biology

Computational biology refers to the use of data analysis, mathematical modeling and computational simulations to understand biological systems and relationships. An intersection of computer science, biology, and big data, the field also has foundations in applied mathematics, chemistry, and genetics. It differs from biological computing, a subfield of computer engineering which uses bioengineering to build computers.

History

Bioinformatics, the analysis of informatics processes in biological systems, began in the early 1970s. At this time, research in artificial intelligence was using network models of the human brain in order to generate new algorithms. This use of biological data pushed biological researchers to use computers to evaluate and compare large data sets in their own field. By 1982, researchers shared information via punch cards. The amount of data grew exponentially by the end of the 1980s, requiring new computational methods for quickly interpreting ...

Contact Page

A contact page is a common web page on a website for visitors to contact the organization or individual providing the website. The page contains one or more of the following items:

* an e-mail address
* a telephone number
* a postal address, sometimes accompanied by a map showing the location
* links to social media
* a contact form for a text message or inquiry

In the case of large organizations, the contact page may provide information for several offices (headquarters, field offices, etc.) and departments (customer support, sales, investor relations, press relations, etc.).

See also

* Home page
* Site map
