|

Shared Memory (interprocess Communication)

In computer science, shared memory is memory that may be simultaneously accessed by multiple programs with an intent to provide communication among them or avoid redundant copies. Shared memory is an efficient means of passing data between programs. Depending on context, programs may run on a single processor or on multiple separate processors. Using memory for communication inside a single program, e.g. among its multiple threads, is also referred to as shared memory. In hardware In computer hardware, ''shared memory'' refers to a (typically large) block of random access memory (RAM) that can be accessed by several different central processing units (CPUs) in a multiprocessor computer system. Shared memory systems may use: * uniform memory access (UMA): all the processors share the physical memory uniformly; * non-uniform memory access (NUMA): memory access time depends on the memory location relative to a processor; * cache-only memory architecture (COMA): the local memor ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Shared Memory

In computer science, shared memory is memory that may be simultaneously accessed by multiple programs with an intent to provide communication among them or avoid redundant copies. Shared memory is an efficient means of passing data between programs. Depending on context, programs may run on a single processor or on multiple separate processors. Using memory for communication inside a single program, e.g. among its multiple threads, is also referred to as shared memory. In hardware In computer hardware, ''shared memory'' refers to a (typically large) block of random access memory (RAM) that can be accessed by several different central processing units (CPUs) in a multiprocessor computer system. Shared memory systems may use: * uniform memory access (UMA): all the processors share the physical memory uniformly; * non-uniform memory access (NUMA): memory access time depends on the memory location relative to a processor; * cache-only memory architecture (COMA): the local memo ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Front-side Bus

A front-side bus (FSB) is a computer communication interface (bus) that was often used in Intel-chip-based computers during the 1990s and 2000s. The EV6 bus served the same function for competing AMD CPUs. Both typically carry data between the central processing unit (CPU) and a memory controller hub, known as the northbridge. Depending on the implementation, some computers may also have a back-side bus that connects the CPU to the cache. This bus and the cache connected to it are faster than accessing the system memory (or RAM) via the front-side bus. The speed of the front side bus is often used as an important measure of the performance of a computer. The original front-side bus architecture has been replaced by HyperTransport, Intel QuickPath Interconnect or Direct Media Interface in modern volume CPUs. History The term came into use by Intel Corporation about the time the Pentium Pro and Pentium II products were announced, in the 1990s. "Front side" refers to the extern ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Unix Domain Socket

A Unix domain socket aka UDS or IPC socket ( inter-process communication socket) is a data communications endpoint for exchanging data between processes executing on the same host operating system. It is also referred to by its address family AF_UNIX. Valid socket types in the UNIX domain are: * SOCK_STREAM (compare to TCP) – for a stream-oriented socket * SOCK_DGRAM (compare to UDP) – for a datagram-oriented socket that preserves message boundaries (as on most UNIX implementations, UNIX domain datagram sockets are always reliable and don't reorder datagrams) * SOCK_SEQPACKET (compare to SCTP) – for a sequenced-packet socket that is connection-oriented, preserves message boundaries, and delivers messages in the order that they were sent The Unix domain socket facility is a standard component of POSIX operating systems. The API for Unix domain sockets is similar to that of an Internet socket, but rather than using an underlying network protocol, all communication occurs enti ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Named Pipe

In computing, a named pipe (also known as a FIFO for its behavior) is an extension to the traditional pipe concept on Unix and Unix-like systems, and is one of the methods of inter-process communication (IPC). The concept is also found in OS/2 and Microsoft Windows, although the semantics differ substantially. A traditional pipe is " unnamed" and lasts only as long as the process. A named pipe, however, can last as long as the system is up, beyond the life of the process. It can be deleted if no longer used. Usually a named pipe appears as a file, and generally processes attach to it for IPC. In Unix Instead of a conventional, unnamed, shell pipeline, a named pipeline makes use of the filesystem. It is explicitly created using mkfifo() or mknod(), and two separate processes can access the pipe by name — one process can open it as a reader, and the other as a writer. For example, one can create a pipe and set up gzip to compress things piped to it: mkfifo my_pipe gzip -9 ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Execute In Place

In computer science, execute in place (XIP) is a method of executing programs directly from long-term storage rather than copying it into RAM. It is an extension of using shared memory to reduce the total amount of memory required. Its general effect is that the program text consumes no writable memory, saving it for dynamic data, and that all instances of the program are run from a single copy. For this to work, several criteria have to be met: * The storage must provide a similar interface to the CPU as regular memory (or an adaptive layer must be present). * This interface must provide sufficiently fast read operations with a random access pattern. * The file system, if one is used, needs to expose appropriate mapping functions. * The program must either be linked to be aware of the address the storage appears at in the system or be position-independent. * The program must not modify data within the loaded image. The storage requirements are usually met by using NOR flash ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Shared Library

In computer science, a library is a collection of non-volatile resources used by computer programs, often for software development. These may include configuration data, documentation, help data, message templates, pre-written code and subroutines, classes, values or type specifications. In IBM's OS/360 and its successors they are referred to as partitioned data sets. A library is also a collection of implementations of behavior, written in terms of a language, that has a well-defined interface by which the behavior is invoked. For instance, people who want to write a higher-level program can use a library to make system calls instead of implementing those system calls over and over again. In addition, the behavior is provided for reuse by multiple independent programs. A program invokes the library-provided behavior via a mechanism of the language. For example, in a simple imperative language such as C, the behavior in a library is invoked by using C's normal function- ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Virtual Memory

In computing, virtual memory, or virtual storage is a memory management technique that provides an "idealized abstraction of the storage resources that are actually available on a given machine" which "creates the illusion to users of a very large (main) memory". The computer's operating system, using a combination of hardware and software, maps memory addresses used by a program, called '' virtual addresses'', into ''physical addresses'' in computer memory. Main storage, as seen by a process or task, appears as a contiguous address space or collection of contiguous segments. The operating system manages virtual address spaces and the assignment of real memory to virtual memory. Address translation hardware in the CPU, often referred to as a memory management unit (MMU), automatically translates virtual addresses to physical addresses. Software within the operating system may extend these capabilities, utilizing, e.g., disk storage, to provide a virtual address space that ca ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Random-access Memory

Random-access memory (RAM; ) is a form of computer memory that can be read and changed in any order, typically used to store working Data (computing), data and machine code. A Random access, random-access memory device allows data items to be read (computer), read or written in almost the same amount of time irrespective of the physical location of data inside the memory, in contrast with other direct-access data storage media (such as hard disks, CD-RWs, DVD-RWs and the older Magnetic tape data storage, magnetic tapes and drum memory), where the time required to read and write data items varies significantly depending on their physical locations on the recording medium, due to mechanical limitations such as media rotation speeds and arm movement. RAM contains multiplexer, multiplexing and demultiplexing circuitry, to connect the data lines to the addressed storage for reading or writing the entry. Usually more than one bit of storage is accessed by the same address, and RAM ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Process (computing)

In computing, a process is the instance of a computer program that is being executed by one or many threads. There are many different process models, some of which are light weight, but almost all processes (even entire virtual machines) are rooted in an operating system (OS) process which comprises the program code, assigned system resources, physical and logical access permissions, and data structures to initiate, control and coordinate execution activity. Depending on the OS, a process may be made up of multiple threads of execution that execute instructions concurrently. While a computer program is a passive collection of instructions typically stored in a file on disk, a process is the execution of those instructions after being loaded from the disk into memory. Several processes may be associated with the same program; for example, opening up several instances of the same program often results in more than one process being executed. Multitasking is a method to allow ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Inter-process Communication

In computer science, inter-process communication or interprocess communication (IPC) refers specifically to the mechanisms an operating system provides to allow the processes to manage shared data. Typically, applications can use IPC, categorized as clients and servers, where the client requests data and the server responds to client requests. Many applications are both clients and servers, as commonly seen in distributed computing. IPC is very important to the design process for microkernels and nanokernels, which reduce the number of functionalities provided by the kernel. Those functionalities are then obtained by communicating with servers via IPC, leading to a large increase in communication when compared to a regular monolithic kernel. IPC interfaces generally encompass variable analytic framework structures. These processes ensure compatibility between the multi-vector protocols upon which IPC models rely. An IPC mechanism is either synchronous or asynchronous. Synchr ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

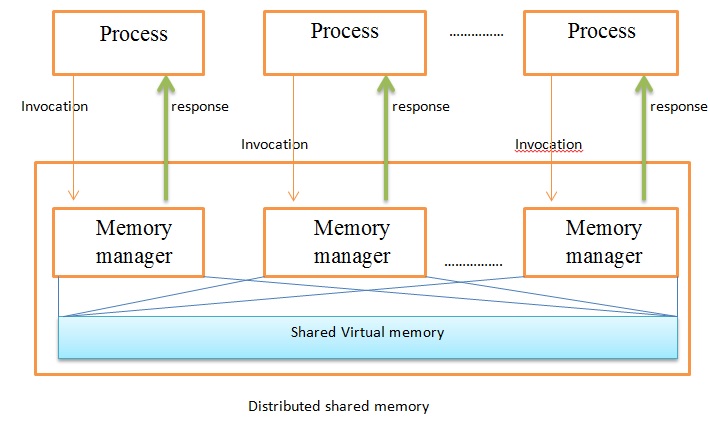

Distributed Shared Memory

In computer science, distributed shared memory (DSM) is a form of memory architecture where physically separated memories can be addressed as a single shared address space. The term "shared" does not mean that there is a single centralized memory, but that the address space is shared—i.e., the same physical address on two processors refers to the same location in memory. Distributed global address space (DGAS), is a similar term for a wide class of software and hardware implementations, in which each node of a cluster has access to shared memory in addition to each node's private (i.e., not shared) memory. Overview A distributed-memory system, often called a multicomputer, consists of multiple independent processing nodes with local memory modules which is connected by a general interconnection network. Software DSM systems can be implemented in an operating system, or as a programming library and can be thought of as extensions of the underlying virtual memory architecture ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Distributed Memory

In computer science, distributed memory refers to a multiprocessor computer system in which each processor has its own private memory. Computational tasks can only operate on local data, and if remote data are required, the computational task must communicate with one or more remote processors. In contrast, a shared memory multiprocessor offers a single memory space used by all processors. Processors do not have to be aware where data resides, except that there may be performance penalties, and that race conditions are to be avoided. In a distributed memory system there is typically a processor, a memory, and some form of interconnection that allows programs on each processor to interact with each other. The interconnect can be organised with point to point links or separate hardware can provide a switching network. The network topology is a key factor in determining how the multiprocessor machine scales. The links between nodes can be implemented using some standard network pro ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |