
Sensor Noise

Image noise is random variation of brightness or color information in images, and is usually an aspect of electronic noise. It can be produced by the image sensor and circuitry of a scanner or digital camera. Image noise can also originate in film grain and in the unavoidable shot noise of an ideal photon detector. Image noise is an undesirable by-product of image capture that obscures the desired information. Typically the term "image noise" is used to refer to noise in 2D images, not 3D images. The original meaning of "noise" was "unwanted signal"; unwanted electrical fluctuations in signals received by AM radios caused audible acoustic noise ("static"). By analogy, unwanted electrical fluctuations are also called "noise". Image noise can range from almost imperceptible specks on a digital photograph taken in good light, to optical and radioastronomical images that are almost entirely noise, from which a small amount of information can be derived by sophisticated proce ...

Fat Tail

A fat-tailed distribution is a probability distribution that exhibits a large skewness or kurtosis, relative to that of either a normal distribution or an exponential distribution. In common usage, the terms fat-tailed and heavy-tailed are sometimes synonymous; fat-tailed is sometimes also defined as a subset of heavy-tailed. Different research communities favor one or the other largely for historical reasons, and may have differences in the precise definition of either. Fat-tailed distributions have been empirically encountered in a variety of areas: physics, earth sciences, economics and political science. The class of fat-tailed distributions includes those whose tails decay like a power law, which is a common point of reference in their use in the scientific literature. However, fat-tailed distributions also include other slowly-decaying distributions, such as the log-normal. The extreme case: a power-law distribution. The most extreme case of a fat tail is given by a distri ...

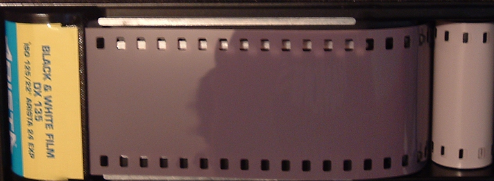

Photographic Film

Photographic film is a strip or sheet of transparent film base coated on one side with a gelatin emulsion containing microscopically small light-sensitive silver halide crystals. The sizes and other characteristics of the crystals determine the sensitivity, contrast, and resolution of the film. The emulsion will gradually darken if left exposed to light, but the process is too slow and incomplete to be of any practical use. Instead, a very short exposure to the image formed by a camera lens is used to produce only a very slight chemical change, proportional to the amount of light absorbed by each crystal. This creates an invisible latent image in the emulsion, which can be chemically developed into a visible photograph. In addition to visible light, all films are sensitive to ultraviolet light, X-rays, gamma rays, and high-energy particles. Unmodified silver halide crystals are sensitive only to the blue part of the visible spectrum, producing unnatural-looking rendit ...

Dithering

Dither is an intentionally applied form of noise used to randomize quantization error, preventing large-scale patterns such as color banding in images. Dither is routinely used in processing of both digital audio and video data, and is often one of the last stages of mastering audio to a CD. A common use of dither is converting a grayscale image to black and white, such that the density of black dots in the new image approximates the average gray level in the original. Etymology. The term *dither* was published in books on analog computation and hydraulically controlled guns shortly after World War II. Though he did not use the term *dither*, the concept of dithering to reduce quantization patterns was first applied by Lawrence G. Roberts in his 1961 MIT master's thesis and 1962 article. By 1964 dither was being used in the modern sense described in this article. The technique was in use at least as early as 1915, though not under the name *dither*. In digita ...
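The grayscale-to-black-and-white use mentioned above can be illustrated with random (white-noise) dithering, which is only one of several dithering techniques and is chosen here for brevity. In this minimal sketch the function name `random_dither`, the 100×100 flat test image, and the convention that gray levels lie in [0, 1] with 1 meaning white are all assumptions made for illustration.

```python
import random


def random_dither(gray, rng):
    """Threshold each pixel against a fresh uniform random number.

    gray: rows of gray levels in [0, 1], where 1.0 is white (assumed convention).
    Returns rows of 0 (black) or 1 (white).
    """
    return [[1 if pixel > rng.random() else 0 for pixel in row] for row in gray]


rng = random.Random(0)  # fixed seed so the run is repeatable

# Hypothetical test image: a flat patch at 25% brightness.
flat_gray = [[0.25] * 100 for _ in range(100)]

dithered = random_dither(flat_gray, rng)

# The share of white dots should sit close to the original gray level.
white_fraction = sum(map(sum, dithered)) / (100 * 100)
print(f"white fraction: {white_fraction:.3f}")
```

A fixed threshold at 0.5 would turn the whole patch black; randomizing the threshold is what lets the dot density track the gray level.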

Uniform Distribution (continuous)

In probability theory and statistics, the continuous uniform distribution or rectangular distribution is a family of symmetric probability distributions. The distribution describes an experiment where there is an arbitrary outcome that lies between certain bounds. The bounds are defined by the parameters, *a* and *b*, which are the minimum and maximum values. The interval can either be closed (e.g. [a, b]) or open (e.g. (a, b)). Therefore, the distribution is often abbreviated *U*(*a*, *b*), where U stands for uniform distribution. The difference between the bounds defines the interval length; all intervals of the same length on the distribution's support are equally probable. It is the maximum entropy probability distribution for a random variable *X* under no constraint other than that it is contained in the distribution's support. Definitions. Probability density function. The probability density function of the continuous uniform distribution is: f(x)=\be ...

Quantization (image Processing)

Quantization, involved in image processing, is a lossy compression technique achieved by compressing a range of values to a single quantum (discrete) value. When the number of discrete symbols in a given stream is reduced, the stream becomes more compressible. For example, reducing the number of colors required to represent a digital image makes it possible to reduce its file size. Specific applications include DCT data quantization in JPEG and DWT data quantization in JPEG 2000. Color quantization. Color quantization reduces the number of colors used in an image; this is important for displaying images on devices that support a limited number of colors and for efficiently compressing certain kinds of images. Most bitmap editors and many operating systems have built-in support for color quantization. Popular modern color quantization algorithms include the nearest color algorithm (for fixed palettes), the median cut algorithm, and an algorithm based on octrees. It is common ...

Quantization (signal Processing)

Quantization, in mathematics and digital signal processing, is the process of mapping input values from a large set (often a continuous set) to output values in a (countable) smaller set, often with a finite number of elements. Rounding and truncation are typical examples of quantization processes. Quantization is involved to some degree in nearly all digital signal processing, as the process of representing a signal in digital form ordinarily involves rounding. Quantization also forms the core of essentially all lossy compression algorithms. The difference between an input value and its quantized value (such as round-off error) is referred to as quantization error. A device or algorithmic function that performs quantization is called a quantizer. An analog-to-digital converter is an example of a quantizer. Example. For example, rounding a real number x to the nearest integer value forms a very basic type of quantizer, a *uniform* one. A typical (*mid-tread*) uni ...
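Rounding as a quantizer, and the resulting quantization error, can be sketched in a few lines. The function name `quantize`, the `step` parameter, the tie-breaking rule (ties round up), and the sample values are assumptions made for illustration and are not taken from the entry.

```python
import math


def quantize(x: float, step: float = 1.0) -> float:
    """Round x to the nearest multiple of step; exact ties round up."""
    return step * math.floor(x / step + 0.5)


samples = [0.2, 1.49, 2.5, -0.7]  # hypothetical input values
quantized = [quantize(x) for x in samples]

# Quantization error: input value minus its quantized value.
errors = [x - q for x, q in zip(samples, quantized)]

for x, q, e in zip(samples, quantized, errors):
    print(f"{x:>6} -> {q:>5}  error {e:+.2f}")
```

With a step of 1 the error never exceeds half a step in magnitude, which is the defining property of rounding to the nearest level.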

Standard Deviation

In statistics, the standard deviation is a measure of the amount of variation or dispersion of a set of values. A low standard deviation indicates that the values tend to be close to the mean (also called the expected value) of the set, while a high standard deviation indicates that the values are spread out over a wider range. Standard deviation may be abbreviated SD, and is most commonly represented in mathematical texts and equations by the lower case Greek letter σ (sigma), for the population standard deviation, or the Latin letter *s*, for the sample standard deviation. The standard deviation of a random variable, sample, statistical population, data set, or probability distribution is the square root of its variance. It is algebraically simpler, though in practice less robust, than the average absolute deviation. A useful property of the standard deviation is that, unlike the variance, it is expressed in the same unit as the data. The standard deviation o ...
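The distinction between the population standard deviation (σ) and the sample standard deviation (*s*), and the relation to the variance, can be checked with Python's standard `statistics` module. The data set below is a made-up illustration, not taken from the entry.

```python
import math
import statistics

values = [2, 4, 4, 4, 5, 5, 7, 9]  # hypothetical data set

# Population SD (sigma): divides the squared deviations by n.
population_sd = statistics.pstdev(values)

# Sample SD (s): divides by n - 1, so it is slightly larger here.
sample_sd = statistics.stdev(values)

# The standard deviation is the square root of the variance.
variance = statistics.pvariance(values)

print(population_sd, sample_sd, math.sqrt(variance))
```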

Poisson Distribution

In probability theory and statistics, the Poisson distribution is a discrete probability distribution that expresses the probability of a given number of events occurring in a fixed interval of time or space if these events occur with a known constant mean rate and independently of the time since the last event. It is named after French mathematician Siméon Denis Poisson. The Poisson distribution can also be used for the number of events in other specified interval types such as distance, area, or volume. For instance, a call center receives an average of 180 calls per hour, 24 hours a day. The calls are independent; receiving one does not change the probability of when the next one will arrive. The number of calls received during any minute has a Poisson probability distribution with mean 3: the most likely numbers are 2 and 3 but 1 and 4 are also likely and there is a small probability of it being as low as zero and a very small probability it could be 10. ...
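The call-center figures can be verified directly from the Poisson probability mass function, P(K = k) = e^(-λ) λ^k / k!. The sketch below uses only the standard library; the helper name `poisson_pmf` is introduced here for illustration.

```python
import math


def poisson_pmf(k: int, mean: float) -> float:
    """Probability of exactly k events when the expected count is `mean`."""
    return math.exp(-mean) * mean**k / math.factorial(k)


calls_per_hour = 180
mean_per_minute = calls_per_hour / 60  # 3 calls per minute on average

# Probability of 0 through 10 calls in a given minute.
probabilities = {k: poisson_pmf(k, mean_per_minute) for k in range(11)}

for k, p in probabilities.items():
    print(f"P({k:>2} calls) = {p:.4f}")
```

Counts of 2 and 3 come out equally likely (about 0.22 each), zero calls has probability of roughly 0.05, and ten calls is below 0.001, in line with the description above.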

LCD Monitor

A thin-film-transistor liquid-crystal display (TFT LCD) is a variant of a liquid-crystal display that uses thin-film-transistor technology to improve image qualities such as addressability and contrast. A TFT LCD is an active matrix LCD, in contrast to passive matrix LCDs or simple, direct-driven (i.e. with segments directly connected to electronics outside the LCD) LCDs with a few segments. TFT LCDs are used in appliances including television sets, computer monitors, mobile phones, handheld devices, video game systems, personal digital assistants, navigation systems, projectors, and dashboards in automobiles. History. In February 1957, John Wallmark of RCA filed a patent for a thin film MOSFET. Paul K. Weimer, also of RCA, implemented Wallmark's ideas and developed the thin-film transistor (TFT) in 1962, a type of MOSFET distinct from the standard bulk MOSFET. It was made with thin films of cadmium selenide and cadmium sulfide. The idea of a TFT-based liquid-crystal display ...

Mean

There are several kinds of mean in mathematics, especially in statistics. Each mean serves to summarize a given group of data, often to better understand the overall value (magnitude and sign) of a given data set. For a data set, the *arithmetic mean*, also known as "arithmetic average", is a measure of central tendency of a finite set of numbers: specifically, the sum of the values divided by the number of values. The arithmetic mean of a set of numbers x_1, x_2, ..., x_n is typically denoted using an overhead bar, \bar{x}. If the data set were based on a series of observations obtained by sampling from a statistical population, the arithmetic mean is the *sample mean* (\bar{x}) to distinguish it from the mean, or expected value, of the underlying distribution, the *population mean* (denoted \mu or \mu_x) (Underhill, L.G.; Bradfield, D. (1998), *Introstat*, Juta and Company Ltd., p. 181). Outside probability and statistics, a wide range of other notions of m ...

Median

In statistics and probability theory, the median is the value separating the higher half from the lower half of a data sample, a population, or a probability distribution. For a data set, it may be thought of as "the middle" value. The basic feature of the median in describing data compared to the mean (often simply described as the "average") is that it is not skewed by a small proportion of extremely large or small values, and therefore provides a better representation of a "typical" value. Median income, for example, may be a better way to suggest what a "typical" income is, because income distribution can be very skewed. The median is of central importance in robust statistics, as it is the most resistant statistic, having a breakdown point of 50%: so long as no more than half the data are contaminated, the median is not an arbitrarily large or small result. Finite data set of numbers. The median of a finite list of numbers is the "middle" number, when those numbers are list ...
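The robustness claim can be seen on a small skewed data set. The income figures below are invented for illustration; the calls are from Python's standard `statistics` module.

```python
import statistics

# Hypothetical incomes (in thousands): five typical values and one extreme one.
incomes = [30, 32, 35, 38, 40, 1000]

mean_income = statistics.mean(incomes)      # pulled upward by the outlier
median_income = statistics.median(incomes)  # average of the two middle values

print(f"mean:   {mean_income:.1f}")
print(f"median: {median_income:.1f}")
```

The single extreme value drags the mean far above every typical income, while the median stays between 35 and 38.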