
Semiparametric Regression

In statistics, semiparametric regression includes regression models that combine parametric and nonparametric models. They are often used in situations where the fully nonparametric model may not perform well or when the researcher wants to use a parametric model but the functional form with respect to a subset of the regressors or the density of the errors is not known. Semiparametric regression models are a particular type of semiparametric modelling and, since semiparametric models contain a parametric component, they rely on parametric assumptions and may be misspecified and inconsistent, just like a fully parametric model. Methods: Many different semiparametric regression methods have been proposed and developed. The most popular methods are the partially linear, index and varying coefficient models. Partially linear models: A partially linear model is given by : Y_i = X'_i \beta + g\left(Z_i \right) + u_i, \quad i = 1,\ldots,n, where Y_i is the dependent ...

Statistics

Statistics (from German: Statistik, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments (Dodge, Y. (2006) The Oxford Dictionary of Statistical Terms, Oxford University Press). When census data cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample to the population as a whole. An ex ...

Regression Analysis

In statistical modeling, regression analysis is a set of statistical processes for estimating the relationships between a dependent variable (often called the 'outcome' or 'response' variable, or a 'label' in machine learning parlance) and one or more independent variables (often called 'predictors', 'covariates', 'explanatory variables' or 'features'). The most common form of regression analysis is linear regression, in which one finds the line (or a more complex linear combination) that most closely fits the data according to a specific mathematical criterion. For example, the method of ordinary least squares computes the unique line (or hyperplane) that minimizes the sum of squared differences between the true data and that line (or hyperplane). For specific mathematical reasons (see linear regression), this allows the researcher to estimate the conditional expectation (or population average value) of the dependent variable when the independent variables take on a given ...
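
The ordinary least squares criterion mentioned above can be sketched for the simplest case of one predictor, where the minimizing line has a closed form. This is a minimal illustration, not a general implementation: the function name `ols_line` and the toy data are assumptions made for this example.

```python
def ols_line(xs, ys):
    """Return (intercept, slope) of the line minimizing the sum of squared residuals."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    # Centered sums of squares and cross-products.
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope


# Hypothetical data lying exactly on y = 1 + 2x.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
intercept, slope = ols_line(xs, ys)
```

Because the toy data lie exactly on a line, the fitted intercept and slope recover that line; with noisy data the same formulas give the least squares fit.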

Parametric Model

In statistics, a parametric model or parametric family or finite-dimensional model is a particular class of statistical models. Specifically, a parametric model is a family of probability distributions that has a finite number of parameters. Definition: A statistical model is a collection of probability distributions on some sample space. We assume that the collection, \mathcal{P}, is indexed by some set \Theta. The set \Theta is called the parameter set or, more commonly, the parameter space. For each \theta \in \Theta, let F_\theta denote the corresponding member of the collection; so F_\theta is a cumulative distribution function. Then a statistical model can be written as : \mathcal{P} = \big\{ F_\theta \ \big|\ \theta \in \Theta \big\}. The model is a parametric model if \Theta \subseteq \mathbb{R}^k for some positive integer k. When the model consists of absolutely continuous distributions, it is often specified in terms of corresponding probability density functions: : \mathcal{P} = \big\{ f_\theta \ \big|\ \theta \in \Theta \big\}. Examples: * The Poisson family of distributions is parametrized by a single number \lambda > 0: ...

Kernel Regression

In statistics, kernel regression is a non-parametric technique to estimate the conditional expectation of a random variable. The objective is to find a non-linear relation between a pair of random variables X and Y. In any nonparametric regression, the conditional expectation of a variable Y relative to a variable X may be written: : \operatorname{E}(Y \mid X) = m(X) where m is an unknown function. Nadaraya–Watson kernel regression: Nadaraya and Watson, both in 1964, proposed to estimate m as a locally weighted average, using a kernel as a weighting function. The Nadaraya–Watson estimator is: : \widehat{m}_h(x) = \frac{\sum_{i=1}^n K_h(x - x_i) y_i}{\sum_{i=1}^n K_h(x - x_i)} where K_h is a kernel with a bandwidth h. Derivation: : \operatorname{E}(Y \mid X=x) = \int y f(y \mid x) \, dy = \int y \frac{f(x,y)}{f(x)} \, dy Using the kernel density estimation for the joint distribution f(x,y) and f(x) with a kernel K, : \hat{f}(x,y) = \frac{1}{n} \sum_{i=1}^n K_h(x-x_i) K_h(y-y_i), : \hat{f}(x) = \frac{1}{n} \sum_{i=1}^n K_h(x-x_i), we ...
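
The locally weighted average behind the Nadaraya–Watson estimator can be sketched as follows. The Gaussian kernel, the scaling K_h(u) = K(u/h)/h, and the names `gaussian_kernel` and `nadaraya_watson` are assumptions chosen for illustration; other kernels work the same way.

```python
import math


def gaussian_kernel(u):
    """Standard normal density, used here as the kernel K."""
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)


def nadaraya_watson(x, xs, ys, h):
    """Estimate m(x) as a kernel-weighted average of the observed ys."""
    # K_h(x - x_i) = K((x - x_i) / h) / h, with bandwidth h > 0.
    weights = [gaussian_kernel((x - xi) / h) / h for xi in xs]
    total = sum(weights)
    # No guard for total == 0 (x far from every x_i); a real routine needs one.
    return sum(w * y for w, y in zip(weights, ys)) / total


# Hypothetical data: at x = 1 the weights are symmetric around the middle point.
xs = [0.0, 1.0, 2.0]
ys = [1.0, 2.0, 3.0]
m_hat = nadaraya_watson(1.0, xs, ys, h=0.5)
```

A smaller bandwidth h makes the estimate follow nearby observations more closely, while a larger h averages over more of the sample.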

Semiparametric Model

In statistics, a semiparametric model is a statistical model that has parametric and nonparametric components. A statistical model is a parameterized family of distributions: \{P_\theta : \theta \in \Theta\} indexed by a parameter \theta.
* A parametric model is a model in which the indexing parameter \theta is a vector in k-dimensional Euclidean space, for some nonnegative integer k. Thus, \theta is finite-dimensional, and \Theta \subseteq \mathbb{R}^k.
* With a nonparametric model, the set of possible values of the parameter \theta is a subset of some space V, which is not necessarily finite-dimensional. For example, we might consider the set of all distributions with mean 0. Such spaces are vector spaces with topological structure, but may not be finite-dimensional as vector spaces. Thus, \Theta \subseteq V for some possibly infinite-dimensional space V.
* With a semiparametric model, the parameter has both a finite-dimensional component and an infinite-dimensional component (often a real-valued functio ...

Specification (regression)

In statistics, model specification is part of the process of building a statistical model: specification consists of selecting an appropriate functional form for the model and choosing which variables to include. For example, given personal income y together with years of schooling s and on-the-job experience x, we might specify a functional relationship y = f(s,x) as follows: : \ln y = \ln y_0 + \rho s + \beta_1 x + \beta_2 x^2 + \varepsilon where \varepsilon is the unexplained error term that is supposed to comprise independent and identically distributed Gaussian variables. The statistician Sir David Cox has said, "How [the] translation from subject-matter problem to statistical model is done is often the most critical part of an analysis". Specification error and bias: Specification error occurs when the functional form or the choice of independent variables poorly represents relevant aspects of the true data-generating process. In particular, bias (the expected value of the di ...

Consistent Estimator

In statistics, a consistent estimator or asymptotically consistent estimator is an estimator (a rule for computing estimates of a parameter θ_0) having the property that as the number of data points used increases indefinitely, the resulting sequence of estimates converges in probability to θ_0. This means that the distributions of the estimates become more and more concentrated near the true value of the parameter being estimated, so that the probability of the estimator being arbitrarily close to θ_0 converges to one. In practice one constructs an estimator as a function of an available sample of size n, and then imagines being able to keep collecting data and expanding the sample ad infinitum. In this way one would obtain a sequence of estimates indexed by n, and consistency is a property of what occurs as the sample size "grows to infinity". If the sequence of estimates can be mathematically shown to converge in probability to the true value ...
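
The idea of a sequence of estimates indexed by n can be illustrated with a small simulation. The sketch below uses the sample mean of uniform(0, 1) draws as the estimator of the true mean 0.5; the choice of distribution, the seed, and the sample sizes are assumptions for this example, and a simulation illustrates consistency without proving it.

```python
import random


def sample_mean(n, rng):
    """Sample mean of n independent uniform(0, 1) draws."""
    return sum(rng.random() for _ in range(n)) / n


rng = random.Random(0)  # fixed seed so the run is reproducible
true_mean = 0.5

# Absolute estimation error for increasing sample sizes n.
errors = {n: abs(sample_mean(n, rng) - true_mean) for n in (10, 1000, 100000)}
```

For any single run the errors need not shrink monotonically, but the estimates concentrate around the true value as n grows, which is what convergence in probability describes.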

Partially Linear Model

A partially linear model is a form of semiparametric model, since it contains parametric and nonparametric elements. Least squares estimators can be applied to a partially linear model if the hypothesis that the nonparametric element is known is valid. Partially linear equations were first used in the analysis of the relationship between temperature and usage of electricity by Engle, Granger, Rice and Weiss (1986). A typical application of the partially linear model in microeconomics was presented by Tripathi in 1997, in a study of the profitability of firms' production. The partially linear model has also been applied successfully in other academic fields. In 1994, Zeger and Diggle introduced the partially linear model into bio ...

Heteroskedastic

In statistics, a sequence (or a vector) of random variables is homoscedastic if all its random variables have the same finite variance. This is also known as homogeneity of variance. The complementary notion is called heteroscedasticity. The spellings homoskedasticity and heteroskedasticity are also frequently used. Assuming a variable is homoscedastic when in reality it is heteroscedastic results in unbiased but inefficient point estimates and in biased estimates of standard errors, and may result in overestimating the goodness of fit as measured by the Pearson coefficient. The existence of heteroscedasticity is a major concern in regression analysis and the analysis of variance, as it invalidates statistical tests of significance that assume that the modelling errors all have the same variance. While the ordinary least squares estimator is still unbiased in the presence of heteroscedasticity, it is inefficient and generalized least squares should be used ...

Non-linear Least Squares

Non-linear least squares is the form of least squares analysis used to fit a set of m observations with a model that is non-linear in n unknown parameters (m ≥ n). It is used in some forms of nonlinear regression. The basis of the method is to approximate the model by a linear one and to refine the parameters by successive iterations. There are many similarities to linear least squares, but also some significant differences. In economic theory, the non-linear least squares method is applied in (i) the probit regression, (ii) threshold regression, (iii) smooth regression, (iv) logistic link regression, and (v) Box-Cox transformed regressors (m(x,\theta_i) = \theta_1 + \theta_2 x^{(\theta_3)}). Theory: Consider a set of m data points, (x_1, y_1), (x_2, y_2), \dots, (x_m, y_m), and a curve (model function) \hat{y} = f(x, \boldsymbol \beta), that in addition to the variable x also depends on n parameters, \boldsymbol \beta = (\beta_1, \beta_2, \dots, \beta_n), with m\ge n. ...
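
One common way to "approximate the model by a linear one and refine the parameters by successive iterations" is the Gauss-Newton iteration. The sketch below applies it to a hypothetical two-parameter model y = a * exp(b * x); the model, the noise-free data, the starting values, and the name `gauss_newton_exp` are all assumptions for illustration, and the loop has no step control or convergence check.

```python
import math


def gauss_newton_exp(xs, ys, a, b, iterations=20):
    """Fit y = a * exp(b * x) by Gauss-Newton, starting from (a, b)."""
    for _ in range(iterations):
        e = [math.exp(b * x) for x in xs]
        # Residuals of the current fit.
        r = [y - a * ei for y, ei in zip(ys, e)]
        # Jacobian columns: derivative of the model with respect to a and b.
        ja = e
        jb = [a * x * ei for x, ei in zip(xs, e)]
        # Normal equations (J^T J) delta = J^T r of the linearized problem.
        saa = sum(v * v for v in ja)
        sab = sum(u * v for u, v in zip(ja, jb))
        sbb = sum(v * v for v in jb)
        ga = sum(u * v for u, v in zip(ja, r))
        gb = sum(u * v for u, v in zip(jb, r))
        det = saa * sbb - sab * sab
        # Solve the 2x2 system by Cramer's rule and update the parameters.
        a += (ga * sbb - sab * gb) / det
        b += (saa * gb - sab * ga) / det
    return a, b


# Hypothetical noise-free data generated with a = 2 and b = 0.5.
xs = [0.0, 0.5, 1.0, 1.5, 2.0]
ys = [2.0 * math.exp(0.5 * x) for x in xs]
a_hat, b_hat = gauss_newton_exp(xs, ys, a=1.8, b=0.45)
```

Unlike linear least squares, the result depends on the starting values: a poor initial guess can make the iteration diverge, which is why practical routines add damping or a line search.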

Kernel Density Estimation

In statistics, kernel density estimation (KDE) is the application of kernel smoothing for probability density estimation, i.e., a non-parametric method to estimate the probability density function of a random variable based on kernels as weights. KDE answers a fundamental data smoothing problem where inferences about the population are made, based on a finite data sample. In some fields such as signal processing and econometrics it is also termed the Parzen–Rosenblatt window method, after Emanuel Parzen and Murray Rosenblatt, who are usually credited with independently creating it in its current form. One of the famous applications of kernel density estimation is in estimating the class-conditional marginal densities of data when using a naive Bayes classifier, which can improve its prediction accuracy. Definition: Let (x_1, x_2, ..., x_n) be independent and identically distributed samples drawn from some univariate distribution with an unknown density ...
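
Since the definition is cut off above, the following sketch assumes the standard form of the estimator, the average of kernels centered at the samples and scaled by a bandwidth h. The Gaussian kernel, the fixed bandwidth, and the name `kde` are illustrative assumptions.

```python
import math


def kde(x, samples, h):
    """Kernel density estimate at x with a Gaussian kernel and bandwidth h."""
    n = len(samples)
    norm = 1.0 / (n * h * math.sqrt(2.0 * math.pi))
    # Sum of Gaussian bumps centered at each sample point.
    return norm * sum(math.exp(-0.5 * ((x - xi) / h) ** 2) for xi in samples)


# Hypothetical sample; check numerically that the estimate integrates to about 1.
samples = [-1.0, 0.0, 1.0]
step = 0.01
grid = [-6.0 + step * i for i in range(1201)]
area = sum(kde(x, samples, h=0.5) for x in grid) * step
```

The bandwidth h controls the smoothness of the estimate; it is fixed by hand here, whereas in practice it is usually chosen from the data.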

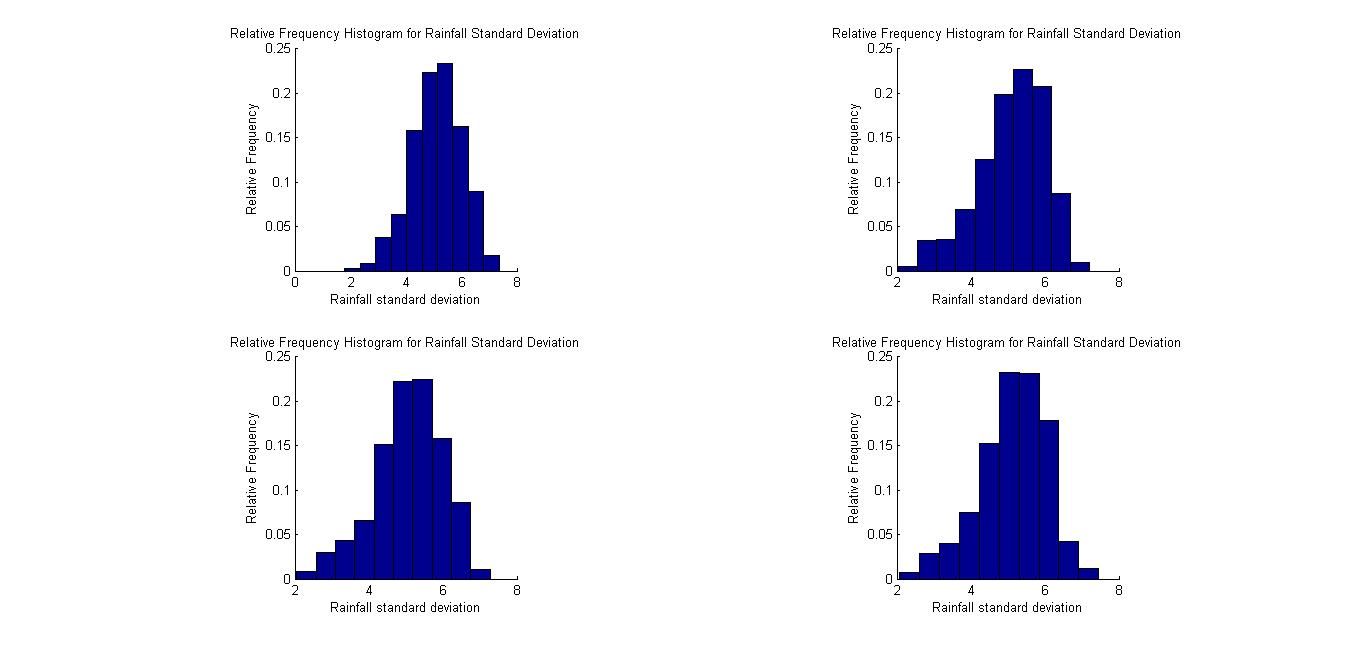

Resampling (statistics)

In statistics, resampling is the creation of new samples based on one observed sample. Resampling methods are: (1) permutation tests (also re-randomization tests), (2) bootstrapping, and (3) cross validation. Permutation tests: Permutation tests rely on resampling the original data assuming the null hypothesis. Based on the resampled data it can be concluded how likely the original data is to occur under the null hypothesis. Bootstrap: Bootstrapping is a statistical method for estimating the sampling distribution of an estimator by sampling with replacement from the original sample, most often with the purpose of deriving robust estimates of standard errors and confidence intervals of a population parameter like a mean, median, proportion, odds ratio, correlation coefficient or regression coefficient. It has been called the plug-in principle; see Logan, J. David and Wolesensky, Willian R. Mathematical methods in biology. Pure and Applied Mathematics: a Wiley-interscience Series of Texts, M ...
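
Sampling with replacement from the original sample, as described for the bootstrap above, can be sketched as follows to estimate the standard error of an estimator. The name `bootstrap_se`, the number of resamples, the seed, and the data are assumptions made for this example.

```python
import random
import statistics


def bootstrap_se(data, estimator, n_resamples=2000, seed=0):
    """Bootstrap estimate of the standard error of `estimator` on `data`."""
    rng = random.Random(seed)
    n = len(data)
    # Each replicate applies the estimator to a resample drawn with replacement.
    replicates = [estimator(rng.choices(data, k=n)) for _ in range(n_resamples)]
    return statistics.stdev(replicates)


# Hypothetical observed sample.
data = [2.1, 3.4, 1.9, 5.6, 4.4, 3.8, 2.7, 4.9, 3.1, 4.0]
se_mean = bootstrap_se(data, statistics.mean)
```

For the mean, the result can be compared with the plug-in value, the population standard deviation of the sample divided by the square root of n; the same function also accepts estimators such as `statistics.median`, for which no simple formula is available.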