Semantic Role Labelling

In natural language processing, semantic role labeling (also called shallow semantic parsing or slot-filling) is the process of assigning labels to words or phrases in a sentence that indicate their semantic role in the sentence, such as agent, goal, or result. It serves to find the meaning of the sentence. To do this, it detects the arguments associated with the predicate or verb of a sentence and classifies them into their specific roles. A common example is the sentence "Mary sold the book to John." The agent is "Mary", the predicate is "sold" (or rather, "to sell"), the theme is "the book", and the recipient is "John". As another example, "the book belongs to me" would need two labels such as "possessed" and "possessor", whereas "the book was sold to John" would need two other labels such as "theme" and "recipient", even though the two clauses look alike in terms of "subject" and "object" functions.

History: In 1968, the first idea for semantic role labeling was propos ...
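The role assignment in the "Mary sold the book to John" example can be written down as a small data structure. The following is only a minimal sketch: the `RoleSpan` class and the helper names are hypothetical, and the labels are assigned by hand rather than predicted by a model.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class RoleSpan:
    """One labelled word or phrase (hypothetical container, not a library type)."""
    role: str  # semantic role label, e.g. "agent"
    text: str  # the word or phrase that carries the role


def label_example() -> List[RoleSpan]:
    """Hand-assigned roles for the sentence "Mary sold the book to John."."""
    return [
        RoleSpan("agent", "Mary"),
        RoleSpan("predicate", "sold"),
        RoleSpan("theme", "the book"),
        RoleSpan("recipient", "John"),
    ]


def roles_by_label(spans: List[RoleSpan]) -> Dict[str, str]:
    """Index the spans by role so each role can be looked up directly."""
    return {span.role: span.text for span in spans}


frame = roles_by_label(label_example())
print(frame["agent"], "->", frame["recipient"])  # prints "Mary -> John"
```

A real labeler would have to predict these spans from the sentence; the sketch only shows the shape of the output it is expected to produce.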

Natural Language Processing

Natural language processing (NLP) is a subfield of computer science and especially artificial intelligence. It is primarily concerned with providing computers with the ability to process data encoded in natural language and is thus closely related to information retrieval, knowledge representation and computational linguistics, a subfield of linguistics. Major tasks in natural language processing are speech recognition, text classification, natural language understanding, and natural language generation.

History: Natural language processing has its roots in the 1950s. Already in 1950, Alan Turing published an article titled "Computing Machinery and Intelligence" which proposed what is now called the Turing test as a criterion of intelligence, though at the time that was not articulated as a problem separate from artificial intelligence. The proposed test includes a task that involves the automated interpretation and generation of natural language ...

PropBank

PropBank is a corpus that is annotated with verbal propositions and their arguments, a "proposition bank". Although "PropBank" refers to a specific corpus produced by Martha Palmer et al., the term "propbank" is also coming to be used as a common noun referring to any corpus that has been annotated with propositions and their arguments. The PropBank project has played a role in recent research in natural language processing, and has been used in semantic role labelling.

Comparison: PropBank differs from FrameNet, the resource to which it is most frequently compared, in several ways. PropBank is a verb-oriented resource, while FrameNet is centered on the more abstract notion of frames, which generalizes descriptions across similar verbs (e.g. "describe" and "characterize") as well as nouns and other words (e.g. "description"). PropBank does not annotate events or states of affairs described using nouns. PropBank commits to annotating all verbs in a corpus, whereas the ...

Grammar

In linguistics, grammar is the set of rules for how a natural language is structured, as demonstrated by its speakers or writers. Grammar rules may concern the use of clauses, phrases, and words. The term may also refer to the study of such rules, a subject that includes phonology, morphology, and syntax, together with phonetics, semantics, and pragmatics. There are, broadly speaking, two different ways to study grammar: traditional grammar and theoretical grammar. Fluency in a particular language variety involves a speaker internalizing these rules, many or most of which are acquired by observing other speakers, as opposed to intentional study or instruction. Much of this internalization occurs during early childhood; learning a language later in life usually involves more direct instruction. The term "grammar" can also describe the linguistic behaviour of groups of speakers and writer ...

Annotation

An annotation is extra information associated with a particular point in a document or other piece of information. It can be a note that includes a comment or explanation. Annotations are sometimes presented in the margin of book pages. For annotations of different digital media, see web annotation and text annotation.

Literature, grammar and educational purposes: practising visually. Annotation practices include highlighting a phrase or sentence and adding a comment, circling a word that needs defining, posing a question when something is not fully understood, and writing a short summary of a key section. Annotation also invites students to "(re)construct a history through material engagement and exciting DIY (Do-It-Yourself) annotation practices." Annotation practices that are available today offer a remarkable set of tools for students to begin to work in a more collaborative, connected way than was previously possible.

Text and film annotation: Text and Film A ...

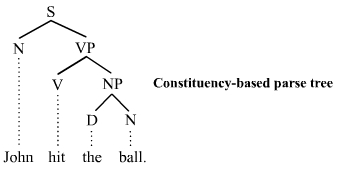

Concrete Syntax Tree

A parse tree or parsing tree (also known as a derivation tree or concrete syntax tree) is an ordered, rooted tree that represents the syntactic structure of a string according to some context-free grammar. The term "parse tree" itself is used primarily in computational linguistics; in theoretical syntax, the term "syntax tree" is more common. Concrete syntax trees reflect the syntax of the input language, making them distinct from the abstract syntax trees used in computer programming. Unlike Reed-Kellogg sentence diagrams used for teaching grammar, parse trees do not use distinct symbol shapes for different types of constituents. Parse trees are usually constructed based on either the constituency relation of constituency grammars (phrase structure grammars) or the dependency relation of dependency grammars. Parse trees may be generated for sentences in natural languages (see natural language processing), as well as during processing of computer languages, such as progra ...
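To make the "ordered, rooted tree" description concrete, here is a minimal sketch of a constituency parse tree built by hand. The `Node` class, the category labels (S, NP, VP, Det, N, V) and the example sentence are assumptions chosen for illustration; no parser is involved.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    """A tree node: interior nodes hold a category, leaves hold a word."""
    label: str
    children: List["Node"] = field(default_factory=list)

    def leaves(self) -> List[str]:
        """Collect the words at the leaves in left-to-right order."""
        if not self.children:
            return [self.label]
        words: List[str] = []
        for child in self.children:
            words.extend(child.leaves())
        return words


# Hand-built tree for "Mary sold the book" under a toy grammar:
# S -> NP VP, NP -> N | Det N, VP -> V NP
tree = Node("S", [
    Node("NP", [Node("N", [Node("Mary")])]),
    Node("VP", [
        Node("V", [Node("sold")]),
        Node("NP", [
            Node("Det", [Node("the")]),
            Node("N", [Node("book")]),
        ]),
    ]),
])

print(" ".join(tree.leaves()))  # prints "Mary sold the book"
```

Because the children are kept in order, reading the leaves from left to right recovers the original string, which is what distinguishes a concrete syntax tree from a more abstract representation.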

Lexical Semantics

Lexical semantics (also known as lexicosemantics), as a subfield of linguistic semantics, is the study of word meanings (Pustejovsky, J. (2005), "Lexical Semantics: Overview", in Encyclopedia of Language and Linguistics, second edition, Volumes 1-14; Taylor, J. (2017), "Lexical Semantics", in B. Dancygier (Ed.), The Cambridge Handbook of Cognitive Linguistics (Cambridge Handbooks in Language and Linguistics, pp. 246-261), Cambridge: Cambridge University Press). It includes the study of how words structure their meaning, how they act in grammar and compositionality, and the relationships between the distinct senses and uses of a word. The units of analysis in lexical semantics are lexical units, which include not only words but also sub-words or sub-units such as affixes, and even compound words and phrases. Lexical units include the catalogue of words in a language, the lexicon. Lexical semantics looks at how the meaning of the lexical units correl ...

Named Entity Recognition

Named-entity recognition (NER) (also known as (named) entity identification, entity chunking, and entity extraction) is a subtask of information extraction that seeks to locate and classify named entities mentioned in unstructured text into pre-defined categories such as person names (PER), organizations (ORG), locations (LOC), geopolitical entities (GPE), vehicles (VEH), medical codes, time expressions, quantities, monetary values, percentages, etc. Most research on NER/NEE systems has been structured as taking an unannotated block of text and transducing it into an annotated block of text that highlights the names of entities. In a typical example, a person name consisting of one token, a two-token company name and a temporal expression are detected and classified.

Problem Definition: In the expression "named entity", the word "named" restricts the task to those entities for which one or many strings, such as words or phrases, stand (fairly) consistently ...
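The step from an unannotated to an annotated block of text can be sketched with a toy tagger. Everything here is an assumption made for illustration: the sentence, the tiny `GAZETTEER` lookup table, the year pattern, and the `[text]LABEL` output format. Practical NER systems typically use statistical or neural models rather than exact string lookup.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical lookup table: surface string -> entity category.
GAZETTEER: Dict[str, str] = {
    "Jim": "PER",
    "Acme Corp.": "ORG",
}

# Very rough temporal pattern: four-digit years starting with 19 or 20.
YEAR = re.compile(r"\b(?:19|20)\d{2}\b")


def find_entities(text: str) -> List[Tuple[int, int, str]]:
    """Return (start, end, label) spans sorted by position.

    Overlapping matches are not resolved in this sketch.
    """
    spans: List[Tuple[int, int, str]] = []
    for name, label in GAZETTEER.items():
        for match in re.finditer(re.escape(name), text):
            spans.append((match.start(), match.end(), label))
    for match in YEAR.finditer(text):
        spans.append((match.start(), match.end(), "TIME"))
    return sorted(spans)


def annotate(text: str) -> str:
    """Wrap each detected entity as [entity]LABEL, leaving other text as-is."""
    pieces: List[str] = []
    position = 0
    for start, end, label in find_entities(text):
        pieces.append(text[position:start])
        pieces.append(f"[{text[start:end]}]{label}")
        position = end
    pieces.append(text[position:])
    return "".join(pieces)


print(annotate("Jim bought 300 shares of Acme Corp. in 2006."))
# prints "[Jim]PER bought 300 shares of [Acme Corp.]ORG in [2006]TIME."
```

The output marks a one-token person name, a two-token company name and a temporal expression, matching the kinds of entities described above.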

Speech Recognition

Speech recognition is an interdisciplinary subfield of computer science and computational linguistics that develops methodologies and technologies that enable the recognition and translation of spoken language into text by computers. It is also known as automatic speech recognition (ASR), computer speech recognition or speech-to-text (STT). It incorporates knowledge and research in the computer science, linguistics and computer engineering fields. The reverse process is speech synthesis. Some speech recognition systems require "training" (also called "enrollment") where an individual speaker reads text or isolated vocabulary into the system. The system analyzes the person's specific voice and uses it to fine-tune the recognition of that person's speech, resulting in increased accuracy. Systems that do not use training are called "speaker-independent" systems. Systems that use training are called "speaker-dependent". Speech recognition applications include voice user interfaces ...

Text Mining

Text mining, text data mining (TDM) or text analytics is the process of deriving high-quality information from text. It involves "the discovery by computer of new, previously unknown information, by automatically extracting information from different written resources." Written resources may include websites, books, emails, reviews, and articles. High-quality information is typically obtained by devising patterns and trends by means such as statistical pattern learning. According to Hotho et al. (2005), there are three perspectives on text mining: information extraction, data mining, and knowledge discovery in databases (KDD). Text mining usually involves structuring the input text (usually parsing, along with the addition of some derived linguistic features and the removal of others, and subsequent insertion into a database), deriving patterns within the structured data, and finally evaluating and interpreting the output. "High quality" in text mining usually ...

Automatic Summarization

Automatic summarization is the process of shortening a set of data computationally, to create a subset (a summary) that represents the most important or relevant information within the original content. Artificial intelligence algorithms are commonly developed and employed to achieve this, specialized for different types of data. Text summarization is usually implemented by natural language processing methods, designed to locate the most informative sentences in a given document. On the other hand, visual content can be summarized using computer vision algorithms. Image summarization is the subject of ongoing research; existing approaches typically attempt to display the most representative images from a given image collection, or generate a video that only includes the most important content from the entire collection. Video summarization algorithms identify and extract from the original video content the most important frames ("key-frames"), and/or the most important video seg ...
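One simple way to "locate the most informative sentences" is to score each sentence by how frequent its words are in the whole document and keep the top-scoring ones. The sketch below assumes this word-frequency heuristic; it is one possible extractive approach, not the method of any particular system, and the `summarize` function and its parameters are hypothetical.

```python
import re
from collections import Counter
from typing import List


def summarize(text: str, k: int = 1) -> List[str]:
    """Return the k highest-scoring sentences, kept in document order."""
    # Naive sentence split on whitespace that follows ., ! or ?
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    # Word frequencies over the whole document (lowercased, letters only).
    frequency = Counter(re.findall(r"[a-z]+", text.lower()))

    def score(sentence: str) -> float:
        # Average document frequency of the sentence's words.
        tokens = re.findall(r"[a-z]+", sentence.lower())
        if not tokens:
            return 0.0
        return sum(frequency[t] for t in tokens) / len(tokens)

    ranked = sorted(range(len(sentences)),
                    key=lambda i: score(sentences[i]),
                    reverse=True)
    return [sentences[i] for i in sorted(ranked[:k])]


document = "Cats chase mice. Cats sleep. Dogs bark."
print(summarize(document, k=1))  # prints ['Cats sleep.']
```

Averaging by sentence length keeps long sentences from winning merely by containing more words; a practical system would also remove stop words and handle abbreviations when splitting sentences.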

Question Answering

Question answering (QA) is a computer science discipline within the fields of information retrieval and natural language processing (NLP) that is concerned with building systems that automatically answer questions that are posed by humans in a natural language.

Overview: A question-answering implementation, usually a computer program, may construct its answers by querying a structured database of knowledge or information, usually a knowledge base. More commonly, question-answering systems can pull answers from an unstructured collection of natural language documents. Some examples of natural language document collections used for question answering systems include:

* a collection of reference texts
* internal organization documents and web pages
* compiled newswire reports
* a set of Wikipedia pages
* a subset of World Wide Web pages

Types of question answering: Question-answering research attempts to develop ways of answering a wide range of question types, including fact, li ...