|

Precomputed

In algorithms, precomputation is the act of performing an initial computation before run time to generate a lookup table that can be used by an algorithm to avoid repeated computation each time it is executed. Precomputation is often used in algorithms that depend on the results of expensive computations that don't depend on the input of the algorithm. A trivial example of precomputation is the use of hardcoded mathematical constants, such as π and e, rather than computing their approximations to the necessary precision at run time. In databases, the term materialization is used to refer to storing the results of a precomputation, such as in a materialized view. Overview Precomputing a set of intermediate results at the beginning of an algorithm's execution can often increase algorithmic efficiency substantially. This becomes advantageous when one or more inputs is constrained to a small enough range that the results can be stored in a reasonably sized block of memory. B ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Rainbow Table

A rainbow table is a precomputed table for caching the outputs of a cryptographic hash function, usually for cracking password hashes. Passwords are typically stored not in plain text form, but as hash values. If such a database of hashed passwords falls into the hands of attackers, they can use a precomputed rainbow table to recover the plaintext passwords. A common defense against this attack is to compute the hashes using a key derivation function that adds a "salt" to each password before hashing it, with different passwords receiving different salts, which are stored in plain text along with the hash. Rainbow tables are a practical example of a space–time tradeoff: they use less computer processing time and more storage than a brute-force attack which calculates a hash on every attempt, but more processing time and less storage than a simple table that stores the hash of every possible password. Rainbow tables were invented by Philippe Oechslin as an application of an ea ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Lookup Table

In computer science, a lookup table (LUT) is an array data structure, array that replaces runtime (program lifecycle phase), runtime computation of a mathematical function (mathematics), function with a simpler array indexing operation, in a process termed as ''direct addressing''. The savings in processing time can be significant, because retrieving a value from memory is often faster than carrying out an "expensive" computation or input/output operation. The tables may be precomputation, precalculated and stored in static memory allocation, static program storage, calculated (or prefetcher, "pre-fetched") as part of a program's initialization phase (''memoization''), or even stored in hardware in application-specific platforms. Lookup tables are also used extensively to validate input values by matching against a list of valid (or invalid) items in an array and, in some programming languages, may include pointer functions (or offsets to labels) to process the matching input. Fiel ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

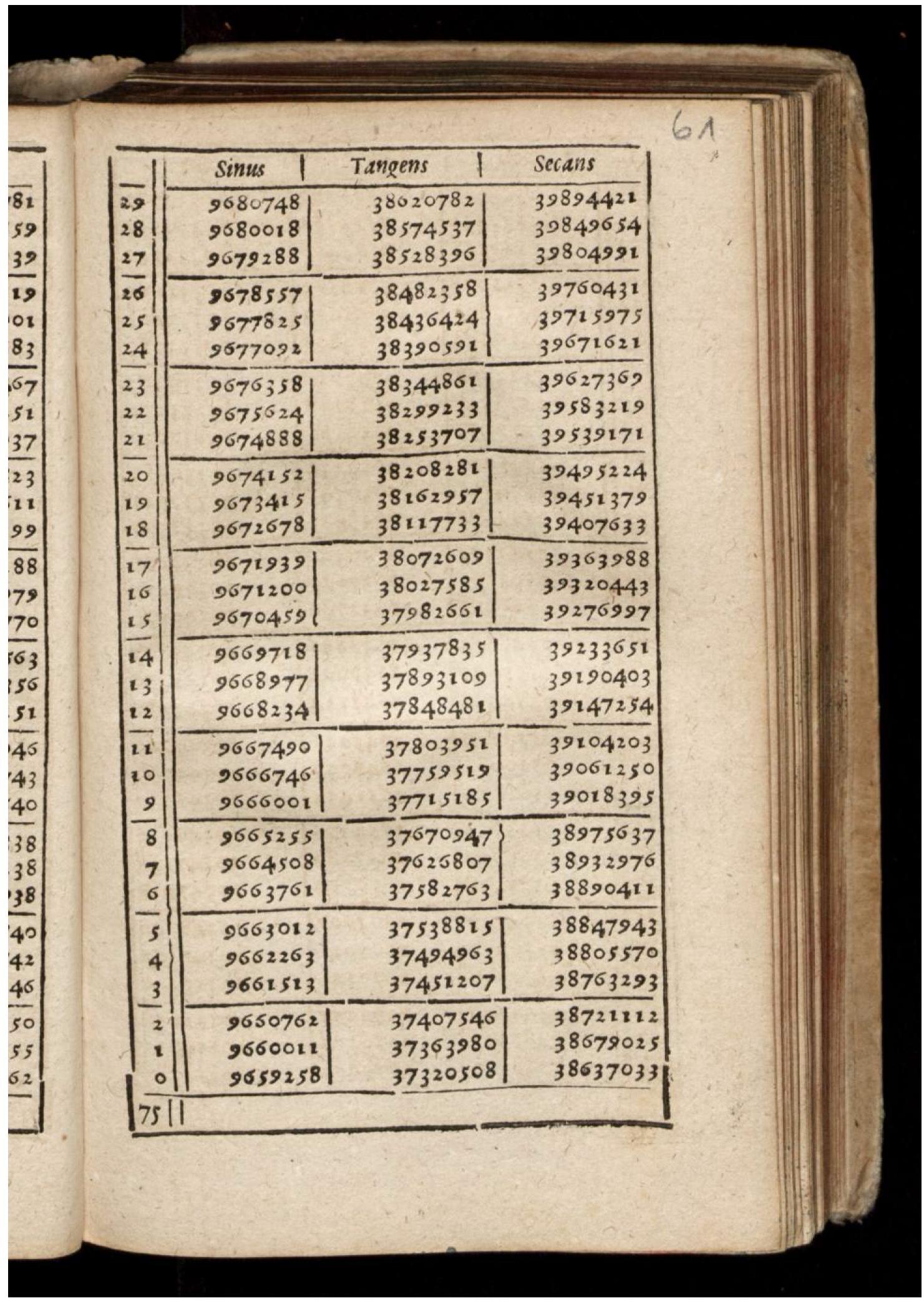

Trigonometric Table

In mathematics, tables of trigonometric functions are useful in a number of areas. Before the existence of pocket calculators, trigonometric tables were essential for navigation, science and engineering. The calculation of mathematical tables was an important area of study, which led to the development of the first mechanical computing devices. Modern computers and pocket calculators now generate trigonometric function values on demand, using special libraries of mathematical code. Often, these libraries use pre-calculated tables internally, and compute the required value by using an appropriate interpolation method. Interpolation of simple look-up tables of trigonometric functions is still used in computer graphics, where only modest accuracy may be required and speed is often paramount. Another important application of trigonometric tables and generation schemes is for fast Fourier transform (FFT) algorithms, where the same trigonometric function values (called ''twiddle fact ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Abramowitz&Stegun

''Abramowitz and Stegun'' (''AS'') is the informal name of a 1964 mathematical reference work edited by Milton Abramowitz and Irene Stegun of the United States National Bureau of Standards (NBS), now the National Institute of Standards and Technology (NIST). Its full title is ''Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables''. A digital successor to the Handbook was released as the "Digital Library of Mathematical Functions" (DLMF) on 11 May 2010, along with a printed version, the '' NIST Handbook of Mathematical Functions'', published by Cambridge University Press. Overview Since it was first published in 1964, the 1046-page ''Handbook'' has been one of the most comprehensive sources of information on special functions, containing definitions, identities, approximations, plots, and tables of values of numerous functions used in virtually all fields of applied mathematics. The notation used in the ''Handbook'' is the '' de facto'' standard for ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Roman Numerals

Roman numerals are a numeral system that originated in ancient Rome and remained the usual way of writing numbers throughout Europe well into the Late Middle Ages. Numbers are written with combinations of letters from the Latin alphabet, each with a fixed integer value. The modern style uses only these seven: The use of Roman numerals continued long after the Fall of the Western Roman Empire, decline of the Roman Empire. From the 14th century on, Roman numerals began to be replaced by Arabic numerals; however, this process was gradual, and the use of Roman numerals persisted in various places, including on clock face, clock faces. For instance, on the clock of Big Ben (designed in 1852), the hours from 1 to 12 are written as: The notations and can be read as "one less than five" (4) and "one less than ten" (9), although there is a tradition favouring the representation of "4" as "" on Roman numeral clocks. Other common uses include year numbers on monuments and buildin ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Dataflow Analysis

Data-flow analysis is a technique for gathering information about the possible set of values calculated at various points in a computer program. It forms the foundation for a wide variety of compiler optimizations and program verification techniques. A program's control-flow graph (CFG) is used to determine those parts of a program to which a particular value assigned to a variable might propagate. The information gathered is often used by compilers when optimizing a program. A canonical example of a data-flow analysis is reaching definitions. Other commonly used data-flow analyses include live variable analysis, available expressions, constant propagation, and very busy expressions, each serving a distinct purpose in compiler optimization passes. A simple way to perform data-flow analysis of programs is to set up data-flow equations for each node of the control-flow graph and solve them by repeatedly calculating the output from the input locally at each node until the whole sys ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Partial Evaluation

In computing, partial evaluation is a technique for several different types of program optimization by specialization. The most straightforward application is to produce new programs that run faster than the originals while being guaranteed to behave in the same way. A computer program ''prog'' is seen as a mapping of input data into output data: prog : I_\text \times I_\text \to O, where I_\text, the ''static data'', is the part of the input data known at compile time. The partial evaluator transforms \langle prog, I_\text\rangle into prog^* : I_\text \to O by precomputing all static input at compile time. prog^* is called the "residual program" and should run more efficiently than the original program. The act of partial evaluation is said to "residualize" prog to prog^*. Futamura projections A particularly interesting example of the use of partial evaluation, first described in the 1970s by Yoshihiko Futamura, is when ''prog'' is an interpreter for a programming language ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Compiler

In computing, a compiler is a computer program that Translator (computing), translates computer code written in one programming language (the ''source'' language) into another language (the ''target'' language). The name "compiler" is primarily used for programs that translate source code from a high-level programming language to a lower level language, low-level programming language (e.g. assembly language, object code, or machine code) to create an executable program.Compilers: Principles, Techniques, and Tools by Alfred V. Aho, Ravi Sethi, Jeffrey D. Ullman - Second Edition, 2007 There are many different types of compilers which produce output in different useful forms. A ''cross-compiler'' produces code for a different Central processing unit, CPU or operating system than the one on which the cross-compiler itself runs. A ''bootstrap compiler'' is often a temporary compiler, used for compiling a more permanent or better optimised compiler for a language. Related software ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Radiosity (3D Computer Graphics)

In 3D computer graphics, radiosity is an application of the finite element method to solving the rendering equation for scenes with surfaces that reflect light diffusely. Unlike rendering methods that use Monte Carlo algorithms (such as path tracing), which handle all types of light paths, typical radiosity only account for paths (represented by the code "LD*E") which leave a light source and are reflected diffusely some number of times (possibly zero) before hitting the eye. Radiosity is a global illumination algorithm in the sense that the illumination arriving on a surface comes not just directly from the light sources, but also from other surfaces reflecting light. Radiosity is viewpoint independent, which increases the calculations involved, but makes them useful for all viewpoints. Radiosity methods were first developed in about 1950 in the engineering field of heat transfer. They were later refined specifically for the problem of rendering computer graphics in 1984198 ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

BSP Tree

In computer science, binary space partitioning (BSP) is a method for space partitioning which recursively subdivides a Euclidean space into two convex sets by using hyperplanes as partitions. This process of subdividing gives rise to a representation of objects within the space in the form of a tree data structure known as a BSP tree. Binary space partitioning was developed in the context of 3D computer graphics in 1969. The structure of a BSP tree is useful in rendering because it can efficiently give spatial information about the objects in a scene, such as objects being ordered from front-to-back with respect to a viewer at a given location. Other applications of BSP include: performing geometrical operations with shapes ( constructive solid geometry) in CAD, collision detection in robotics and 3D video games, ray tracing, virtual landscape simulation, and other applications that involve the handling of complex spatial scenes. History *1969 Schumacker et al. published a ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Cube Attack

The cube attack is a method of cryptanalysis applicable to a wide variety of symmetric-key algorithms, published by Itai Dinur and Adi Shamir in a September 2008 preprint. A revised version of this preprint was placed online in January 2009, and the paper has also been accepted for presentation at Eurocrypt 2009. Attack A cipher is vulnerable if an output bit can be represented as a sufficiently low degree polynomial over GF(2) of key and input bits; in particular, this describes many stream ciphers based on LFSRs. DES and AES are believed to be immune to this attack. It works by summing an output bit value for all possible values of a subset of public input bits, chosen such that the resulting sum is a linear combination of secret bits; repeated application of this technique gives a set of linear relations between secret bits that can be solved to discover these bits. The authors show that if the cipher resembles a random polynomial of sufficiently low degree then such sets ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Perfect Hash

In computer science, a perfect hash function for a set is a hash function that maps distinct elements in to a set of integers, with no collisions. In mathematical terms, it is an injective function. Perfect hash functions may be used to implement a lookup table with constant worst-case access time. A perfect hash function can, as any hash function, be used to implement hash tables, with the advantage that no collision resolution has to be implemented. In addition, if the keys are not in the data and if it is known that queried keys will be valid, then the keys do not need to be stored in the lookup table, saving space. Disadvantages of perfect hash functions are that needs to be known for the construction of the perfect hash function. Non-dynamic perfect hash functions need to be re-constructed if changes. For frequently changing dynamic perfect hash functions may be used at the cost of additional space. The space requirement to store the perfect hash function is in wh ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |