
Persymmetric Matrix

In mathematics, persymmetric matrix may refer to:
1. a square matrix which is symmetric with respect to the northeast-to-southwest diagonal; or
2. a square matrix such that the values on each line perpendicular to the main diagonal are the same for a given line.

The first definition is the most common in the recent literature. The designation "Hankel matrix" is often used for matrices satisfying the property in the second definition.

Definition 1. Let A = (a_{ij}) be an n × n matrix. The first definition of persymmetric requires that

a_{ij} = a_{n-j+1,\,n-i+1} for all i, j.

See page 193. For example, 5 × 5 persymmetric matrices are of the form

A = \begin{pmatrix} a_{11} & a_{12} & a_{13} & a_{14} & a_{15} \\ a_{21} & a_{22} & a_{23} & a_{24} & a_{14} \\ a_{31} & a_{32} & a_{33} & a_{23} & a_{13} \\ a_{41} & a_{42} & a_{32} & a_{22} & a_{12} \\ a_{51} & a_{41} & a_{31} & a_{21} & a_{11} \end{pmatrix}.

This can be equivalently expressed as AJ = JA^{\mathsf T}, where J is the exchange matrix. A symmetric matrix is a matrix whose values are symmetric in the northwest-to-southeast diagonal ...
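
A minimal sketch, assuming plain Python lists (no NumPy) and 0-based indices, that tests Definition 1 entry by entry and, separately, through the equivalent condition AJ = JA^T. With 0-based indices the condition a_{ij} = a_{n-j+1, n-i+1} becomes `A[i][j] == A[n-1-j][n-1-i]`. All helper names (`exchange`, `transpose`, `matmul`, `is_persymmetric`, `is_persymmetric_via_J`) are hypothetical, and the numeric matrix is simply one instance of the 5 × 5 pattern above.

```python
from typing import List

Matrix = List[List[int]]

def exchange(n: int) -> Matrix:
    # Exchange matrix J: ones on the antidiagonal (0-based: i + j == n - 1)
    return [[1 if i + j == n - 1 else 0 for j in range(n)] for i in range(n)]

def transpose(A: Matrix) -> Matrix:
    return [list(col) for col in zip(*A)]

def matmul(A: Matrix, B: Matrix) -> Matrix:
    # Naive matrix product: row of A dotted with column of B
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def is_persymmetric(A: Matrix) -> bool:
    # Definition 1, 0-based: A[i][j] == A[n-1-j][n-1-i]
    n = len(A)
    return all(A[i][j] == A[n - 1 - j][n - 1 - i] for i in range(n) for j in range(n))

def is_persymmetric_via_J(A: Matrix) -> bool:
    # Equivalent test: AJ == J A^T
    J = exchange(len(A))
    return matmul(A, J) == matmul(J, transpose(A))

# A numeric instance of the 5 x 5 pattern above
A = [
    [1, 2, 3, 4, 5],
    [6, 7, 8, 9, 4],
    [10, 11, 12, 8, 3],
    [13, 14, 11, 7, 2],
    [15, 13, 10, 6, 1],
]
print(is_persymmetric(A), is_persymmetric_via_J(A))  # True True
```

Both checks agree, as the equivalence AJ = JA^T predicts. A matrix such as [[1, 2], [3, 4]] fails both.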

Mathematics

Mathematics is an area of knowledge that includes the topics of numbers, formulas and related structures, shapes and the spaces in which they are contained, and quantities and their changes. These topics are represented in modern mathematics with the major subdisciplines of number theory, algebra, geometry, and analysis, respectively. There is no general consensus among mathematicians about a common definition for their academic discipline. Most mathematical activity involves the discovery of properties of abstract objects and the use of pure reason to prove them. These objects consist of either abstractions from nature or, in modern mathematics, entities that are stipulated to have certain properties, called axioms. A proof consists of a succession of applications of deductive rules to already established results. These results include previously proved theorems, axioms ...

Square Matrix

In mathematics, a square matrix is a matrix with the same number of rows and columns. An n-by-n matrix is known as a square matrix of order n. Any two square matrices of the same order can be added and multiplied. Square matrices are often used to represent simple linear transformations, such as shearing or rotation. For example, if R is a square matrix representing a rotation (a rotation matrix) and \mathbf{v} is a column vector describing the position of a point in space, the product R\mathbf{v} yields another column vector describing the position of that point after the rotation. If \mathbf{v} is a row vector, the same transformation can be obtained using \mathbf{v}R^{\mathsf T}, where R^{\mathsf T} is the transpose of R.

Main diagonal. The entries a_{ii} (i = 1, …, n) form the main diagonal of a square matrix. They lie on the imaginary line that runs from the top left corner to the bottom right corner of the matrix. For instance, the main diagonal of a 4×4 matrix contains the elements a_{11}, a_{22}, a_{33}, a_{44}. ...
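
The column-vector/row-vector equivalence above can be checked numerically. This is a minimal sketch, assuming a 2-D counterclockwise rotation by 90 degrees as the example transformation and plain Python lists; `rotation_2d`, `mat_vec`, `vec_mat`, `transpose` and `main_diagonal` are hypothetical helper names.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]

def rotation_2d(theta: float) -> Matrix:
    # Counterclockwise rotation of the plane by theta radians
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]

def mat_vec(R: Matrix, v: Vector) -> Vector:
    # R v, treating v as a column vector
    return [sum(r * x for r, x in zip(row, v)) for row in R]

def vec_mat(v: Vector, M: Matrix) -> Vector:
    # v M, treating v as a row vector
    return [sum(v[k] * M[k][j] for k in range(len(v))) for j in range(len(M[0]))]

def transpose(M: Matrix) -> Matrix:
    return [list(col) for col in zip(*M)]

def main_diagonal(M: Matrix) -> Vector:
    # Entries a_ii (0-based indices here)
    return [M[i][i] for i in range(len(M))]

R = rotation_2d(math.pi / 2)
v = [1.0, 0.0]
col = mat_vec(R, v)             # rotate v as a column vector: R v
row = vec_mat(v, transpose(R))  # same point, v as a row vector: v R^T
print(col, row)
```

Both products give (approximately, up to floating-point rounding) the point (0, 1), i.e. the unit x-vector rotated onto the y-axis.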

Hankel Matrix

In linear algebra, a Hankel matrix (or catalecticant matrix), named after Hermann Hankel, is a square matrix in which each ascending skew-diagonal from left to right is constant, e.g.:

\begin{pmatrix} a & b & c & d & e \\ b & c & d & e & f \\ c & d & e & f & g \\ d & e & f & g & h \\ e & f & g & h & i \end{pmatrix}.

More generally, a Hankel matrix is any n \times n matrix A of the form

A = \begin{pmatrix} a_0 & a_1 & a_2 & \ldots & \ldots & a_{n-1} \\ a_1 & a_2 & & & & \vdots \\ a_2 & & & & & \vdots \\ \vdots & & & & & a_{2n-4} \\ \vdots & & & & a_{2n-4} & a_{2n-3} \\ a_{n-1} & \ldots & \ldots & a_{2n-4} & a_{2n-3} & a_{2n-2} \end{pmatrix}.

In terms of the components, if the i,j element of A is denoted A_{ij}, and assuming i \le j, then A_{i,j} = A_{i+k,\,j-k} for all k = 0, ..., j-i.

Properties:
* The Hankel matrix is a symmetric matrix.
* Let J_n be the n \times n exchange matrix. If H is an m \times n Hankel matrix, then H = T J_n, where T is an m \times n Toeplitz matrix.
  * If T is real symmetric, then H = T J_n will have the same eigenvalues as T ...
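
A minimal sketch of the definition and of the relation H = T J_n, assuming plain Python lists and 0-based indices, so that entry (i, j) is `seq[i + j]` and a square n × n Hankel matrix needs 2n - 1 values. The integers 0 to 8 stand in for the letters a to i in the example above. Since J_n J_n = I, the Toeplitz factor can be recovered as T = H J_n. The helper names are hypothetical.

```python
from typing import List, Sequence

Matrix = List[List[int]]

def hankel(seq: Sequence[int], n: int) -> Matrix:
    # n x n Hankel matrix: entry (i, j) depends only on i + j
    if len(seq) != 2 * n - 1:
        raise ValueError("need exactly 2n - 1 values")
    return [[seq[i + j] for j in range(n)] for i in range(n)]

def exchange(n: int) -> Matrix:
    # Ones on the antidiagonal (0-based: i + j == n - 1)
    return [[1 if i + j == n - 1 else 0 for j in range(n)] for i in range(n)]

def matmul(A: Matrix, B: Matrix) -> Matrix:
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def is_toeplitz(T: Matrix) -> bool:
    # Constant along each descending diagonal: T[i][j] == T[i+1][j+1]
    return all(T[i][j] == T[i + 1][j + 1]
               for i in range(len(T) - 1) for j in range(len(T[0]) - 1))

H = hankel(list(range(9)), 5)   # 0..8 play the role of a..i
T = matmul(H, exchange(5))      # T = H J_5, so H = T J_5
symmetric = H == [list(r) for r in zip(*H)]
print(is_toeplitz(T), symmetric)  # True True
```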

Matrix Symmetry Qtl2

Matrix most commonly refers to:
* The Matrix (franchise), an American media franchise
  * The Matrix, a 1999 science-fiction action film
  * "The Matrix", a fictional setting, a virtual reality environment, within The Matrix (franchise)
* Matrix (mathematics), a rectangular array of numbers, symbols or expressions

Matrix (or its plural form matrices) may also refer to:

Science and mathematics:
* Matrix (mathematics), algebraic structure, extension of vector into 2 dimensions
* Matrix (logic), part of a formula in prenex normal form
* Matrix (biology), the material in between a eukaryotic organism's cells
* Matrix (chemical analysis), the non-analyte components of a sample
* Matrix (geology), the fine-grained material in which larger objects are embedded
* Matrix (composite), the constituent of a composite material
* Hair matrix, produces hair
* Nail matrix, part of the nail in anatomy

Arts and entertainment, fictional entities:
* Matrix (comics), two comic bo ...

Exchange Matrix

In mathematics, especially linear algebra, the exchange matrices (also called the reversal matrix, backward identity, or standard involutory permutation) are special cases of permutation matrices, where the 1 elements reside on the antidiagonal and all other elements are zero. In other words, they are "row-reversed" or "column-reversed" versions of the identity matrix.

J_2 = \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}; \quad J_3 = \begin{pmatrix} 0 & 0 & 1 \\ 0 & 1 & 0 \\ 1 & 0 & 0 \end{pmatrix}; \quad J_n = \begin{pmatrix} 0 & 0 & \cdots & 0 & 0 & 1 \\ 0 & 0 & \cdots & 0 & 1 & 0 \\ 0 & 0 & \cdots & 1 & 0 & 0 \\ \vdots & \vdots & & \vdots & \vdots & \vdots \\ 0 & 1 & \cdots & 0 & 0 & 0 \\ 1 & 0 & \cdots & 0 & 0 & 0 \end{pmatrix}.

Definition. If J is an n × n exchange matrix, then the elements of J are

J_{i,j} = \begin{cases} 1, & i + j = n + 1 \\ 0, & i + j \ne n + 1 \end{cases} ...
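
A minimal sketch of the definition, assuming plain Python lists: with 0-based indices the rule i + j = n + 1 becomes `i + j == n - 1`. It also shows the "row-reversed"/"column-reversed" behaviour: multiplying by J on the left reverses the rows of a matrix, and on the right reverses its columns. Because J is an involution, J J = I. The helper names are hypothetical.

```python
from typing import List

Matrix = List[List[int]]

def exchange(n: int) -> Matrix:
    # 1-based rule J_ij = 1 iff i + j = n + 1, written with 0-based i, j
    return [[1 if i + j == n - 1 else 0 for j in range(n)] for i in range(n)]

def identity(n: int) -> Matrix:
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]

def matmul(A: Matrix, B: Matrix) -> Matrix:
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

J3 = exchange(3)
A = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
print(J3)             # [[0, 0, 1], [0, 1, 0], [1, 0, 0]]
print(matmul(J3, A))  # rows reversed: [[7, 8, 9], [4, 5, 6], [1, 2, 3]]
print(matmul(A, J3))  # columns reversed: [[3, 2, 1], [6, 5, 4], [9, 8, 7]]
```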

Symmetric Matrix

In linear algebra, a symmetric matrix is a square matrix that is equal to its transpose. Formally,

A \text{ is symmetric} \iff A = A^{\mathsf T}.

Because equal matrices have equal dimensions, only square matrices can be symmetric. The entries of a symmetric matrix are symmetric with respect to the main diagonal. So if a_{ij} denotes the entry in the i-th row and j-th column, then A is symmetric if and only if a_{ji} = a_{ij} for all indices i and j. Every square diagonal matrix is symmetric, since all off-diagonal elements are zero. Similarly, in characteristic different from 2, each diagonal element of a skew-symmetric matrix must be zero, since each is its own negative.

In linear algebra, a real symmetric matrix represents a self-adjoint operator expressed in an orthonormal basis of a real inner product space. The corresponding object for a complex inner product space is a Hermitian matrix with complex-valued entries, which is equal to its conjugate transpose. Therefore, in linear algebra over the complex numbers, it is often assumed that a symmetric matrix ...
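
A minimal sketch of the entrywise test a_{ji} = a_{ij}, assuming integer entries (characteristic 0, so the skew-symmetric diagonal argument applies) and plain Python lists. Comparing a matrix with its transpose also rejects non-square input automatically, since the dimensions differ. `transpose`, `is_symmetric` and `is_skew_symmetric` are hypothetical names.

```python
from typing import List

Matrix = List[List[int]]

def transpose(A: Matrix) -> Matrix:
    return [list(col) for col in zip(*A)]

def is_symmetric(A: Matrix) -> bool:
    # A == A^T; a non-square A can never match its transpose
    return A == transpose(A)

def is_skew_symmetric(A: Matrix) -> bool:
    # A^T == -A
    return transpose(A) == [[-x for x in row] for row in A]

D = [[2, 0, 0], [0, 5, 0], [0, 0, -1]]    # diagonal, hence symmetric
S = [[1, 7, 3], [7, 4, 5], [3, 5, 6]]     # symmetric
K = [[0, 2, -1], [-2, 0, 4], [1, -4, 0]]  # skew-symmetric, zero diagonal
print(is_symmetric(D), is_symmetric(S), is_skew_symmetric(K))  # True True True
```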

Bisymmetric Matrix

In mathematics, a bisymmetric matrix is a square matrix that is symmetric about both of its main diagonals. More precisely, an n × n matrix A is bisymmetric if it satisfies both A = A^{\mathsf T} and AJ = JA, where J is the n × n exchange matrix. For example, any matrix of the form

\begin{pmatrix} a & b & c & d & e \\ b & f & g & h & d \\ c & g & i & g & c \\ d & h & g & f & b \\ e & d & c & b & a \end{pmatrix}

is bisymmetric.

Properties:
* Bisymmetric matrices are both symmetric centrosymmetric and symmetric persymmetric.
* The product of two bisymmetric matrices is a centrosymmetric matrix.
* Real-valued bisymmetric matrices are precisely those symmetric matrices whose eigenvalues remain the same aside from possible sign changes following pre- or post-multiplication by the exchange matrix.
* If A is a real bisymmetric matrix with distinct eigenvalues, then the matrices that commute with A must be bisymmetric.
* The inverse of ...
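
A minimal sketch, assuming plain Python lists with integer entries, that checks the two defining conditions A = A^T and AJ = JA on instances of the 5 × 5 pattern above, and checks the stated property that a product of two bisymmetric matrices is centrosymmetric (AJ = JA). The helper names and the nine-argument `pattern` function are hypothetical.

```python
from typing import List

Matrix = List[List[int]]

def transpose(A: Matrix) -> Matrix:
    return [list(col) for col in zip(*A)]

def exchange(n: int) -> Matrix:
    # Ones on the antidiagonal (0-based: i + j == n - 1)
    return [[1 if i + j == n - 1 else 0 for j in range(n)] for i in range(n)]

def matmul(A: Matrix, B: Matrix) -> Matrix:
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def is_centrosymmetric(A: Matrix) -> bool:
    # Centrosymmetry expressed as AJ == JA
    J = exchange(len(A))
    return matmul(A, J) == matmul(J, A)

def is_bisymmetric(A: Matrix) -> bool:
    # A == A^T and AJ == JA
    return A == transpose(A) and is_centrosymmetric(A)

def pattern(a, b, c, d, e, f, g, h, i) -> Matrix:
    # The 5 x 5 bisymmetric form above, nine free entries
    return [[a, b, c, d, e],
            [b, f, g, h, d],
            [c, g, i, g, c],
            [d, h, g, f, b],
            [e, d, c, b, a]]

X = pattern(1, 2, 3, 4, 5, 6, 7, 8, 9)
Y = pattern(2, -1, 0, 3, 1, 4, 2, -2, 5)
P = matmul(X, Y)
print(is_bisymmetric(X), is_bisymmetric(Y), is_centrosymmetric(P))  # True True True
```

The check on P only tests centrosymmetry; the product of two bisymmetric matrices need not itself be symmetric.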

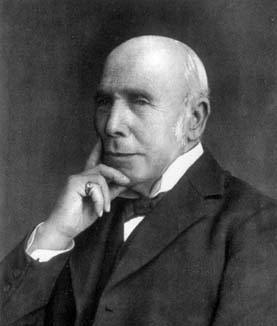

Thomas Muir (mathematician)

Sir Thomas Muir (25 August 1844 – 21 March 1934) was a Scottish mathematician, remembered as an authority on determinants.

Life. He was born in Stonebyres in South Lanarkshire, and brought up in the small town of Biggar. He was educated at Wishaw Public School. At the University of Glasgow he changed his studies from classics to mathematics after advice from the future Lord Kelvin. After graduating he held positions at the University of St Andrews and the University of Glasgow. From 1874 to 1892 he taught at Glasgow High School. In 1882 he published Treatise on the theory of determinants; then in 1890 he published a History of determinants. In his 1882 work, Muir rediscovered an important lemma that had first been proved by Cayley 35 years earlier. In Glasgow he lived at Beechcroft in the Bothwell district. In 1874 he was elected a Fellow of the Royal Society of Edinburgh. His proposers were William Thomson (Lord Kelvin), Hugh Blackburn, Philip Kelland and Peter Gut ...

Determinant

In mathematics, the determinant is a scalar value that is a function of the entries of a square matrix. It characterizes some properties of the matrix and the linear map represented by the matrix. In particular, the determinant is nonzero if and only if the matrix is invertible and the linear map represented by the matrix is an isomorphism. The determinant of a product of matrices is the product of their determinants (the preceding property is a corollary of this one). The determinant of a matrix A is denoted det(A), det A, or |A|.

The determinant of a 2 × 2 matrix is

\begin{vmatrix} a & b \\ c & d \end{vmatrix} = ad - bc,

and the determinant of a 3 × 3 matrix is

\begin{vmatrix} a & b & c \\ d & e & f \\ g & h & i \end{vmatrix} = aei + bfg + cdh - ceg - bdi - afh.

The determinant of an n × n matrix can be defined in several equivalent ways. The Leibniz formula expresses the determinant as a sum of signed products of matrix entries such that each summand is the product of n different entries, and the number of these summands is n!, the factorial of n ...
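
The Leibniz formula can be sketched directly: sum, over all n! permutations σ of the column indices, the sign of σ times the product of the entries A[i][σ(i)]. This is a minimal illustration assuming plain Python lists and computing the sign by counting inversions; it costs O(n! · n) time and is meant only to show the definition, not as a practical algorithm. `sign` and `det_leibniz` are hypothetical names. The examples reproduce the 2 × 2 and 3 × 3 closed forms above.

```python
from itertools import permutations
from math import prod
from typing import List, Sequence

def sign(perm: Sequence[int]) -> int:
    # +1 for an even permutation, -1 for an odd one (parity of inversion count)
    n = len(perm)
    inversions = sum(1 for x in range(n) for y in range(x + 1, n) if perm[x] > perm[y])
    return -1 if inversions % 2 else 1

def det_leibniz(A: List[List[int]]) -> int:
    # Sum over all n! permutations p of sign(p) * A[0][p[0]] * ... * A[n-1][p[n-1]]
    n = len(A)
    return sum(sign(p) * prod(A[i][p[i]] for i in range(n))
               for p in permutations(range(n)))

A2 = [[3, 8], [4, 6]]
A3 = [[6, 1, 1], [4, -2, 5], [2, 8, 7]]
print(det_leibniz(A2))  # -14, i.e. ad - bc = 3*6 - 8*4
print(det_leibniz(A3))  # -306, i.e. aei + bfg + cdh - ceg - bdi - afh
```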

Toeplitz Matrix

In linear algebra, a Toeplitz matrix or diagonal-constant matrix, named after Otto Toeplitz, is a matrix in which each descending diagonal from left to right is constant. For instance, the following matrix is a Toeplitz matrix:

\begin{pmatrix} a & b & c & d & e \\ f & a & b & c & d \\ g & f & a & b & c \\ h & g & f & a & b \\ i & h & g & f & a \end{pmatrix}.

Any n × n matrix A of the form

A = \begin{pmatrix} a_0 & a_{-1} & a_{-2} & \cdots & \cdots & a_{-(n-1)} \\ a_1 & a_0 & a_{-1} & \ddots & & \vdots \\ a_2 & a_1 & \ddots & \ddots & \ddots & \vdots \\ \vdots & \ddots & \ddots & \ddots & a_{-1} & a_{-2} \\ \vdots & & \ddots & a_1 & a_0 & a_{-1} \\ a_{n-1} & \cdots & \cdots & a_2 & a_1 & a_0 \end{pmatrix}

is a Toeplitz matrix. If the i, j element of A is denoted A_{i,j}, then we have

A_{i,j} = A_{i+1,j+1} = a_{i-j}.

A Toeplitz matrix is not necessarily square.

Solving a Toeplitz system. A matrix equation of the form Ax = b is called a Toeplitz system ...
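
Because entry (i, j) depends only on i - j, a Toeplitz matrix is fully determined by its first column (a_0, a_1, ...) and first row (a_0, a_{-1}, ...). The sketch below assumes plain Python sequences and 0-based indices; `toeplitz` and `is_toeplitz` are hypothetical names. Passing the strings "afghi" and "abcde" rebuilds the 5 × 5 letter example above, and a 3 × 2 case illustrates that the matrix need not be square.

```python
from typing import List, Sequence, TypeVar

E = TypeVar("E")

def toeplitz(first_col: Sequence[E], first_row: Sequence[E]) -> List[List[E]]:
    # Entry (i, j) is a_{i-j}: taken from the first column when i >= j,
    # otherwise from the first row
    if first_col[0] != first_row[0]:
        raise ValueError("first_col[0] and first_row[0] must both be a_0")
    m, n = len(first_col), len(first_row)
    return [[first_col[i - j] if i >= j else first_row[j - i] for j in range(n)]
            for i in range(m)]

def is_toeplitz(A: List[List[E]]) -> bool:
    # A[i][j] == A[i+1][j+1] on every descending diagonal
    return all(A[i][j] == A[i + 1][j + 1]
               for i in range(len(A) - 1) for j in range(len(A[0]) - 1))

for row in toeplitz("afghi", "abcde"):
    print("".join(row))
# abcde
# fabcd
# gfabc
# hgfab
# ihgfa
```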

Centrosymmetric Matrix

In mathematics, especially in linear algebra and matrix theory, a centrosymmetric matrix is a matrix which is symmetric about its center. More precisely, an n × n matrix A = [A_{i,j}] is centrosymmetric when its entries satisfy

A_{i,j} = A_{n-i+1,\,n-j+1} for i, j \in \{1, \ldots, n\}.

If J denotes the n × n exchange matrix with 1 on the antidiagonal and 0 elsewhere (that is, J_{i,n+1-i} = 1 and J_{i,j} = 0 if j \ne n+1-i), then a matrix A is centrosymmetric if and only if AJ = JA.

Examples:
* All 2 × 2 centrosymmetric matrices have the form \begin{pmatrix} a & b \\ b & a \end{pmatrix}.
* All 3 × 3 centrosymmetric matrices have the form \begin{pmatrix} a & b & c \\ d & e & d \\ c & b & a \end{pmatrix}.
* Symmetric Toeplitz matrices are centrosymmetric.

Algebraic structure and properties:
* If A and B are centrosymmetric matrices over a field F, then so are A + B and cA for any c in F ...
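
A minimal sketch, assuming plain Python lists, that checks the entrywise definition (0-based: `A[i][j] == A[n-1-i][n-1-j]`) against the equivalent condition AJ = JA, on an instance of the 3 × 3 pattern, a symmetric Toeplitz matrix, and the sum A + B and scalar multiple cA from the closure property. The helper names and the choice c = 2.5 are hypothetical.

```python
from typing import List

Matrix = List[List[float]]

def exchange(n: int) -> Matrix:
    # Ones on the antidiagonal (0-based: i + j == n - 1)
    return [[1 if i + j == n - 1 else 0 for j in range(n)] for i in range(n)]

def matmul(A: Matrix, B: Matrix) -> Matrix:
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def is_centrosymmetric(A: Matrix) -> bool:
    # 0-based form of A_{i,j} = A_{n-i+1, n-j+1}
    n = len(A)
    return all(A[i][j] == A[n - 1 - i][n - 1 - j] for i in range(n) for j in range(n))

def commutes_with_exchange(A: Matrix) -> bool:
    # Equivalent characterization: AJ == JA
    J = exchange(len(A))
    return matmul(A, J) == matmul(J, A)

A = [[1, 2, 3], [4, 5, 4], [3, 2, 1]]   # 3 x 3 pattern with a, b, c, d, e = 1..5
B = [[0, 7, 1], [2, 9, 2], [1, 7, 0]]   # another instance of the same pattern
T = [[1, 2, 3], [2, 1, 2], [3, 2, 1]]   # a symmetric Toeplitz matrix
S = [[x + y for x, y in zip(r, s)] for r, s in zip(A, B)]  # A + B
C = [[2.5 * x for x in row] for row in A]                  # cA with c = 2.5
print(all(is_centrosymmetric(M) and commutes_with_exchange(M)
          for M in (A, B, T, S, C)))  # True
```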
