|

Operational Data Store

An operational data store (ODS) is used for operational reporting and as a source of data for the enterprise data warehouse (EDW). It is a complementary element to an EDW in a decision support environment, and is used for operational reporting, controls, and decision making, as opposed to the EDW, which is used for tactical and strategic decision support. An ODS is a database designed to integrate data from multiple sources for additional operations on the data, for reporting, controls and operational decision support. Unlike a production master data store, the data is not passed back to operational systems. It may be passed for further operations and to the data warehouse for reporting. An ODS should not be confused with an enterprise data hub (EDH). An operational data store will take transactional data from one or more production systems and loosely integrate it, in some respects it is still subject oriented, integrated and time variant, but without the volatility constrain ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Enterprise Data Warehouse

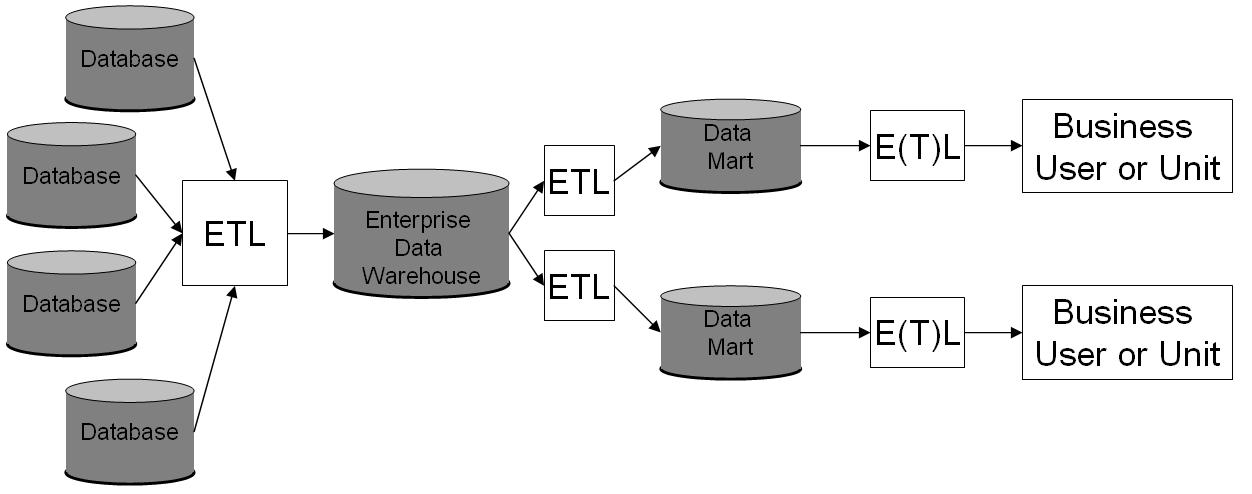

In computing, a data warehouse (DW or DWH), also known as an enterprise data warehouse (EDW), is a system used for Business reporting, reporting and data analysis and is considered a core component of business intelligence. DWs are central Repository (version control), repositories of integrated data from one or more disparate sources. They store current and historical data in one single place that are used for creating analytical reports for workers throughout the enterprise. The data stored in the warehouse is uploaded from the operational systems (such as marketing or sales). The data may pass through an operational data store and may require data cleansing for additional operations to ensure data quality before it is used in the DW for reporting. Extract, transform, load (ETL) and extract, load, transform (ELT) are the two main approaches used to build a data warehouse system. ETL-based data warehousing The typical extract, transform, load (ETL)-based data warehouse uses ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Data Integrity

Data integrity is the maintenance of, and the assurance of, data accuracy and consistency over its entire Information Lifecycle Management, life-cycle and is a critical aspect to the design, implementation, and usage of any system that stores, processes, or retrieves data. The term is broad in scope and may have widely different meanings depending on the specific context even under the same general umbrella of computing. It is at times used as a proxy term for data quality, while data validation is a prerequisite for data integrity. Data integrity is the opposite of data corruption. The overall intent of any data integrity technique is the same: ensure data is recorded exactly as intended (such as a database correctly rejecting mutually exclusive possibilities). Moreover, upon later Data retrieval, retrieval, ensure the data is the same as when it was originally recorded. In short, data integrity aims to prevent unintentional changes to information. Data integrity is not to be confus ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

John Wiley & Sons

John Wiley & Sons, Inc., commonly known as Wiley (), is an American multinational publishing company founded in 1807 that focuses on academic publishing and instructional materials. The company produces books, journals, and encyclopedias, in print and electronically, as well as online products and services, training materials, and educational materials for undergraduate, graduate, and continuing education students. History The company was established in 1807 when Charles Wiley opened a print shop in Manhattan. The company was the publisher of 19th century American literary figures like James Fenimore Cooper, Washington Irving, Herman Melville, and Edgar Allan Poe, as well as of legal, religious, and other non-fiction titles. The firm took its current name in 1865. Wiley later shifted its focus to scientific, technical, and engineering subject areas, abandoning its literary interests. Wiley's son John (born in Flatbush, New York, October 4, 1808; died in East Orange, New Je ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Architectural Pattern (computer Science)

An architectural pattern is a general, reusable solution to a commonly occurring problem in software architecture within a given context. The architectural patterns address various issues in software engineering, such as computer hardware performance limitations, high availability and minimization of a business risk. Some architectural patterns have been implemented within software frameworks. The use of the word "pattern" in the software industry was influenced by similar concepts as expressed in traditional architecture, such as Christopher Alexander's ''A Pattern Language'' (1977) which discussed the practice in terms of establishing a pattern lexicon, prompting the practitioners of computer science to contemplate their own design lexicon. Usage of this metaphor within the software engineering profession became commonplace after the publication of ''Design Patterns'' (1994) by Erich Gamma, Richard Helm, Ralph Johnson, and John Vlissides—now commonly known as the "Gang of F ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Master Data

Master data represents "data about the business entities that provide context for business transactions". The most commonly found categories of master data are parties (individuals and organisations, and their roles, such as customers, suppliers, employees), products, financial structures (such as ledgers and cost centers) and locational concepts. Master data should be distinguished from reference data. While both provide context for business transactions, reference data is concerned with classification and categorisation, while master data is concerned with business entities. Master data is, by its nature, almost always non-transactional in nature. There exist edge cases where an organization may need to treat certain transactional processes and operations as "master data". This arises, for example, where information about master data entities, such as customers or products, is only contained within transactional data such as orders and receipts and is not housed separately. ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Extract, Transform, Load

In computing, extract, transform, load (ETL) is a three-phase process where data is extracted, transformed (cleaned, sanitized, scrubbed) and loaded into an output data container. The data can be collated from one or more sources and it can also be outputted to one or more destinations. ETL processing is typically executed using software applications but it can also be done manually by system operators. ETL software typically automates the entire process and can be run manually or on reoccurring schedules either as single jobs or aggregated into a batch of jobs. A properly designed ETL system extracts data from source systems and enforces data type and data validity standards and ensures it conforms structurally to the requirements of the output. Some ETL systems can also deliver data in a presentation-ready format so that application developers can build applications and end users can make decisions. The ETL process became a popular concept in the 1970s and is often used in d ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Federated Database System

A federated database system (FDBS) is a type of meta-database management system (DBMS), which transparently maps multiple autonomous database systems into a single federated database. The constituent databases are interconnected via a computer network and may be geographically decentralized. Since the constituent database systems remain autonomous, a federated database system is a contrastable alternative to the (sometimes daunting) task of merging several disparate databases. A federated database, or virtual database, is a composite of all constituent databases in a federated database system. There is no actual data integration in the constituent disparate databases as a result of data federation. Through data abstraction, federated database systems can provide a uniform user interface, enabling users and clients to store and retrieve data from multiple noncontiguous databases with a single query—even if the constituent databases are heterogeneous. To this end, a federated data ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Data Virtualization

Data virtualization is an approach to data management that allows an application to retrieve and manipulate data without requiring technical details about the data, such as how it is formatted at source, or where it is physically located, and can provide a single customer view (or single view of any other entity) of the overall data. Unlike the traditional extract, transform, load ("ETL") process, the data remains in place, and real-time access is given to the source system for the data. This reduces the risk of data errors, of the workload moving data around that may never be used, and it does not attempt to impose a single data model on the data (an example of heterogeneous data is a federated database system). The technology also supports the writing of transaction data updates back to the source systems. [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Business Rule

A business rule defines or constrains some aspect of business. It may be expressed to specify an action to be taken when certain conditions are true or may be phrased so it can only resolve to either true or false. Business rules are intended to assert business structure or to control or influence the behavior of the business. Business rules describe the operations, definitions and constraints that apply to an organization. Business rules can apply to people, processes, corporate behavior and computing systems in an organization, and are put in place to help the organization achieve its goals. For example, a business rule might state that ''no credit check is to be performed on return customers''. Other examples of business rules include requiring a rental agent to disallow a rental tenant if their credit rating is too low, or requiring company agents to use a list of preferred suppliers and supply schedules. While a business rule may be informal or even unwritten, documenting the r ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Decision Support

A decision support system (DSS) is an information system that supports business or organizational decision-making activities. DSSs serve the management, operations and planning levels of an organization (usually mid and higher management) and help people make decisions about problems that may be rapidly changing and not easily specified in advance—i.e. unstructured and semi-structured decision problems. Decision support systems can be either fully computerized or human-powered, or a combination of both. While academics have perceived DSS as a tool to support decision making processes, DSS users see DSS as a tool to facilitate organizational processes. Some authors have extended the definition of DSS to include any system that might support decision making and some DSS include a decision-making software component; Sprague (1980)Sprague, R;(1980).A Framework for the Development of Decision Support Systems" MIS Quarterly. Vol. 4, No. 4, pp.1-25. defines a properly termed DSS a ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Data Cleaning

Data cleansing or data cleaning is the process of detecting and correcting (or removing) corrupt or inaccurate records from a record set, table, or database and refers to identifying incomplete, incorrect, inaccurate or irrelevant parts of the data and then replacing, modifying, or deleting the dirty or coarse data. Data cleansing may be performed interactively with data wrangling tools, or as batch processing through scripting or a data quality firewall. After cleansing, a data set should be consistent with other similar data sets in the system. The inconsistencies detected or removed may have been originally caused by user entry errors, by corruption in transmission or storage, or by different data dictionary definitions of similar entities in different stores. Data cleaning differs from data validation in that validation almost invariably means data is rejected from the system at entry and is performed at the time of entry, rather than on batches of data. The actual process o ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |