
Memory Latency

''Memory latency'' is the time between initiating a request for a byte or word in memory and its retrieval by a processor. If the data are not in the processor's cache, it takes longer to obtain them, as the processor has to communicate with the external memory cells. Latency is therefore a fundamental measure of the speed of memory: the lower the latency, the faster the read operation. Latency should not be confused with memory bandwidth, which measures the throughput of memory. Latency can be expressed in clock cycles or in absolute time, measured in nanoseconds. Over time, memory latencies expressed in clock cycles have been fairly stable, but they have improved when measured in absolute time (Crucial Technology, "Speed ''vs.'' Latency: Why CAS latency isn't an accurate measure of memory performance").

See also
* Burst mode (computing)
* CAS latency
* Multi-channel memory architecture
* Interleaved memory

Multi-channel Memory Architecture

In the fields of digital electronics and computer hardware, multi-channel memory architecture is a technology that increases the data transfer rate between the DRAM and the memory controller by adding more channels of communication between them. Theoretically, this multiplies the data rate by exactly the number of channels present. Dual-channel memory employs two channels. The technique goes back as far as the 1960s, having been used in the IBM System/360 Model 91 and the CDC 6600. Modern high-end desktop and workstation processors such as the AMD Ryzen Threadripper series and the Intel Core i9 Extreme Edition lineup support quad-channel memory. Server processors from the AMD Epyc series and the Intel Xeon platforms support memory layouts ranging from quad-channel up to 12-channel. In March 2010, AMD released Socket G34 and Magny-Cours Opteron 6100 series processors with support for quad-channel memory. In 2006, Intel released chipsets that sup ...
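
The theoretical scaling described above can be sketched as a small calculation: peak bandwidth is the number of channels multiplied by the transfer rate and the width of each channel. This is a minimal sketch under stated assumptions. The function name `peak_bandwidth_gb_s`, the 64-bit channel width, and the 3200 MT/s transfer rate are illustrative choices, not figures from the text, and real systems rarely reach the theoretical peak.

```python
def peak_bandwidth_gb_s(channels: int,
                        mega_transfers_per_s: float,
                        bus_width_bits: int = 64) -> float:
    """Theoretical peak bandwidth in GB/s (1 GB = 1e9 bytes).

    Assumption: every channel has the same width and transfer rate,
    and 64 bits per channel is used only as an illustrative default.
    """
    bytes_per_transfer = bus_width_bits / 8
    bytes_per_second = channels * mega_transfers_per_s * 1e6 * bytes_per_transfer
    return bytes_per_second / 1e9


if __name__ == "__main__":
    # Compare one, two, and four channels at an assumed 3200 MT/s.
    for n in (1, 2, 4):
        print(n, "channel(s):", peak_bandwidth_gb_s(n, 3200), "GB/s")
```

Doubling the channel count doubles the result, which is the "exactly the number of channels" scaling the paragraph describes for the ideal case.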

Computer Memory

Computer memory stores information, such as data and programs, for immediate use in the computer. The term ''memory'' is often synonymous with the terms ''RAM,'' ''main memory,'' or ''primary storage.'' Archaic synonyms for main memory include ''core'' (for magnetic core memory) and ''store''. Main memory operates at a high speed compared to mass storage, which is slower but less expensive per bit and higher in capacity. Besides storing opened programs and data being actively processed, computer memory serves as a mass storage cache and write buffer to improve both reading and writing performance. Operating systems borrow RAM capacity for caching so long as it is not needed by running software. If needed, contents of the computer memory can be transferred to storage; a common way of doing this is through a memory management technique called ''virtual memory''. Modern computer memory is implemented as semiconductor memory, where data is stored within memory cell (com ...

SDRAM Latency

Memory timings or RAM timings describe the timing information of a memory module or the onboard LPDDRx. Due to the inherent qualities of VLSI and microelectronics, memory chips require time to fully execute commands. Executing commands too quickly results in data corruption and system instability. With appropriate time between commands, memory modules/chips can be given the opportunity to fully switch transistors, charge capacitors and correctly signal back information to the memory controller. Because system performance depends on how fast memory can be used, this timing directly affects the performance of the system. The timing of modern synchronous dynamic random-access memory (SDRAM) is commonly indicated using four parameters: CL, tRCD, tRP, and tRAS, in units of clock cycles; they are commonly written as four numbers separated with hyphens, ''e.g.'' 7-8-8-24. The fourth (tRAS) is often omitted, or a fifth, the Command rate, sometimes added (normally 2T or 1T, a ...
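
The hyphen-separated notation above can be read mechanically. The following is a minimal sketch, assuming the string holds three or four integers in the order CL, tRCD, tRP, tRAS; the `Timings` type and `parse_timings` function are hypothetical names introduced for illustration, and the optional fifth command-rate field (such as 2T) is deliberately not handled.

```python
from typing import NamedTuple, Optional


class Timings(NamedTuple):
    """SDRAM timings in clock cycles (hypothetical container type)."""
    cl: int
    trcd: int
    trp: int
    tras: Optional[int]  # None when the fourth value is omitted


def parse_timings(text: str) -> Timings:
    """Parse a string such as '7-8-8-24' or '7-8-8'.

    Assumption: only the three- and four-value forms are accepted;
    a trailing command rate like '2T' is outside this sketch.
    """
    parts = [int(p) for p in text.strip().split("-")]
    if len(parts) not in (3, 4):
        raise ValueError(f"expected 3 or 4 timing values, got {len(parts)}")
    cl, trcd, trp = parts[:3]
    tras = parts[3] if len(parts) == 4 else None
    return Timings(cl, trcd, trp, tras)


if __name__ == "__main__":
    print(parse_timings("7-8-8-24"))
    print(parse_timings("7-8-8"))
```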

SDRAM Burst Ordering

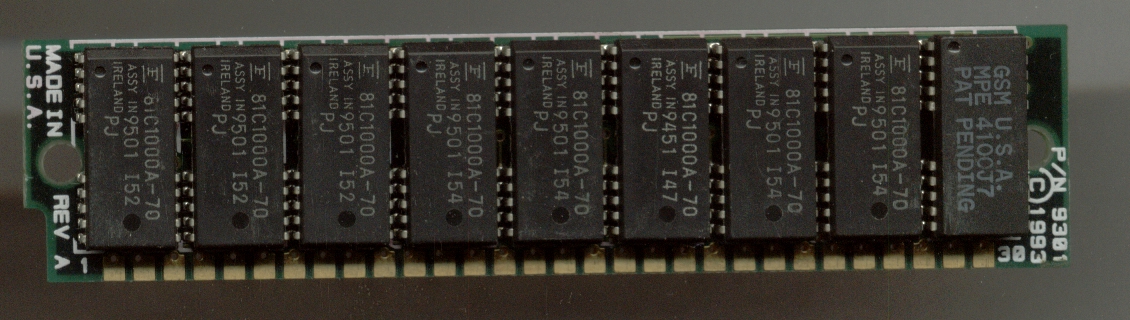

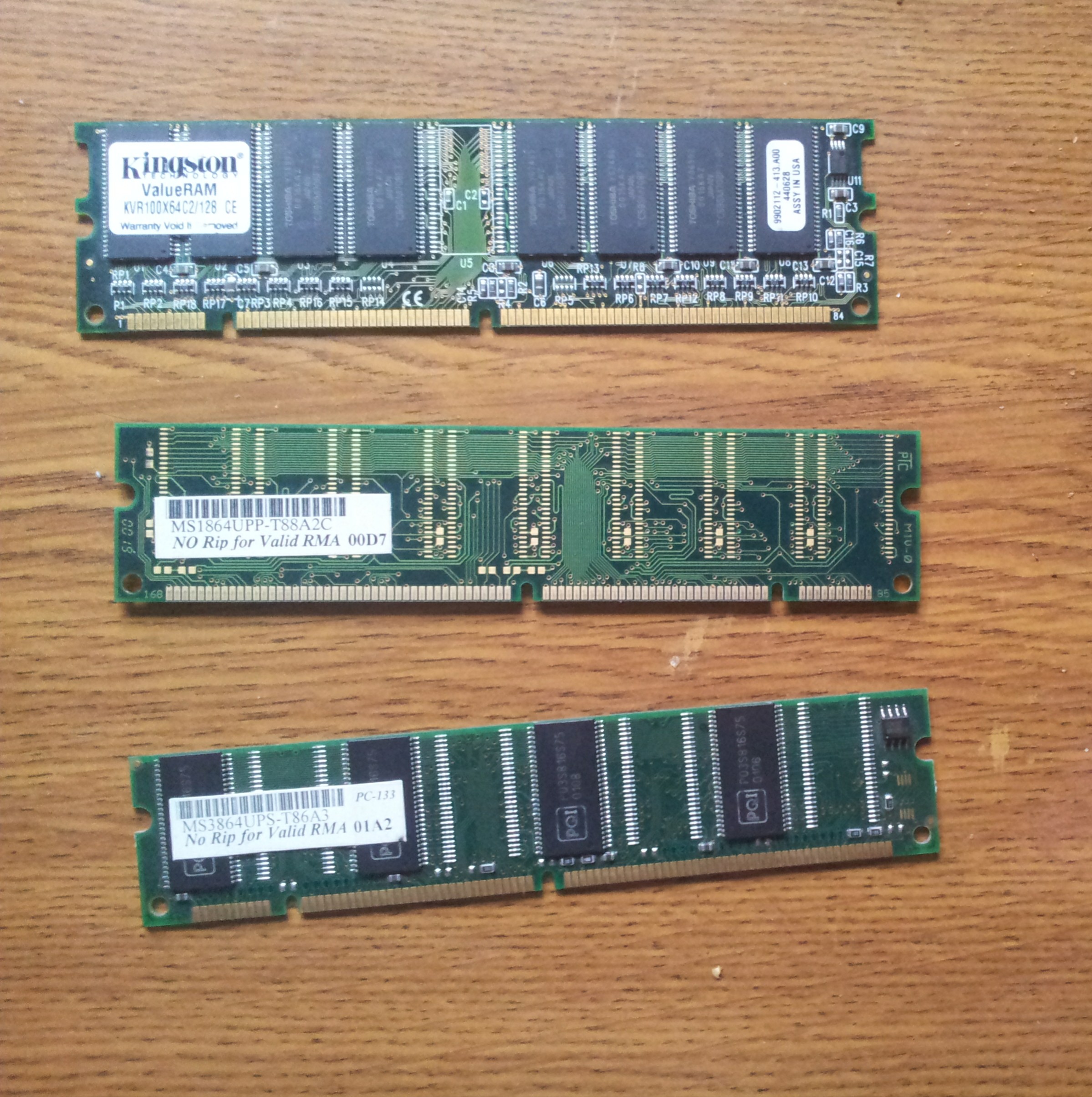

Synchronous dynamic random-access memory (synchronous dynamic RAM or SDRAM) is any DRAM where the operation of its external pin interface is coordinated by an externally supplied clock signal. DRAM integrated circuits (ICs) produced from the early 1970s to the early 1990s used an ''asynchronous'' interface, in which input control signals have a direct effect on internal functions delayed only by the trip across its semiconductor pathways. SDRAM has a ''synchronous'' interface, whereby changes on control inputs are recognised after a rising edge of its clock input. In SDRAM families standardized by JEDEC, the clock signal controls the stepping of an internal finite-state machine that responds to incoming commands. These commands can be pipelined to improve performance, with previously started operations completing while new commands are received. The memory is divided into several equally sized but independent sections called ''banks'', allowing the device to operate on a memory a ...

Interleaved Memory

In computing, interleaved memory is a design which compensates for the relatively slow speed of dynamic random-access memory (DRAM) or core memory by spreading memory addresses evenly across memory banks. That way, contiguous memory reads and writes use each memory bank in turn, resulting in higher memory throughput due to reduced waiting for memory banks to become ready for the operations. It differs from multi-channel memory architectures primarily in that interleaved memory does not add more channels between the main memory and the memory controller. However, channel interleaving is also possible, for example in Freescale i.MX6 processors, which allow interleaving to be done between two channels.

Overview

With interleaved memory, memory addresses are allocated to each memory bank in turn. For example, in an interleaved system with two memory banks (assuming word-addressable memory), if logical address 32 belongs to bank 0, then logical address 33 would belong to ban ...
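
The allocation of addresses to banks "in turn" can be sketched with modular arithmetic. This is a minimal sketch, assuming word-addressable memory and simple low-order interleaving; the helper names `bank_of` and `offset_in_bank` are hypothetical, and real memory controllers may use other address-to-bank mappings.

```python
def bank_of(address: int, num_banks: int) -> int:
    """Bank that holds a word address under low-order interleaving (assumed scheme)."""
    return address % num_banks


def offset_in_bank(address: int, num_banks: int) -> int:
    """Position of the word inside its bank under the same assumed scheme."""
    return address // num_banks


if __name__ == "__main__":
    # Consecutive addresses rotate through the banks, so a sequential
    # access stream touches each bank in turn.
    for addr in range(32, 36):
        print(addr, "-> bank", bank_of(addr, 2), "offset", offset_in_bank(addr, 2))
```

With two banks, address 32 maps to bank 0 and address 33 to the other bank, matching the example in the overview.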

CAS Latency

Column address strobe latency, also called CAS latency or CL, is the delay in clock cycles between the READ command and the moment data is available. In asynchronous DRAM, the interval is specified in nanoseconds (absolute time). In synchronous DRAM, the interval is specified in clock cycles. Because the latency depends on a number of clock ticks instead of absolute time, the actual time for an SDRAM module to respond to a CAS event might vary between uses of the same module if the clock rate differs.

RAM operation background

Dynamic RAM is arranged in a rectangular array. Each row is selected by a horizontal ''word line''. Sending a logical high signal along a given row enables the MOSFETs present in that row, connecting each storage capacitor to its corresponding vertical ''bit line''. Each bit line is connected to a ''sense amplifier'' that amplifies the small voltage change produced by the storage capacitor. This amplified signal is then output from the DRAM chip ...
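
To illustrate why the same CL value corresponds to different absolute times at different clock rates, the sketch below converts cycles to nanoseconds. It is a minimal sketch: the function name `cas_latency_ns` and the example clock rates are assumptions chosen for illustration, and `clock_mhz` here means the memory clock frequency, not the transfer rate (double data rate memory performs two transfers per clock).

```python
def cas_latency_ns(cl_cycles: int, clock_mhz: float) -> float:
    """Absolute CAS latency in nanoseconds.

    One cycle at f MHz lasts 1000 / f nanoseconds, so the latency is
    cl_cycles * 1000 / clock_mhz.
    """
    if clock_mhz <= 0:
        raise ValueError("clock_mhz must be positive")
    return cl_cycles * 1000.0 / clock_mhz


if __name__ == "__main__":
    # Same CL, different (assumed) clock rates: the absolute time differs.
    for mhz in (800.0, 1200.0, 1600.0):
        print(f"CL 16 at {mhz:.0f} MHz: {cas_latency_ns(16, mhz):.2f} ns")
```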

Latency (engineering)

Latency, from a general point of view, is a time delay between the cause and the effect of some physical change in the system being observed. Lag, as it is known in gaming circles, refers to the latency between the input to a simulation and the visual or auditory response, often occurring because of network delay in online games. The original meaning of “latency”, as used widely in psychology, medicine and most other disciplines, derives from “latent”, a word of Latin origin meaning “hidden”. Its different and relatively recent meaning (this topic) of “lateness” or “delay” appears to derive from its superficial similarity to the word “late”, from the Old English “laet”. Latency is physically a consequence of the limited velocity at which any physical interaction can propagate. The magnitude of this velocity is always less than or equal to the speed of light. Therefore, every physical s ...

Burst Mode (computing)

Burst may refer to:
* Burst mode, a mode of operation where events occur in rapid succession
** Burst transmission, a term in telecommunications
** Burst switching, a feature of some packet-switched networks
** Bursting, a signaling mode of neurons
* Burst phase, a feature of the PAL television format
* Burst fracture, a type of spinal injury
* Burst charge, a component of some fireworks
* Burst noise, a type of electronic noise that occurs in semiconductors
* Burst (coin), a cryptocurrency
* Burst finish, a two- or three-color faded effect applied to musical instruments, e.g. sunburst (finish)
* Burst (village), a village in Erpe-Mere
* Burst.com, a software company
* Burst Radio, the University of Bristol student radio st ...