|

Locks With Ordered Sharing

In databases and transaction processing the term Locks with ordered sharing comprises several variants of the ''two-phase locking'' (2PL) concurrency control protocol generated by changing the blocking semantics of locks upon conflicts. Further '' softening'' of locks eliminates thrashing. See also * Autonomic computing Autonomic computing (AC) is distributed computing resources with self-management (computer science), self-managing characteristics, adapting to unpredictable changes while hiding intrinsic complexity to operators and users. Initiated by IBM in 2001 ... References * D. Agrawal, A. El Abbadi, A. E. Lang''The Performance of Protocols Based on Locks with Ordered Sharing'' IEEE Transactions on Knowledge and Data Engineering, Volume 6, Issue 5, October 1994, pp. 805–818, * Mahmoud, H. A., Arora, V., Nawab, F., Agrawal, D., & El Abbadi, A. (2014)Maat: Effective and scalable coordination of distributed transactions in the cloud.''Proceedings of the VLDB Endowment'', '' ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Database

In computing, a database is an organized collection of data or a type of data store based on the use of a database management system (DBMS), the software that interacts with end users, applications, and the database itself to capture and analyze the data. The DBMS additionally encompasses the core facilities provided to administer the database. The sum total of the database, the DBMS and the associated applications can be referred to as a database system. Often the term "database" is also used loosely to refer to any of the DBMS, the database system or an application associated with the database. Before digital storage and retrieval of data have become widespread, index cards were used for data storage in a wide range of applications and environments: in the home to record and store recipes, shopping lists, contact information and other organizational data; in business to record presentation notes, project research and notes, and contact information; in schools as flash c ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Transaction Processing

In computer science, transaction processing is information processing that is divided into individual, indivisible operations called ''transactions''. Each transaction must succeed or fail as a complete unit; it can never be only partially complete. For example, when you purchase a book from an online bookstore, you exchange money (in the form of credit) for a book. If your credit is good, a series of related operations ensures that you get the book and the bookstore gets your money. However, if a single operation in the series fails during the exchange, the entire exchange fails. You do not get the book and the bookstore does not get your money. The technology responsible for making the exchange balanced and predictable is called ''transaction processing''. Transactions ensure that data-oriented resources are not permanently updated unless all operations within the transactional unit complete successfully. By combining a set of related operations into a unit that either com ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Two-phase Locking

In databases and transaction processing, two-phase locking (2PL) is a pessimistic concurrency control method that guarantees conflict-serializability. Philip A. Bernstein, Vassos Hadzilacos, Nathan Goodman (1987) ''Concurrency Control and Recovery in Database Systems'' Addison Wesley Publishing Company, Gerhard Weikum, Gottfried Vossen (2001) ''Transactional Information Systems'' Elsevier, It is also the name of the resulting set of database transaction schedules (histories). The protocol uses locks, applied by a transaction to data, which may block (interpreted as signals to stop) other transactions from accessing the same data during the transaction's life. By the 2PL protocol, locks are applied and removed in two phases: # Expanding phase: locks are acquired and no locks are released. # Shrinking phase: locks are released and no locks are acquired. Two types of locks are used by the basic protocol: ''Shared'' and ''Exclusive'' locks. Refinements of the basic protocol may ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Concurrency Control

In information technology and computer science, especially in the fields of computer programming, operating systems, multiprocessors, and databases, concurrency control ensures that correct results for concurrent operations are generated, while getting those results as quickly as possible. Computer systems, both software and hardware, consist of modules, or components. Each component is designed to operate correctly, i.e., to obey or to meet certain consistency rules. When components that operate concurrently interact by messaging or by sharing accessed data (in memory or storage), a certain component's consistency may be violated by another component. The general area of concurrency control provides rules, methods, design methodologies, and theories to maintain the consistency of components operating concurrently while interacting, and thus the consistency and correctness of the whole system. Introducing concurrency control into a system means applying operation constraints ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Serializability

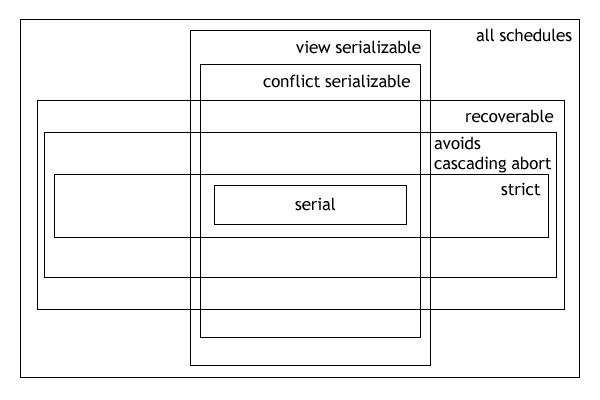

In the fields of databases and transaction processing (transaction management), a schedule (or history) of a system is an abstract model to describe the order of executions in a set of transactions running in the system. Often it is a ''list'' of operations (actions) ordered by time, performed by a set of transactions that are executed together in the system. If the order in time between certain operations is not determined by the system, then a ''partial order'' is used. Examples of such operations are requesting a read operation, reading, writing, aborting, committing, requesting a lock, locking, etc. Often, only a subset of the transaction operation types are included in a schedule. Schedules are fundamental concepts in database concurrency control theory. In practice, most general purpose database systems employ conflict-serializable and strict recoverable schedules. Notation Grid notation: * Columns: The different transactions in the schedule. * Rows: The time order of ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Graceful Exit

Gracefulness, or being graceful, is the physical characteristic of displaying "pretty agility", in the form of elegant movement, poise, or balance. The etymological root of ''grace'' is the Latin word ''gratia'' from ''gratus'', meaning pleasing. Gracefulness has been described by reference to its being aesthetically pleasing. For example: The difficulty in defining exactly what constitutes gracefulness is described in this analysis of Henri Bergson's use of the term: Gracefulness is often referenced by simile, for example with people being described as "graceful as a swan", or "as graceful as a ballerina". The concept of gracefulness is applied both to movement, and to inanimate objects. For example, certain trees are commonly referred to as being "graceful", such as the '' Betula albosinensis'', '' Prunus × yedoensis'' (Yoshino cherry), and ''Areca catechu'' (betel-nut palm). Gracefulness is sometimes also attributed to non-corporeal human actions, such as the use of lan ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Thrashing (computer Science)

In computer science, thrashing occurs in a system with virtual memory when a computer's real storage resources are ''overcommitted'', leading to a constant state of paging and page faults, slowing most application-level processing. This causes the performance of the computer to degrade or even collapse. The situation can continue indefinitely until the user closes some running applications or the active processes free up additional virtual memory resources. After initialization, most programs operate on a small number of code and data pages compared to the total memory the program requires. The pages most frequently accessed at any point are called the working set, which may change over time. When the working set is not significantly greater than the system's total number of real storage ''page frames'', virtual memory systems work most efficiently, and an insignificant amount of computing is spent resolving page faults. As the total of the working sets grows, resolving page fa ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Autonomic Computing

Autonomic computing (AC) is distributed computing resources with self-management (computer science), self-managing characteristics, adapting to unpredictable changes while hiding intrinsic complexity to operators and users. Initiated by IBM in 2001, this initiative ultimately aimed to develop computer systems capable of self-management, to overcome the rapidly growing complexity of computing systems management, and to reduce the barrier that complexity poses to further growth. Description The AC system concept is designed to make adaptive decisions, using high-level policies. It will constantly check and optimize its status and automatically adapt itself to changing conditions. An autonomic computing framework is composed of autonomic Software component, components (AC) interacting with each other. An AC can be modeled in terms of two main control schemes (local and global) with sensors (for self-monitoring), Actuator, effectors (for self-adjustment), knowledge and planner/adapter ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Data Management

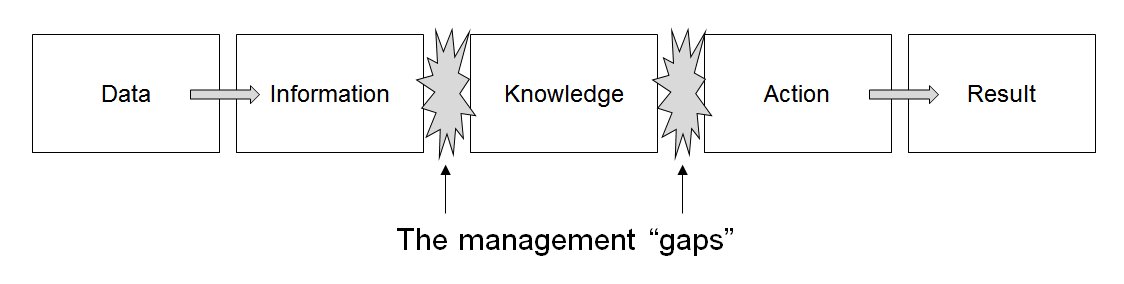

Data management comprises all disciplines related to handling data as a valuable resource, it is the practice of managing an organization's data so it can be analyzed for decision making. Concept The concept of data management emerged alongside the evolution of computing technology. In the 1950s, as computers became more prevalent, organizations began to grapple with the challenge of organizing and storing data efficiently. Early methods relied on punch cards and manual sorting, which were labor-intensive and prone to errors. The introduction of database management systems in the 1970s marked a significant milestone, enabling structured storage and retrieval of data. By the 1980s, relational database models revolutionized data management, emphasizing the importance of data as an asset and fostering a data-centric mindset in business. This era also saw the rise of data governance practices, which prioritized the organization and regulation of data to ensure quality and complian ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Databases

In computing, a database is an organized collection of data or a type of data store based on the use of a database management system (DBMS), the software that interacts with end users, applications, and the database itself to capture and analyze the data. The DBMS additionally encompasses the core facilities provided to administer the database. The sum total of the database, the DBMS and the associated applications can be referred to as a database system. Often the term "database" is also used loosely to refer to any of the DBMS, the database system or an application associated with the database. Before digital storage and retrieval of data have become widespread, index cards were used for data storage in a wide range of applications and environments: in the home to record and store recipes, shopping lists, contact information and other organizational data; in business to record presentation notes, project research and notes, and contact information; in schools as flash card ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Concurrency Control

In information technology and computer science, especially in the fields of computer programming, operating systems, multiprocessors, and databases, concurrency control ensures that correct results for concurrent operations are generated, while getting those results as quickly as possible. Computer systems, both software and hardware, consist of modules, or components. Each component is designed to operate correctly, i.e., to obey or to meet certain consistency rules. When components that operate concurrently interact by messaging or by sharing accessed data (in memory or storage), a certain component's consistency may be violated by another component. The general area of concurrency control provides rules, methods, design methodologies, and theories to maintain the consistency of components operating concurrently while interacting, and thus the consistency and correctness of the whole system. Introducing concurrency control into a system means applying operation constraints ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |