
Interior Point Methods

Interior-point methods (also referred to as barrier methods or IPMs) are algorithms for solving linear and non-linear convex optimization problems. IPMs combine two advantages of previously known algorithms:

* Theoretically, their run-time is polynomial, in contrast to the simplex method, which has exponential run-time in the worst case.
* Practically, they run as fast as the simplex method, in contrast to the ellipsoid method, which has polynomial run-time in theory but is very slow in practice.

In contrast to the simplex method, which traverses the boundary of the feasible region, and the ellipsoid method, which bounds the feasible region from outside, an IPM reaches a best solution by traversing the interior of the feasible region, hence the name.

History: An interior point method was discovered by Soviet mathematician I. I. Dikin in 1967. The method was reinvented in the U.S. in the mid-1980s. In 1984, Narendra Karmarkar developed a method for linear programmin ...
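The idea of traversing the interior of the feasible region can be sketched with a logarithmic barrier on a toy problem. The following is a minimal illustration, not any particular published IPM: it minimizes x subject to lo <= x <= hi, and the function name `barrier_minimize`, the parameters `mu` and `eps`, and the damped Newton centering step are all assumptions chosen for this sketch.

```python
import math


def barrier_minimize(lo=0.0, hi=2.0, x0=1.0, mu=10.0, eps=1e-6):
    """Minimize x subject to lo <= x <= hi using a log-barrier sketch.

    For a barrier weight t, the centering problem is
        minimize  t * x - log(x - lo) - log(hi - x),
    solved here by damped Newton steps that never leave the interior.
    """
    x, t, m = x0, 1.0, 2  # m = number of inequality constraints
    path = [x]            # every iterate, to show they stay strictly inside
    while True:
        for _ in range(200):  # centering loop with an iteration cap
            g = t - 1.0 / (x - lo) + 1.0 / (hi - x)          # first derivative
            h = 1.0 / (x - lo) ** 2 + 1.0 / (hi - x) ** 2    # second derivative
            lam = abs(g) / math.sqrt(h)                      # Newton decrement
            if lam < 1e-6:
                break
            x -= (g / h) / (1.0 + lam)  # damped step keeps x strictly feasible
            path.append(x)
        if m / t < eps:  # m / t bounds the gap to the optimum on the central path
            return x, path
        t *= mu  # tighten the barrier and re-center


x_opt, path = barrier_minimize()
print(x_opt)
```

Every iterate in `path` lies strictly between `lo` and `hi`, and the final point approaches the boundary optimum at `lo` only as `t` grows.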

Karmarkar

Narendra Krishna Karmarkar (born 1956) is an Indian mathematician. He developed Karmarkar's algorithm. He is listed as an ISI highly cited researcher. He invented one of the first provably polynomial-time algorithms for linear programming, which is generally referred to as an interior point method. The algorithm is a cornerstone in the field of linear programming. He published his famous result in 1984 while he was working for Bell Laboratories in New Jersey.

Biography: Karmarkar received his B.Tech. in electrical engineering from IIT Bombay in 1978, M.S. from the California Institute of Technology in 1979, and Ph.D. in Computer Science from the University of California, Berkeley in 1983 under the supervision of Richard M. Karp. Karmarkar was a post-doctoral research fellow at IBM research (1983), Member of Technical Staff and fellow at Mathematical Sciences Research Center, AT&T Bell Laboratories (1983–1998), professor of mathematics at ...

Epigraph (mathematics)

In mathematics, the epigraph or supergraph of a function $f : X \to [-\infty, \infty]$ valued in the extended real numbers $[-\infty, \infty] = \mathbb{R} \cup \{\pm\infty\}$ is the set $\operatorname{epi} f = \{(x, r) \in X \times \mathbb{R} : r \geq f(x)\}$ consisting of all points in the Cartesian product $X \times \mathbb{R}$ lying on or above the function's graph. Similarly, the strict epigraph $\operatorname{epi}_S f$ is the set of points in $X \times \mathbb{R}$ lying strictly above its graph. Importantly, unlike the graph of $f$, the epigraph consists of points in $X \times \mathbb{R}$ (this is true of the graph only when $f$ is real-valued). If the function takes $\pm\infty$ as a value then $\operatorname{graph} f$ will not be a subset of its epigraph $\operatorname{epi} f$. For example, if $f(x_0) = \infty$ then the point $(x_0, f(x_0)) = (x_0, \infty)$ will belong to $\operatorname{graph} f$ but not to $\operatorname{epi} f$. These two sets are nevertheless closely re ...
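The membership condition for the epigraph can be written directly as a predicate. The sketch below is illustrative only: the helper names `in_epigraph` and `in_strict_epigraph` and the example functions are assumptions, and `math.inf` stands in for the extended value $+\infty$.

```python
import math


def in_epigraph(f, x, r):
    """True if the point (x, r), with r real, lies on or above the graph of f."""
    return r >= f(x)


def in_strict_epigraph(f, x, r):
    """True if the point (x, r), with r real, lies strictly above the graph of f."""
    return r > f(x)


def square(x):
    return x * x


def blows_up_at_zero(x):
    # Hypothetical extended-real-valued function: +infinity at 0, zero elsewhere.
    return math.inf if x == 0 else 0.0


print(in_epigraph(square, 2.0, 4.0))             # on the graph
print(in_strict_epigraph(square, 2.0, 4.0))      # not strictly above it
print(in_epigraph(blows_up_at_zero, 0.0, 1e300)) # no real r is >= +infinity
```

The last call shows why a point where the function equals $+\infty$ contributes nothing to the epigraph: no real number r satisfies r >= f(x) there.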

Newton's Method

In numerical analysis, the Newton–Raphson method, also known simply as Newton's method, named after Isaac Newton and Joseph Raphson, is a root-finding algorithm which produces successively better approximations to the roots (or zeroes) of a real-valued function. The most basic version starts with a real-valued function $f$, its derivative $f'$, and an initial guess $x_0$ for a root of $f$. If $f$ satisfies certain assumptions and the initial guess is close, then $x_1 = x_0 - \frac{f(x_0)}{f'(x_0)}$ is a better approximation of the root than $x_0$. Geometrically, $(x_1, 0)$ is the x-intercept of the tangent of the graph of $f$ at $(x_0, f(x_0))$: that is, the improved guess, $x_1$, is the unique root of the linear approximation of $f$ at the initial guess, $x_0$. The process is repeated as $x_{n+1} = x_n - \frac{f(x_n)}{f'(x_n)}$ until a sufficiently precise value is reached. The number of correct digits roughly doubles with each step. This algorithm is first in the class of Householder's methods, and was succeeded by Halley's method. The method can also be extended t ...
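The update rule described above translates into a short loop. This is a minimal sketch under stated assumptions: the function name `newton`, the stopping tolerance `tol`, and the iteration cap `max_iter` are choices made for the example, and the test function $f(x) = x^2 - 2$ is just a convenient case whose positive root is $\sqrt{2}$.

```python
def newton(f, df, x0, tol=1e-12, max_iter=50):
    """Apply the Newton update x <- x - f(x) / f'(x) until |f(x)| < tol."""
    x = x0
    for _ in range(max_iter):
        fx = f(x)
        if abs(fx) < tol:
            return x
        dfx = df(x)
        if dfx == 0:
            # The tangent is horizontal, so it has no x-intercept.
            raise ZeroDivisionError("derivative vanished; Newton step undefined")
        x = x - fx / dfx
    return x  # best estimate after max_iter steps; may not have converged


root = newton(lambda x: x * x - 2.0, lambda x: 2.0 * x, x0=1.0)
print(root)
```

Starting from `x0=1.0`, the iterates are 1.5, 1.41666..., 1.41421568..., which shows the roughly doubling number of correct digits mentioned in the text.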

Positive-definite Function

In mathematics, a positive-definite function is, depending on the context, either of two types of function.

Definition 1: Let $\mathbb{R}$ be the set of real numbers and $\mathbb{C}$ be the set of complex numbers. A function $f: \mathbb{R} \to \mathbb{C}$ is called positive semi-definite if for all real numbers $x_1, \ldots, x_n$ the $n \times n$ matrix $A = (a_{ij})_{i,j=1}^{n}$, $a_{ij} = f(x_i - x_j)$, is a positive semi-definite matrix. By definition, a positive semi-definite matrix, such as $A$, is Hermitian; therefore $f(-x)$ is the complex conjugate of $f(x)$. In particular, it is necessary (but not sufficient) that $f(0) \geq 0$ and $|f(x)| \leq f(0)$ (these inequalities follow from the condition for $n = 1, 2$). A function is negative semi-definite if the inequality is reversed. A function is definite if the weak inequality is replaced with a strong (<, > 0).

Examples: If $(X, \langle \cdot, \cdot \rangle)$ is a real inner prod ...
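Definition 1 can be probed numerically by building the matrix $a_{ij} = f(x_i - x_j)$ and evaluating its quadratic form. The sketch below is restricted to real-valued even functions for simplicity (the definition itself allows complex values); the helper names `kernel_matrix` and `quadratic_form` and the sample points are assumptions. A negative value of the quadratic form disproves positive semi-definiteness, while non-negative values on a few samples prove nothing.

```python
import math


def kernel_matrix(f, xs):
    """Build A with entries a_ij = f(x_i - x_j)."""
    return [[f(xi - xj) for xj in xs] for xi in xs]


def quadratic_form(a, z):
    """Evaluate z^T A z for a real square matrix a and a real vector z."""
    n = len(z)
    return sum(z[i] * a[i][j] * z[j] for i in range(n) for j in range(n))


xs = [0.0, 0.5, 1.3, 2.0]
test_vectors = [[1.0, -1.0, 0.0, 0.0], [1.0, 2.0, -3.0, 0.5], [0.0, 1.0, -1.0, 1.0]]

# cos is positive semi-definite: z^T A z = (sum z_i cos x_i)^2 + (sum z_i sin x_i)^2.
cos_values = [quadratic_form(kernel_matrix(math.cos, xs), z) for z in test_vectors]

# x -> x^2 is not: it violates |f(x)| <= f(0), and the form below is negative.
square_value = quadratic_form(kernel_matrix(lambda x: x * x, [0.0, 1.0]), [1.0, -1.0])

print(cos_values, square_value)
```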

Karmarkar Algorithm

Karmarkar's algorithm is an algorithm introduced by Narendra Karmarkar in 1984 for solving linear programming problems. It was the first reasonably efficient algorithm that solves these problems in polynomial time. The ellipsoid method is also polynomial time but proved to be inefficient in practice. Denoting by $n$ the number of variables, $m$ the number of inequality constraints, and $L$ the number of bits of input to the algorithm, Karmarkar's algorithm requires $O(m^{1.5} n^{2} L)$ operations on $O(L)$-digit numbers, as compared to $O(n^3 (n+m) L)$ such operations for the ellipsoid algorithm. In "square" problems, when $m$ is in $O(n)$, Karmarkar's algorithm requires $O(n^{3.5} L)$ operations on $O(L)$-digit numbers, as compared to $O(n^4 L)$ such operations for the ellipsoid algorithm. The runtime of Karmarkar's algorithm is thus $O(n^{3.5} L^2 \cdot \log L \cdot \log \log L)$, using FFT-based multiplication (see Big O notation). Karmarkar's algorithm falls within the class of interior-point methods: th ...

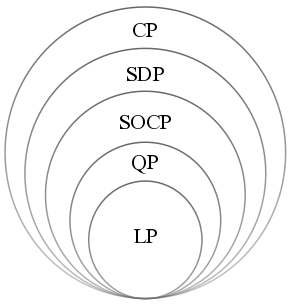

Convex Optimization

Convex optimization is a subfield of mathematical optimization that studies the problem of minimizing convex functions over convex sets (or, equivalently, maximizing concave functions over convex sets). Many classes of convex optimization problems admit polynomial-time algorithms, whereas mathematical optimization is in general NP-hard.

Definition (abstract form): A convex optimization problem is defined by two ingredients:

* The objective function, which is a real-valued convex function of $n$ variables, $f : \mathcal{D} \subseteq \mathbb{R}^n \to \mathbb{R}$;
* The feasible set, which is a convex subset $C \subseteq \mathbb{R}^n$.

The goal of the problem is to find some $\mathbf{x}^* \in C$ attaining $\inf \{ f(\mathbf{x}) : \mathbf{x} \in C \}$. In general, there are three options regarding the existence of a solution:

* If such a point $\mathbf{x}^*$ exists, it is referred to as an optimal point or solution; the set of all optimal points is called the optimal set; and the problem is called solvable.
* If f is unbou ...
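A tiny instance of the abstract form makes the two ingredients concrete. The sketch below takes the convex objective $f(x) = (x - 3)^2$ and the convex feasible set $C = [0, 2]$, and solves it with projected gradient descent. That method is a technique swapped in for illustration and is not mentioned in the text; the names `projected_gradient`, `step`, and `iters` are likewise assumptions.

```python
def projected_gradient(grad, project, x0, step=0.1, iters=200):
    """Minimize a convex function over a convex set by projected gradient steps."""
    x = x0
    for _ in range(iters):
        x = project(x - step * grad(x))  # gradient step, then return to the set
    return x


def grad_f(x):
    # Gradient of the objective f(x) = (x - 3)^2.
    return 2.0 * (x - 3.0)


def project_onto_c(x):
    # Euclidean projection onto the feasible set C = [0, 2].
    return min(max(x, 0.0), 2.0)


x_star = projected_gradient(grad_f, project_onto_c, x0=0.0)
print(x_star)
```

The unconstrained minimizer 3 lies outside C, so the optimal point sits on the boundary of the feasible set at 2; this problem is solvable in the sense defined above.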

Convex Function

In mathematics, a real-valued function is called convex if the line segment between any two distinct points on the graph of the function lies above or on the graph between the two points. Equivalently, a function is convex if its epigraph (the set of points on or above the graph of the function) is a convex set. In simple terms, a convex function's graph is shaped like a cup $\cup$ (or a straight line like a linear function), while a concave function's graph is shaped like a cap $\cap$. A twice-differentiable function of a single variable is convex if and only if its second derivative is nonnegative on its entire domain. Well-known examples of convex functions of a single variable include a linear function $f(x) = cx$ (where $c$ is a real number), a quadratic function $cx^2$ ($c$ as a nonnegative real number) and an exponential function $ce^x$ ($c$ as a nonnegative real number). Convex functions pl ...
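The line-segment condition can be tested on sample points by checking $f(\lambda a + (1 - \lambda) b) \leq \lambda f(a) + (1 - \lambda) f(b)$. The sketch below searches a grid for a violation; the name `find_convexity_violation`, the grid, and the tolerance are assumptions. Finding a violation proves the function is not convex on the sampled range, whereas finding none is only evidence, not a proof.

```python
import math


def find_convexity_violation(f, points, lambdas, tol=1e-9):
    """Return (a, b, lam) where the chord lies below the graph, or None."""
    for a in points:
        for b in points:
            for lam in lambdas:
                mid = lam * a + (1.0 - lam) * b
                chord = lam * f(a) + (1.0 - lam) * f(b)
                if f(mid) > chord + tol:
                    return (a, b, lam)
    return None


grid = [i / 4 for i in range(-8, 9)]  # sample points in [-2, 2]
lams = [0.25, 0.5, 0.75]

print(find_convexity_violation(lambda x: 3.0 * x, grid, lams))   # linear
print(find_convexity_violation(lambda x: x * x, grid, lams))     # quadratic
print(find_convexity_violation(math.exp, grid, lams))            # exponential
print(find_convexity_violation(lambda x: -x * x, grid, lams))    # concave
```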

Convex Program

Convex optimization is a subfield of mathematical optimization that studies the problem of minimizing convex functions over convex sets (or, equivalently, maximizing concave functions over convex sets). Many classes of convex optimization problems admit polynomial-time algorithms, whereas mathematical optimization is in general NP-hard.

Definition (abstract form): A convex optimization problem is defined by two ingredients:

* The objective function, which is a real-valued convex function of $n$ variables, $f : \mathcal{D} \subseteq \mathbb{R}^n \to \mathbb{R}$;
* The feasible set, which is a convex subset $C \subseteq \mathbb{R}^n$.

The goal of the problem is to find some $\mathbf{x}^* \in C$ attaining $\inf \{ f(\mathbf{x}) : \mathbf{x} \in C \}$. In general, there are three options regarding the existence of a solution:

* If such a point $\mathbf{x}^*$ exists, it is referred to as an optimal point or solution; the set of all optimal points is called the optimal set; and the problem is called solvable.
* If f is unbo ...

Mehrotra Predictor–corrector Method

Mehrotra's predictor–corrector method in optimization is a specific interior point method for linear programming. It was proposed in 1989 by Sanjay Mehrotra. The method is based on the fact that at each iteration of an interior point algorithm it is necessary to compute the Cholesky decomposition (factorization) of a large matrix to find the search direction. The factorization step is the most computationally expensive step in the algorithm. Therefore, it makes sense to use the same decomposition more than once before recomputing it. At each iteration of the algorithm, Mehrotra's predictor–corrector method uses the same Cholesky decomposition to find two different directions: a predictor and a corrector. The idea is to first compute an optimizing search direction based on a first-order term (predictor). The step size that can be taken in this direction is used to evaluate how much centrality correction is needed. Then, a corrector term is computed: this contains both a central ...
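The cost argument above rests on factoring once and solving twice. The sketch below shows only that reuse pattern on a small symmetric positive-definite matrix; it is not Mehrotra's method itself. The matrix and the two right-hand sides `predictor_rhs` and `corrector_rhs` are hypothetical placeholders, whereas in the actual algorithm they would come from the Newton system of the linear program.

```python
import math


def cholesky(a):
    """Return lower-triangular L with L L^T = a (a symmetric positive definite)."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                low[i][j] = math.sqrt(a[i][i] - s)
            else:
                low[i][j] = (a[i][j] - s) / low[j][j]
    return low


def solve_with_factor(low, b):
    """Solve (L L^T) x = b by forward then backward substitution."""
    n = len(b)
    y = [0.0] * n
    for i in range(n):  # forward: L y = b
        y[i] = (b[i] - sum(low[i][k] * y[k] for k in range(i))) / low[i][i]
    x = [0.0] * n
    for i in reversed(range(n)):  # backward: L^T x = y
        x[i] = (y[i] - sum(low[k][i] * x[k] for k in range(i + 1, n))) / low[i][i]
    return x


matrix = [[4.0, 2.0], [2.0, 3.0]]
factor = cholesky(matrix)            # expensive step, done once per iteration

predictor_rhs = [2.0, 1.0]           # hypothetical right-hand sides
corrector_rhs = [0.0, 4.0]
predictor = solve_with_factor(factor, predictor_rhs)  # cheap triangular solves
corrector = solve_with_factor(factor, corrector_rhs)
print(predictor, corrector)
```

For an n-by-n dense matrix the factorization costs on the order of n^3 operations while each pair of triangular solves costs on the order of n^2, which is why the second direction is comparatively cheap.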

Iteration

Iteration is the repetition of a process in order to generate a (possibly unbounded) sequence of outcomes. Each repetition of the process is a single iteration, and the outcome of each iteration is then the starting point of the next iteration. In mathematics and computer science, iteration (along with the related technique of recursion) is a standard element of algorithms.

Mathematics: In mathematics, iteration may refer to the process of iterating a function, i.e. applying a function repeatedly, using the output from one iteration as the input to the next. Iteration of apparently simple functions can produce complex behaviors and difficult problems; for examples, see the Collatz conjecture and juggler sequences. Another use of iteration in mathematics is in iterative methods which are used to produce approximate numerical solutions to certain mathematical problems. Newton's method is an example of an iterative method. Manual calculation of a number's sq ...
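Iterating a function, with each output fed back as the next input, can be sketched generically. The helper `iterate`, its `max_steps` guard, and the choice of starting value are assumptions for this example; the Collatz step is used because the text mentions the Collatz conjecture.

```python
def iterate(step, start, stop, max_steps=10_000):
    """Apply step repeatedly from start, collecting outcomes until stop(x) holds."""
    sequence = [start]
    while not stop(sequence[-1]) and len(sequence) <= max_steps:
        sequence.append(step(sequence[-1]))  # output becomes the next input
    return sequence


def collatz_step(n):
    """One Collatz step: halve even numbers, map odd n to 3n + 1."""
    return n // 2 if n % 2 == 0 else 3 * n + 1


orbit = iterate(collatz_step, 6, lambda n: n == 1)
print(orbit)  # [6, 3, 10, 5, 16, 8, 4, 2, 1]
```

Whether this loop reaches 1 for every positive starting value is exactly the open Collatz conjecture, which is why the sketch carries a `max_steps` guard.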

Arkadi Nemirovski

Arkadi Nemirovski (born March 14, 1947) is a professor at the H. Milton Stewart School of Industrial and Systems Engineering at the Georgia Institute of Technology. He has been a leader in continuous optimization and is best known for his work on the ellipsoid method, modern interior-point methods and robust optimization.

Biography: Nemirovski earned a Ph.D. in Mathematics in 1974 from Moscow State University and a Doctor of Sciences in Mathematics degree in 1990 from the Institute of Cybernetics of the Ukrainian Academy of Sciences in Kiev. He has won three prestigious prizes: the Fulkerson Prize, the George B. Dantzig Prize, and the John von Neumann Theory Prize. He was elected a member of the U.S. National Academy of Engineering (NAE) in 2017 "for the development of efficient algorithms for large-scale convex optimization problems", and the U.S. National Academy of Sciences (NAS) in 2020. In 2023, Nemirovski and Yurii Nesterov were jointly awarded the 2023 WLA Prize in ...

Yurii Nesterov

Yurii Nesterov is a Russian mathematician, an internationally recognized expert in convex optimization, especially in the development of efficient algorithms and numerical optimization analysis. He is currently a professor at the University of Louvain (UCLouvain).

Biography: In 1977, Yurii Nesterov graduated in applied mathematics at Moscow State University. From 1977 to 1992 he was a researcher at the Central Economic Mathematical Institute of the Russian Academy of Sciences. Since 1993, he has been working at UCLouvain, specifically in the Department of Mathematical Engineering from the Louvain School of Engineering, Center for Operations Research and Econometrics. In 2000, Nesterov received the Dantzig Prize. In 2009, Nesterov won the John von Neumann Theory Prize. In 2016, Nesterov received the EURO Gold Medal. In 2023, Yurii Nesterov and Arkadi Nemirovski received the WLA Prize in Computer Science or Mathematics, "for their seminal work in convex optimization theo ...