
Generalized Logistic Distribution

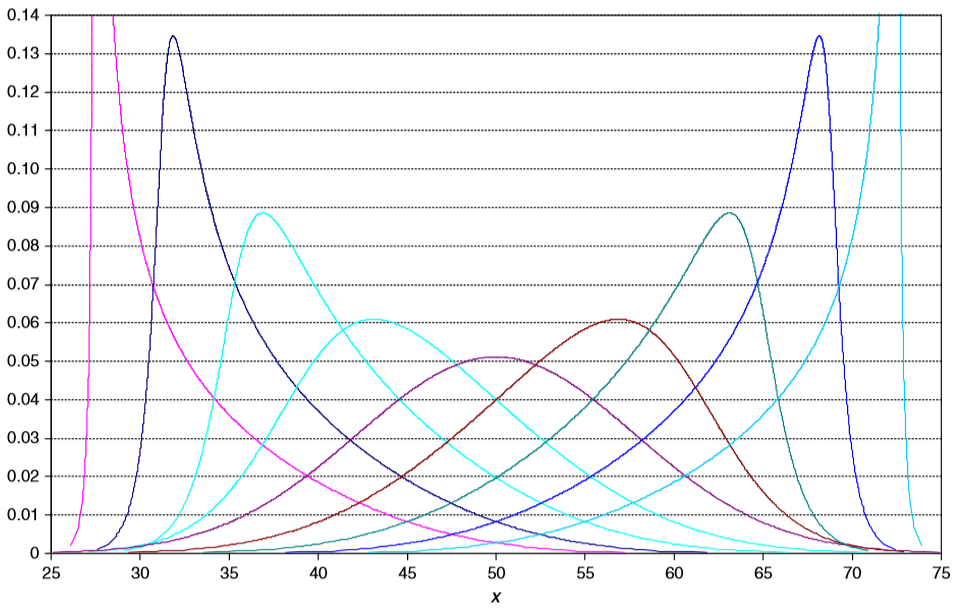

The term generalized logistic distribution is used as the name for several different families of probability distributions. For example, Johnson et al. (Johnson, N.L., Kotz, S., Balakrishnan, N. (1995) *Continuous Univariate Distributions, Volume 2*, Wiley, pp. 140–142) list four forms. The Type I family has also been called the skew-logistic distribution. Other families of distributions have also been called generalized logistic distributions. One is the shifted log-logistic distribution, which is a generalization of the log-logistic distribution. Another is the metalog ("meta-logistic") distribution, which is highly flexible in shape and bounds and can be fitted to data with linear least squares.

Definitions

The following definitions are for standardized versions of the families, which can be expanded to the full form as a location-scale family. Each is defined using either the cumulative distribution function (F) or the probability d ...
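The following Python sketch illustrates the idea of a standardized family expanded by location and scale, using the Type I form. It assumes the form commonly listed as Type I, with CDF F(x; α) = (1 + e^(−x))^(−α) and shape α > 0. That formula is not reproduced in the text above. The parameters `loc` and `scale` and all function names are hypothetical. With α = 1 the form reduces to the standard logistic distribution.

```python
import math

def softplus(t: float) -> float:
    """Numerically stable log(1 + e^t)."""
    return max(t, 0.0) + math.log1p(math.exp(-abs(t)))

def _check(alpha: float, scale: float) -> None:
    if alpha <= 0 or scale <= 0:
        raise ValueError("alpha and scale must be positive")

def type1_cdf(x: float, alpha: float, loc: float = 0.0, scale: float = 1.0) -> float:
    """Assumed Type I CDF F(z) = (1 + e^{-z})^{-alpha}, with z = (x - loc) / scale."""
    _check(alpha, scale)
    z = (x - loc) / scale
    # (1 + e^{-z})^{-alpha} = exp(-alpha * log(1 + e^{-z})), computed without overflow
    return math.exp(-alpha * softplus(-z))

def type1_pdf(x: float, alpha: float, loc: float = 0.0, scale: float = 1.0) -> float:
    """Density alpha * e^{-z} / (1 + e^{-z})^{alpha + 1} / scale, evaluated in log space."""
    _check(alpha, scale)
    z = (x - loc) / scale
    log_f = math.log(alpha) - z - (alpha + 1.0) * softplus(-z) - math.log(scale)
    return math.exp(log_f)

def type1_quantile(u: float, alpha: float, loc: float = 0.0, scale: float = 1.0) -> float:
    """Inverse CDF: solve u = (1 + e^{-z})^{-alpha} for z, then undo the standardization."""
    _check(alpha, scale)
    if not 0.0 < u < 1.0:
        raise ValueError("u must lie strictly between 0 and 1")
    z = -math.log(u ** (-1.0 / alpha) - 1.0)
    return loc + scale * z
```

The density f(z) is symmetric only when α = 1. Any other value of α makes it asymmetric, which fits the alternative name skew-logistic. Because the quantile function has a closed form, `type1_quantile` can turn uniform random numbers into Type I draws.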

Probability Distributions

In probability theory and statistics, a probability distribution is the mathematical function that gives the probabilities of occurrence of the different possible outcomes of an experiment. It is a mathematical description of a random phenomenon in terms of its sample space and the probabilities of events (subsets of the sample space). For instance, suppose $X$ denotes the outcome of a coin toss ("the experiment"). The probability distribution of $X$ then takes the value 0.5 (1 in 2, or 1/2) for $X = \text{heads}$ and 0.5 for $X = \text{tails}$, assuming that the coin is fair. Examples of random phenomena include the weather conditions at some future date, the height of a randomly selected person, the fraction of male students in a school, and the results of a survey to be conducted.

Introduction

A probability distribution is a mathematical description of the probabilit ...
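The following Python sketch makes the coin-toss example concrete. It stores the distribution of X as a mapping from each outcome to its probability, then compares that distribution with simulated tosses. The names `fair_coin`, `is_valid_distribution` and `relative_frequencies` are illustrative and do not come from any library.

```python
import random
from collections import Counter

# Distribution of X, the outcome of one toss of a fair coin:
# each possible outcome is mapped to its probability.
fair_coin = {"heads": 0.5, "tails": 0.5}

def is_valid_distribution(dist: dict[str, float]) -> bool:
    """Probabilities must be non-negative and sum to 1."""
    return all(p >= 0 for p in dist.values()) and abs(sum(dist.values()) - 1.0) < 1e-12

def relative_frequencies(dist: dict[str, float], n: int, seed: int = 0) -> dict[str, float]:
    """Simulate n independent draws from dist and return each outcome's observed share."""
    rng = random.Random(seed)
    outcomes = list(dist)
    draws = rng.choices(outcomes, weights=[dist[o] for o in outcomes], k=n)
    counts = Counter(draws)
    return {o: counts[o] / n for o in outcomes}
```

With many tosses, the observed share of heads settles near the probability 0.5 that the distribution assigns to it.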

Type I

Type 1 or Type I or *variant* may refer to:

Health
* Diabetes mellitus type 1 (also known as "Type 1 Diabetes"), insulin-dependent diabetes
* Type I female genital mutilation
* Type 1 personality
* Type I hypersensitivity (or immediate hypersensitivity), an allergic reaction

Vehicles
* Type 1 diesel locomotives

Civilian automotive
* US F1 Type 1, 2010 F1 car
* Bugatti Type 1, an automobile
* Peugeot Type 1, 1890s vis-a-vis
* Volkswagen Type 1, an automobile commonly known as the Volkswagen Beetle

Military
* German Type I submarine
* Type 001 aircraft carrier, PLAN carrier class variant of the Soviet Kuznetsov class
* Nakajima Ki-43, officially designated Army Type 1 Fighter

Japanese armoured vehicles of World War II
* Type 1 Chi-He, a tank
* Type 1 Ho-Ni I, a tank
* Type 1 Ho-Ha, an armoured personnel carrier
* Type 1 Ho-Ki, an armoured personnel carrier

Weapons
* Type 1 37 mm Anti-Tank Gun, a Japanese weapon
* Type 1 47 mm Anti-Tank Gun, a Japanese weapon
* Type 01 LMAT, a Japanese fire- ...

Shifted Log-logistic Distribution

The shifted log-logistic distribution is a probability distribution also known as the generalized log-logistic or the three-parameter log-logistic distribution. It has also been called the generalized logistic distribution, but this conflicts with other uses of the term: see generalized logistic distribution.

Definition

The shifted log-logistic distribution can be obtained from the log-logistic distribution by adding a shift parameter $\delta$. Thus if $X$ has a log-logistic distribution, then $X+\delta$ has a shifted log-logistic distribution. Equivalently, $Y$ has a shifted log-logistic distribution if $\log(Y-\delta)$ has a logistic distribution. The shift parameter adds a location parameter to the scale and shape parameters of the (unshifted) log-logistic. The properties of this distribution are straightforward to derive from those of the log-logistic distribution. However, an alternative parameterisation, similar to that used for the generalized Pareto distribution and the generalized ex ...
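The definition "Y is shifted log-logistic when log(Y − δ) is logistic" can be checked numerically. The sketch below assumes the common scale/shape parameterization of the log-logistic CDF, F(x; α, β) = 1/(1 + (x/α)^(−β)) for x > 0. It also assumes that log(Y − δ) is logistic with location μ = ln α and scale s = 1/β. The function names are hypothetical. Computing P(Y ≤ y) both ways gives the same number:

```python
import math

def logistic_cdf(z: float, mu: float, s: float) -> float:
    """CDF of the logistic distribution with location mu and scale s."""
    return 1.0 / (1.0 + math.exp(-(z - mu) / s))

def shifted_loglogistic_cdf(y: float, alpha: float, beta: float, delta: float) -> float:
    """Closed form: the log-logistic CDF evaluated at y - delta (zero at or below the shift)."""
    if y <= delta:
        return 0.0
    return 1.0 / (1.0 + ((y - delta) / alpha) ** (-beta))

def shifted_cdf_via_logistic(y: float, alpha: float, beta: float, delta: float) -> float:
    """Same probability computed as P(log(Y - delta) <= log(y - delta))."""
    if y <= delta:
        return 0.0
    return logistic_cdf(math.log(y - delta), mu=math.log(alpha), s=1.0 / beta)

for y in (2.1, 2.5, 3.5, 5.0, 20.0):
    print(y, shifted_loglogistic_cdf(y, 1.5, 3.0, 2.0), shifted_cdf_via_logistic(y, 1.5, 3.0, 2.0))
```

Under this parameterization the median sits at δ + α. The shift moves the support from (0, ∞) to (δ, ∞) and leaves the shape unchanged.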

Log-logistic Distribution

In probability and statistics, the log-logistic distribution (known as the Fisk distribution in economics) is a continuous probability distribution for a non-negative random variable. It is used in survival analysis as a parametric model for events whose rate increases initially and decreases later, for example the mortality rate from cancer following diagnosis or treatment. It has also been used in hydrology to model stream flow and precipitation, in economics as a simple model of the distribution of wealth or income, and in networking to model the transmission times of data considering both the network and the software. The log-logistic distribution is the probability distribution of a random variable whose logarithm has a logistic distribution. It is similar in shape to the log-normal distribution but has heavier tails. Unlike the log-normal, its cumulative distribution function can be written in closed form.

Characterization

There are several different parameteriza ...
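The survival-analysis use depends on the shape of the hazard, the instantaneous event rate f/(1 − F). The sketch below assumes the usual scale α > 0 and shape β > 0 parameterization, under which the closed-form CDF is F(x) = 1/(1 + (x/α)^(−β)). The hazard then simplifies to h(x) = (β/α)(x/α)^(β−1)/(1 + (x/α)^β). For β > 1 the hazard rises from zero, peaks, and then falls, which matches the "increases initially and decreases later" description above. The helper names are illustrative.

```python
def loglogistic_cdf(x: float, alpha: float, beta: float) -> float:
    """Closed-form CDF 1 / (1 + (x/alpha)^(-beta)) for x > 0; 0 otherwise."""
    if x <= 0:
        return 0.0
    return 1.0 / (1.0 + (x / alpha) ** (-beta))

def loglogistic_pdf(x: float, alpha: float, beta: float) -> float:
    """Density (beta/alpha)(x/alpha)^(beta-1) / (1 + (x/alpha)^beta)^2 for x > 0."""
    if x <= 0:
        return 0.0
    r = x / alpha
    return (beta / alpha) * r ** (beta - 1.0) / (1.0 + r ** beta) ** 2

def loglogistic_quantile(u: float, alpha: float, beta: float) -> float:
    """Closed-form inverse of the CDF: x = alpha * (u / (1 - u))^(1/beta)."""
    if not 0.0 < u < 1.0:
        raise ValueError("u must lie strictly between 0 and 1")
    return alpha * (u / (1.0 - u)) ** (1.0 / beta)

def loglogistic_hazard(x: float, alpha: float, beta: float) -> float:
    """Hazard f(x) / (1 - F(x)), simplified algebraically; defined here for x > 0."""
    if x <= 0:
        raise ValueError("x must be positive")
    r = x / alpha
    return (beta / alpha) * r ** (beta - 1.0) / (1.0 + r ** beta)

def hazard_peak(alpha: float, beta: float) -> float:
    """For beta > 1 the hazard is largest at x = alpha * (beta - 1)^(1/beta)."""
    if beta <= 1:
        raise ValueError("for beta <= 1 the hazard is monotonically decreasing")
    return alpha * (beta - 1.0) ** (1.0 / beta)
```

Setting the derivative of h(x) to zero gives the peak location used in `hazard_peak`. For β ≤ 1 the hazard only decreases.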

Metalog Distribution

The metalog distribution is a flexible continuous probability distribution designed for ease of use in practice. Together with its transforms, the metalog family of continuous distributions is unique because it embodies *all* of the following properties: virtually unlimited shape flexibility; a choice among unbounded, semi-bounded, and bounded distributions; ease of fitting to data with linear least squares; simple, closed-form quantile function (inverse CDF) equations that facilitate simulation; a simple, closed-form PDF; and Bayesian updating in closed form in light of new data. Moreover, like a Taylor series, metalog distributions may have any number of terms, depending on the degree of shape flexibility desired and other application needs. Applications where metalog distributions can be useful typically involve fitting empirical data, simulated data, or expert-elicited quantiles to smooth, continuous probability distributions. Fields of application are wide-ranging, and include ...
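The "linear least squares" property holds because a metalog quantile function is linear in its coefficients. The sketch below assumes the first four terms of the unbounded metalog basis, in the order 1, L, (y − 0.5)L and (y − 0.5), where L = ln(y/(1 − y)). It handles at most those four terms. It solves the normal equations with a small Gaussian elimination so that it needs only the standard library. It also skips the feasibility check (that the fitted quantile function is increasing), which a real implementation would need. All names are hypothetical.

```python
import math

def metalog_basis(y: float, k: int) -> list[float]:
    """First k terms of the (assumed) unbounded metalog basis at probability y."""
    if not 0.0 < y < 1.0:
        raise ValueError("y must lie strictly between 0 and 1")
    if not 1 <= k <= 4:
        raise ValueError("this sketch supports 1 to 4 terms")
    logit = math.log(y / (1.0 - y))
    c = y - 0.5
    return [1.0, logit, c * logit, c][:k]

def solve(A: list[list[float]], b: list[float]) -> list[float]:
    """Solve the square system A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for j in range(col, n + 1):
                M[r][j] -= factor * M[col][j]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (M[r][n] - sum(M[r][j] * x[j] for j in range(r + 1, n))) / M[r][r]
    return x

def fit_metalog(xs: list[float], ys: list[float], k: int) -> list[float]:
    """Coefficients a minimizing sum_i (x_i - sum_j a_j * basis_j(y_i))^2."""
    if len(xs) != len(ys) or len(xs) < k:
        raise ValueError("need matching xs/ys with at least k points")
    rows = [metalog_basis(y, k) for y in ys]
    # Normal equations: (Y^T Y) a = Y^T x
    yty = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    ytx = [sum(r[i] * x for r, x in zip(rows, xs)) for i in range(k)]
    return solve(yty, ytx)

def metalog_quantile(y: float, a: list[float]) -> float:
    """Closed-form quantile function x = sum_j a_j * basis_j(y)."""
    return sum(aj * bj for aj, bj in zip(a, metalog_basis(y, len(a))))
```

With k = 2 the basis is just 1 and ln(y/(1 − y)), so the fitted quantile function is exactly the logistic quantile function μ + s ln(y/(1 − y)). The additional terms bend the quantile function away from that logistic shape.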

Cumulative Distribution Function

In probability theory and statistics, the cumulative distribution function (CDF) of a real-valued random variable $X$, or just the distribution function of $X$, evaluated at $x$, is the probability that $X$ will take a value less than or equal to $x$. Every probability distribution supported on the real numbers, discrete or "mixed" as well as continuous, is uniquely identified by a right-continuous, monotonically non-decreasing cumulative distribution function $F : \mathbb{R} \rightarrow [0,1]$ satisfying $\lim_{x\to-\infty}F(x)=0$ and $\lim_{x\to\infty}F(x)=1$. In the case of a scalar continuous distribution, it gives the area under the probability density function from minus infinity to $x$. Cumulative distribution functions are also used to specify the distribution of multivariate random variables.

Definition

The cumulative distribution function of a real-valued random variable $X$ is the function given by

$$F_X(x) = \operatorname{P}(X \le x),$$

where the right-hand side represents the probability that the random variable $X$ takes on a value less ...
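The empirical CDF of a finite sample assigns probability 1/n to each observation. It shows the defining properties of a CDF directly: F(x) is the probability of a value less than or equal to x, F is right-continuous, and F runs from 0 to 1. In the sketch below, `make_ecdf` is an illustrative name. The "≤" in the definition is why it uses `bisect_right`, which counts ties at x.

```python
from bisect import bisect_right

def make_ecdf(sample: list[float]):
    """Return F with F(x) = (number of observations <= x) / n."""
    data = sorted(sample)
    n = len(data)
    if n == 0:
        raise ValueError("sample must be non-empty")

    def F(x: float) -> float:
        # bisect_right counts observations <= x, so each jump is included
        # at its own point: F is right-continuous and non-decreasing.
        return bisect_right(data, x) / n

    return F

F = make_ecdf([3, 1, 2, 2])
print(F(0), F(1), F(1.999), F(2), F(3))
```

The jump at 2 has height 0.5 because two of the four observations equal 2. F(x) is 0 below the smallest observation and 1 from the largest onward.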

Probability Density Function

In probability theory, a probability density function (PDF), or density of a continuous random variable, is a function whose value at any given sample (or point) in the sample space (the set of possible values taken by the random variable) can be interpreted as providing a *relative likelihood* that the value of the random variable would be close to that sample. Probability density is probability per unit length. The *absolute likelihood* that a continuous random variable takes on any particular value is 0, since there is an infinite set of possible values to begin with. However, the values of the PDF at two different samples can be used to infer how much more likely it is, in any particular draw of the random variable, that the random variable would be close to one sample than to the other. In a more precise sense, the PDF is used to specify the probability of the random variable falling *within a particular range of values*, as opposed ...
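To show that probabilities come from integrating the density over a range of values, the sketch below uses the standard logistic distribution. This distribution is an assumption chosen to match the rest of this page. Its density is f(x) = e^(−x)/(1 + e^(−x))² and its CDF is F(x) = 1/(1 + e^(−x)). The sketch integrates f over [a, b] with Simpson's rule and compares the result with F(b) − F(a). It also shows that f(x)·h approximates the probability of a short interval of length h around x, which is the "probability per unit length" reading. The function names are illustrative.

```python
import math

def logistic_pdf(x: float) -> float:
    """Standard logistic density e^{-x} / (1 + e^{-x})^2, evaluated via |x| since it is symmetric."""
    e = math.exp(-abs(x))
    return e / (1.0 + e) ** 2

def logistic_cdf(x: float) -> float:
    """Standard logistic CDF 1 / (1 + e^{-x}), written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def simpson(f, a: float, b: float, n: int = 1000) -> float:
    """Composite Simpson's rule for the integral of f over [a, b] (n rounded up to even)."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3

# Probability of falling in [-1, 2]: integral of the density vs. difference of CDF values.
print(simpson(logistic_pdf, -1.0, 2.0), logistic_cdf(2.0) - logistic_cdf(-1.0))
```

For a short interval, the density times the interval length approximates the probability. The ratio f(x₁)/f(x₂) therefore compares how likely draws are to land near x₁ versus near x₂.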

Beta Function

In mathematics, the beta function, also called the Euler integral of the first kind, is a special function that is closely related to the gamma function and to binomial coefficients. It is defined by the integral

$$\mathrm{B}(z_1,z_2) = \int_0^1 t^{z_1-1}(1-t)^{z_2-1}\,dt$$

for complex inputs $z_1, z_2$ such that $\Re(z_1), \Re(z_2)>0$. The beta function was studied by Leonhard Euler and Adrien-Marie Legendre and was given its name by Jacques Binet; its symbol $\mathrm{B}$ is a Greek capital beta.

Properties

The beta function is symmetric, meaning that $\mathrm{B}(z_1,z_2) = \mathrm{B}(z_2,z_1)$ for all inputs $z_1$ and $z_2$ (Davis 1972, 6.2.2, p. 258). A key property of the beta function is its close relationship to the gamma function:

$$\mathrm{B}(z_1,z_2)=\frac{\Gamma(z_1)\,\Gamma(z_2)}{\Gamma(z_1+z_2)}.$$

A proof is given below. The beta function is also closely related to binomial coefficients. When $m$ and $n$ are positive integers, it follows from the definition of the gamma function that (Davis 1972, 6.2.1, p. 258)

$$\mathrm{B}(m,n) = \frac{(m-1)!\,(n-1)!}{(m+n-1)!} = \ldots$$
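The three descriptions of the beta function given above can be cross-checked numerically for real arguments: the integral, the gamma-function identity, and the factorial form for positive integers. The sketch restricts the integral to z₁, z₂ ≥ 1, so that the integrand stays bounded at the endpoints and plain Simpson's rule applies. `math.gamma` and `math.factorial` are from the Python standard library. The function names are illustrative.

```python
import math

def beta_gamma(a: float, b: float) -> float:
    """B(a, b) via the gamma-function identity."""
    return math.gamma(a) * math.gamma(b) / math.gamma(a + b)

def beta_integral(a: float, b: float, n: int = 2000) -> float:
    """B(a, b) as the integral of t^(a-1) (1-t)^(b-1) over [0, 1], by Simpson's rule (n even)."""
    if a < 1 or b < 1:
        raise ValueError("this sketch needs a, b >= 1 so the integrand is bounded")
    f = lambda t: t ** (a - 1) * (1 - t) ** (b - 1)
    h = 1.0 / n
    total = f(0.0) + f(1.0)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(i * h)
    return total * h / 3

def beta_factorial(m: int, n: int) -> float:
    """B(m, n) = (m-1)! (n-1)! / (m+n-1)! for positive integers m, n."""
    return math.factorial(m - 1) * math.factorial(n - 1) / math.factorial(m + n - 1)

print(beta_factorial(2, 3), beta_gamma(2, 3), beta_integral(2, 3))
```

All three routes give 1/12 for (2, 3). Swapping the arguments of `beta_gamma` leaves the result unchanged, which reflects the symmetry property.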

Moment Generating Function

In probability theory and statistics, the moment-generating function of a real-valued random variable is an alternative specification of its probability distribution. Thus, it provides the basis of an alternative route to analytical results compared with working directly with probability density functions or cumulative distribution functions. There are particularly simple results for the moment-generating functions of distributions defined by the weighted sums of random variables. However, not all random variables have moment-generating functions. As its name implies, the moment-generating function can be used to compute a distribution's moments: the *n*th moment about 0 is the *n*th derivative of the moment-generating function, evaluated at 0. In addition to real-valued distributions (univariate distributions), moment-generating functions can be defined for vector- or matrix-valued random variables, and can even be extended to more general cases. The moment-generating fun ...
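The following sketch illustrates "the nth moment about 0 is the nth derivative at 0" using the standard logistic distribution. Its moment-generating function is M(t) = B(1 − t, 1 + t) = Γ(1 − t)Γ(1 + t). M(t) is defined only for |t| < 1, so it exists only in a neighbourhood of 0. Central finite differences stand in for exact differentiation, so the moments are approximate. The step size h is an arbitrary choice, and the function names are illustrative. The exact values are a mean of 0 and a second moment of π²/3.

```python
import math

def logistic_mgf(t: float) -> float:
    """MGF of the standard logistic distribution, Gamma(1 - t) * Gamma(1 + t), for |t| < 1."""
    if abs(t) >= 1:
        raise ValueError("the logistic MGF exists only for |t| < 1")
    return math.gamma(1 - t) * math.gamma(1 + t)

def moment_from_mgf(mgf, order: int, h: float = 1e-3) -> float:
    """Approximate E[X^order] as the order-th derivative of mgf at 0 (orders 1 and 2 only)."""
    if order == 1:
        return (mgf(h) - mgf(-h)) / (2 * h)  # central first difference
    if order == 2:
        return (mgf(h) - 2 * mgf(0.0) + mgf(-h)) / h ** 2  # central second difference
    raise ValueError("this sketch only handles the first two moments")

print(moment_from_mgf(logistic_mgf, 1), moment_from_mgf(logistic_mgf, 2), math.pi ** 2 / 3)
```

Because the mean is 0, the second moment equals the variance. A smaller h reduces the truncation error until floating-point cancellation starts to dominate.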

Champernowne Distribution

In statistics, the Champernowne distribution is a symmetric, continuous probability distribution, describing random variables that take both positive and negative values. It is a generalization of the logistic distribution that was introduced by D. G. Champernowne. Champernowne developed the distribution to describe the logarithm of income.

Definition

The Champernowne distribution has a probability density function given by

$$f(y;\alpha, \lambda, y_0) = \frac{n}{\cosh[\alpha(y - y_0)] + \lambda}, \qquad -\infty < y < \infty,$$

where $n$ is a normalizing constant that depends on the parameters and $\alpha, \lambda, y_0 > 0$.

See also: Generalized logistic distribution
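The following sketch shows how the Champernowne distribution generalizes the logistic. It assumes the density f(y) = n / (cosh[α(y − y₀)] + λ), where n is the normalizing constant. The constant n can be found numerically. Setting λ = 1 gives a logistic density, because cosh(u) + 1 = 2cosh²(u/2). In that case n = α/2, and the density equals the logistic density with location y₀ and scale 1/α. The sketch checks this with Simpson's rule over the finite range y₀ ± 40/α. The tails beyond that range decay like e^(−α|y − y₀|), so cutting them off has a negligible effect. The function names are illustrative.

```python
import math

def champernowne_unnormalized(y: float, alpha: float, lam: float, y0: float) -> float:
    """Assumed Champernowne kernel 1 / (cosh[alpha (y - y0)] + lam), without the constant n."""
    return 1.0 / (math.cosh(alpha * (y - y0)) + lam)

def normalizing_constant(alpha: float, lam: float, y0: float, n_steps: int = 4000) -> float:
    """Numerical n such that n * kernel integrates to 1, via composite Simpson's rule (n_steps even)."""
    half_width = 40.0 / alpha  # mass beyond this range is negligible
    a, b = y0 - half_width, y0 + half_width
    h = (b - a) / n_steps
    total = champernowne_unnormalized(a, alpha, lam, y0) + champernowne_unnormalized(b, alpha, lam, y0)
    for i in range(1, n_steps):
        total += (4 if i % 2 else 2) * champernowne_unnormalized(a + i * h, alpha, lam, y0)
    return 1.0 / (total * h / 3)

def logistic_pdf(y: float, loc: float, scale: float) -> float:
    """Logistic density with the given location and scale."""
    e = math.exp(-abs((y - loc) / scale))
    return e / (scale * (1.0 + e) ** 2)

alpha, y0 = 1.5, 2.0
n = normalizing_constant(alpha, 1.0, y0)
print(n, alpha / 2)
```

For λ ≠ 1 the same numerical normalization applies. In that case the density is no longer a logistic density but remains symmetric about y₀.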