
Forward Algorithm

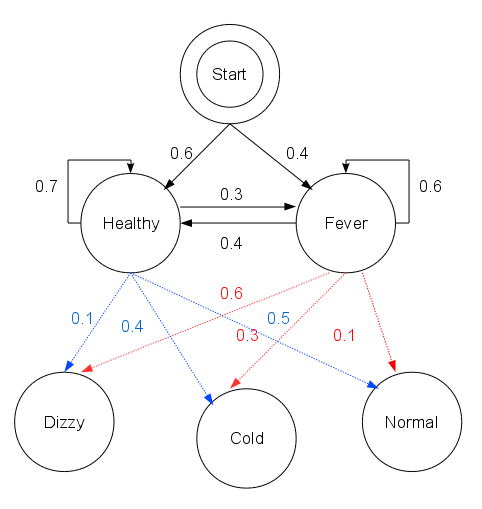

The forward algorithm, in the context of a hidden Markov model (HMM), is used to calculate a "belief state": the probability of a state at a certain time, given the history of evidence. The process is also known as filtering. The forward algorithm is closely related to, but distinct from, the Viterbi algorithm. The forward and backward algorithms should be placed within the context of probability, as they appear to be simply names given to a set of standard mathematical procedures used in a few fields. For example, neither "forward algorithm" nor "Viterbi" appears in the Cambridge encyclopedia of mathematics. The main observation to take away from these algorithms is how to organize Bayesian updates and inference so that they are efficient on directed graphs of variables (see sum-product networks). For an HMM such as the one shown above, this probability is written as p(x_t \mid y_{1:t}). Here x(t) is the hidden state, abbreviated as x_t, and y_{1:t} are the observations 1 to t. The b ...
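
The filtering recursion described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: it assumes a discrete HMM given as plain lists, the names `forward_filter`, `init`, `trans` and `emit` are hypothetical, and the numbers are made up. It normalizes at every step, so each entry is the belief state p(x_t \mid y_{1:t}) directly.

```python
def forward_filter(init, trans, emit, observations):
    """Return the belief state p(x_t | y_{1:t}) for every time step t.

    init[i]     : p(x_1 = i)
    trans[i][j] : p(x_{t+1} = j | x_t = i)
    emit[i][k]  : p(y_t = k | x_t = i)
    """
    n = len(init)
    beliefs = []
    prior = list(init)  # predicted distribution over x_t before seeing y_t
    for y in observations:
        # Update: weight the prediction by the likelihood of the observation.
        unnorm = [prior[i] * emit[i][y] for i in range(n)]
        total = sum(unnorm)  # assumed non-zero, i.e. y is possible under the model
        belief = [u / total for u in unnorm]
        beliefs.append(belief)
        # Predict: push the belief through the transition model.
        prior = [sum(belief[i] * trans[i][j] for i in range(n)) for j in range(n)]
    return beliefs


# Hypothetical two-state, two-symbol model (all numbers are illustrative).
init = [0.5, 0.5]
trans = [[0.7, 0.3], [0.3, 0.7]]
emit = [[0.9, 0.1], [0.2, 0.8]]
beliefs = forward_filter(init, trans, emit, [0, 0, 1])
```

Each step costs on the order of n^2 operations for n hidden states, which is what makes the recursion efficient compared with summing over all state sequences.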

Hidden Markov Model

A hidden Markov model (HMM) is a statistical Markov model in which the system being modeled is assumed to be a Markov process, call it X, with unobservable ("hidden") states. As part of the definition, an HMM requires that there be an observable process Y whose outcomes are "influenced" by the outcomes of X in a known way. Since X cannot be observed directly, the goal is to learn about X by observing Y. An HMM has the additional requirement that the outcome of Y at time t=t_0 must be "influenced" exclusively by the outcome of X at t=t_0, and that the outcomes of X and Y at t<t_0 must be conditionally independent of Y at t=t_0 given X at t=t_0. [...] Applications include handwriting recognition, gesture recognition, part-of-speech tagging, musical score following, partial discharges and bioinformatics. Definition: Let X_n and Y_n be discrete-time stochastic processes and n \geq 1. The pair (X_n, Y_n) is a hidden Markov model if * X_n is a Markov process whose behavior is not directly observable ("hidden"); * \operatorname\bigl(Y_n \i ...
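
To make the two requirements concrete, here is a small sketch that samples from a discrete HMM: the hidden state X evolves as a Markov chain, and each observation Y is drawn using only the current hidden state. The function name `sample_hmm`, the parameter layout and all probabilities are assumptions chosen for illustration.

```python
import random


def sample_hmm(init, trans, emit, length, rng):
    """Draw (hidden_states, observations) of the given length from a discrete HMM."""

    def draw(probs):
        # Pick an index according to the given probability vector.
        return rng.choices(range(len(probs)), weights=probs)[0]

    states, observations = [], []
    x = draw(init)
    for _ in range(length):
        states.append(x)
        observations.append(draw(emit[x]))  # Y_t depends only on X_t
        x = draw(trans[x])                  # X_{t+1} depends only on X_t
    return states, observations


rng = random.Random(0)  # seeded so that runs are repeatable
states, observations = sample_hmm(
    init=[0.5, 0.5],
    trans=[[0.9, 0.1], [0.2, 0.8]],
    emit=[[0.8, 0.2], [0.3, 0.7]],
    length=10,
    rng=rng,
)
```

In an inference setting only `observations` would be available; `states` is what filtering or decoding tries to recover.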

Viterbi Algorithm

The Viterbi algorithm is a dynamic programming algorithm for obtaining the maximum a posteriori probability estimate of the most likely sequence of hidden states (called the Viterbi path) that results in a sequence of observed events, especially in the context of Markov information sources and hidden Markov models (HMM). The algorithm has found universal application in decoding the convolutional codes used in both CDMA and GSM digital cellular, dial-up modems, satellite, deep-space communications, and 802.11 wireless LANs. It is now also commonly used in speech recognition, speech synthesis, diarization, keyword spotting, computational linguistics, and bioinformatics. For example, in speech-to-text (speech recognition), the acoustic signal is treated as the observed sequence of events, and a string of text is considered to be the "hidden cause" of the acoustic signal. The Viterbi algorithm finds the most likely string of text given the acoustic signal. History: The Viterbi a ...
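
The dynamic program can be sketched as follows for a discrete HMM. This is an illustrative version under stated assumptions: the names `viterbi`, `init`, `trans` and `emit` are hypothetical, the observation sequence is assumed non-empty, and raw probabilities are multiplied for readability (practical implementations usually work with log probabilities to avoid underflow on long sequences).

```python
def viterbi(init, trans, emit, observations):
    """Return (most likely hidden state path, joint probability of that path)."""
    n = len(init)
    # best[i]: probability of the most likely path that ends in state i.
    best = [init[i] * emit[i][observations[0]] for i in range(n)]
    backpointers = []
    for y in observations[1:]:
        step_ptr, step_best = [], []
        for j in range(n):
            # Best predecessor of state j at this step.
            prev = max(range(n), key=lambda i: best[i] * trans[i][j])
            step_ptr.append(prev)
            step_best.append(best[prev] * trans[prev][j] * emit[j][y])
        backpointers.append(step_ptr)
        best = step_best
    # Trace the stored predecessors back from the best final state.
    last = max(range(n), key=lambda i: best[i])
    path = [last]
    for ptr in reversed(backpointers):
        path.append(ptr[path[-1]])
    path.reverse()
    return path, best[last]


# Hypothetical two-state model (numbers are illustrative only).
path, prob = viterbi(
    init=[0.5, 0.5],
    trans=[[0.7, 0.3], [0.3, 0.7]],
    emit=[[0.9, 0.1], [0.2, 0.8]],
    observations=[0, 0, 1],
)
print(path)  # [0, 0, 1]
```

The structure mirrors the forward recursion, with the sum over predecessor states replaced by a maximum plus a backpointer.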

Belief Propagation

A belief is an attitude that something is the case, or that some proposition is true. In epistemology, philosophers use the term "belief" to refer to attitudes about the world which can be either true or false. To believe something is to take it to be true; for instance, to believe that snow is white is comparable to accepting the truth of the proposition "snow is white". However, holding a belief does not require active introspection. For example, few carefully consider whether or not the sun will rise tomorrow, simply assuming that it will. Moreover, beliefs need not be occurrent (e.g. a person actively thinking "snow is white"), but can instead be dispositional (e.g. a person who if asked about the color of snow would assert "snow is white"). There are various different ways that contemporary philosophers have tried to describe beliefs, including as representations of ways that the world could be (Jerry Fodor), as dispositions to act as if certain things are true (Rod ...

Lawrence Rabiner

Lawrence R. Rabiner (born 28 September 1943) is an electrical engineer working in the fields of digital signal processing and speech processing, in particular digital signal processing for automatic speech recognition. He has worked on systems for AT&T Corporation for speech recognition. He holds a joint academic appointment between Rutgers University and the University of California, Santa Barbara. Education: B.S. in electrical engineering, Massachusetts Institute of Technology, 1964; M.S. in electrical engineering, Massachusetts Institute of Technology, 1964; Ph.D. in electrical engineering, Massachusetts Institute of Technology, 1967. Research interests: digital signal processing; speech processing; multimodal user interfaces; multimedia communications; shared collaboration systems for tele-collaboration. Life: Rabiner was born in Brooklyn, New York, in 1943. During his studies at MIT, he participated in the cooperative program at AT&T Bell Laboratories, during w ...

IEEE

The Institute of Electrical and Electronics Engineers (IEEE) is a 501(c)(3) professional association for electronic engineering and electrical engineering (and associated disciplines) with its corporate office in New York City and its operations center in Piscataway, New Jersey. The mission of the IEEE is "advancing technology for the benefit of humanity". The IEEE was formed from the amalgamation of the American Institute of Electrical Engineers and the Institute of Radio Engineers in 1963. Because its scope has expanded into so many related fields, it is simply referred to by the letters I-E-E-E (pronounced I-triple-E), except on legal business documents. It is the world's largest association of technical professionals, with more than 423,000 members in over 160 countries. Its objectives are the educational and technical advancement of electrical and electronic engineering, telecommunications, computer engineering and similar disciplines. History Origin ...

Computational Biology

Computational biology refers to the use of data analysis, mathematical modeling and computational simulations to understand biological systems and relationships. An intersection of computer science, biology, and big data, the field also has foundations in applied mathematics, chemistry, and genetics. It differs from biological computing, a subfield of computer engineering which uses bioengineering to build computers. History: Bioinformatics, the analysis of informatics processes in biological systems, began in the early 1970s. At this time, research in artificial intelligence was using network models of the human brain in order to generate new algorithms. This use of biological data pushed biological researchers to use computers to evaluate and compare large data sets in their own field. By 1982, researchers shared information via punch cards. The amount of data grew exponentially by the end of the 1980s, requiring new computational methods for quickly interpreting ...

Joint Probability Distribution

Given two random variables that are defined on the same probability space, the joint probability distribution is the corresponding probability distribution on all possible pairs of outputs. The joint distribution can just as well be considered for any given number of random variables. The joint distribution encodes the marginal distributions, i.e. the distributions of each of the individual random variables. It also encodes the conditional probability distributions, which deal with how the outputs of one random variable are distributed when given information on the outputs of the other random variable(s). In the formal mathematical setup of measure theory, the joint distribution is given by the pushforward measure, by the map obtained by pairing together the given random variables, of the sample space's probability measure. In the case of real-valued random variables, the joint distribution, as a particular multivariate distribution, may be expressed by a multivariate cumulativ ...
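
As a small illustration of how a joint distribution encodes a conditional one, the sketch below stores a joint table for two binary variables and recovers p(X \mid Y = y) by renormalizing one slice. The table values and the helper name `conditional_x_given_y` are hypothetical.

```python
from fractions import Fraction

# Hypothetical joint distribution p(X = x, Y = y), keyed by (x, y).
joint = {
    (0, 0): Fraction(1, 8), (0, 1): Fraction(3, 8),
    (1, 0): Fraction(1, 4), (1, 1): Fraction(1, 4),
}


def conditional_x_given_y(joint, y):
    """Return p(X | Y = y) by renormalizing the matching slice of the joint table."""
    slice_y = {x: p for (x, yy), p in joint.items() if yy == y}
    total = sum(slice_y.values())  # this total is the marginal p(Y = y)
    return {x: p / total for x, p in slice_y.items()}


cond = conditional_x_given_y(joint, 1)
print(cond[0], cond[1])  # 3/5 2/5
```

Exact fractions are used only so that the result can be checked by hand; floating-point tables work the same way.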

Marginal Distribution

In probability theory and statistics, the marginal distribution of a subset of a collection of random variables is the probability distribution of the variables contained in the subset. It gives the probabilities of various values of the variables in the subset without reference to the values of the other variables. This contrasts with a conditional distribution, which gives the probabilities contingent upon the values of the other variables. Marginal variables are those variables in the subset of variables being retained. These concepts are "marginal" because they can be found by summing values in a table along rows or columns, and writing the sum in the margins of the table. The distribution of the marginal variables (the marginal distribution) is obtained by marginalizing (that is, focusing on the sums in the margin) over the distribution of the variables being discarded, and the discarded variables are said to have been marginalized out. The context here is that the theoretical ...
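
The "sums in the margins" picture translates directly into code. In this sketch the joint table is a hypothetical 2-by-3 grid (rows index X, columns index Y, values made up); summing each row marginalizes out Y, and summing each column marginalizes out X.

```python
# Hypothetical joint table p(X = row, Y = column); the entries sum to 1.
table = [
    [0.10, 0.20, 0.10],
    [0.30, 0.05, 0.25],
]

# Row sums: the marginal distribution of X (Y has been marginalized out).
row_margins = [sum(row) for row in table]

# Column sums: the marginal distribution of Y (X has been marginalized out).
col_margins = [sum(col) for col in zip(*table)]
```

Up to floating-point rounding, `row_margins` is 0.4 and 0.6, and `col_margins` is 0.4, 0.25 and 0.35.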

Conditional Independence

In probability theory, conditional independence describes situations wherein an observation is irrelevant or redundant when evaluating the certainty of a hypothesis. Conditional independence is usually formulated in terms of conditional probability, as a special case where the probability of the hypothesis given the uninformative observation is equal to the probability without. If A is the hypothesis, and B and C are observations, conditional independence can be stated as an equality: P(A \mid B, C) = P(A \mid C), where P(A \mid B, C) is the probability of A given both B and C. Since the probability of A given C is the same as the probability of A given both B and C, this equality expresses that B contributes nothing to the certainty of A. In this case, A and B are said to be conditionally independent given C, written symbolically as (A \perp\!\!\!\perp B \mid C). The concept of conditional independence is essential to graph-based theories of statistical inference, as it establ ...
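
The equality P(A \mid B, C) = P(A \mid C) can be checked numerically on a small example. The sketch below builds a joint distribution over three binary variables in which A and B each depend only on C, then verifies the equality for every combination of values. All probabilities and names (`p_c`, `p_a_given_c`, `p_b_given_c`, `prob`) are assumptions made for illustration.

```python
from fractions import Fraction as F
from itertools import product

# Hypothetical model: C is drawn first, then A and B are drawn separately given C.
p_c = {0: F(1, 3), 1: F(2, 3)}
p_a_given_c = {0: {0: F(1, 4), 1: F(3, 4)}, 1: {0: F(1, 2), 1: F(1, 2)}}    # [c][a]
p_b_given_c = {0: {0: F(2, 5), 1: F(3, 5)}, 1: {0: F(1, 10), 1: F(9, 10)}}  # [c][b]

# Joint table p(a, b, c) = p(c) * p(a | c) * p(b | c).
joint = {
    (a, b, c): p_c[c] * p_a_given_c[c][a] * p_b_given_c[c][b]
    for a, b, c in product((0, 1), repeat=3)
}


def prob(pred):
    """Total probability of all outcomes (a, b, c) that satisfy the predicate."""
    return sum(p for abc, p in joint.items() if pred(*abc))


def is_conditionally_independent():
    """Check P(A | B, C) == P(A | C) for every value of a, b and c."""
    for a, b, c in product((0, 1), repeat=3):
        lhs = prob(lambda x, y, z: (x, y, z) == (a, b, c)) / prob(lambda x, y, z: (y, z) == (b, c))
        rhs = prob(lambda x, y, z: (x, z) == (a, c)) / prob(lambda x, y, z: z == c)
        if lhs != rhs:
            return False
    return True


print(is_conditionally_independent())  # True
```

Exact fractions avoid rounding, so the comparison can use plain equality.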

Chain Rule (probability)

In probability theory, the chain rule (also called the general product rule) permits the calculation of any member of the joint distribution of a set of random variables using only conditional probabilities. The rule is useful in the study of Bayesian networks, which describe a probability distribution in terms of conditional probabilities. Chain rule for events. Two events: The chain rule for two random events A and B says P(A \cap B) = P(B \mid A) \cdot P(A). Example: This rule is illustrated in the following example. Urn 1 has 1 black ball and 2 white balls and Urn 2 has 1 black ball and 3 white balls. Suppose we pick an urn at random and then select a ball from that urn. Let event A be choosing the first urn: P(A) = P(\overline{A}) = 1/2. Let event B be the chance we choose a white ball. The chance of choosing a white ball, given that we have chosen the first urn, is P(B \mid A) = 2/3. Event A \cap B would be their intersection: choosing the first urn and a white ball from it. The pro ...
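
The urn example can be worked through with exact fractions. This short sketch only applies the two-event chain rule to the numbers stated in the text; the variable names are illustrative.

```python
from fractions import Fraction

p_a = Fraction(1, 2)          # P(A): the first urn is chosen
p_b_given_a = Fraction(2, 3)  # P(B | A): white ball, given urn 1 (2 of its 3 balls are white)

# Chain rule for two events: P(A and B) = P(B | A) * P(A).
p_a_and_b = p_b_given_a * p_a
print(p_a_and_b)  # 1/3
```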
