
Feed-forward Network

A feedforward neural network (FNN) is an artificial neural network wherein connections between the nodes do ''not'' form a cycle. As such, it is different from its descendant, the recurrent neural network. The feedforward neural network was the first and simplest type of artificial neural network devised. In this network, the information moves in only one direction, forward, from the input nodes, through the hidden nodes (if any), and to the output nodes. There are no cycles or loops in the network.

Single-layer perceptron

The simplest kind of neural network is a ''single-layer perceptron'' network, which consists of a single layer of output nodes; the inputs are fed directly to the outputs via a series of weights. The sum of the products of the weights and the inputs is calculated in each node, and if the value is above some threshold (typically 0) the neuron fires and takes the activated value (typically 1); otherwise it takes the deactivated value (typically -1). Ne ...
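The threshold rule described for a single-layer perceptron can be sketched in a few lines of Python. This is only an illustration: the function name `perceptron_output` and the hand-picked weights (chosen so the unit mimics logical AND, with a constant bias input of 1) are assumptions, not part of the source text.

```python
def perceptron_output(weights, inputs, threshold=0.0):
    """Return the activated value (+1) if the weighted sum of the inputs
    exceeds the threshold, otherwise the deactivated value (-1)."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return 1 if weighted_sum > threshold else -1


# Hypothetical weights chosen by hand: two input weights plus a bias weight.
# The last input is always 1 so that the bias weight shifts the threshold.
and_weights = [1.0, 1.0, -1.5]

for a in (0, 1):
    for b in (0, 1):
        print(a, b, perceptron_output(and_weights, [a, b, 1]))
```

With these weights only the input pair (1, 1) produces a weighted sum above 0, so only that pair fires.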

Feed Forward Neural Net

Feed or The Feed may refer to:

Animal foodstuffs
* Animal feed, food given to domestic animals in the course of animal husbandry
** Fodder, foodstuffs manufactured for animal consumption
** Forage, foodstuffs that animals gather themselves, such as by grazing
* Compound feed, foodstuffs that are blended from various raw materials and additives

Arts, entertainment, and media

Comedy
* A straight man who 'feeds' lines to the funny man in a comic dialogue

Film
* ''Feed'' (2005 film), a 2005 film directed by Brett Leonard
* ''Feed'' (2017 film), a 2017 film directed by Tommy Bertelsen

Literature
* ''Feed'' (Anderson novel), a 2002 novel by M. T. Anderson
* ''Feed'' (Grant novel), a 2010 novel by Seanan McGuire under the name "Mira Grant"

Music
* "Feed Us", 2007 song by Serj Tankian from ''Elect the Dead''
* "Feed", 2022 song by Demi Lovato from ''Holy Fvck''

Online media
* ''Feed Magazine'', one of the earliest e-zines that relied entirely on its original online content
* ...

Universal Approximation Theorem

In the mathematical theory of artificial neural networks, universal approximation theorems are results that establish the density of an algorithmically generated class of functions within a given function space of interest. Typically, these results concern the approximation capabilities of the feedforward architecture on the space of continuous functions between two Euclidean spaces, and the approximation is with respect to the compact convergence topology. However, there are also a variety of results between non-Euclidean spaces and other commonly used architectures and, more generally, algorithmically generated sets of functions, such as the convolutional neural network (CNN) architecture, radial basis-functions, or neural networks with specific properties. Most universal approximation theorems can be parsed into two classes. The first quantifies the approximation capabilities of neural networks with an arbitrary number of artificial neurons ("''arbitrary width''" case) and the ...

Vanishing Gradient Problem

In machine learning, the vanishing gradient problem is encountered when training artificial neural networks with gradient-based learning methods and backpropagation. In such methods, during each iteration of training each of the neural network's weights receives an update proportional to the partial derivative of the error function with respect to the current weight. The problem is that in some cases, the gradient will be vanishingly small, effectively preventing the weight from changing its value. In the worst case, this may completely stop the neural network from further training. As one example of the problem cause, traditional activation functions such as the hyperbolic tangent function have gradients in the range $(0, 1]$, and backpropagation computes gradients by the chain rule. This has the effect of multiplying $n$ of these small numbers to compute gradients of the early layers in an $n$-layer network, meaning that the gradient (error signal) decreases exponentially with $n$ while the ea ...
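The exponential shrinkage can be seen numerically with a deliberately simplified sketch. It multiplies only the local derivatives of tanh along a chain of layers and ignores the weight factors that a real network would also contribute; the function names and the fixed pre-activation of 1.0 at every layer are assumptions made for illustration.

```python
import math


def tanh_derivative(z):
    """Derivative of tanh at z: 1 - tanh(z)^2, which never exceeds 1."""
    return 1.0 - math.tanh(z) ** 2


def chained_gradient(pre_activations):
    """Multiply the local tanh derivatives along a chain of layers,
    as the chain rule does when propagating an error signal backwards."""
    grad = 1.0
    for z in pre_activations:
        grad *= tanh_derivative(z)
    return grad


# Hypothetical setup: every layer sees the same pre-activation value 1.0.
for n in (1, 5, 10, 20):
    print(n, chained_gradient([1.0] * n))
```

Each additional layer multiplies the signal by the same factor below 1, so the printed values fall off geometrically with depth.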

XOR Perceptron Net

Exclusive or, or exclusive disjunction, is a logical operation that is true if and only if its arguments differ (one is true, the other is false). It is symbolized by the prefix operator J and by the infix operators XOR, EOR, EXOR, ⊻, ⩒, ⩛, ⊕, ↮, and ≢. The negation of XOR is the logical biconditional, which yields true if and only if the two inputs are the same. It gains the name "exclusive or" because the meaning of "or" is ambiguous when both operands are true; the exclusive or operator ''excludes'' that case. This is sometimes thought of as "one or the other but not both". This could be written as "A or B, but not A and B". Since it is associative, it may be considered to be an ''n''-ary operator which is true if and only if an odd number of arguments are true. That is, ''a'' XOR ''b'' XOR ... may be treated as XOR(''a'',''b'',...).

Truth table

The truth table of A XOR B shows that it outputs true whenever the inputs differ:

Equivalences, elimination, and introduct ...
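The ''n''-ary reading of XOR, true exactly when an odd number of arguments are true, can be sketched by folding the binary operator over the arguments. The helper name `xor_all` is an assumption introduced for this example.

```python
from functools import reduce
from operator import xor


def xor_all(*args):
    """n-ary XOR: fold the binary operator over all arguments.
    The result is True exactly when an odd number of arguments are true."""
    return reduce(xor, (bool(a) for a in args), False)


# Two-input truth table: the output is True whenever the inputs differ.
for a in (False, True):
    for b in (False, True):
        print(a, b, xor_all(a, b))
```

Because XOR is associative, the order in which the fold combines the arguments does not affect the result.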

Modular Arithmetic

In mathematics, modular arithmetic is a system of arithmetic for integers, where numbers "wrap around" when reaching a certain value, called the modulus. The modern approach to modular arithmetic was developed by Carl Friedrich Gauss in his book ''Disquisitiones Arithmeticae'', published in 1801. A familiar use of modular arithmetic is in the 12-hour clock, in which the day is divided into two 12-hour periods. If the time is 7:00 now, then 8 hours later it will be 3:00. Simple addition would result in $7 + 8 = 15$, but clocks "wrap around" every 12 hours. Because the hour number starts over at zero when it reaches 12, this is arithmetic ''modulo'' 12. In terms of the definition below, 15 is ''congruent'' to 3 modulo 12, so "15:00" on a 24-hour clock is displayed "3:00" on a 12-hour clock.

Congruence

Given an integer $n \geq 1$, called a modulus, two integers $a$ and $b$ are said to be congruent modulo $n$ if $n$ is a divisor of their difference (that is, if there is an integer $k$ such that $a - b = kn$). Congruence modu ...
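The clock example and the divisibility definition of congruence can be checked directly. In this sketch the helper name `is_congruent` and the symbols `a`, `b`, `n` are labels chosen for the example.

```python
def is_congruent(a, b, n):
    """True if a and b are congruent modulo n, i.e. n divides a - b."""
    return (a - b) % n == 0


# 8 hours after 7:00 on a 12-hour clock: 7 + 8 = 15 wraps around to 3.
print((7 + 8) % 12)             # prints 3
print(is_congruent(15, 3, 12))  # prints True
```

Note that a 12-hour clock face displays 12 rather than 0, so a display routine would still need to map a remainder of 0 to 12.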

Chain Rule

In calculus, the chain rule is a formula that expresses the derivative of the composition of two differentiable functions $f$ and $g$ in terms of the derivatives of $f$ and $g$. More precisely, if $h = f \circ g$ is the function such that $h(x) = f(g(x))$ for every $x$, then the chain rule is, in Lagrange's notation,
:$h'(x) = f'(g(x)) g'(x)$
or, equivalently,
:$h' = (f \circ g)' = (f' \circ g) \cdot g'$.
The chain rule may also be expressed in Leibniz's notation. If a variable $z$ depends on the variable $y$, which itself depends on the variable $x$ (that is, $y$ and $z$ are dependent variables), then $z$ depends on $x$ as well, via the intermediate variable $y$. In this case, the chain rule is expressed as
:$\frac{dz}{dx} = \frac{dz}{dy} \cdot \frac{dy}{dx}$,
and
:$\left.\frac{dz}{dx}\right|_{x} = \left.\frac{dz}{dy}\right|_{y(x)} \cdot \left.\frac{dy}{dx}\right|_{x}$,
for indicating at which points the derivatives have to be evaluated. In integration, the counterpart to the chain rule is the substitution rule.

Intuitive explanation

Intuitively, the chain rule states that knowing ...
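As a quick numerical sanity check, the chain rule can be compared against a finite-difference estimate. The particular choice of an outer function sin and an inner function x squared is an assumption made purely for illustration, as are the helper names.

```python
import math


def f(u):
    return math.sin(u)      # outer function (illustrative choice)


def f_prime(u):
    return math.cos(u)


def g(x):
    return x * x            # inner function (illustrative choice)


def g_prime(x):
    return 2.0 * x


def h(x):
    return f(g(x))          # composition h = f o g


def h_prime(x):
    """Chain rule: derivative of f at g(x), times derivative of g at x."""
    return f_prime(g(x)) * g_prime(x)


def central_difference(func, x, eps=1e-6):
    """Numerical derivative estimate used only as an independent check."""
    return (func(x + eps) - func(x - eps)) / (2.0 * eps)


x = 1.3
print(h_prime(x), central_difference(h, x))
```

The two printed values should agree to several decimal places, which is what the chain rule predicts.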

Backpropagation

In machine learning, backpropagation (backprop, BP) is a widely used algorithm for training feedforward artificial neural networks. Generalizations of backpropagation exist for other artificial neural networks (ANNs), and for functions generally. These classes of algorithms are all referred to generically as "backpropagation". In fitting a neural network, backpropagation computes the gradient of the loss function with respect to the weights of the network for a single input–output example, and does so efficiently, unlike a naive direct computation of the gradient with respect to each weight individually. This efficiency makes it feasible to use gradient methods for training multilayer networks, updating weights to minimize loss; gradient descent, or variants such as stochastic gradient descent, are commonly used. The backpropagation algorithm works by computing the gradient of the loss function with respect to each weight by the chain rule, computing the gradient one laye ...
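The layer-by-layer use of the chain rule can be illustrated on a toy network. The sketch below assumes a scalar two-weight network with output w2 * tanh(w1 * x) and a squared-error loss for one input-output example; the architecture, the loss, and all names are assumptions chosen to keep the example small, not a description of any particular library.

```python
import math


def forward(w1, w2, x):
    """Forward pass, keeping the intermediate values needed by the backward pass."""
    z = w1 * x           # hidden pre-activation
    a = math.tanh(z)     # hidden activation
    y = w2 * a           # network output
    return z, a, y


def loss(w1, w2, x, t):
    """Squared-error loss for a single example (x, t)."""
    return 0.5 * (forward(w1, w2, x)[2] - t) ** 2


def backprop(w1, w2, x, t):
    """Gradient of the loss w.r.t. (w1, w2), computed from the output backwards."""
    z, a, y = forward(w1, w2, x)
    dL_dy = y - t                    # derivative of the loss at the output
    dL_dw2 = dL_dy * a               # output-layer weight
    dL_da = dL_dy * w2               # propagate to the hidden activation
    dL_dz = dL_da * (1.0 - a ** 2)   # through tanh: tanh'(z) = 1 - tanh(z)^2
    dL_dw1 = dL_dz * x               # hidden-layer weight
    return dL_dw1, dL_dw2


def numeric_gradient(w1, w2, x, t, eps=1e-6):
    """Finite-difference gradient, used only to check the backward pass."""
    g1 = (loss(w1 + eps, w2, x, t) - loss(w1 - eps, w2, x, t)) / (2 * eps)
    g2 = (loss(w1, w2 + eps, x, t) - loss(w1, w2 - eps, x, t)) / (2 * eps)
    return g1, g2


print(backprop(0.5, -1.2, 0.8, 0.3))
print(numeric_gradient(0.5, -1.2, 0.8, 0.3))
```

The backward pass reuses `dL_dy` for both weights instead of recomputing it, which is the source of the efficiency over differentiating each weight independently.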

Sigma

Sigma (uppercase Σ, lowercase σ, lowercase in word-final position ς; Greek: σίγμα) is the eighteenth letter of the Greek alphabet. In the system of Greek numerals, it has a value of 200. In general mathematics, uppercase Σ is used as an operator for summation. When used at the end of a letter-case word (one that does not use all caps), the final form (ς) is used. In Ὀδυσσεύς (Odysseus), for example, the two lowercase sigmas (σ) in the center of the name are distinct from the word-final sigma (ς) at the end. The Latin letter S derives from sigma while the Cyrillic letter Es derives from a lunate form of this letter.

History

The shape (Σς) and alphabetic position of sigma are derived from the Phoenician letter ''shin''. Sigma's original name may have been ''san'', but due to the complicated early history of the Greek epichoric alphabets, ''san'' came to be identified as a separate letter in the Greek alphabet, represented as Ϻ. Herodotus reports that "sa ...

Sigmoid Function

A sigmoid function is a mathematical function having a characteristic "S"-shaped curve or sigmoid curve. A common example of a sigmoid function is the logistic function shown in the first figure and defined by the formula:
:$S(x) = \frac{1}{1 + e^{-x}} = \frac{e^x}{e^x + 1} = 1 - S(-x)$.
Other standard sigmoid functions are given in the Examples section. In some fields, most notably in the context of artificial neural networks, the term "sigmoid function" is used as an alias for the logistic function. Special cases of the sigmoid function include the Gompertz curve (used in modeling systems that saturate at large values of x) and the ogee curve (used in the spillway of some dams). Sigmoid functions have domain of all real numbers, with return (response) value commonly monotonically increasing but could be decreasing. Sigmoid functions most often show a return value (y axis) in the range 0 to 1. Another commonly used range is from −1 to 1. A wide variety of sigmoid functions including the logistic and ...
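A small sketch of the logistic sigmoid, assuming the standard definition S(x) = 1 / (1 + e^(-x)). It switches between the two algebraically equivalent forms depending on the sign of x so that the exponential never overflows; that branching is a common implementation choice rather than something stated in the text, and the function name is an assumption.

```python
import math


def sigmoid(x):
    """Logistic sigmoid, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))   # form 1 / (1 + e^-x)
    e = math.exp(x)
    return e / (e + 1.0)                    # equivalent form e^x / (e^x + 1)


# Symmetry check: S(x) and 1 - S(-x) should agree.
for x in (-3.0, -0.5, 0.0, 0.5, 3.0):
    print(x, sigmoid(x), 1.0 - sigmoid(-x))
```

The two columns printed for each x should match up to floating-point rounding, and the values stay within the range 0 to 1.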

Statistical Model

A statistical model is a mathematical model that embodies a set of statistical assumptions concerning the generation of sample data (and similar data from a larger population). A statistical model represents, often in considerably idealized form, the data-generating process. A statistical model is usually specified as a mathematical relationship between one or more random variables and other non-random variables. As such, a statistical model is "a formal representation of a theory" (Herman Adèr quoting Kenneth Bollen). All statistical hypothesis tests and all statistical estimators are derived via statistical models. More generally, statistical models are part of the foundation of statistical inference.

Introduction

Informally, a statistical model can be thought of as a statistical assumption (or set of statistical assumptions) with a certain property: that the assumption allows us to calculate the probability of any event. As an example, consider a pair of ordinary six ...

Logistic Regression

In statistics, the logistic model (or logit model) is a statistical model that models the probability of an event taking place by having the log-odds for the event be a linear combination of one or more independent variables. In regression analysis, logistic regression (or logit regression) is estimating the parameters of a logistic model (the coefficients in the linear combination). Formally, in binary logistic regression there is a single binary dependent variable, coded by an indicator variable, where the two values are labeled "0" and "1", while the independent variables can each be a binary variable (two classes, coded by an indicator variable) or a continuous variable (any real value). The corresponding probability of the value labeled "1" can vary between 0 (certainly the value "0") and 1 (certainly the value "1"), hence the labeling; the function that converts log-odds to probability is the logistic function, h ...
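The relationship between the linear combination, the log-odds, and the probability can be sketched as follows. The coefficient values are made up for illustration and are not fitted to any data; the function names are likewise assumptions.

```python
import math


def predict_probability(coefficients, intercept, features):
    """Probability of the outcome labeled "1" under a logistic model:
    the log-odds are a linear combination of the independent variables."""
    log_odds = intercept + sum(c * x for c, x in zip(coefficients, features))
    return 1.0 / (1.0 + math.exp(-log_odds))   # logistic function


def logit(p):
    """Inverse direction: convert a probability back to log-odds."""
    return math.log(p / (1.0 - p))


# Hypothetical, hand-picked parameters (not estimated from data).
coefficients = [1.5, -0.5]
intercept = 0.2
features = [1.0, 2.0]

p = predict_probability(coefficients, intercept, features)
print(p, logit(p))   # the second value recovers the log-odds
```

Estimating the coefficients from data, which is what logistic regression proper does, is not shown here.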

Logistic Function

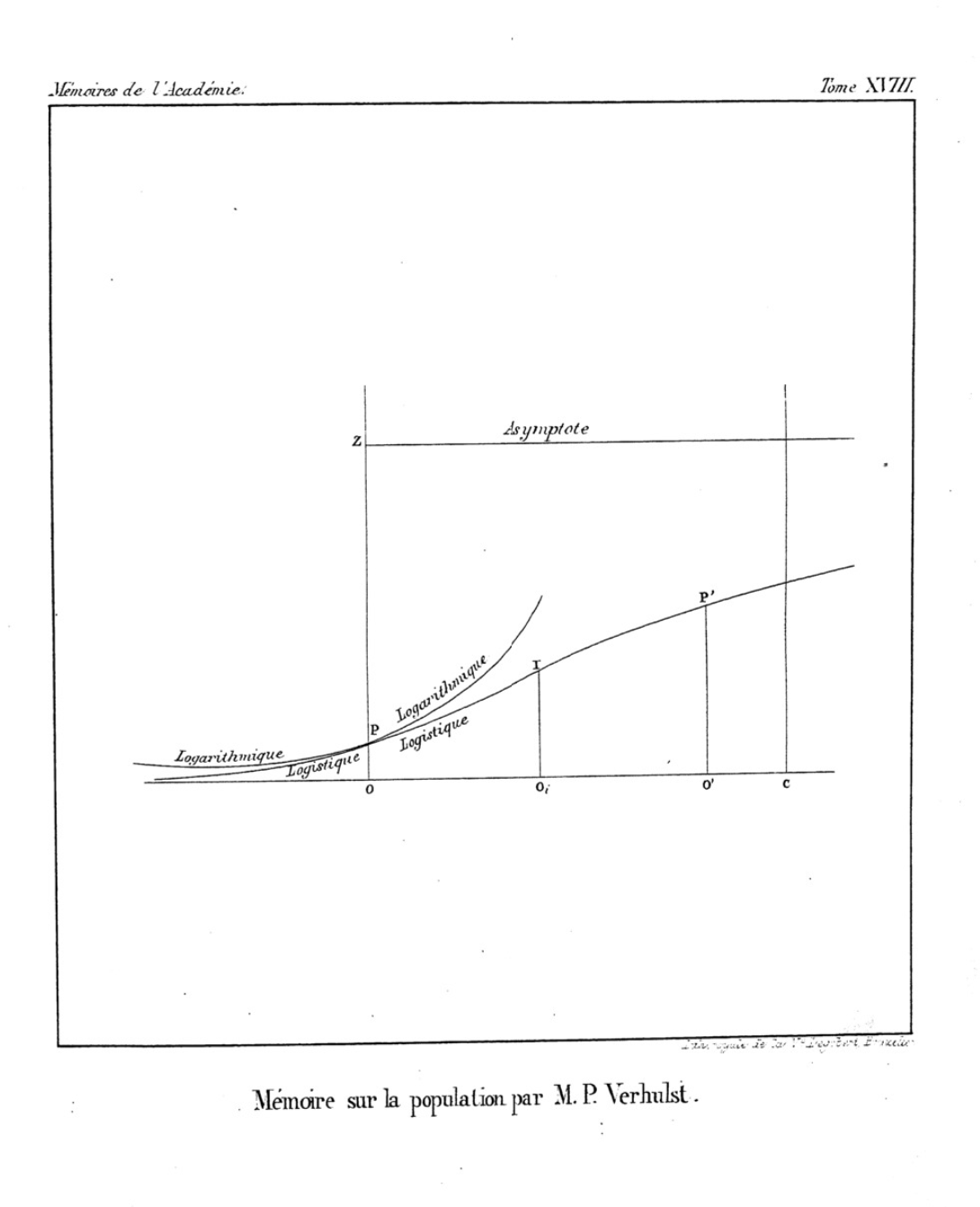

A logistic function or logistic curve is a common S-shaped curve (sigmoid curve) with equation
:$f(x) = \frac{L}{1 + e^{-k(x - x_0)}}$,
where $x_0$ is the $x$ value of the function's midpoint, $L$ is the supremum of the values of the function, and $k$ is the logistic growth rate or steepness of the curve. For values of $x$ in the domain of real numbers from $-\infty$ to $+\infty$, the S-curve shown on the right is obtained, with the graph of $f$ approaching $L$ as $x$ approaches $+\infty$ and approaching zero as $x$ approaches $-\infty$. The logistic function finds applications in a range of fields, including biology (especially ecology), biomathematics, chemistry, demography, economics, geoscience, mathematical psychology, probability, sociology, political science, linguistics, statistics, and artificial neural networks. A generalization of the logistic function is the hyperbolastic function of type I. The standard logistic function, where $L = 1$, $k = 1$, $x_0 = 0$, is sometimes simply called ''the sigmoid''. It is also sometimes called the ''expit'', being the inverse of the logit.

History

The logistic function was introduced in a series of ...
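The role of the three parameters can be explored with a short sketch, assuming the usual parameterization f(x) = L / (1 + e^(-k(x - x0))) with upper limit L, steepness k, and midpoint x0. The function name and the sample parameter values are assumptions for illustration, and the simple formula used here can overflow for extremely negative arguments.

```python
import math


def logistic(x, L=1.0, k=1.0, x0=0.0):
    """General logistic curve; the defaults give the standard sigmoid.
    Assumes moderate inputs: math.exp overflows for very large exponents."""
    return L / (1.0 + math.exp(-k * (x - x0)))


# Hypothetical parameters: upper limit 3, steepness 2, midpoint at x = 1.
for x in (-50.0, 0.0, 1.0, 2.0, 50.0):
    print(x, logistic(x, L=3.0, k=2.0, x0=1.0))
```

At the midpoint the curve takes the value L / 2, and the outermost sample points show it approaching zero on the left and L on the right.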