Features From Accelerated Segment Test

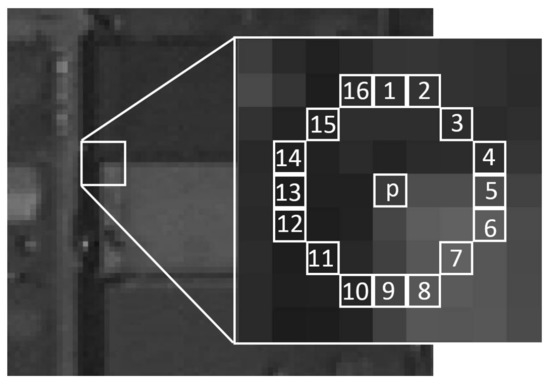

Features from accelerated segment test (FAST) is a corner detection method that can be used to extract feature points, which are later used to track and map objects in many computer vision tasks. The FAST corner detector was originally developed by Edward Rosten and Tom Drummond, and was published in 2006. The main advantage of the FAST corner detector is its computational efficiency. As its name suggests, it is faster than many other well-known feature extraction methods, such as the difference of Gaussians (DoG) used by SIFT, and the SUSAN and Harris detectors. Moreover, when machine learning techniques are applied, superior performance in terms of computation time and resources can be realised. This high-speed performance makes the FAST corner detector well suited to real-time video processing applications. Segment test detector: The FAST corner detector uses a circle of 16 pixels (a Bresenham circle of radius 3) to classify whether a candidate point p is actually ...
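The segment test on the 16-pixel circle can be sketched as follows. This is a minimal illustration, not a reference implementation: it assumes the commonly described criterion that p is a corner when at least n contiguous circle pixels are all brighter than the centre intensity plus a threshold t, or all darker than the centre intensity minus t. The function name `is_fast_corner`, the defaults `t=20` and `n=12`, and the list-of-rows image layout are assumptions made for the example.

```python
# Offsets (dx, dy) of the 16 pixels on a Bresenham circle of radius 3,
# listed in order around the circle.
CIRCLE = [
    (0, -3), (1, -3), (2, -2), (3, -1), (3, 0), (3, 1), (2, 2), (1, 3),
    (0, 3), (-1, 3), (-2, 2), (-3, 1), (-3, 0), (-3, -1), (-2, -2), (-1, -3),
]


def is_fast_corner(img, x, y, t=20, n=12):
    """Return True if (x, y) passes the segment test.

    img is a list of rows of grey levels; (x, y) must lie at least
    3 pixels away from every image border. t and n are assumed defaults.
    """
    centre = img[y][x]
    ring = [img[y + dy][x + dx] for dx, dy in CIRCLE]
    for sign in (1, -1):  # +1: brighter arc, -1: darker arc
        flags = [sign * (value - centre) > t for value in ring]
        run = 0
        # Scanning the ring twice lets a run wrap around the start index.
        for flag in flags + flags:
            run = run + 1 if flag else 0
            if run >= n:
                return True
    return False
```

Scanning the doubled ring keeps the wrap-around logic simple at the cost of a few extra comparisons; optimised detectors typically reject most candidates after testing only a handful of circle pixels.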

Corner Detection

Corner detection is an approach used within computer vision systems to extract certain kinds of features and infer the contents of an image. Corner detection is frequently used in motion detection, image registration, video tracking, image mosaicing, panorama stitching, 3D reconstruction and object recognition. Corner detection overlaps with the topic of interest point detection. Formalization: A corner can be defined as the intersection of two edges. A corner can also be defined as a point for which there are two dominant and different edge directions in a local neighbourhood of the point. An interest point is a point in an image which has a well-defined position and can be robustly detected. This means that an interest point can be a corner, but it can also be, for example, an isolated point of local intensity maximum or minimum, a line ending, or a point on a curve where the curvature is locally maximal. In practice, most so-called corner detection methods detect interes ...
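One way to make "two dominant and different edge directions" concrete is to look at the eigenvalues of the local gradient covariance (the structure tensor): both are large at a corner, only one is large on a straight edge, and both are near zero in a flat region. The sketch below uses the smaller eigenvalue as a corner score, in the style of the Shi-Tomasi criterion. It is an illustrative assumption rather than the method of any particular detector; the name `min_eigenvalue`, the central-difference gradients, and the unweighted square window are choices made for the example.

```python
import math


def min_eigenvalue(img, x, y, half=1):
    """Smaller eigenvalue of the structure tensor summed over a square window.

    img is a list of rows of grey levels. The window spans
    (2 * half + 1) pixels per side and must stay at least one pixel
    inside the image so the central differences are defined.
    """
    sxx = sxy = syy = 0.0
    for j in range(y - half, y + half + 1):
        for i in range(x - half, x + half + 1):
            gx = (img[j][i + 1] - img[j][i - 1]) / 2.0  # horizontal gradient
            gy = (img[j + 1][i] - img[j - 1][i]) / 2.0  # vertical gradient
            sxx += gx * gx
            sxy += gx * gy
            syy += gy * gy
    # Closed-form eigenvalues of the symmetric 2x2 matrix [[sxx, sxy], [sxy, syy]].
    trace = sxx + syy
    det = sxx * syy - sxy * sxy
    disc = math.sqrt(max(trace * trace / 4.0 - det, 0.0))
    return trace / 2.0 - disc
```

On a synthetic image containing one bright quadrant, the score is positive at the quadrant's corner and zero both along its straight edges and in flat areas.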

C (Programming Language)

C (pronounced like the letter c) is a general-purpose computer programming language. It was created in the 1970s by Dennis Ritchie, and remains very widely used and influential. By design, C's features cleanly reflect the capabilities of the targeted CPUs. It has found lasting use in operating systems, device drivers, and protocol stacks, though decreasingly for application software. C is commonly used on computer architectures that range from the largest supercomputers to the smallest microcontrollers and embedded systems. A successor to the programming language B, C was originally developed at Bell Labs by Ritchie between 1972 and 1973 to construct utilities running on Unix. It was applied to re-implementing the kernel of the Unix operating system. During the 1980s, C gradually gained popularity. It has become one of the most widely used programming languages, with C compilers avail ...

Pentium D

Pentium D is a range of desktop 64-bit x86-64 processors manufactured by Intel. Based on the NetBurst microarchitecture, it is the dual-core variant of the Pentium 4. Each CPU comprised two dies, each containing a single core, residing next to each other on a multi-chip module package. The brand's first processor, codenamed *Smithfield* and manufactured on the 90 nm process, was released on May 25, 2005, followed by the 65 nm *Presler* nine months later. By 2004, the NetBurst processors reached a clock speed barrier at 3.8 GHz due to a thermal (and power) limit exemplified by the *Presler*'s 130-watt thermal design power (a higher TDP requires additional cooling that can be prohibitively noisy or expensive). The future belonged to more energy-efficient and slower-clocked dual-core CPUs on a single die instead of two. However, the Pentium D did not offer significant upgrades in design, still resulting in relatively high power consumption. The final shipment date of ...

Shi-Tomasi

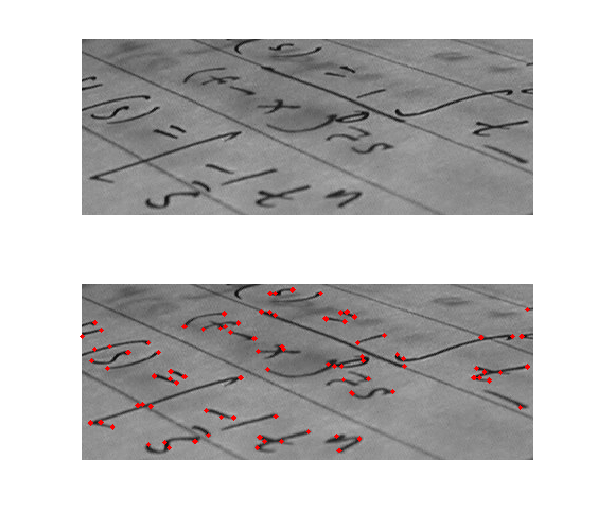

Corner detection is an approach used within computer vision systems to extract certain kinds of features and infer the contents of an image. Corner detection is frequently used in motion detection, image registration, video tracking, image mosaicing, panorama stitching, 3D reconstruction and object recognition. Corner detection overlaps with the topic of interest point detection. Formalization: A corner can be defined as the intersection of two edges. A corner can also be defined as a point for which there are two dominant and different edge directions in a local neighbourhood of the point. An interest point is a point in an image which has a well-defined position and can be robustly detected. This means that an interest point can be a corner, but it can also be, for example, an isolated point of local intensity maximum or minimum, a line ending, or a point on a curve where the curvature is locally maximal. In practice, mo ...

Harris–Laplace Detector

In the fields of computer vision and image analysis, the Harris affine region detector belongs to the category of feature detection. Feature detection is a preprocessing step of several algorithms that rely on identifying characteristic points or interest points so as to make correspondences between images, recognize textures, categorize objects or build panoramas. Overview: The Harris affine detector can identify similar regions between images that are related through affine transformations and have different illuminations. These *affine-invariant* detectors should be capable of identifying similar regions in images taken from different viewpoints that are related by a simple geometric transformation: scaling, rotation and shearing. These detected regions have been called both *invariant* and *covariant*. On one hand, the regions are detected *invariant* of the image transformation, but the regions *covariantly* change with image transformation. Do not dwell too much on ...

Pentium 4

Pentium 4 is a series of single-core CPUs for desktops, laptops and entry-level servers manufactured by Intel. The processors were shipped from November 20, 2000 until August 8, 2008. The production of NetBurst processors was active from 2000 until May 21, 2010. All Pentium 4 CPUs are based on the NetBurst microarchitecture. The Pentium 4 *Willamette* (180 nm) introduced SSE2, while the *Prescott* (90 nm) introduced SSE3. Later versions introduced Hyper-Threading Technology (HTT). The first Pentium 4-branded processor to implement 64-bit was the *Prescott* (90 nm) (February 2004), but this feature was not enabled. Intel subsequently began selling 64-bit Pentium 4s using the "E0" revision of the Prescotts, which were sold on the OEM market as the Pentium 4, model F. The E0 revision also adds eXecute Disable (XD) (Intel's name for the NX bit) to Intel 64. Intel's official launch of Intel 64 (under the name EM64T at that time) in mainstream de ...

Simulated Annealing

Simulated annealing (SA) is a probabilistic technique for approximating the global optimum of a given function. Specifically, it is a metaheuristic to approximate global optimization in a large search space for an optimization problem. It is often used when the search space is discrete (for example the traveling salesman problem, the Boolean satisfiability problem, protein structure prediction, and job-shop scheduling). For problems where finding an approximate global optimum is more important than finding a precise local optimum in a fixed amount of time, simulated annealing may be preferable to exact algorithms such as gradient descent or branch and bound. The name of the algorithm comes from annealing in metallurgy, a technique involving heating and controlled cooling of a material to alter its physical properties. Both are attributes of the material that depend on their thermodynamic free energy. Heating and cooling the material affects both the temperature and ...
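The idea of probabilistic search under a falling temperature can be sketched as below. This is a minimal illustration under stated assumptions, not a canonical implementation: it uses the common Metropolis acceptance rule (always accept an improvement, accept a worse candidate with probability exp(-delta / T)) and a geometric cooling schedule. The function name `simulated_annealing`, the parameters `t0`, `cooling`, `steps` and `seed`, and the caller-supplied `cost` and `neighbor` functions are all hypothetical names chosen for the example.

```python
import math
import random


def simulated_annealing(cost, neighbor, start, t0=10.0, cooling=0.95,
                        steps=2000, seed=0):
    """Return the lowest-cost state visited during the search.

    cost(state) gives the value to minimise; neighbor(state, rng)
    proposes a nearby state using the supplied random generator.
    """
    rng = random.Random(seed)  # seeded so that runs are repeatable
    current = best = start
    temperature = t0
    for _ in range(steps):
        candidate = neighbor(current, rng)
        delta = cost(candidate) - cost(current)
        # Accept improvements always, and worse moves with a probability
        # that shrinks as the temperature falls.
        if delta <= 0 or rng.random() < math.exp(-delta / temperature):
            current = candidate
            if cost(current) < cost(best):
                best = current
        temperature = max(temperature * cooling, 1e-9)  # geometric cooling
    return best
```

For example, `simulated_annealing(lambda x: (x - 7) ** 2, lambda x, rng: x + rng.choice((-1, 1)), 50)` searches the integers for a minimiser of a simple quadratic. Because the best state seen so far is tracked separately, the returned state is never worse than the starting one.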

Metaheuristic

In computer science and mathematical optimization, a metaheuristic is a higher-level procedure or heuristic designed to find, generate, or select a heuristic (partial search algorithm) that may provide a sufficiently good solution to an optimization problem, especially with incomplete or imperfect information or limited computation capacity. Metaheuristics sample a subset of a solution set that is otherwise too large to be completely enumerated or otherwise explored. Metaheuristics may make relatively few assumptions about the optimization problem being solved and so may be usable for a variety of problems. Compared to optimization algorithms and iterative methods, metaheuristics do not guarantee that a globally optimal solution can be found on some class of problems. Many metaheuristics implement some form of stochastic optimization, so that the solution found is dependent on the set of random variables generated. In combinatorial optimization, by searching over a large set of fea ...

Binary Search Algorithm

In computer science, binary search, also known as half-interval search, logarithmic search, or binary chop, is a search algorithm that finds the position of a target value within a sorted array. Binary search compares the target value to the middle element of the array. If they are not equal, the half in which the target cannot lie is eliminated and the search continues on the remaining half, again taking the middle element to compare to the target value, and repeating this until the target value is found. If the search ends with the remaining half being empty, the target is not in the array. Binary search runs in logarithmic time in the worst case, making $O(\log n)$ comparisons, where $n$ is the number of elements in the array. Binary search is faster than linear search except for small arrays. However, the array must be sorted first to be able to apply binary search. There are specialized data structures designed for fast searching, such as hash tables, that can be searched m ...
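The halving procedure described above can be written in a few lines. This is a minimal sketch: the function name `binary_search` and the convention of returning -1 when the target is absent are choices made for the example.

```python
def binary_search(arr, target):
    """Return an index of target in the sorted list arr, or -1 if absent."""
    low, high = 0, len(arr) - 1
    while low <= high:  # the remaining range arr[low..high] is non-empty
        mid = (low + high) // 2
        if arr[mid] == target:
            return mid
        if arr[mid] < target:
            low = mid + 1   # target can only lie in the upper half
        else:
            high = mid - 1  # target can only lie in the lower half
    return -1  # the remaining range is empty, so target is not present
```

Each iteration discards at least half of the remaining range, which is where the logarithmic number of comparisons comes from.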

Decision Tree Model

In computational complexity, the decision tree model is the model of computation in which an algorithm is considered to be basically a decision tree, i.e., a sequence of *queries* or *tests* that are done adaptively, so the outcome of the previous tests can influence which test is performed next. Typically, these tests have a small number of outcomes (such as a yes–no question) and can be performed quickly (say, with unit computational cost), so the worst-case time complexity of an algorithm in the decision tree model corresponds to the depth of the corresponding decision tree. This notion of computational complexity of a problem or an algorithm in the decision tree model is called its decision tree complexity or query complexity. Decision tree models are instrumental in establishing lower bounds for complexity theory for certain classes of computational problems and algorithms. Several variants of decision tree models have been introduced, depending on the computational ...

Profile-guided Optimization

Profile-guided optimization (PGO, sometimes pronounced as *pogo*), also known as profile-directed feedback (PDF) and feedback-directed optimization (FDO), is a compiler optimization technique in computer programming that uses profiling to improve program runtime performance. Method: Optimization techniques based on static program analysis of the source code consider code performance improvements without actually executing the program. No dynamic program analysis is performed. The analysis may even consider code within loops, including the number of times the loop will execute, for example in loop unrolling. In the absence of all the run-time information, static program analysis cannot take into account how frequently that code section is actually executed. The first high-level compiler, introduced as the Fortran Automatic Coding System in 1957, broke the code into blocks and devised a table of the frequency each block is executed via a simulated execution of the code in a ...